Video and Vision Processing Suite Intel® FPGA IP User Guide


23.3. Warp IP Block Description

The video output process generates RGB or YUV format Intel FPGA streaming video by reading the warped image data from external memory.

The IP generates arbitrary warps based on a transform mesh. If you turn on Use easy warp, the IP instead offers a fixed set of transforms (rotations and mirroring) that do not need a transform mesh.

The configured number of warp engines process the buffered input video to apply the required warp. Three coefficient tables control the warp engines and define the warp that the IP applies. The Warp IP software API generates these three coefficient tables, which are stored in external memory.

The IP defines the required warp with a backward mapping from the output pixel positions to the input pixel positions. It represents the warp as a subsampled mesh that defines the mapping at 8x8 intervals. For output pixels that lie between these 8x8 mesh positions, the warp engine derives the mapping by bilinear interpolation.

The IP writes the resulting warped image back to external memory, into one of two output video buffers. The IP writes to these two output buffers alternately.

The figure shows a high-level block diagram for the Warp IP with its connection to external memory.

Coefficient Tables

Each engine within the Warp IP has read access to its own set of three coefficient tables that define and control the image transform that the IP applies. The three different tables are:

- Mesh coefficients that define the output-to-input pixel transform.

- Fetch coefficients that control the loading of the input image into the cache memory within each engine.

- Filter coefficients that control the mapping from the cache memory as the IP generates the interpolated or filtered output pixels.

The format of the mesh coefficients differs from the format of the mesh data that you provide to the software API. The software API uses 32-bit signed integers for the mesh values; the Warp IP uses a 16-bit offset binary format.

The IP needs just the mesh data to define the warp. The software API uses this mesh data to generate the required coefficient tables.
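As a reminder of what offset binary means in general, the following minimal sketch encodes a signed integer as a 16-bit offset binary code by adding a bias of 2^15. It only illustrates the number format. This section does not state how the software API scales or references the 32-bit mesh values before encoding them, so the function names and the direct value-to-code mapping here are assumptions for illustration, not the IP's actual conversion.

```python
BIAS_16 = 0x8000  # 2**15, the bias for 16-bit offset binary


def to_offset_binary16(value):
    """Encode a signed integer in [-32768, 32767] as a 16-bit offset binary code."""
    if not -BIAS_16 <= value <= BIAS_16 - 1:
        raise ValueError("value does not fit in 16 bits")
    return value + BIAS_16


def from_offset_binary16(code):
    """Decode a 16-bit offset binary code back to a signed integer."""
    if not 0 <= code <= 0xFFFF:
        raise ValueError("code is not a 16-bit value")
    return code - BIAS_16
```

In this format the most negative value encodes as 0x0000, zero encodes as 0x8000, and the most positive value encodes as 0xFFFF, so the codes sort in the same order as the values they represent.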

Warp Mesh Interpolation

The IP defines the warp transform using an 8x8 subsampled mesh. This mesh defines the mapping from the output pixel positions to the corresponding input pixel positions. The 8x8 subsampled mesh requires that only the mappings for the following output pixel positions are defined:

(0,0), (8,0), (16,0) … (W,0)

(0,8), (8,8), (16,8) … (W,8)

…

(0,H), (8,H), (16,H) … (W,H)

where W = 8*ceil(image width/8) and H = 8*ceil(image height/8).

To generate the output pixel positions that lie in between these 8x8 positions, the Warp IP uses bilinear interpolation.
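The mesh layout and the interpolation between mesh nodes can be sketched as follows. This is an illustrative model only, not the IP's implementation: the function names, the floating-point arithmetic, and the `mesh[j][i]` layout (a pair of input coordinates for output position (8*i, 8*j)) are assumptions made for the example.

```python
import math

MESH_STEP = 8  # mesh spacing in output pixels, as described above


def mesh_size(width, height):
    """Return the number of mesh nodes (columns, rows) for an output image."""
    cols = math.ceil(width / MESH_STEP) + 1   # nodes at x = 0, 8, ..., W
    rows = math.ceil(height / MESH_STEP) + 1  # nodes at y = 0, 8, ..., H
    return cols, rows


def interpolate_mapping(mesh, x, y):
    """Bilinearly interpolate the input position for output pixel (x, y).

    mesh[j][i] holds the (input_x, input_y) pair for output position (8*i, 8*j).
    """
    i, fx = divmod(x, MESH_STEP)
    j, fy = divmod(y, MESH_STEP)
    u, v = fx / MESH_STEP, fy / MESH_STEP  # fractional position inside the 8x8 cell
    p00, p10 = mesh[j][i], mesh[j][i + 1]
    p01, p11 = mesh[j + 1][i], mesh[j + 1][i + 1]
    return tuple(
        (1 - v) * ((1 - u) * a + u * b) + v * ((1 - u) * c + u * d)
        for a, b, c, d in zip(p00, p10, p01, p11)
    )
```

For example, a 1920x1080 output needs 241 x 136 mesh nodes under this model, because the node at W (or H) closes the last cell in each direction.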

Output Pixel Interpolation and Filtering

The IP generates output pixels with the pixel data from the associated input pixel positions as defined by the warp that the IP applies. The IP generates output pixel values with a bicubic interpolation calculation using a 4x4 kernel of the associated input pixel values.

The weightings for the interpolation over the 4x4 kernel are a combination of a bicubic function and a variable low pass filtering function. The software API automatically applies low pass filtering, which it bases on the amount of downscaling that results for that particular region of the warp.
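To make the 4x4 kernel concrete, the sketch below computes one output sample with a plain bicubic interpolation. It assumes the common cubic convolution kernel with a = -0.5 and clamps coordinates at the image edges; neither choice is confirmed by this section. It also omits the variable low pass filtering that the software API folds into the weights, so it models only the bicubic part. All names are hypothetical.

```python
import math


def cubic_weight(d, a=-0.5):
    """Cubic convolution weight for a tap at distance d (a = -0.5 is an assumption)."""
    d = abs(d)
    if d <= 1:
        return (a + 2) * d**3 - (a + 3) * d**2 + 1
    if d < 2:
        return a * d**3 - 5 * a * d**2 + 8 * a * d - 4 * a
    return 0.0


def taps(t):
    """Four 1-D weights for a fractional offset t in [0, 1)."""
    return [cubic_weight(t + 1), cubic_weight(t), cubic_weight(1 - t), cubic_weight(2 - t)]


def bicubic_sample(image, x, y):
    """Sample image (a list of rows) at fractional input position (x, y)."""
    x0, y0 = math.floor(x), math.floor(y)
    wx, wy = taps(x - x0), taps(y - y0)
    height, width = len(image), len(image[0])
    total = 0.0
    for j in range(4):
        yy = min(max(y0 - 1 + j, 0), height - 1)  # edge clamp (assumption)
        for i in range(4):
            xx = min(max(x0 - 1 + i, 0), width - 1)
            total += wy[j] * wx[i] * image[yy][xx]
    return total
```

The four weights in each direction sum to 1, so a flat input region stays flat. Adding low pass filtering would widen or soften these weights in regions that the warp downscales.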

Blank Skip Regions

When you configure the Warp IP to substantially downscale regions of an image, large areas of the output image can map to points outside the input image. For these unmapped regions, the IP outputs black.

Because these regions of the output image do not require the Warp IP to process any input image data, the IP skips the associated processing for efficiency. The software API sets up this skipping automatically: from the desired warp mapping, it determines which regions can be programmed as skip regions.
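One conservative way to find candidate skip regions from a mesh is sketched below. It is not the software API's algorithm, which this section does not describe. The sketch assumes the mesh is a grid of (input_x, input_y) pairs at 8x8 output spacing, and it marks a cell as blank only if all four corners fall beyond the same edge of the input image. Because bilinear interpolation keeps every interior mapping between the corner values, such a cell cannot touch the input. The `margin` parameter, an allowance for the interpolation kernel footprint, is also an assumption.

```python
def block_is_blank(corners, in_width, in_height, margin=2):
    """True if all four mapped corners lie beyond the same input image edge."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return (
        max(xs) < -margin
        or min(xs) > in_width - 1 + margin
        or max(ys) < -margin
        or min(ys) > in_height - 1 + margin
    )


def blank_block_map(mesh, in_width, in_height):
    """Return a 2-D list of booleans, one per 8x8 output cell of the mesh."""
    rows, cols = len(mesh) - 1, len(mesh[0]) - 1
    return [
        [
            block_is_blank(
                (mesh[j][i], mesh[j][i + 1], mesh[j + 1][i], mesh[j + 1][i + 1]),
                in_width,
                in_height,
            )
            for i in range(cols)
        ]
        for j in range(rows)
    ]
```

A cell whose corners straddle the input image, or lie outside it on different sides, is left unmarked, which keeps the test safe at the cost of missing some skippable cells.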

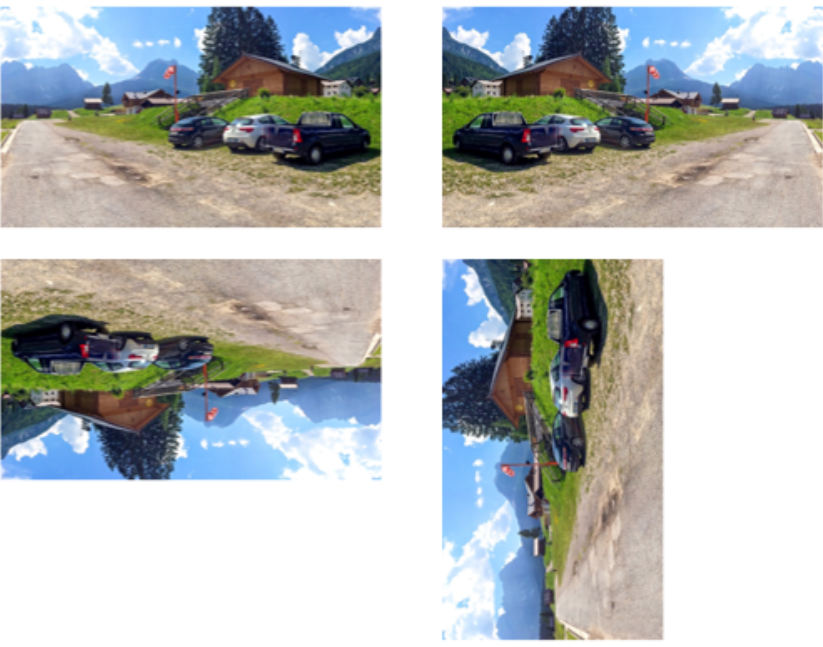

Easy Warp

When you turn on Use easy warp:

- The IP supports rotations of 0°, 90°, 180° and 270° and you can apply a horizontal mirror operation before the selected rotation.

- The IP does not configure any engines.

- The video output block applies the required rotation or mirror transform as it reads data from external memory.

- The IP does not require any processing engines, giving resource savings and memory bandwidth savings.
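The effect of the easy warp options on an image can be modelled as below. This sketch operates on a small list-of-rows image rather than on streaming video, and it assumes clockwise rotation, which this section does not specify. The function name and arguments are hypothetical. What it does reflect from the list above is the order of operations: the horizontal mirror is applied before the rotation.

```python
def easy_warp(image, rotation=0, mirror=False):
    """Apply an optional horizontal mirror, then a rotation of 0, 90, 180 or 270 degrees."""
    if rotation not in (0, 90, 180, 270):
        raise ValueError("rotation must be 0, 90, 180 or 270")
    rows = [list(r) for r in image]
    if mirror:
        rows = [r[::-1] for r in rows]  # horizontal mirror comes first
    for _ in range(rotation // 90):
        # One 90-degree clockwise turn (direction is an assumption):
        # reverse the row order, then transpose.
        rows = [list(col) for col in zip(*rows[::-1])]
    return rows
```

A 90° or 270° rotation swaps the width and height of the image, which is why those rotations place extra restrictions on the input resolution.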

For easy warp rotations of 0° or 180°, the maximum input width must not exceed the maximum output width. For easy warp rotations of 90° or 270°, the vertical and horizontal dimensions are transposed, which restricts the input resolution as the table shows.

| MAX_OUTPUT_WIDTH | Input Height Restriction |
|---|---|
| 2048 | 1088 |
| 3840 | 2176 |
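A configuration check based on these restrictions might look like the sketch below. It assumes that the table value is the largest permitted input height for the given MAX_OUTPUT_WIDTH, and that the 0° and 180° rule can be checked against the input width you intend to use. The function and dictionary names are hypothetical, and only the two table rows above are covered.

```python
# Input height restriction per MAX_OUTPUT_WIDTH, copied from the table above.
INPUT_HEIGHT_RESTRICTION = {2048: 1088, 3840: 2176}


def easy_warp_input_ok(in_width, in_height, rotation, max_output_width):
    """Check an input resolution against the easy warp restrictions (sketch)."""
    if rotation in (0, 180):
        # Input width must not exceed the maximum output width.
        return in_width <= max_output_width
    if rotation in (90, 270):
        limit = INPUT_HEIGHT_RESTRICTION.get(max_output_width)
        if limit is None:
            raise ValueError("no restriction listed for this MAX_OUTPUT_WIDTH")
        # Assumption: the table value is a maximum input height.
        return in_height <= limit
    raise ValueError("rotation must be 0, 90, 180 or 270")
```

For instance, under these assumptions a 1920x1080 input passes for a 90° rotation with MAX_OUTPUT_WIDTH set to 2048, while a 1920x1200 input does not.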

The figure shows a high-level block diagram for the Warp IP with Use easy warp turned on, including its connection to external memory.