View Performance Inefficiencies of Data-parallel Constructs

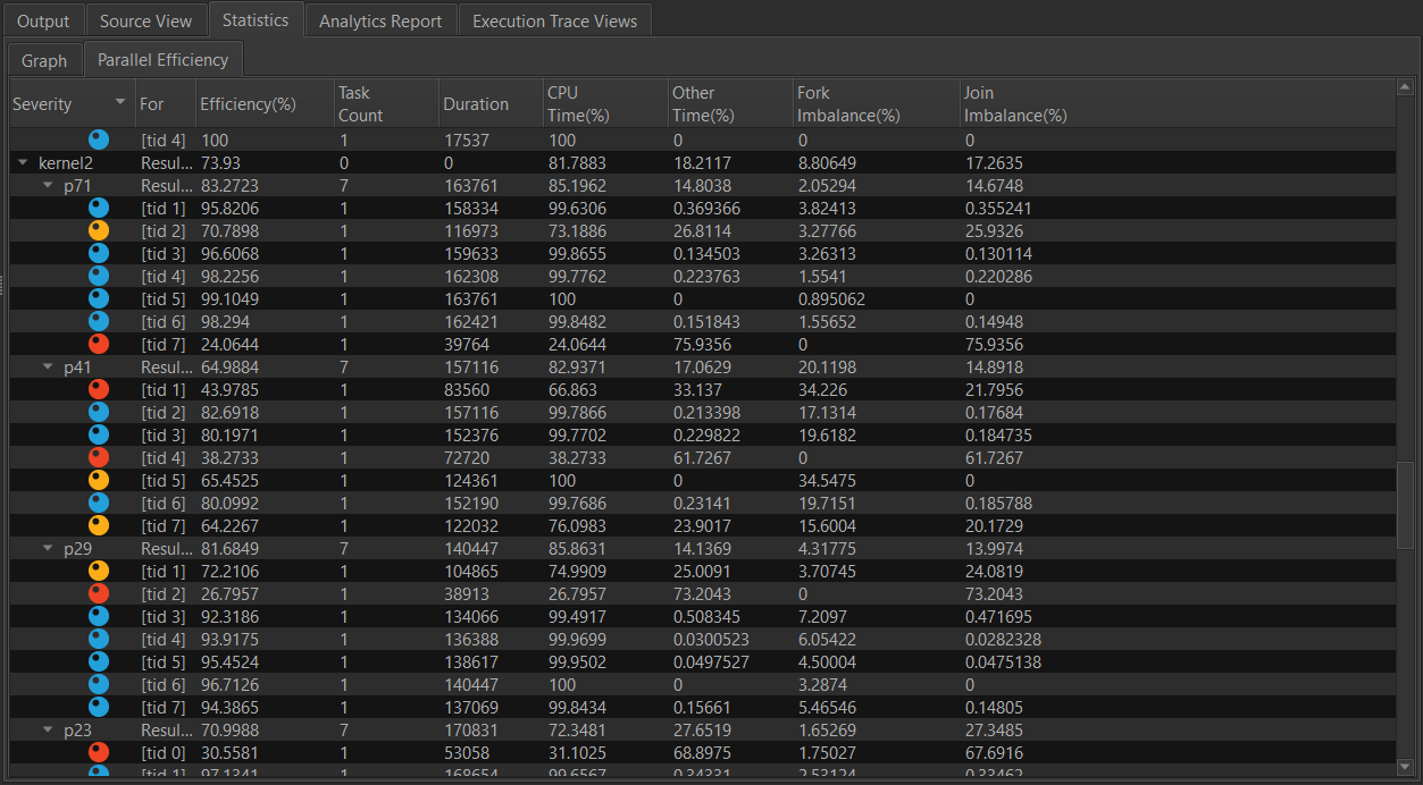

The Statistics tab also contains efficiency information for each parallel construct that the algorithm employs. This data appears under the Parallel Efficiency tab in the Statistics group.

The Parallel Efficiency tab shows the data-parallel construct efficiency for each instance of a kernel. Its columns provide information that helps you understand the execution and infer how to improve performance.

- The parallel algorithms are nested under the kernel name when the kernel name can be demangled correctly.

- The Efficiency column indicates the efficiency of the algorithm when it appears in the row with the algorithm name. For participating worker threads, the Efficiency column indicates the efficiency of the thread while it participates in the execution. This data is typically derived from the total time spent on the parallel construct and the time the thread spent participating in other parallel constructs.

- Task Count indicates the number of tasks executed by the participating thread.

- Duration indicates the time the participating thread spends executing tasks from the parallel construct.

- CPU time is the Duration column data expressed as a percentage of the wall clock time of the parallel construct.

- Other Time will be 0 if the thread fully participates in the execution of tasks from the parallel construct. However, in runtimes such as Intel® oneAPI Threading Building Blocks, the participating threads may steal tasks from other parallel constructs submitted to the device to provide better dynamic load balancing and throughput. In such cases, the Other Time column will indicate the percentage of the total wall clock time the participating thread spends executing tasks from other parallel constructs.

- Fork Imbalance indicates the penalty for waking up threads to participate in the execution of tasks from the parallel construct. For more information, see Startup Penalty.

- Join Imbalance indicates the degree of imbalanced execution of tasks from the parallel constructs by the participating worker threads. For more information, see Data Parallel Efficiency.
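
The relationship between the Duration, CPU time, and Other Time columns described above can be illustrated with a minimal Python sketch. The `ThreadSample` type, the function names, and the sample timings are all hypothetical and exist only for illustration; the exact formulas the tool uses are not given here, so treat this as an assumption-based reading of the column descriptions rather than the tool's implementation.

```python
from dataclasses import dataclass


@dataclass
class ThreadSample:
    """Hypothetical per-thread timings for one parallel construct, in seconds."""
    name: str
    duration: float    # time spent executing tasks from this construct
    other_time: float  # time spent executing tasks from other constructs
    task_count: int    # number of tasks the thread executed


def cpu_time_pct(sample: ThreadSample, wall_clock: float) -> float:
    """Duration expressed as a percentage of the construct's wall clock time."""
    return 100.0 * sample.duration / wall_clock


def other_time_pct(sample: ThreadSample, wall_clock: float) -> float:
    """Share of the wall clock time spent on tasks from other constructs."""
    return 100.0 * sample.other_time / wall_clock


def summarize(samples, wall_clock):
    """Build one row per participating thread, mirroring the columns above."""
    return [
        {
            "thread": s.name,
            "task_count": s.task_count,
            "cpu_time_pct": cpu_time_pct(s, wall_clock),
            "other_time_pct": other_time_pct(s, wall_clock),
        }
        for s in samples
    ]


# Made-up numbers: the construct takes 2.0 s of wall clock time.
WALL_CLOCK = 2.0
SAMPLES = [
    ThreadSample("worker-0", duration=2.0, other_time=0.0, task_count=40),
    ThreadSample("worker-1", duration=1.5, other_time=0.5, task_count=28),
]
ROWS = summarize(SAMPLES, WALL_CLOCK)

for row in ROWS:
    print(row)
```

In this made-up data, `worker-0` participates fully, so its Other Time is 0, while `worker-1` spends a quarter of the wall clock time on tasks stolen from other parallel constructs.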
