Developer Reference for Intel® oneAPI Math Kernel Library for C

A newer version of this document is available. Customers should click here to go to the newest version.

Graphs in Linear Algebra

Graphs are a fundamental abstraction in mathematics and computer science. Often, they are used to represent relations among objects, with objects represented as graph vertices and relations between them represented as graph edges. Graphs are useful not only for storing data, but also for performing operations on the data (via various graph traversals, for example).

Because the nature of graphs is tightly coupled with the concept of relations or connections between vertices, graph data are often stored as matrices. Most graphs in real-life applications do not have all possible connections between vertices and can be naturally represented as sparse matrices. Graph operations can then be represented in the language of linear algebra using a set of algebraic operations on algebraic structures called semirings. Based on this fact, the Graph BLAS Forum has begun an open effort to define graph operations in the language of linear algebra which is called GraphBLAS. The idea behind the GraphBLAS initiative is that a wide range of graph operations (conventionally written in think-like-a-vertex language) can be rewritten using a small number of matrix operations.

Using the following definitions, the example below illustrates how you can write a graph algorithm in the language of linear algebra.

An incidence matrix of a graph with M vertices and N edges is a rectangular matrix of size MxN which has element (i,j) equal to 1 if vertex i is incident to the edge j and 0 otherwise.

An adjacency matrix for a finite simple graph G is a square (0,1) -matrix of size NxN, where N is the number of vertices in the graph, which has entry (i,j) for distinct i and j equal to 1 if there exists an edge between vertices i and j and 0 otherwise. Diagonal values are 0. If the graph is undirected, its adjacency matrix will be symmetric.

A semiring as an algebraic structure is a set with two binary operations ⊕ and ⊗ defined. The first is called the additive and the second the multiplicative operation. With respect to ⊕, a semiring is a commutative monoid with an identity element denoted by 0. With respect to ⊗, it is a monoid with an identity element denoted by 1. Also, multiplication and addition must obey the distributive property and multiplication by 0 must turn every element into 0. For example, standard arithmetic with real numbers can be considered as operating within the semiring <R, ⊕, ⊗> with standard definitions of addition and multiplication.

Consider the breadth-first search (BFS) (see Graph Fundamentals for the definition) and how it can be represented through simple linear algebra operations.

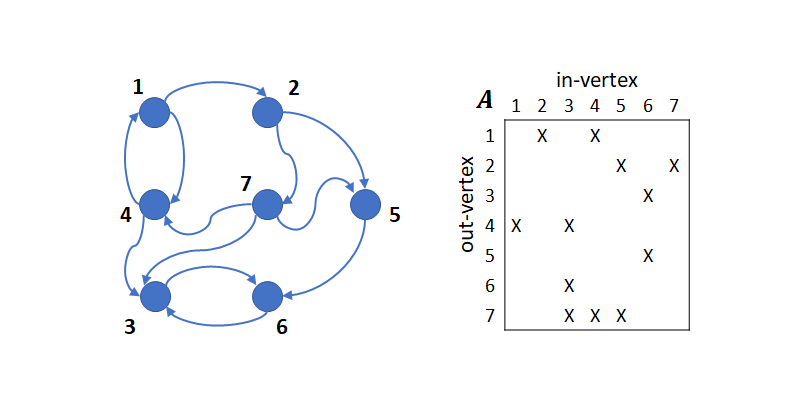

In the figure above, an example directed graph with seven vertices and 12 edges is presented with its adjacency matrix A. For simplicity, only the structure of the matrix is used and for this, denote non-zero entries by X. Row and column indices correspond to the tails and heads of the edges, respectively.

Traversing the graph in BFS consists of multiple steps where each step starts with a current set of vertices and finishes with the starting set for the next step by following the outgoing edges from the current set.

Vector x is defined with length N (where N is the number of vertices in the graph) in which entry j is equal to 1 if vertex j is included into the current set, and 0 otherwise.

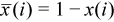

Then, traversing the graph in BFS is equivalent to computing the masked matrix-vector product  where y will be the starting set for the next step and

where y will be the starting set for the next step and  is the structural complement of x (meaning that

is the structural complement of x (meaning that  ). Component-wise, this can be written as:

). Component-wise, this can be written as:

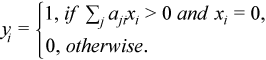

The following figure shows a single step starting from vertex 4.

Product and Performance Information |

|---|

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex. Notice revision #20201201 |