Developer Guide

FPGA Optimization Guide for Intel® oneAPI Toolkits


Pipelining

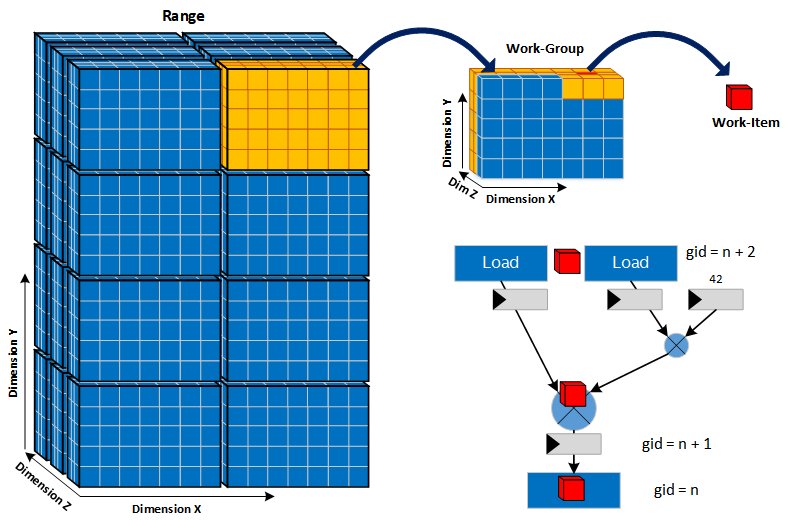

Similar to implementing a CPU with multiple pipeline stages, the compiler generates a deeply pipelined hardware datapath. For more information, refer to Concepts of FPGA Hardware Design and How Source Code Becomes a Custom Hardware Datapath. Pipelining allows many data items to be processed concurrently (in the same clock cycle) and uses the hardware in the datapath efficiently by keeping it occupied.

Example of Vectorization of the Datapath vs. Pipelining the Datapath

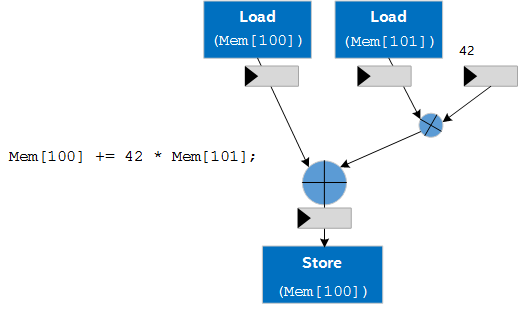

Consider the following example of code mapping to hardware:

Multiple invocations of this code when running on a CPU would not be pipelined. The output of an invocation is completed before inputs are passed to the next invocation of the code. On an FPGA device, this kind of unpipelined invocation results in poor throughput and low occupancy of the datapath because many of the operations are sitting idle while other parts of the datapath are operating on the data.

The following figure shows what throughput and occupancy of invocations look like in this scenario:

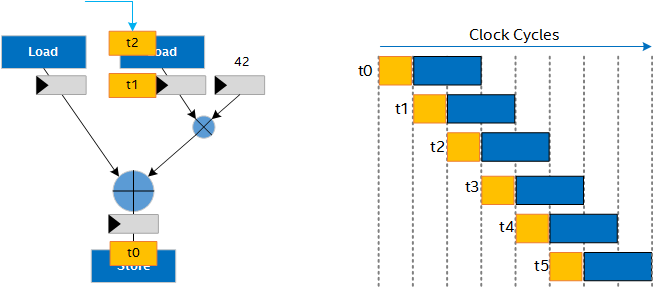

The Intel® oneAPI DPC++/C++ Compiler pipelines your design as much as possible. New inputs can be sent into the datapath each cycle, giving you a fully occupied datapath for higher throughput, as shown in the following figure:

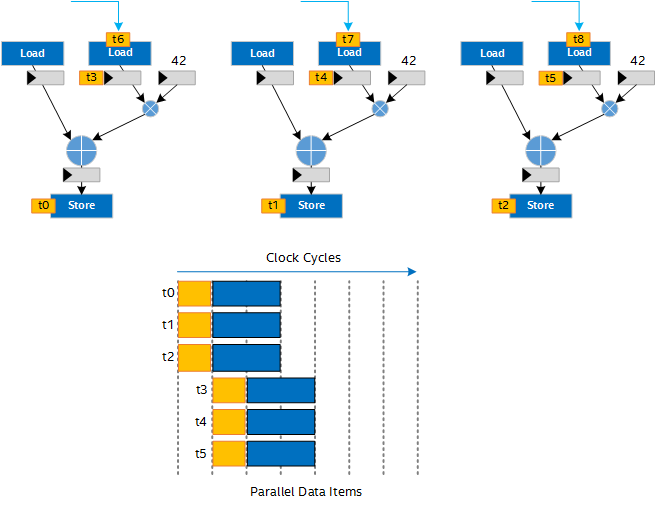
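The difference between the two figures can be approximated with a simple cycle count. The following is a minimal sketch in plain C++, assuming an idealized datapath with a fixed depth and no stalls. The function names `unpipelined_cycles` and `pipelined_cycles` are hypothetical and are not part of any Intel® API.

```cpp
#include <cassert>
#include <cstdint>

// Cycles needed when each invocation must leave the datapath before the
// next one may enter (the unpipelined case).
std::uint64_t unpipelined_cycles(std::uint64_t items, std::uint64_t depth) {
    assert(depth >= 1);  // a datapath has at least one stage
    return items * depth;
}

// Cycles needed when a new input enters the datapath every clock cycle:
// the first result appears after `depth` cycles, then one result per cycle.
std::uint64_t pipelined_cycles(std::uint64_t items, std::uint64_t depth) {
    assert(depth >= 1);
    return items == 0 ? 0 : depth + (items - 1);
}
```

Under this model, 100 inputs through a five-stage datapath take 500 cycles unpipelined and 104 cycles pipelined.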

You can gain even further throughput by vectorizing the pipelined hardware. Vectorizing the hardware improves throughput but requires more FPGA area for the additional copies of the pipelined hardware:

Understanding where the data you need to pipeline is coming from is key to achieving high-performance designs on the FPGA. You can use the following sources of data to take advantage of pipelining:

- Work items

- Loop iterations

Pipelining Loops Within a Single Work Item

Within a single work item kernel, loops are the primary source of pipeline parallelism. When the Intel® oneAPI DPC++/C++ Compiler pipelines a loop, it attempts to schedule the loop’s execution such that the next iteration of the loop enters the pipeline before the previous iteration has completed. This pipelining of loop iterations can lead to higher performance.

The number of clock cycles between the start of successive iterations of the loop is called the Initiation Interval (II). For the highest performance, a new iteration of the loop starts every clock cycle, which corresponds to an II of 1. Data dependencies that are carried from one iteration of the loop to another can affect the ability to achieve an II of 1. These dependencies are called loop-carried dependencies. The II of the loop must be high enough to accommodate all loop-carried dependencies.
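The relationship between loop-carried dependencies and II can be sketched with the textbook recurrence bound used in pipelined loop scheduling: a dependency that takes `latency_cycles` to produce a value consumed `distance` iterations later requires an II of at least `latency_cycles / distance`, rounded up. The code below is an illustrative model only. The `LoopCarriedDep` type and both function names are hypothetical, and the compiler's actual scheduler considers more than this bound.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// One loop-carried dependency: the value is ready `latency_cycles` after
// the producing iteration starts and is consumed `distance` iterations later.
struct LoopCarriedDep {
    std::uint64_t latency_cycles;
    std::uint64_t distance;
};

// Smallest II that accommodates every loop-carried dependency.
std::uint64_t min_ii(const std::vector<LoopCarriedDep>& deps) {
    std::uint64_t ii = 1;  // II of 1 is the best case
    for (const LoopCarriedDep& d : deps) {
        assert(d.distance >= 1);
        std::uint64_t needed = (d.latency_cycles + d.distance - 1) / d.distance;
        ii = std::max(ii, needed);
    }
    return ii;
}

// Idealized cycle count for one loop invocation: the first iteration takes
// `latency` cycles, and each later iteration starts `ii` cycles after the
// previous one.
std::uint64_t loop_cycles(std::uint64_t trip_count, std::uint64_t ii,
                          std::uint64_t latency) {
    assert(ii >= 1 && latency >= 1);
    return trip_count == 0 ? 0 : latency + ii * (trip_count - 1);
}
```

In this model, a loop of 1000 iterations with a 10-cycle pipeline latency takes 1009 cycles at an II of 1 and 3007 cycles at an II of 3, which is why reducing II usually matters more than reducing latency.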

The II required to satisfy this constraint is a function of the fMAX of the design. A lower fMAX means a longer clock period, so more of a dependency's logic fits into a single cycle and the II might also be lower. Conversely, at a higher fMAX the same logic spans more clock cycles, so a higher II might be required. The Intel® oneAPI DPC++/C++ Compiler automatically identifies these dependencies and builds hardware to resolve them while minimizing the II, subject to the target fMAX.
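The trade-off between fMAX and II can be illustrated with a simplified calculation. The sketch below assumes that a loop-carried dependency has a fixed logic delay (in picoseconds) and that the II must cover that delay in whole clock cycles. The function name `ii_for_dependency` and the delay values are hypothetical; real timing depends on the device and on how the compiler places registers.

```cpp
#include <cassert>
#include <cstdint>

// Number of clock cycles needed to cover a dependency with a fixed logic
// delay at a given clock frequency.
// The clock period in ps is 1'000'000 / fmax_mhz, so
// cycles = ceil(delay_ps / period_ps) = ceil(delay_ps * fmax_mhz / 1'000'000).
std::uint64_t ii_for_dependency(std::uint64_t delay_ps, std::uint64_t fmax_mhz) {
    assert(fmax_mhz >= 1);
    std::uint64_t cycles = (delay_ps * fmax_mhz + 999999) / 1000000;
    return cycles == 0 ? 1 : cycles;  // II is never below 1
}
```

For a dependency with an assumed 6 ns delay, this model gives an II of 1 at 150 MHz, 2 at 300 MHz, and 3 at 480 MHz.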

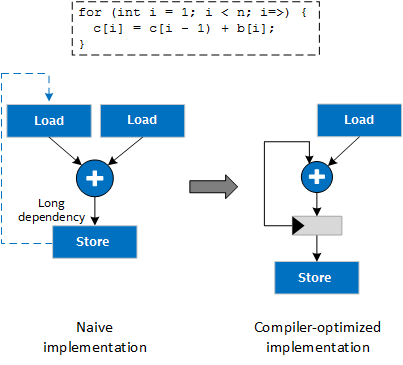

Naively generating hardware for the code in Figure 5 results in two loads: one from memory b and one from memory c. Because the compiler knows that the value at c[i-1] was written in the previous iteration, it can forward that value directly and optimize away the load from c[i-1].

The dependency on the value stored to c in the previous iteration is resolved in a single clock cycle, so an II of 1 is achieved for the loop even though the iterations are not independent.
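The effect of this optimization can be shown in ordinary C++. The code in Figure 5 is not reproduced here, so the loop body below (`c[i] = c[i-1] + b[i]`) is an assumed example that matches the description of loads from b and c. The function names are hypothetical. The second function performs by hand what the text says the compiler does automatically: it keeps the previously stored value in a local variable (a register in hardware) instead of reloading it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive form: every iteration loads both b[i] and c[i-1] from memory.
void accumulate_naive(const std::vector<int>& b, std::vector<int>& c) {
    assert(b.size() == c.size());
    for (std::size_t i = 1; i < c.size(); ++i) {
        c[i] = c[i - 1] + b[i];
    }
}

// Forwarded form: the value written in the previous iteration is carried
// in `prev`, so the loop body loads only from b.
void accumulate_forwarded(const std::vector<int>& b, std::vector<int>& c) {
    assert(b.size() == c.size());
    if (c.empty()) return;
    int prev = c[0];  // single load before the loop starts
    for (std::size_t i = 1; i < c.size(); ++i) {
        prev = prev + b[i];  // loop-carried dependency, resolved in a register
        c[i] = prev;
    }
}
```

Both functions produce the same contents in c. The iterations still depend on each other, but the dependency is a single addition on a register value rather than a memory round trip.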

In cases where the Intel® oneAPI DPC++/C++ Compiler cannot initially achieve II of 1, you have several optimization strategies to choose from:

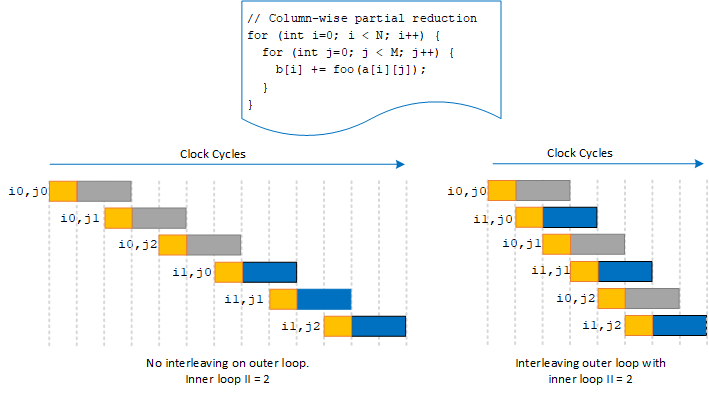

- Interleaving: When the inner loop of a loop nest has an II greater than 1, the Intel® oneAPI DPC++/C++ Compiler can attempt to interleave iterations of the outer loop into iterations of the inner loop to make better use of the hardware resources and achieve higher throughput.

Interleaving

For additional information about controlling interleaving in your kernel, refer to max_interleaving Attribute.
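The potential gain from interleaving can be estimated with a rough model. The sketch below assumes that the outer-loop iterations are independent, that pipeline latency is negligible compared to the trip counts, and that at most as many outer iterations as the inner loop's II can share the inner loop's idle slots. The function name `nest_issue_cycles` and its `interleave` parameter are hypothetical and only model the idea; they do not describe how the compiler or the max_interleaving attribute is implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Approximate cycles spent issuing inner-loop iterations for a loop nest.
// `interleave` is the number of outer iterations allowed to share the
// inner loop at once (1 means no interleaving).
std::uint64_t nest_issue_cycles(std::uint64_t outer_trips,
                                std::uint64_t inner_trips,
                                std::uint64_t inner_ii,
                                std::uint64_t interleave) {
    assert(inner_ii >= 1 && interleave >= 1);
    // Model assumption: only inner_ii issue slots exist per inner iteration.
    std::uint64_t factor = std::min(interleave, inner_ii);
    // Outer iterations are processed in groups of `factor`.
    std::uint64_t groups = (outer_trips + factor - 1) / factor;
    return groups * inner_trips * inner_ii;
}
```

With 8 outer iterations, 100 inner iterations, and an inner II of 2, the model gives 1600 cycles without interleaving and 800 cycles when two outer iterations are interleaved.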

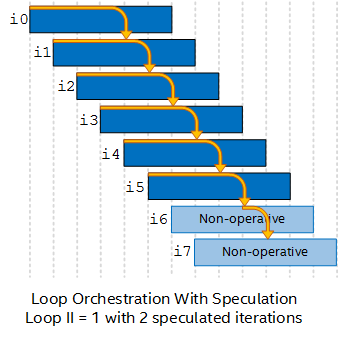

- Speculative Execution: When the critical path affecting II is the computation of the loop's exit condition and not a loop-carried dependency, the Intel® oneAPI DPC++/C++ Compiler can attempt to relax this scheduling constraint by speculatively continuing to execute iterations of the loop while the exit condition is being computed. If the exit condition turns out to be satisfied, the effects of these extra iterations are suppressed. Speculating iterations can achieve a lower II and higher throughput, but it can incur additional overhead between loop invocations (equivalent to the number of speculated iterations). A larger loop trip count helps to minimize this overhead.

NOTE:

A loop invocation is what starts a series of loop iterations. One loop iteration is one execution of the body of a loop.

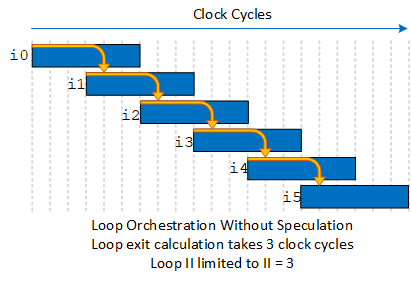

Loop Orchestration Without Speculative Execution

Loop Orchestration With Speculative Execution

These optimizations are applied automatically by the Intel® oneAPI DPC++/C++ Compiler, and they can also be controlled through pragma statements and loop attributes in the design. For additional information, refer to speculated_iterations Attribute.
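The trade-off described for speculative execution can be put into numbers with a small model. The sketch below assumes that each loop invocation issues its real iterations plus the speculated ones, all at the loop's II, and it ignores pipeline latency. The function name `invocation_issue_cycles` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Approximate cycles one loop invocation occupies the loop's issue slot:
// `trip_count` useful iterations plus `speculated` extra iterations whose
// effects are suppressed, each taking `ii` cycles to issue.
std::uint64_t invocation_issue_cycles(std::uint64_t trip_count,
                                      std::uint64_t ii,
                                      std::uint64_t speculated) {
    assert(ii >= 1);
    return ii * (trip_count + speculated);
}
```

Suppose a loop reaches an II of 2 without speculation and an II of 1 with four speculated iterations. For a trip count of 1000, the model gives 2000 cycles versus 1004 cycles. For a trip count of 4, both variants take 8 cycles, which illustrates why a larger trip count is needed to amortize the overhead.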

Pipelining Across Multiple Work Items

A workgroup (a range of work items) represents a large set of independent data that can be processed in parallel. Because there are no implicit dependencies between work items, the next work item can enter the kernel’s datapath at every clock cycle, before previous work items have completed, unless there is a dynamic stall in the datapath. The following figure illustrates the pipeline in Figure 1 filled with work items:

Loops are not pipelined for kernels that use more than one work item in the current version of the compiler. This will be relaxed in a future release.