Developer Guide

FPGA Optimization Guide for Intel® oneAPI Toolkits

Contiguous Memory Accesses

The Intel® oneAPI DPC++/C++ Compiler attempts to dynamically coalesce accesses to adjacent memory locations to improve global memory efficiency. Coalescing is effective when consecutive work items access consecutive memory locations in a given load or store operation. The same applies in a single_task invocation when consecutive loop iterations access consecutive memory locations.
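The single_task case can be pictured with a plain loop. The following is a minimal host-only sketch in standard C++, not SYCL device code: the names VaddLoop and Vadd are hypothetical and do not come from the oneAPI libraries. It only shows the access pattern in which iteration i touches element i of each array, so consecutive iterations touch consecutive memory locations.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of any oneAPI API): the kind of loop one
// might place inside a single_task kernel. Iteration i reads a[i] and b[i]
// and writes c[i], so consecutive iterations access consecutive locations.
void VaddLoop(const int *a, const int *b, int *c, std::size_t n) {
  for (std::size_t i = 0; i < n; ++i) {
    c[i] = a[i] + b[i];
  }
}

// Hypothetical host-side wrapper that allocates the output and runs the loop.
std::vector<int> Vadd(const std::vector<int> &a, const std::vector<int> &b) {
  assert(a.size() == b.size());  // both inputs must have the same length
  std::vector<int> c(a.size());
  VaddLoop(a.data(), b.data(), c.data(), a.size());
  return c;
}
```

In a real kernel, the pointers would typically be replaced by accessors or device pointers. The sketch assumes only that the loop index is used directly as the array index.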

Consider the following code example:

    q.submit([&](handler &cgh) {
      accessor a(a_buf, cgh, read_only);
      accessor b(b_buf, cgh, read_only);
      accessor c(c_buf, cgh, write_only, no_init);
      cgh.parallel_for<class SimpleVadd>(N, [=](id<1> ID) {
        c[ID] = a[ID] + b[ID];
      });
    });

The load operation from the accessor a uses an index that is a direct function of the work-item global ID. By basing the accessor index on the work-item global ID, the Intel® oneAPI DPC++/C++ Compiler can ensure contiguous load operations. These load operations retrieve the data sequentially from the input array and send the read data to the pipeline as required. Contiguous store operations then store elements of the result that exits the computation pipeline in sequential locations within global memory.

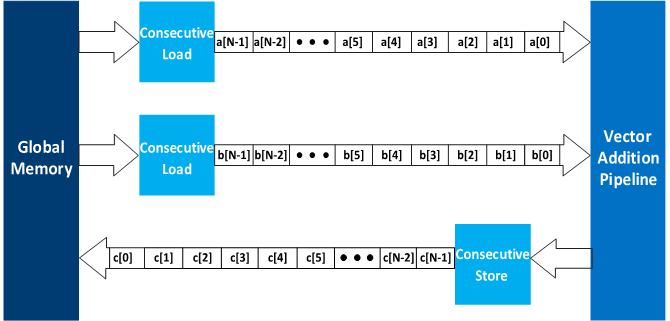

The following figure illustrates an example of the contiguous memory access optimization:

Contiguous load and store operations improve memory access efficiency because they increase access speed and reduce hardware resource usage. Data travels into and out of the computational portion of the pipeline concurrently, which allows computation and memory accesses to overlap. Where possible, index arrays in global memory directly by work-item ID to maximize memory bandwidth efficiency.
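To make the indexing recommendation concrete, the following host-only sketch in standard C++ compares two index mappings. All names (IsContiguous, DirectIndex, StridedIndex) and the stride of 4 are hypothetical and chosen for illustration. The sketch does not model the compiler's coalescing logic. It only checks whether consecutive work-item IDs map to consecutive array indices, which is the property described above.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical check: returns true when work-item IDs 0..n-1 map to
// consecutive array indices, that is, index_of(id) == index_of(id - 1) + 1.
bool IsContiguous(const std::function<std::size_t(std::size_t)> &index_of,
                  std::size_t n) {
  for (std::size_t id = 1; id < n; ++id) {
    if (index_of(id) != index_of(id - 1) + 1) {
      return false;
    }
  }
  return true;
}

// Index is the work-item ID itself, as in c[ID] = a[ID] + b[ID].
std::size_t DirectIndex(std::size_t id) { return id; }

// Illustrative strided mapping: neighboring work items land 4 elements apart.
std::size_t StridedIndex(std::size_t id) { return id * 4; }
```

Under these assumptions, IsContiguous(DirectIndex, 16) holds while IsContiguous(StridedIndex, 16) does not. The direct mapping is the pattern the text recommends.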