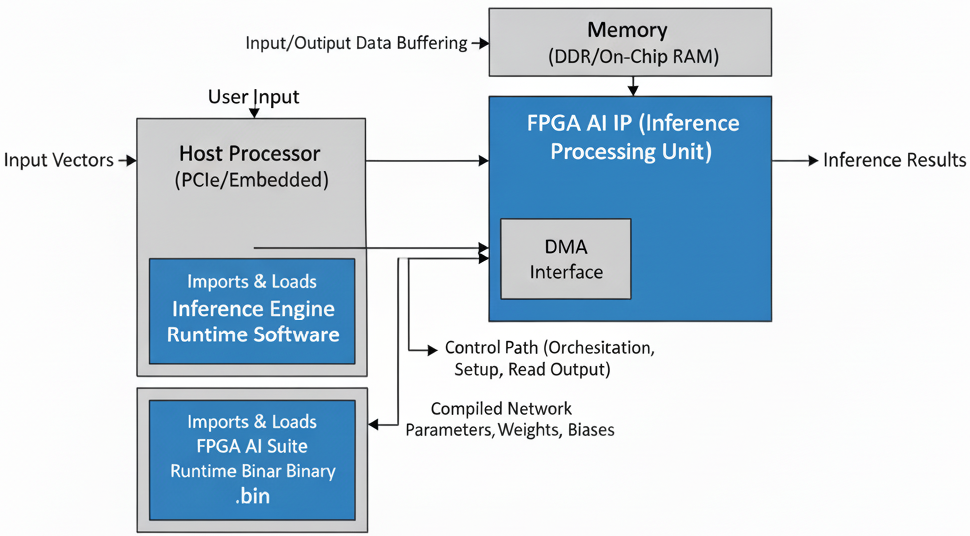

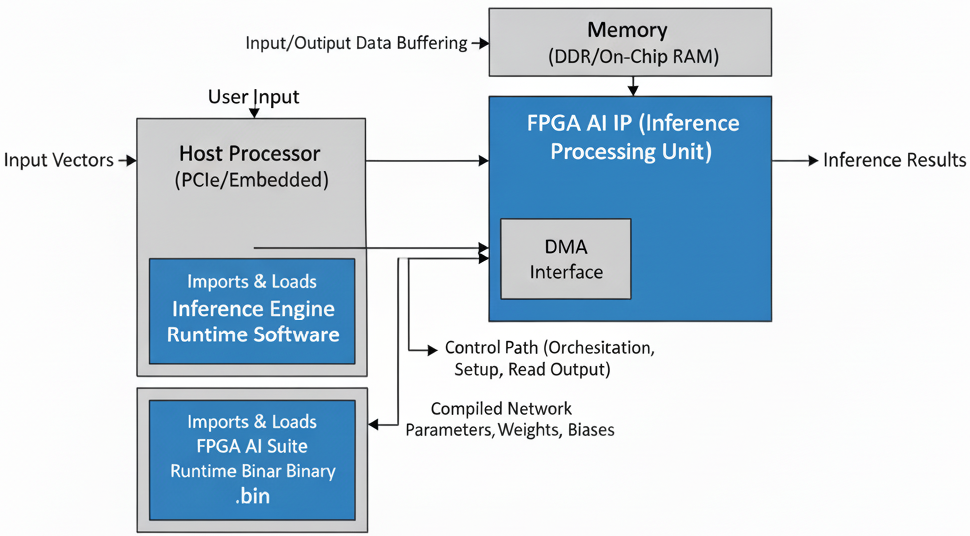

10.2. Interfacing FPGA AI Suite IP with an FPGA Design for a Typical System

In a typical FPGA AI Suite IP system, a host processor (either a PCIe* host or an embedded host) serves as the control path to the IP and performs the following actions:

- Orchestrates the input

- Sets up the FPGA AI Suite IP

- Reads the output of the FPGA AI Suite IP

- Executes the Inference Engine runtime software, which imports and loads the compiled network parameters, weights, and biases contained in the FPGA AI Suite runtime binary (.bin file)
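The host responsibilities listed above can be pictured with a small simulation. The following Python sketch is illustrative only: `MockInferenceIp`, `load_compiled_network`, and `host_control_path` are hypothetical names invented for this example, the "inference" is a placeholder dot product, and none of it reflects the actual FPGA AI Suite runtime API, register map, or .bin file format.

```python
from dataclasses import dataclass, field


@dataclass
class MockInferenceIp:
    """Hypothetical stand-in for the IP; not the real register map or API."""
    weights: list = field(default_factory=list)
    configured: bool = False
    input_buffer: list = field(default_factory=list)
    output_buffer: list = field(default_factory=list)

    def run(self):
        # Placeholder "inference": a dot product of the input and the weights.
        if not self.configured:
            raise RuntimeError("IP must be set up before running")
        total = sum(x * w for x, w in zip(self.input_buffer, self.weights))
        self.output_buffer = [total]


def load_compiled_network(blob):
    """Hypothetical loader: treats each byte of the blob as one weight."""
    return list(blob)


def host_control_path(ip, compiled_blob, input_data):
    # The runtime software imports and loads the compiled network parameters.
    ip.weights = load_compiled_network(compiled_blob)
    # The host sets up the IP.
    ip.configured = True
    # The host orchestrates the input.
    ip.input_buffer = list(input_data)
    ip.run()
    # The host reads the output of the IP.
    return list(ip.output_buffer)


result = host_control_path(MockInferenceIp(), bytes([1, 2, 3]), [10, 20, 30])
print(result)
```

In a real system, each of these steps goes through the runtime software and the platform's host-to-FPGA interface rather than direct attribute assignments. The sketch only shows the order of operations on the control path.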

Figure 26. FPGA AI Suite System Architecture