Despite the recent popularity of generative AI, gradient boosting on decision trees remains one of the best methods for working with tabular data, often delivering better accuracy than other techniques, including neural networks. Many people use the XGBoost*, LightGBM, and CatBoost gradient boosting frameworks to solve real-world problems, conduct research, and compete in Kaggle competitions. Although these frameworks perform well out of the box, their prediction speed can still be improved. Because prediction is arguably the most important stage of the machine learning workflow, performance improvements there can be quite beneficial.

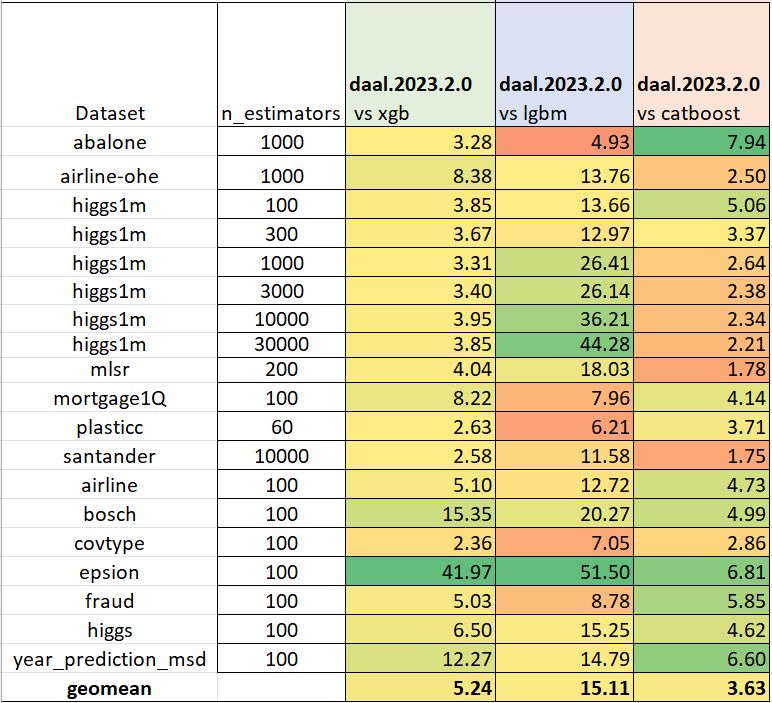

The following example shows how to convert your models to get significantly faster predictions with no quality loss (Table 1).

```python
import daal4py as d4p

# xgb_model is an already trained XGBoost model; test_data holds the rows to score
d4p_model = d4p.mb.convert_model(xgb_model)

d4p_prediction = d4p_model.predict(test_data)
```
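"No quality loss" means the converted model should reproduce the original model's predictions up to floating-point rounding, so it is worth checking this on your own data. The helper below is a minimal sketch, not part of daal4py: `predictions_match` and `max_abs_diff` are hypothetical names, and the default tolerances are assumptions you may need to adjust. It compares the outputs of any two prediction callables element by element.

```python
import math
from typing import Callable, Sequence

def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two prediction vectors."""
    if len(a) != len(b):
        raise ValueError(f"length mismatch: {len(a)} vs {len(b)}")
    return max((abs(float(x) - float(y)) for x, y in zip(a, b)), default=0.0)

def predictions_match(predict_ref: Callable, predict_new: Callable, data,
                      rel_tol: float = 1e-5, abs_tol: float = 1e-5) -> bool:
    """True if both predict callables agree on every row of `data` within tolerance."""
    ref = predict_ref(data)
    new = predict_new(data)
    if len(ref) != len(new):
        return False
    return all(math.isclose(float(x), float(y), rel_tol=rel_tol, abs_tol=abs_tol)
               for x, y in zip(ref, new))
```

For example, assuming `xgb_model` is a scikit-learn-style `XGBRegressor` (whose `predict()` accepts the same `test_data` as `d4p_model.predict()`), you could call `predictions_match(xgb_model.predict, d4p_model.predict, test_data)`. If either call returns a 2D array, flatten it first (for example with NumPy's `ravel()`). A tolerance is used instead of exact equality because summing tree outputs in a different order or precision can change the last few digits.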

Updates to oneDAL

It has been a few years since our last article on accelerated inference (Improving the Performance of XGBoost and LightGBM Inference), so we thought it was time to give an update on changes and improvements since then:

- Simplified API and alignment with gradient boosting frameworks

- Support for CatBoost models

- Support for missing values

- Performance improvements

Simplified API and Alignment with Gradient Boosting Frameworks

The API now consists of just two methods, convert_model() and predict(), so you can integrate it into existing code with minimal changes. Convert a trained model from any of the supported frameworks to daal4py:

XGBoost:

```python
d4p_model = d4p.mb.convert_model(xgb_model)
```

LightGBM:

```python
d4p_model = d4p.mb.convert_model(lgb_model)
```

CatBoost:

```python
d4p_model = d4p.mb.convert_model(cb_model)
```

Estimators in the scikit-learn* style are also supported:

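```python
import xgboost as xgb
from daal4py.sklearn.ensemble import GBTDAALRegressor

# X, y: training features and targets
reg = xgb.XGBRegressor()
reg.fit(X, y)

# Convert the fitted XGBoost estimator and predict with daal4py
d4p_predt = GBTDAALRegressor.convert_model(reg).predict(X)
```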

The updated API lets you work with XGBoost, LightGBM, and CatBoost models all in one place. Just as in the original frameworks, the same predict() method serves both classification and regression models. You can learn more in the documentation.
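To illustrate how a single predict() can serve both tasks, here is a minimal sketch for one row of data, assuming a binary classifier trained with a logistic objective. Each tree contributes one leaf value; a regressor returns the summed raw score, while a classifier passes that score through the sigmoid function and thresholds the resulting probability at 0.5. The function names and the `base_margin` parameter are hypothetical, and this is not daal4py's implementation.

```python
import math
from typing import Sequence, Union

def _sigmoid(x: float) -> float:
    """Numerically stable logistic function (avoids overflow for large |x|)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def predict_row(tree_outputs: Sequence[float], is_regression: bool,
                base_margin: float = 0.0) -> Union[float, int]:
    """Predict one row from the leaf values its trees returned (hypothetical helper).

    base_margin is the ensemble's starting raw score, assumed to be in margin
    (log-odds) space for classifiers.
    """
    margin = base_margin + sum(tree_outputs)  # raw ensemble score
    if is_regression:
        return margin  # regression: the raw score is the prediction
    probability = _sigmoid(margin)  # logistic objective assumed
    return int(probability >= 0.5)  # class label 0 or 1
```

Keeping the task type on the model, rather than exposing separate methods, is what lets calling code stay the same for regressors and classifiers.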

Support for CatBoost Models

Adding CatBoost model support to daal4py broadens the range of gradient boosting models the library can accelerate. CatBoost (short for "categorical boosting") is known for its speed and accuracy, and with daal4py acceleration, its predictions can run even faster. (Note: Categorical features are not supported for inference.) With this addition, daal4py covers three of the most popular gradient boosting frameworks.

Support for Missing Values

Missing values are a common occurrence in real-world data sets. This can happen for a number of reasons, such as human error, data collection issues, or even the natural limitations of an observation mechanism. Ignoring or improperly handling missing values can lead to skewed or biased analyses, which ultimately degrade the performance of machine learning models.

Support for missing values was introduced in daal4py 2023.2. You can now convert models trained on data containing missing values and pass data with missing values at inference time. This results in more accurate and robust predictions while streamlining data preparation for data scientists and engineers.
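XGBoost, for example, handles missing values by learning a default direction at each split during training: a row whose feature value is missing follows that branch instead of being compared with the threshold. The sketch below shows this routing for a single tree. It is a minimal illustration, not oneDAL's implementation; the `Leaf` and `Split` classes are hypothetical, missing values are assumed to be encoded as NaN, and the XGBoost-style rule "go left when the value is below the threshold" is assumed (LightGBM uses a less-than-or-equal comparison instead).

```python
import math
from dataclasses import dataclass
from typing import Sequence, Union

@dataclass
class Leaf:
    value: float  # contribution of this leaf to the ensemble's raw score

@dataclass
class Split:
    feature: int        # index of the feature tested at this node
    threshold: float    # values below the threshold go left (XGBoost-style rule)
    default_left: bool  # branch learned for missing values during training
    left: "Node"
    right: "Node"

Node = Union[Leaf, Split]

def predict_tree(node: Node, row: Sequence[float]) -> float:
    """Walk one tree for one row; NaN values follow each split's default branch."""
    while isinstance(node, Split):
        value = row[node.feature]
        if math.isnan(value):
            node = node.left if node.default_left else node.right
        elif value < node.threshold:
            node = node.left
        else:
            node = node.right
    return node.value
```

Because the default branch is part of the trained model, a converter only has to carry that flag over for predictions on incomplete rows to match the original framework.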

Performance Improvements

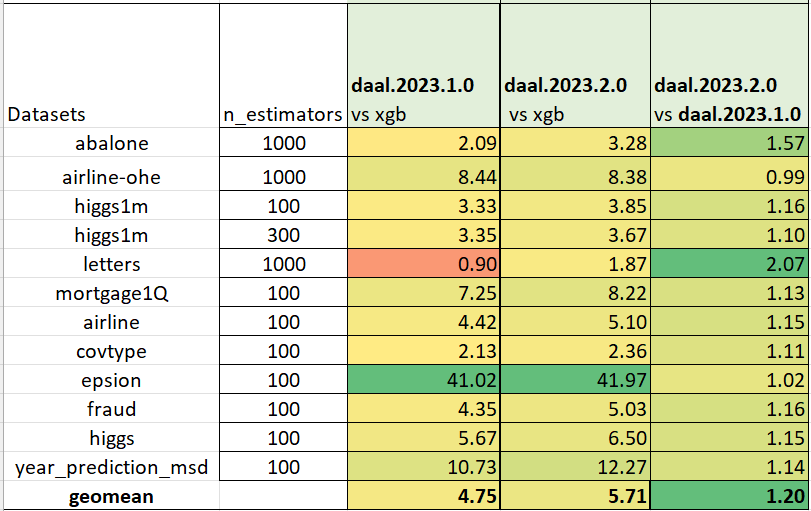

Many additional optimizations have been added to oneDAL since our last performance comparisons (Table 2).

All tests were conducted using scikit-learn_bench running on an AWS* EC2 c6i.12xlarge instance (containing Intel® Xeon® Platinum 8375C with 24 cores) with the following software: Python* 3.11, XGBoost 1.7.4, LightGBM 3.3.5, CatBoost 1.2, and daal4py 2023.2.1.
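For a quick, informal check on your own models and hardware, a minimal timing sketch might look like the following. `best_time` and `speedup` are hypothetical helpers, and taking the best of several runs is one common way to reduce timing noise; it is an assumption here, not the scikit-learn_bench methodology behind Table 2.

```python
import time
from typing import Callable

def best_time(fn: Callable[[], object], repeats: int = 5) -> float:
    """Shortest wall-clock time in seconds over `repeats` calls of fn()."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return min(timings)  # the minimum is least affected by background noise

def speedup(baseline: Callable[[], object], candidate: Callable[[], object],
            repeats: int = 5) -> float:
    """How many times faster `candidate` runs than `baseline` (> 1 means faster)."""
    baseline_time = best_time(baseline, repeats)
    candidate_time = best_time(candidate, repeats)
    # Guard against a zero reading for extremely fast calls on coarse clocks
    return baseline_time / max(candidate_time, 1e-9)
```

For example, assuming both models accept `test_data` directly, `speedup(lambda: xgb_model.predict(test_data), lambda: d4p_model.predict(test_data))` reports how many times faster the converted model is. Results depend on data size, thread count, and hardware.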

We welcome contributions that extend coverage to new cases or bring other improvements.

How to Get daal4py

daal4py is the Python interface to the oneDAL library and is available on PyPI, conda-forge, and the conda main channel. It is a fully open source project, and we welcome issues, feature requests, and contributions through the GitHub repository. To install the library from PyPI, run:

```shell
pip install daal4py
```

Or install the conda-forge variant:

```shell
conda install -c conda-forge daal4py --override-channels
```

These results show that with a simple change to your code, you can make gradient boosting predictions much faster on your existing Intel® hardware. These performance improvements can lower computing costs and deliver results sooner, all without sacrificing quality.