AI is not our future; it is our present reality. Retail, banking and finance, security, governance, healthcare, and industry are just some of the areas where AI is used daily. We are approaching the point where content generated by AI and content produced by humans become indistinguishable. Natural language processing (NLP) is evolving to a level where the interaction between a machine and a human resembles that between two people. This opens new opportunities for governments, entrepreneurs, and companies in the financial and health sectors.

To make machine learning (ML) tasks easier, Intel and Red Hat are developing new solutions. Data mining, model deployment, training, process automation, and data transmission are all enhanced by products like Red Hat OpenShift* Data Science. To increase performance, Intel is simultaneously introducing new technologies and accelerators, including Intel® Advanced Matrix Extensions (Intel® AMX).

Problem Statement

AI gives enterprises a business advantage that they can use in various ways. NLP can help with fraud detection by analyzing large volumes of text efficiently. It can perform sentiment analysis and support healthcare. NLP also enables a more natural form of human–machine interaction. This article shows a simple use of an AI model to answer questions about a company. An intelligent assistant increases customer convenience by reducing response time, and it lowers costs by decreasing the number of people needed to serve customers.

Approach

In this case, the goal is to answer customers’ questions about products, prices, sales, and the company itself. The following example shows a simple way to set up a chatbot using open-source components that can interact with customers. With some programming and development, it can be extended and transformed into a fully functioning, production-ready application that will support business.

To achieve this goal, we use BERT (Bidirectional Encoder Representations from Transformers) and the open-source BERT Question Answering Python* Demo. The example runs on the Red Hat OpenShift Data Science platform with the OpenVINO* Toolkit Operator, on 4th Generation Intel® Xeon® Scalable processors with Intel AMX to provide quicker responses.

Technologies

Red Hat OpenShift Data Science

Red Hat OpenShift Data Science is a service for data scientists and programmers of intelligent applications, available as a self-managed or managed cloud platform. It offers a fully supported environment where ML models may be developed, trained, and tested quickly before being deployed in a real-world setting. Teams can deploy ML models in production on containers — whether on-premises, in the public cloud, in the data center, or at the edge — thanks to the ease with which they can be exported from Red Hat OpenShift Data Science to other platforms.

There are many benefits to using Red Hat OpenShift Data Science for ML. The platform includes a wide range of commercially available partner offerings and open-source tools and frameworks (such as Jupyter Notebooks*, TensorFlow*, PyTorch, and OpenVINO) for data scientists to use in their workflows. Red Hat OpenShift Data Science provides a secure and scalable environment.

Intel® AMX

Intel AMX is a new built-in accelerator that improves the performance of deep learning training and inference on the CPU. It accelerates AI operations and improves performance up to 3x. It is ideal for workloads like NLP, recommendation systems, and image recognition. Performance on 4th Generation Intel Xeon Scalable processors can be fine-tuned using the Intel® oneAPI Deep Neural Network Library (oneDNN), which is part of the Intel® oneAPI Toolkit and is integrated into the TensorFlow and PyTorch AI frameworks and the Intel® Distribution of OpenVINO toolkit. Those toolkits can be used with Red Hat OpenShift Data Science.

Implementation Example

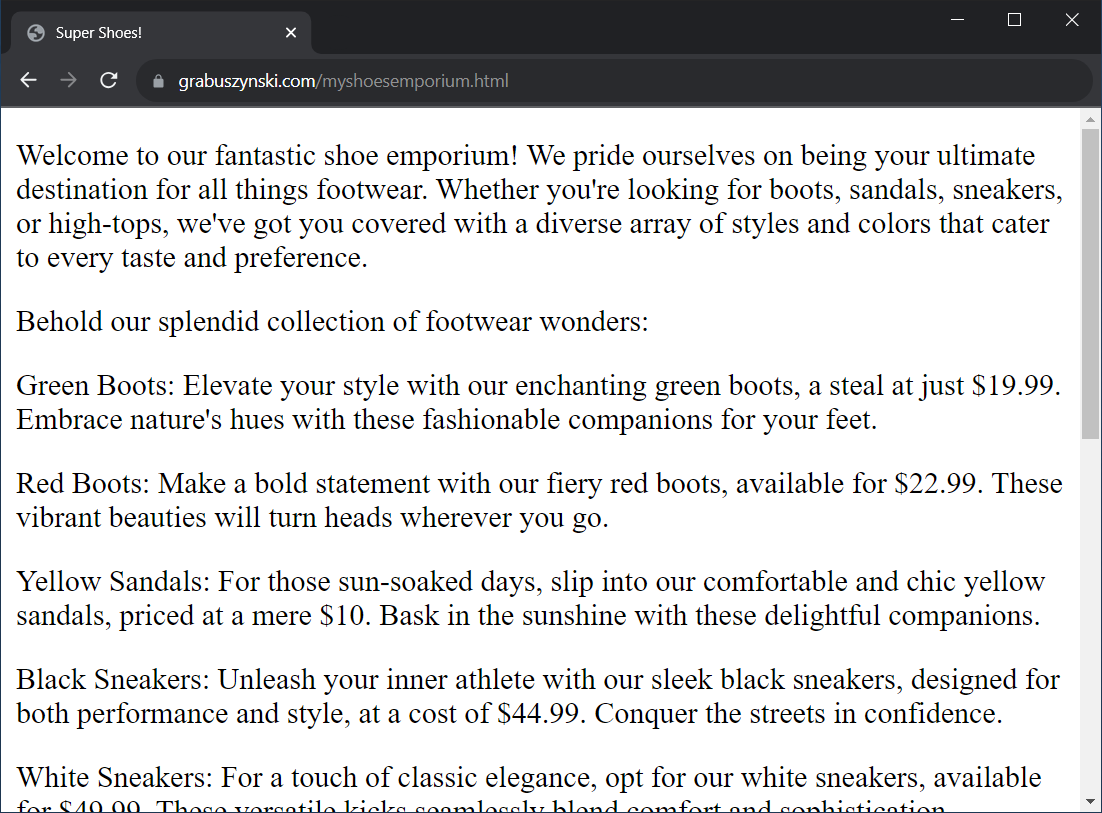

This example uses a simple website describing the Super Shoes! Store as a base (Figure 1). A retailer could use their own website or generate a document containing important information about the company. The following example shows how to develop a simple chatbot that can answer questions, thereby decreasing response time from the customer’s perspective.

To start creating the chatbot, prepare an image registry for the Red Hat OpenShift cluster. This example also requires storage to provide persistent volumes (e.g., OpenShift Data Foundation) and the Node Feature Discovery Operator to detect CPUs that support Intel AMX. The kernel detects Intel AMX at run-time, so there is no need to enable and configure it separately. Check the node’s labels to verify that Intel AMX is available:

feature.node.kubernetes.io/cpu-cpuid.AMXBF16=true

feature.node.kubernetes.io/cpu-cpuid.AMXINT8=true

feature.node.kubernetes.io/cpu-cpuid.AMXTILE=true
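The label check can also be scripted. The following is a minimal sketch, not part of the original setup: the function name `missing_amx_labels` and the sample `example_labels` dictionary are illustrative assumptions. In practice, the labels could be read from the `.metadata.labels` field of `oc get node <node-name> -o json`.

```python
from typing import Dict, List

# Labels that Node Feature Discovery sets on nodes whose CPUs support Intel AMX
# (the three labels listed above).
AMX_LABELS = (
    "feature.node.kubernetes.io/cpu-cpuid.AMXBF16",
    "feature.node.kubernetes.io/cpu-cpuid.AMXINT8",
    "feature.node.kubernetes.io/cpu-cpuid.AMXTILE",
)


def missing_amx_labels(node_labels: Dict[str, str]) -> List[str]:
    """Return the AMX labels that are absent or not set to "true"."""
    return [name for name in AMX_LABELS if node_labels.get(name) != "true"]


# Hypothetical label set for one node; the architecture label is only an
# example of an unrelated label that the check ignores.
example_labels = {
    "kubernetes.io/arch": "amd64",
    "feature.node.kubernetes.io/cpu-cpuid.AMXBF16": "true",
    "feature.node.kubernetes.io/cpu-cpuid.AMXINT8": "true",
    "feature.node.kubernetes.io/cpu-cpuid.AMXTILE": "true",
}

missing = missing_amx_labels(example_labels)
print("Intel AMX available" if not missing else f"Missing labels: {missing}")
```

An empty result means all three labels are present and set to `true`.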

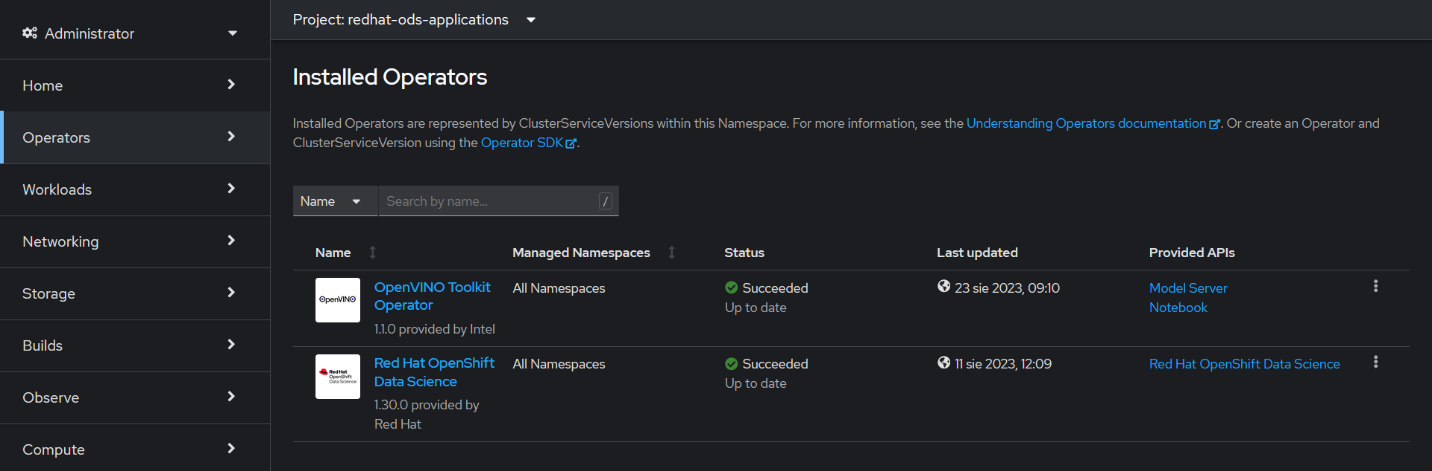

Red Hat OpenShift Data Science and OpenVINO Toolkit Operator must be installed from OperatorHub, available via the OpenShift Container Platform web console (Figure 2). The default settings are sufficient, but please keep the proper order of installation: OpenShift Data Science first, OpenVINO Toolkit Operator second. Then, ensure that both operators are listed in the Installed Operators tab in the redhat-ods-applications project, and that the status is Succeeded.

The OpenVINO Toolkit Operator requires additional configuration. Make sure that the redhat-ods-applications project is selected. Click the OpenVINO Toolkit Operator and choose the Notebook tab. Now, create a new notebook with default settings. After these steps are finished, verify that the openvino-notebooks-v2022.3-1 build is completed by going to Builds > Builds from the main menu.

Use the menu in the top-right corner to go to the Red Hat OpenShift Data Science dashboard (Figure 3).

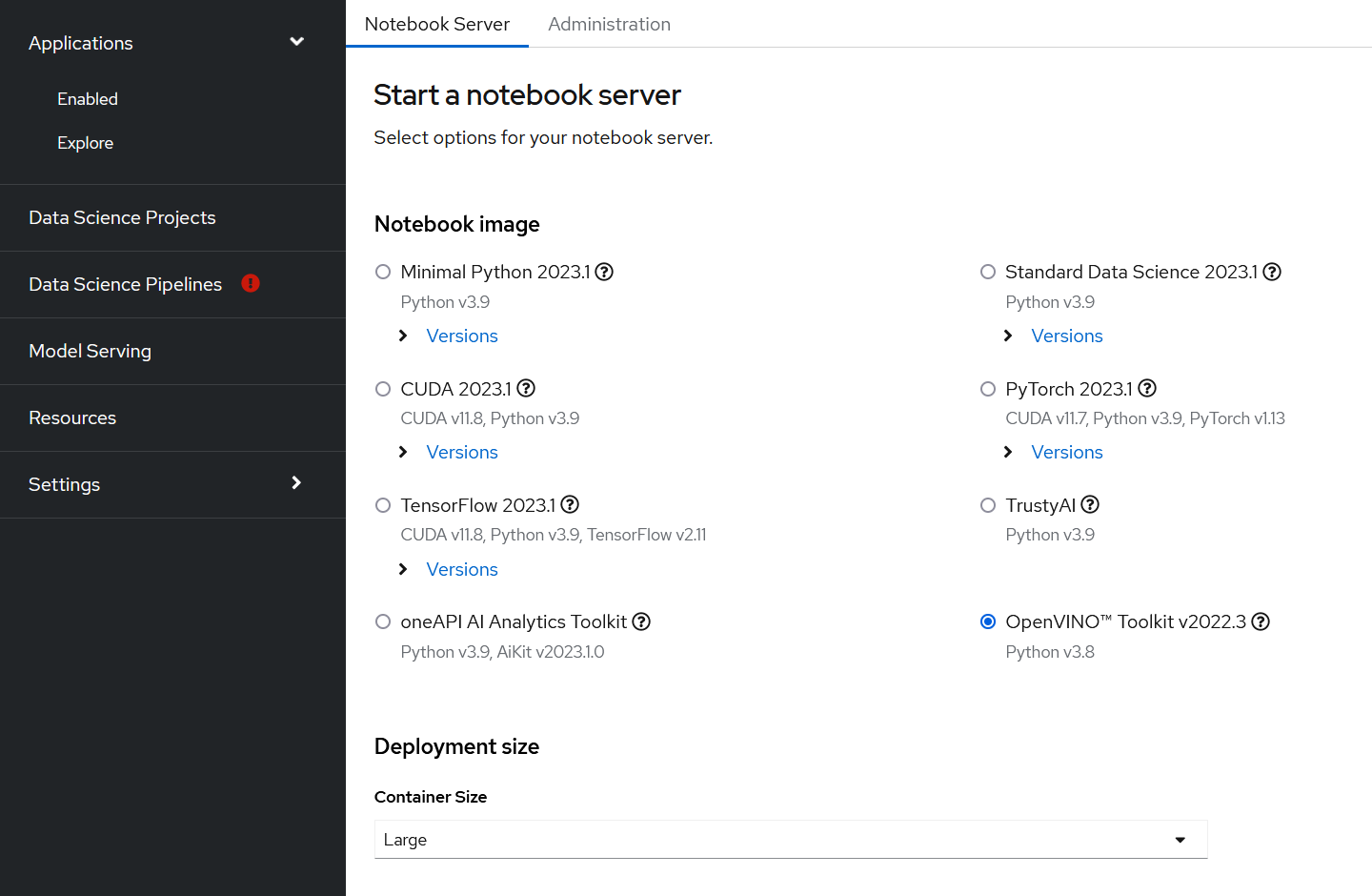

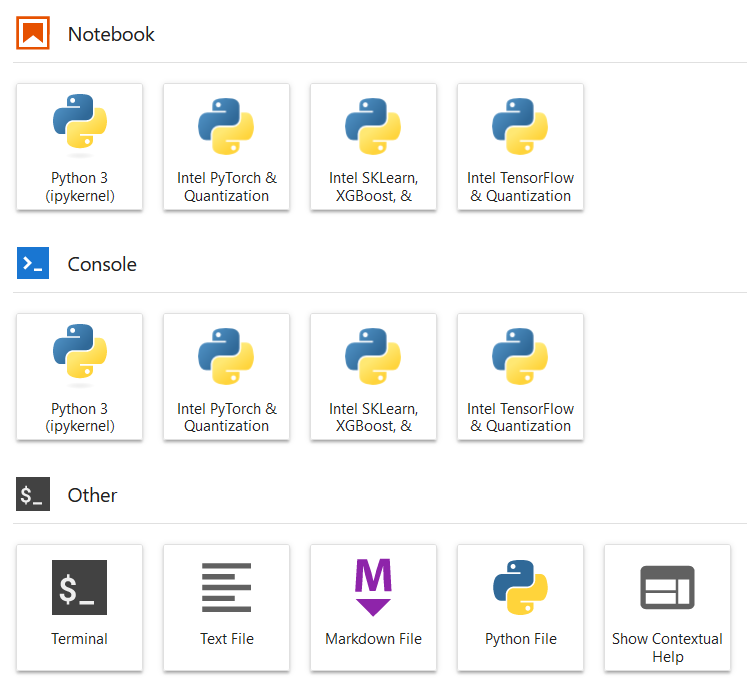

In the Red Hat OpenShift Data Science interface, choose the Applications > Enabled tab. Launch a Jupyter Notebook* application using the OpenVINO Toolkit v2022.3 image. Change the container size to Large (Figure 4).

Choose Terminal in the Launcher window (Figure 5).

Clone the Open Model Zoo GitHub repository, which contains the source code of the demos:

git clone --recurse-submodules https://github.com/openvinotoolkit/open_model_zoo.git

To see all compiled instructions and control the usage of Intel AMX, you can optionally set the ONEDNN_VERBOSE environment variable:

export ONEDNN_VERBOSE=1

As mentioned, this example is based on the BERT Question Answering Python Demo. However, instead of BERT-small, it uses BERT-large.

In the directory open_model_zoo/demos/bert_question_answering_demo/python, there is a models.lst file that contains the list of models supported by the demo and can be used to download them with the following command:

omz_downloader --list models.lst

As you can see, one of the most important advantages of using Red Hat OpenShift Data Science is that all necessary tools (like the Model Downloader used above) and Python packages are built in! Thanks to the OpenVINO Toolkit Operator, you can avoid the long, tedious, and error-prone process of installing components and preparing your development environment.

When the model is downloaded, we are ready to run our first chatbot! Let’s call the script:

python3 bert_question_answering_demo.py --vocab=/opt/app-root/src/open_model_zoo/demos/bert_question_answering_demo/python/intel/bert-large-uncased-whole-word-masking-squad-int8-0001/vocab.txt --model=/opt/app-root/src/open_model_zoo/demos/bert_question_answering_demo/python/intel/bert-large-uncased-whole-word-masking-squad-int8-0001/FP32-INT8/bert-large-uncased-whole-word-masking-squad-int8-0001.xml --input_names="input_ids,attention_mask,token_type_ids" --output_names="output_s,output_e" --input="https://grabuszynski.com/myshoesemporium.html" -c
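Because the command above is long, it can help to assemble it programmatically. The sketch below rebuilds the same argument list from the paths and flags shown in the command; the helper name `build_demo_command` and its `precision` parameter are illustrative assumptions, not part of the demo.

```python
import shlex
from pathlib import PurePosixPath
from typing import List

# Model name and demo directory, copied from the command above.
MODEL_NAME = "bert-large-uncased-whole-word-masking-squad-int8-0001"
DEMO_DIR = PurePosixPath(
    "/opt/app-root/src/open_model_zoo/demos/bert_question_answering_demo/python"
)


def build_demo_command(input_url: str, precision: str = "FP32-INT8") -> List[str]:
    """Assemble the demo invocation for a given input page (hypothetical helper)."""
    model_dir = DEMO_DIR / "intel" / MODEL_NAME
    return [
        "python3",
        "bert_question_answering_demo.py",
        f"--vocab={model_dir / 'vocab.txt'}",
        f"--model={model_dir / precision / (MODEL_NAME + '.xml')}",
        "--input_names=input_ids,attention_mask,token_type_ids",
        "--output_names=output_s,output_e",
        f"--input={input_url}",
        "-c",
    ]


command = build_demo_command("https://grabuszynski.com/myshoesemporium.html")
# Print a shell-quoted version that can be pasted into the terminal.
print(shlex.join(command))
```

The resulting list could also be passed to `subprocess.run(command, check=True)` from the demo directory, with a different URL substituted for the retailer's own page.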

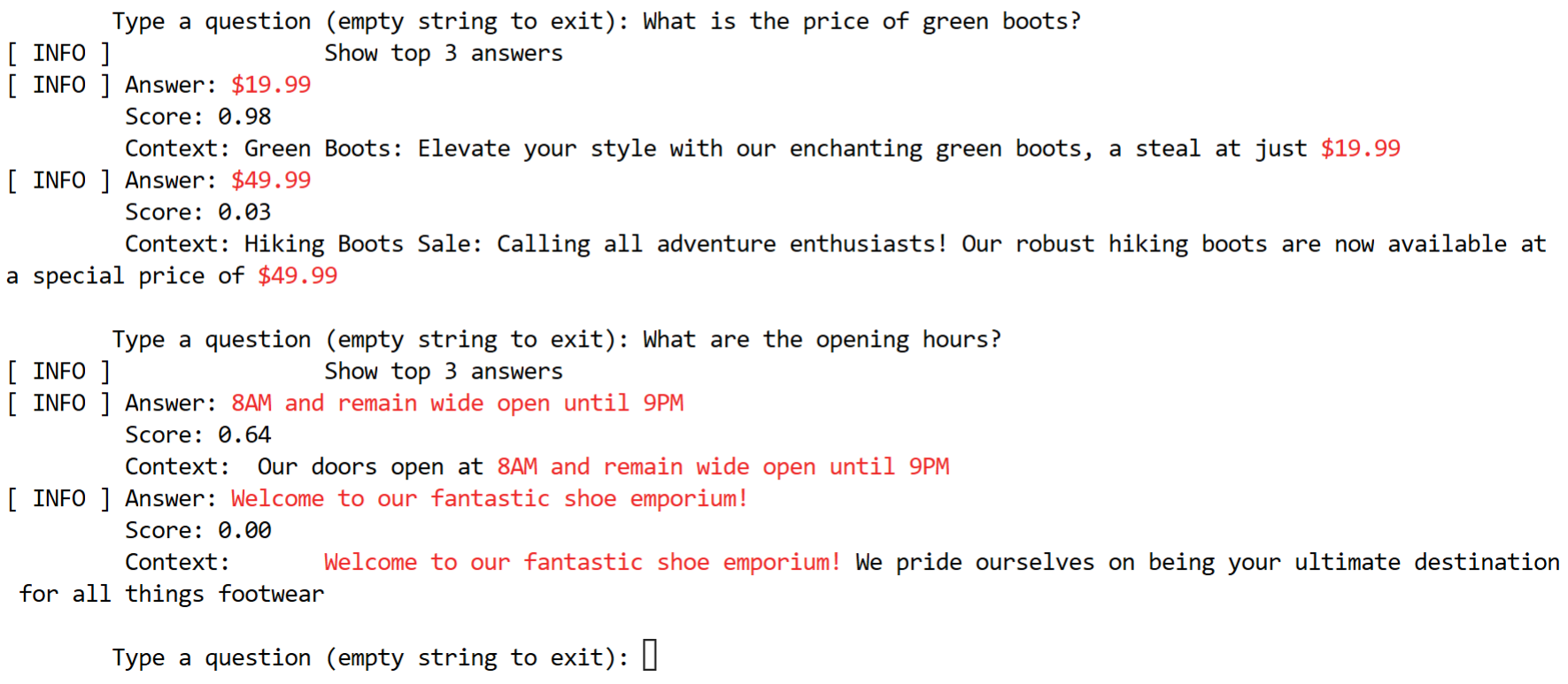

Instead of passing the example page as an input, the retailer can pass a page from their own website. Now, the chatbot can answer questions about the company and its products (Figure 6).
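To give an intuition for how a question-answering model of this kind arrives at an answer, the sketch below shows one common way to turn per-token start and end scores into an answer span. It assumes that the two outputs named in the command (`output_s` and `output_e`) hold start and end scores for each token; the function `best_answer_span`, the token list, and the score values are made up for illustration, and the demo's actual post-processing may differ.

```python
from typing import Sequence, Tuple


def best_answer_span(
    start_scores: Sequence[float],
    end_scores: Sequence[float],
    max_answer_tokens: int = 15,
) -> Tuple[int, int, float]:
    """Return (start, end, score) of the highest-scoring span with start <= end."""
    best = (0, 0, float("-inf"))
    for start, start_score in enumerate(start_scores):
        # Only consider spans of at most max_answer_tokens tokens.
        last = min(start + max_answer_tokens, len(end_scores))
        for end in range(start, last):
            score = start_score + end_scores[end]
            if score > best[2]:
                best = (start, end, score)
    return best


# Hypothetical tokens and scores for a question such as "When do you open?"
tokens = ["we", "open", "at", "nine", "am", "daily"]
start_scores = [0.1, 0.2, 0.3, 4.0, 0.5, 0.1]
end_scores = [0.0, 0.1, 0.2, 1.0, 3.5, 0.3]

start, end, score = best_answer_span(start_scores, end_scores)
print(" ".join(tokens[start:end + 1]))  # prints "nine am"
```

Limiting the span length keeps the search cheap and avoids implausibly long answers.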

If ONEDNN_VERBOSE is set to 1, the log should include avx512_core_amx entries and an ISA line like the following, which confirm that Intel AMX instructions are being used:

onednn_verbose,info,cpu,isa:Intel AVX-512 with float16, Intel DL Boost and bfloat16 support and Intel AMX with bfloat16 and 8-bit integer support
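For long logs, the check can be automated. The following is a minimal sketch that scans captured output for oneDNN verbose lines mentioning AMX; the function name `log_mentions_amx` is an assumption, and the sample text is the log line shown above.

```python
def log_mentions_amx(log_text: str) -> bool:
    """Return True if any oneDNN verbose line in the log mentions AMX."""
    for line in log_text.splitlines():
        # oneDNN verbose lines start with the "onednn_verbose" marker.
        if line.startswith("onednn_verbose") and "amx" in line.lower():
            return True
    return False


sample_log = (
    "onednn_verbose,info,cpu,isa:Intel AVX-512 with float16, Intel DL Boost "
    "and bfloat16 support and Intel AMX with bfloat16 and 8-bit integer support"
)

print(log_mentions_amx(sample_log))  # prints True
```

The demo output could be saved with a shell redirect and read into `log_text` before calling the function.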

It is possible to extend this example. The model could be served using a Model Server. An application that provides an easy-to-use interface would be the cherry on top of this project.

Concluding Remarks

Red Hat and Intel are constantly working on new solutions, technologies, and improvements in AI. This article demonstrated how Red Hat OpenShift Data Science, combined with the OpenVINO toolkit and Intel AMX acceleration, can change the retail sector. Using these cutting-edge technologies with the BERT model in a chatbot solution, retailers can:

- Lower the costs of customer service

- Improve customer satisfaction by decreasing response time

- Potentially increase sales

Retailers can handle and analyze massive volumes of data quickly because of the combined strength of Red Hat OpenShift Data Science and Intel AMX acceleration, which results in better decisions and optimized operations. In the end, this technological convergence gives retailers the resources they need to maintain competitiveness in a rapidly changing market environment.