2.1. IXIA Traffic Test Result

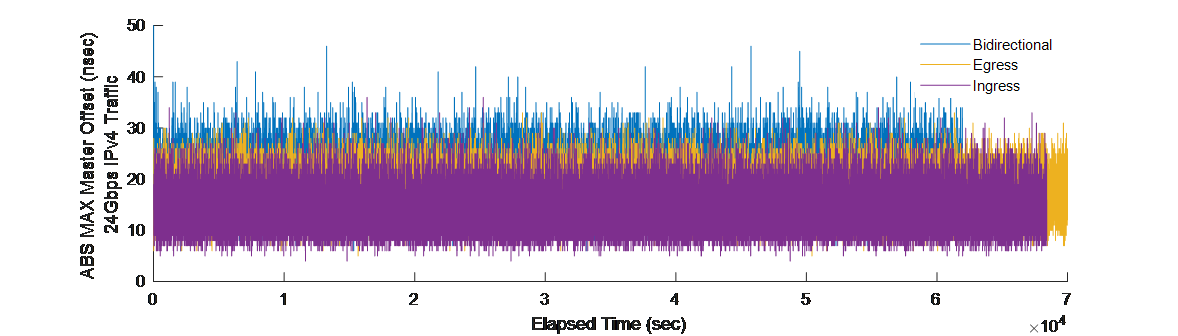

The following analysis captures the PTP performance of the TC-enabled Intel® FPGA PAC N3000 under ingress, egress, and bidirectional traffic conditions. In this section, the G.8275.1 PTP profile is used for all traffic tests and data collection.

| G.8275.1 PTP Profile | Ingress Traffic (24Gbps) | Egress Traffic (24Gbps) | Bidirectional Traffic (24Gbps) |
|---|---|---|---|
| RMS (of master offset) | 6.35 ns | 8.4 ns | 9.2 ns |
| StdDev (of abs(master offset)) | 3.68 ns | 3.78 ns | 4.5 ns |
| StdDev (of MPD) | 1.78 ns | 2.1 ns | 2.38 ns |
| Max abs(master offset) | 36 ns | 33 ns | 53 ns |
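The four metrics in the table can be reproduced from a captured series of master-offset and MPD samples. The sketch below assumes the samples are already available as lists of nanosecond values; the function name `summarize` and its input format are hypothetical. It uses the population standard deviation, which is an assumption because the source does not state which estimator was used.

```python
import math
import statistics


def summarize(offsets_ns, mpd_ns):
    """Compute the table metrics from per-sample PTP data.

    offsets_ns: signed master-offset samples in ns (hypothetical input).
    mpd_ns: mean-path-delay samples in ns (hypothetical input).
    """
    magnitudes = [abs(x) for x in offsets_ns]
    return {
        # Root mean square of the signed master offset.
        "rms": math.sqrt(sum(x * x for x in offsets_ns) / len(offsets_ns)),
        # Population standard deviation of the offset magnitude.
        "stddev_abs_offset": statistics.pstdev(magnitudes),
        # Population standard deviation of the mean path delay.
        "stddev_mpd": statistics.pstdev(mpd_ns),
        # Worst-case (largest) offset magnitude.
        "max_offset": max(magnitudes),
    }


stats = summarize([3, -4, 5, -2], [760, 762, 758, 760])
print(stats)
```

The RMS is taken over the signed offsets, while the standard deviation and maximum are taken over the magnitudes, matching how the table labels its rows.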

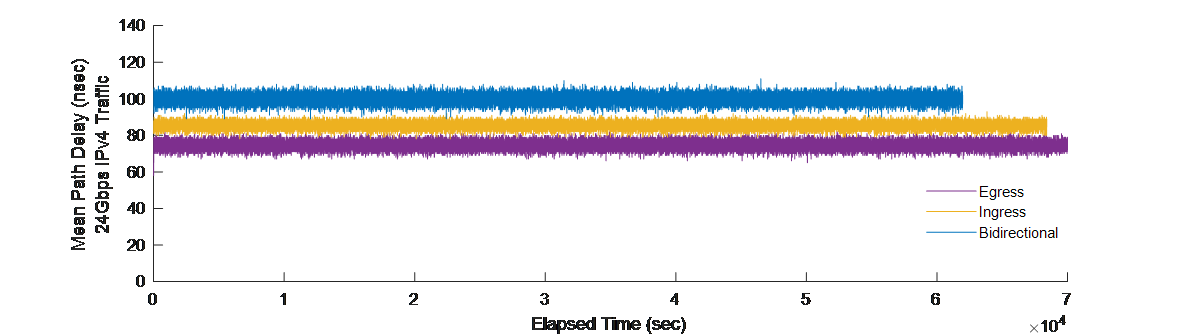

The following figures show the master offset magnitude and the mean path delay (MPD) during a 16-hour, 24 Gbps bidirectional traffic test for two PTP encapsulations. The left graphs in these figures show PTP benchmarks with IPv4/UDP encapsulation, while the right graphs show PTP messages encapsulated in L2 (raw Ethernet).

The PTP4l slave performance is quite similar for both encapsulations: the worst-case master offset magnitude is 53 ns for IPv4/UDP and 45 ns for L2, and the standard deviation of the offset magnitude is 4.49 ns and 4.55 ns, respectively.
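Comparing encapsulations over a 16-hour run usually starts from the ptp4l log. The sketch below assumes the per-sample line shape `ptp4l[<time>]: master offset <ns> s<state> freq <ppb> path delay <ns>` that linuxptp commonly prints; this format, the helper names, and the choice to keep only servo-locked (`s2`) samples are assumptions, so check them against the actual log before relying on the parser.

```python
import re
import statistics

# Assumed ptp4l per-sample line shape, e.g.
# "ptp4l[5374018.735]: master offset -2 s2 freq +2365 path delay 760"
LINE_RE = re.compile(
    r"master offset\s+([+-]?\d+)\s+s(\d)\s+freq\s+([+-]?\d+)"
    r"\s+path delay\s+([+-]?\d+)"
)


def parse_ptp4l_log(lines):
    """Return (offsets_ns, path_delays_ns) for servo-locked (s2) samples."""
    offsets, delays = [], []
    for line in lines:
        m = LINE_RE.search(line)
        if m and m.group(2) == "2":  # skip s0/s1 (unlocked) samples
            offsets.append(int(m.group(1)))
            delays.append(int(m.group(4)))
    return offsets, delays


def encapsulation_summary(lines):
    """Worst-case offset magnitude and spread for one capture."""
    offsets, delays = parse_ptp4l_log(lines)
    mags = [abs(o) for o in offsets]
    return {
        "max_abs_offset_ns": max(mags),
        "stddev_abs_offset_ns": statistics.pstdev(mags),
        "stddev_mpd_ns": statistics.pstdev(delays),
    }
```

Running `encapsulation_summary` once on the IPv4/UDP capture and once on the L2 capture gives the side-by-side numbers quoted above.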

The absolute MPD value is not a clear indication of PTP consistency, because it depends on cable length, data path latency, and so on. However, the low MPD standard deviation (2.381 ns for IPv4/UDP and 2.377 ns for L2) shows that the PTP MPD calculation is consistently accurate, and therefore that PTP performance is consistent across both encapsulation modes. The level changes in the calculated MPD in the L2 graph (in the above figure, right graph) are due to the incremental application of traffic. First, the channel is idle (MPD RMS is 55.3 ns); then ingress traffic is applied (second step, MPD RMS is 85.44 ns); finally, egress traffic is added simultaneously, resulting in a calculated MPD of 108.98 ns.
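For reference, the standard IEEE 1588 delay request-response exchange used by G.8275.1 shows why the MPD level matters less than its variation. Let $t_1$ be the Sync departure time at the master, $t_2$ its arrival at the slave, $t_3$ the Delay_Req departure at the slave, and $t_4$ its arrival at the master (symbols introduced here for illustration), and ignore transparent-clock corrections for now:

$$
\text{MPD} = \frac{(t_2 - t_1) + (t_4 - t_3)}{2}, \qquad
\text{offset} = (t_2 - t_1) - \text{MPD} = \frac{(t_2 - t_1) - (t_4 - t_3)}{2}
$$

Writing the true slave offset as $\theta$ and the one-way delays as $d_{ms}$ (master to slave) and $d_{sm}$ (slave to master), so that $t_2 - t_1 = d_{ms} + \theta$ and $t_4 - t_3 = d_{sm} - \theta$:

$$
\text{MPD} = \frac{d_{ms} + d_{sm}}{2}, \qquad
\text{offset} = \theta + \frac{d_{ms} - d_{sm}}{2}
$$

Latency added equally in both directions therefore raises the MPD level without biasing the offset, whereas asymmetric or fluctuating latency shifts the computed offset by half the difference.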

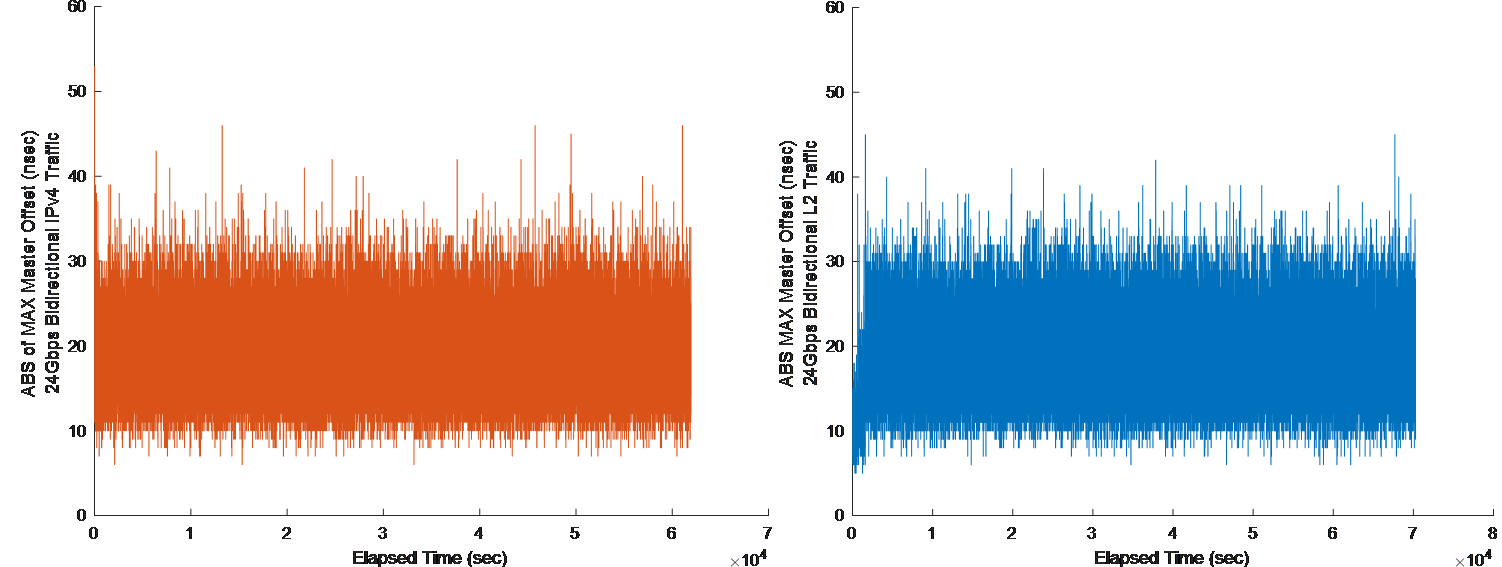

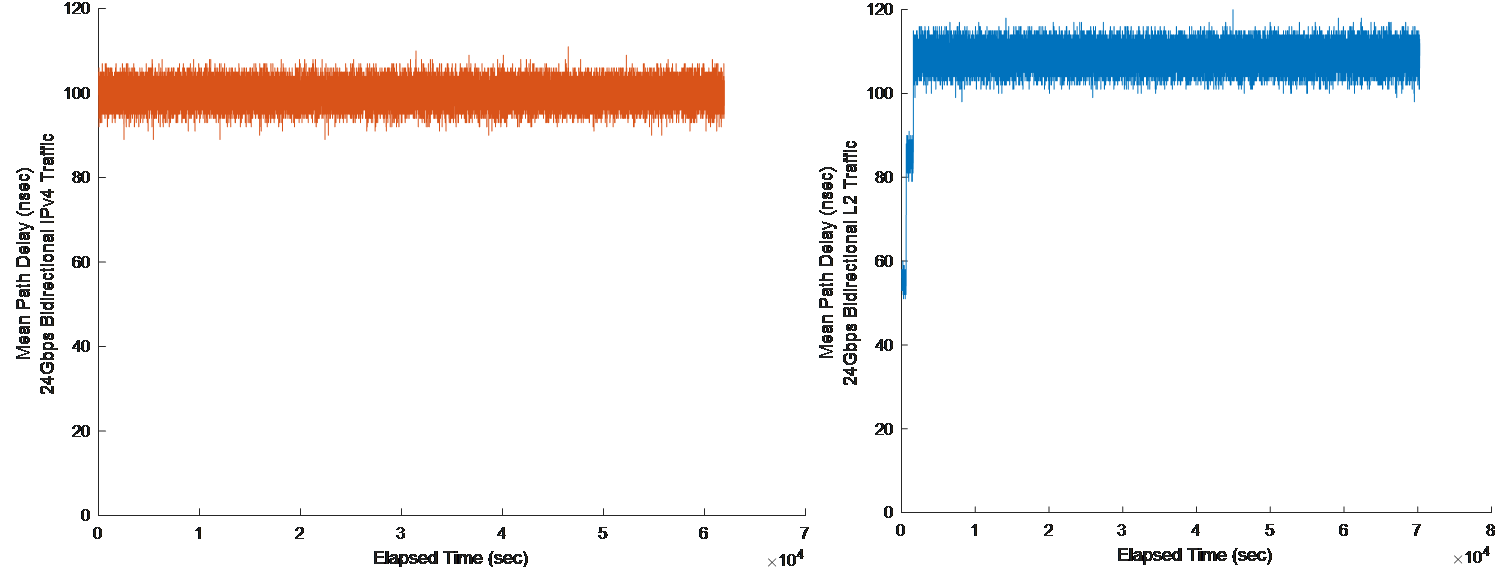

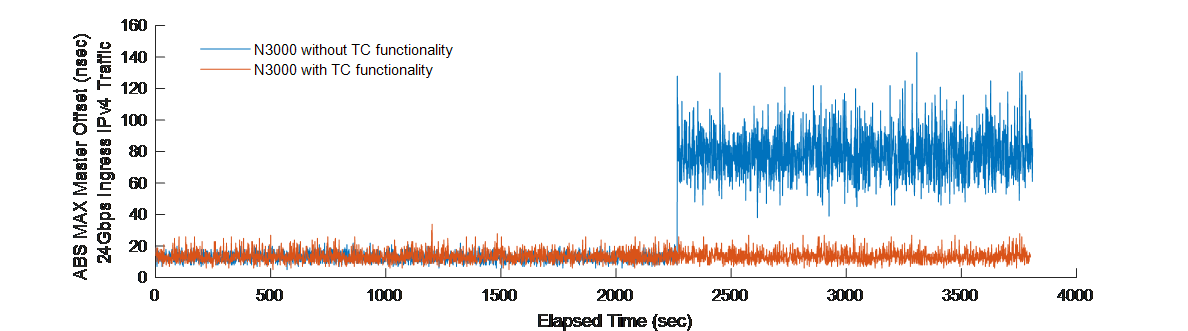

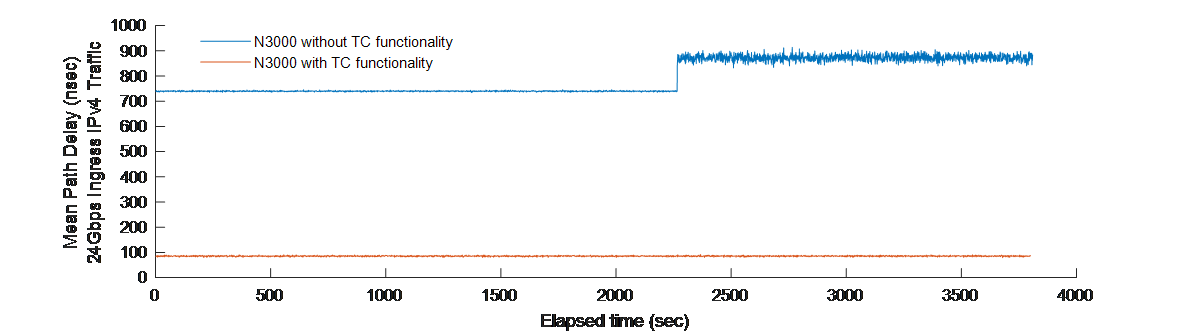

The following figures overlay the master offset magnitude and the calculated MPD from the bidirectional traffic test for two PTP4l slaves: one using the Intel® FPGA PAC N3000 with the T-TC mechanism, and one using an Intel® FPGA PAC N3000 without TC functionality. The T-TC Intel® FPGA PAC N3000 tests (orange) start at time zero, while the test with the non-TC Intel® FPGA PAC N3000 (blue) starts around T = 2300 seconds.

In the above figure, the PTP performance of the TC-enabled Intel® FPGA PAC N3000 under traffic is similar to the non-TC Intel® FPGA PAC N3000 for the first 2300 seconds. The effectiveness of the T-TC mechanism in the Intel® FPGA PAC N3000 is evident in the segment of the test after the 2300th second, where an equal traffic load is applied to the interfaces of both cards. Similarly, the figure below shows the MPD calculations before and after traffic is applied to the channel. There, the T-TC mechanism compensates for the residence time of the packets, that is, their latency through the FPGA data path between the 25G and 40G MACs.
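The compensation can be illustrated with a simplified, single-TC model of one Sync/Delay_Req exchange. The sketch assumes the TC adds each packet's residence time (egress minus ingress timestamp) to the IEEE 1588 `correctionField`, and that the slave subtracts the Sync and Delay_Resp corrections before computing MPD and offset. All numbers and helper names are hypothetical, and correction values are in plain nanoseconds rather than the scaled units used on the wire.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    t1: float  # Sync egress at master (master time, ns)
    t2: float  # Sync ingress at slave (slave time, ns)
    t3: float  # Delay_Req egress at slave (slave time, ns)
    t4: float  # Delay_Req ingress at master (master time, ns)
    corr_sync: float = 0.0  # residence time carried in Sync correctionField
    corr_dreq: float = 0.0  # residence time returned in Delay_Resp correctionField


def simulate(theta, wire_ns, res_sync, res_dreq, tc_enabled):
    """One exchange through a single TC (hypothetical model).

    theta: true slave-minus-master offset; wire_ns: one-way cable/PHY
    delay excluding the TC; res_*: residence time inside the TC.
    """
    t1 = 0.0
    t2 = t1 + wire_ns + res_sync + theta
    t3 = t2 + 1000.0  # slave sends Delay_Req 1000 ns later (arbitrary)
    t4 = t3 - theta + wire_ns + res_dreq
    if tc_enabled:
        return Exchange(t1, t2, t3, t4, res_sync, res_dreq)
    return Exchange(t1, t2, t3, t4)  # no TC: corrections stay at zero


def solve(ex):
    """Return (mean_path_delay, offset_from_master) in ns."""
    ms = ex.t2 - ex.t1 - ex.corr_sync  # corrected master-to-slave delta
    sm = ex.t4 - ex.t3 - ex.corr_dreq  # corrected slave-to-master delta
    mpd = (ms + sm) / 2
    return mpd, ms - mpd


# 10 ns true offset, 50 ns wire delay, uneven queuing inside the FPGA path.
print(solve(simulate(10, 50, 300, 100, tc_enabled=True)))   # (50.0, 10.0)
print(solve(simulate(10, 50, 300, 100, tc_enabled=False)))  # (250.0, 110.0)
```

With the corrections applied, the MPD equals the wire delay and the offset equals the true 10 ns; without them, residence time inflates the MPD and the residence-time asymmetry leaks into the offset as a 100 ns error.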

These figures show that, because the TC corrects for residence time, the PTP4l slave's servo algorithm sees only small variations in the calculated mean path delay. As a result, delay fluctuations have less impact on the master offset estimate.
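Because the offset is computed as the Sync delta minus the MPD, any jitter in the MPD estimate appears one-for-one in the offset unless it is filtered or removed at the source. ptp4l can smooth the path delay through its `delay_filter` and `delay_filter_length` configuration options; the class below is an independent, hypothetical sketch of a moving-median filter in that spirit, not linuxptp's implementation.

```python
import statistics
from collections import deque


class MovingMedian:
    """Moving median over the last `length` path-delay samples (sketch)."""

    def __init__(self, length=10):
        self.window = deque(maxlen=length)

    def update(self, sample_ns):
        self.window.append(sample_ns)
        return statistics.median(self.window)


def offsets(sync_deltas_ns, delay_samples_ns, length):
    """Offset per sample: Sync delta (t2 - t1) minus the filtered MPD."""
    f = MovingMedian(length)
    return [d - f.update(p) for d, p in zip(sync_deltas_ns, delay_samples_ns)]


# True delay 100 ns, true offset 0; one Delay_Req is queued for 200 ns extra.
sync = [100, 100, 100, 100]
mpd = [100, 100, 300, 100]
print(offsets(sync, mpd, length=1))  # unfiltered: the spike hits the offset
print(offsets(sync, mpd, length=3))  # median filter rejects the single spike
```

A filter only masks isolated spikes; sustained traffic-dependent latency still shifts the median, which is why correcting residence time in the TC is the more direct fix.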

The following table summarizes the PTP performance statistics, including the RMS and standard deviation of the master offset, the standard deviation of the mean path delay, and the worst-case master offset, for the Intel® FPGA PAC N3000 with and without T-TC support.

| Ingress Traffic (24Gbps), G.8275.1 PTP Profile | Intel® FPGA PAC N3000 with T-TC | Intel® FPGA PAC N3000 without T-TC |
|---|---|---|
| RMS (of master offset) | 6.34 ns | 40.5 ns |
| StdDev (of abs(master offset)) | 3.65 ns | 15.5 ns |
| StdDev (of MPD) | 1.79 ns | 18.1 ns |
| Max abs(master offset) | 34 ns | 143 ns |

A direct comparison of the TC-enabled Intel® FPGA PAC N3000 with the non-TC version shows that the master offset is roughly 4x to 6x lower (that is, better) with T-TC support, whichever statistical metric is used (worst-case, RMS, or standard deviation of the master offset). The worst-case master offset for the G.8275.1 PTP configuration of the T-TC Intel® FPGA PAC N3000 is 34 ns under ingress traffic at the limit of the channel bandwidth (24.4Gbps).
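The improvement factors can be checked by dividing each non-TC value in the ingress-traffic table by the corresponding T-TC value. The dictionary keys below are hypothetical labels for the table rows.

```python
# Values copied from the ingress-traffic comparison table (ns).
with_tc = {"rms": 6.34, "stddev_abs_offset": 3.65, "stddev_mpd": 1.79, "max_offset": 34}
without_tc = {"rms": 40.5, "stddev_abs_offset": 15.5, "stddev_mpd": 18.1, "max_offset": 143}


def improvement(with_tc, without_tc):
    """Ratio of non-TC to T-TC value for each metric (higher = bigger gain)."""
    return {k: without_tc[k] / with_tc[k] for k in with_tc}


for name, ratio in improvement(with_tc, without_tc).items():
    print(f"{name}: {ratio:.1f}x")
# rms: 6.4x
# stddev_abs_offset: 4.2x
# stddev_mpd: 10.1x
# max_offset: 4.2x
```

The three master-offset metrics improve by about 4.2x to 6.4x, while the MPD standard deviation improves by about 10x, consistent with the TC removing residence-time jitter from the path delay calculation.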