AN 1020: Using the FPGA AI Suite IP with High Bandwidth Memory on Stratix® 10 MX and Agilex™ 7 M-Series Devices

2.2.1. Memory Size and Bandwidth Considerations

The first consideration when using HBM with the FPGA AI Suite IP is the 2 GB address space limitation created by the structure of the HBM memory. Evaluate whether 2 GB is sufficient memory for your FPGA AI Suite IP instances.

Direct Mapping of an FPGA AI Suite IP Instance to an HBM Channel

If the 2 GB size per channel is enough for each of your FPGA AI Suite IP instances, then the simplest (and most straightforward) path is to use only one pseudo channel per FPGA AI Suite IP instance and leave the second pseudo channel on the same layer and the other HBM channels dangling.

Benefits:

- Easiest implementation, no additional logic required except for data width considerations. For details about data width considerations, refer to Memory Data Width Considerations.

Limitations:

- Only 2 GB of memory is available for each FPGA AI Suite IP instance (instead of the maximum addressable of 4GB).

- Only can use of one HBM channel (or, more precisely, only one pseudo channel), which is half as wide (256 bit vs 512 bit) as the corresponding DDR4 channel in the current FPGA AI Suite IP implementation.

Channel Stitching

If the 2 GB size per channel does not meet your requirements for the total off-die memory per FPGA AI Suite IP instance, you must develop additional logic to point your FPGA AI Suite IP memory accesses toward the HBM memory controller (such an address decoding scheme).

An address decoding scheme can allow you to use two independent channels that each address 2 GB of HBM memory together to provide a 4 GB memory area for a single IP instance. In such a case, you are not making use of the parallel bandwidth of both channels but limiting the system to use only one or the other channel.

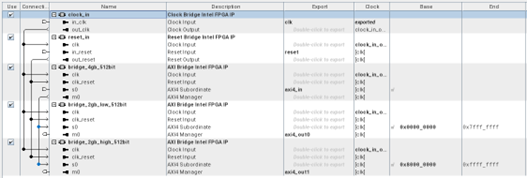

You could implement this scheme in Platform Designer by creating a system with the following features:

- An input pipeline stage that connects to the AXI4 output of the FPGA AI Suite IP instance with an address width that addresses the full 4 GB of memory.

- Two output pipeline stages, each with an address width that addresses 2 GB of memory each. The output stages are parameterized to use either the lower or upper 2 GB of the total 4 GB of memory. The outputs of each of these two output pipeline stages are connected to a separate channel of the HBM memory controller.

Benefits:

- Full 4 GB addressable memory space of FPGA AI Suite IP is available.

Limitations:

- Requires you to create additional address decoding logic to spread the 4 GB addressable memory space of the FPGA AI Suite IP to two 2 GB HBM channels.

- No bandwidth benefits compared to the simple approach in Direct Mapping of an FPGA AI Suite IP Instance to an HBM Channel.

Channel Stitching and Interleaving

Expanding on top of channel stitching, using the available double bandwidth requires additional interleaving logic in addition to the simple address decoding logic described earlier.

For an example of this interleaving logic, review the OpenCL™ Memory Bank Divider implementation described in Intel FPGA SDK for OpenCL™ Pro Edition: Custom Platform Toolkit User Guide . While the OpenCL™ implementation is based on an Avalon® memory-mapped interface, the logic and techniques used can be applied the AXI4 interface using in the FPGA AI Suite IP.

Benefits:

- Full 4 GB addressable memory space of FPGA AI Suite IP is available.

- Ideally bandwidth is increased as two HBM channels are used by interleaving the traffic from the FPGA AI Suite IP instance to the two respective HBM layers.

Limitations:

- Requires you to create additional interleaving and address decoding logic to spread the 4 GB addressable memory space of the FPGA AI Suite IP to two 2 GB HBM channels and handle interleaving at memory page granularity level.