Introduction

For real-world adoption, AI analysis of medical images must keep pace with clinical workflows. In practice, this means AI models must meet latency and response-time expectations, and health AI developers must be able to deploy them within their performance and cost requirements.

Developers have choices when it comes to selecting the compute infrastructure for model deployment as they balance the performance needs of the clinical workflow and cost. CPU-based instances in the cloud offer cost advantages and enable the processing of large images and deep models because of the larger available memory.

Intel is working with Microsoft Azure* to optimize two models for Intel® CPUs and deployment on Azure machine learning instances: a chest X-ray image classification model based on the PadChest dataset, and a brain resting-state functional magnetic resonance imaging (fMRI) classification model.

Use Cases

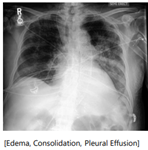

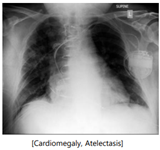

Understanding PadChest

PadChest is a large chest X-ray image dataset with multilabel annotated reports. It comprises 160,000 images in 16-bit grayscale .png format gathered from 67,000 patients. Each image is approximately 8 MB, with a resolution of 2,000 x 2,000 pixels.
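As a rough sanity check on these figures, the sketch below estimates the uncompressed size of one image and of the whole collection. It assumes every image is exactly 2,000 x 2,000 pixels at 2 bytes per pixel and ignores PNG compression and metadata, so real file sizes will differ.

```python
# Back-of-the-envelope storage estimate using the figures quoted above.
# Assumptions (not from the dataset documentation): every image is exactly
# 2,000 x 2,000 pixels, and PNG compression and metadata are ignored.

WIDTH_PX = 2_000
HEIGHT_PX = 2_000
BYTES_PER_PIXEL = 2      # 16-bit grayscale
NUM_IMAGES = 160_000


def raw_image_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Uncompressed size of a single-channel image in bytes."""
    return width * height * bytes_per_pixel


per_image = raw_image_bytes(WIDTH_PX, HEIGHT_PX, BYTES_PER_PIXEL)
total = per_image * NUM_IMAGES

print(f"Per image: {per_image / 1e6:.1f} MB")
print(f"Dataset:   {total / 1e12:.2f} TB")
```

Under these assumptions, one image occupies 8 MB of raw pixel data, which is consistent with the per-image size quoted above, and the full collection comes to roughly 1.28 TB.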

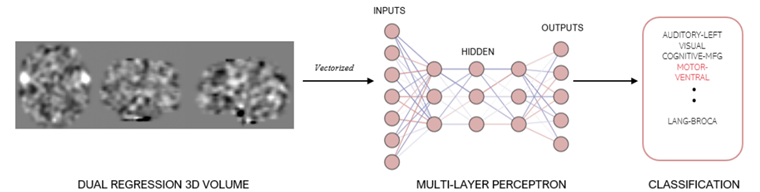

Understanding fMRI

fMRI is a noninvasive imaging technique to understand brain activity. Resting-state fMRI can assess time-varying, spatially related signal changes in the brain that represent a set of intrinsic brain networks.

Resting-state (rs) fMRI can be analyzed using independent component analysis (ICA). For a cohort of subjects with rs-fMRI, one can perform classification at the group level and then apply it to subject-level data. This makes it possible to efficiently label a large number of subject-level rs-fMRI data for subsequent AI model training. The process involves:

- Performing ICA at the group level to identify common, large-scale, intrinsic brain networks.

- Applying dual regression to back project group-level ICAs to subject-specific contributions, transferring the group ICA label to the subject-specific ICA component.

- Inputting subject-specific, labeled ICA components to a deep learning network model trained to label functional brain networks. Our current experiment focused on a ResNet neural network model.
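The dual regression step above can be sketched as two least-squares fits. This is a minimal illustration with NumPy on synthetic data, not the pipeline used in this work: the array shapes, the function name `dual_regression`, and the network labels are all hypothetical, and production tools typically add steps such as demeaning and normalization that are omitted here.

```python
import numpy as np


def dual_regression(subject_data, group_maps):
    """Two-stage least-squares back-projection of group ICA maps.

    subject_data: (timepoints, voxels) array for one subject.
    group_maps:   (components, voxels) array of group-level spatial maps.
    Returns (time_courses, subject_maps) with shapes
    (timepoints, components) and (components, voxels).
    """
    # Stage 1 (spatial regression): find time courses A such that
    # subject_data ~= A @ group_maps.
    time_courses = np.linalg.lstsq(group_maps.T, subject_data.T, rcond=None)[0].T

    # Stage 2 (temporal regression): find subject maps S such that
    # subject_data ~= time_courses @ S.
    subject_maps = np.linalg.lstsq(time_courses, subject_data, rcond=None)[0]
    return time_courses, subject_maps


# Synthetic, noise-free example: 3 components, 50 voxels, 20 time points.
rng = np.random.default_rng(0)
group_maps = rng.normal(size=(3, 50))
true_courses = rng.normal(size=(20, 3))
subject_data = true_courses @ group_maps

# Hypothetical group-level labels; component k of the subject inherits label k.
group_labels = ["network_a", "network_b", "network_c"]
courses, subject_maps = dual_regression(subject_data, group_maps)
labeled_components = list(zip(group_labels, subject_maps))
```

Because each subject-specific map keeps the index of the group component it was regressed from, the group label transfers directly, and the labeled maps can then serve as training inputs for a classifier.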

Optimization with Intel® Tools and Frameworks

Intel optimized the models using two different tools: Intel® Extension for PyTorch* and Intel® Distribution of OpenVINO™ toolkit. Developers can choose either of these tools.

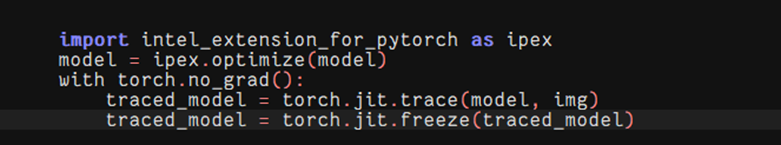

- Intel Extension for PyTorch provides up-to-date feature optimizations for an extra performance boost on Intel CPUs by taking advantage of Intel® Advanced Vector Extensions 512 (Intel® AVX-512), Vector Neural Network Instructions (VNNI), and Intel® Advanced Matrix Extensions (Intel® AMX). It requires only minimal code changes.

- The OpenVINO toolkit provides additional performance gains and flexibility to deploy on a variety of edge and cloud hardware architectures, including integrated graphics on edge-based client devices. Trained PyTorch and TensorFlow* models are converted to an intermediate representation and applications use the OpenVINO APIs for inference.
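To give a feel for how small the code changes for either tool can be, here is a hedged sketch of both paths. It is not the code from the notebooks: the function names, the `model.onnx` file name, and the ONNX export route are assumptions made for illustration, and `model` stands for any trained `torch.nn.Module`. The OpenVINO import path shown matches the 2023.x releases and may differ in other versions. Library imports are placed inside the functions so the sketch can be read without the packages installed.

```python
# Sketch only: assumes torch, intel_extension_for_pytorch, and openvino
# are installed when the functions are actually called.

# Input shape listed for PadChest under System Configurations:
# (batch, channels, height, width).
PADCHEST_INPUT_SHAPE = (1, 1, 224, 224)


def optimize_with_ipex(model):
    """Apply Intel Extension for PyTorch optimizations to an FP32 model."""
    import intel_extension_for_pytorch as ipex

    model.eval()                  # switch to inference mode before optimizing
    return ipex.optimize(model)   # returns the optimized model


def infer_with_openvino(model, onnx_path="model.onnx"):
    """Export a PyTorch model to ONNX, then run it on CPU with OpenVINO."""
    import torch
    from openvino.runtime import Core

    model.eval()
    example = torch.randn(*PADCHEST_INPUT_SHAPE)
    torch.onnx.export(model, example, onnx_path)   # hypothetical file name

    core = Core()
    compiled = core.compile_model(core.read_model(onnx_path), "CPU")
    result = compiled([example.numpy()])
    return result[compiled.output(0)]
```

With Intel Extension for PyTorch, the rest of the inference code stays ordinary PyTorch. With OpenVINO, the model leaves PyTorch entirely, which is what allows the same converted model to be deployed on other supported hardware.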

These optimizations reduce latency and increase throughput without sacrificing accuracy, and they preserve the precision of the model weights.

Optimized code is available in a Jupyter* Notebook for both PadChest and fMRI use cases.
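When comparing stock and optimized models yourself, latency and throughput can be measured with a small timing harness like the one below. This is a generic sketch, not the benchmarking methodology behind the results in this article. The function names, warm-up count, and run count are illustrative choices, and `infer` is any callable that runs one inference on `batch`.

```python
import statistics
import time


def benchmark(infer, batch, batch_size=1, warmup=5, runs=50):
    """Time repeated calls to infer(batch); summarize latency and throughput."""
    for _ in range(warmup):        # warm-up calls are not timed
        infer(batch)

    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(batch)
        latencies.append(time.perf_counter() - start)

    mean_latency = statistics.mean(latencies)
    return {
        "mean_latency_ms": mean_latency * 1000,
        "median_latency_ms": statistics.median(latencies) * 1000,
        "throughput_per_s": batch_size / mean_latency,
    }


def speedup(baseline, optimized):
    """Ratio of optimized throughput to baseline throughput."""
    return optimized["throughput_per_s"] / baseline["throughput_per_s"]


# Stand-in workload so the sketch runs without a real model.
def fake_model(values):
    return sum(v * v for v in values)


stats = benchmark(fake_model, list(range(1000)), runs=20)
print(stats)
```

Running `benchmark` once per variant on the same input and passing the two results to `speedup` gives a like-for-like ratio, provided both runs use the same hardware, batch size, and thread settings.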

Results

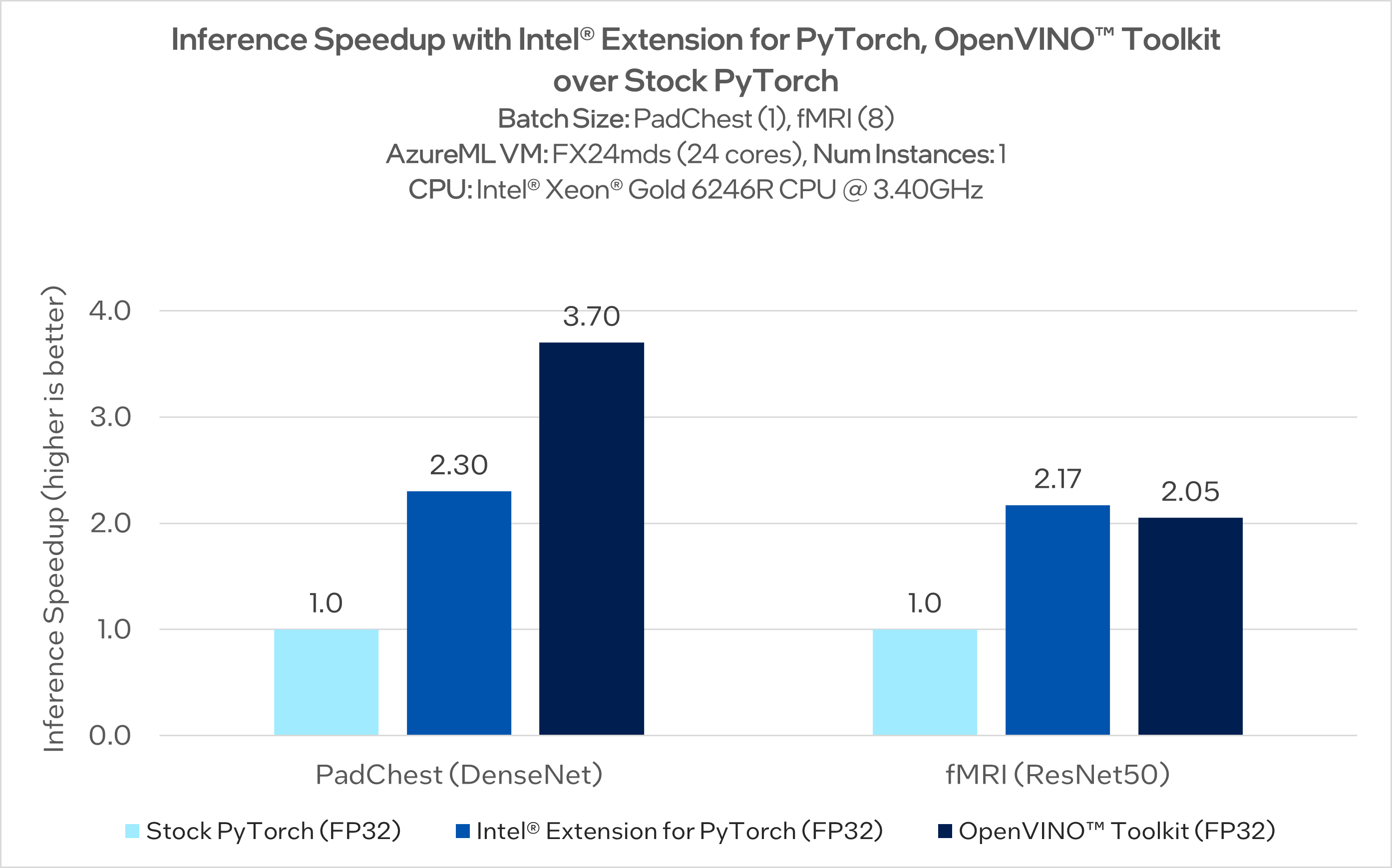

The following is a summary of performance benchmark results. For more information, see System Configurations.

First, model weight precision FP32:

Next is a more detailed comparison of stock PyTorch, Intel Extension for PyTorch, and OpenVINO toolkit performances for each use case.

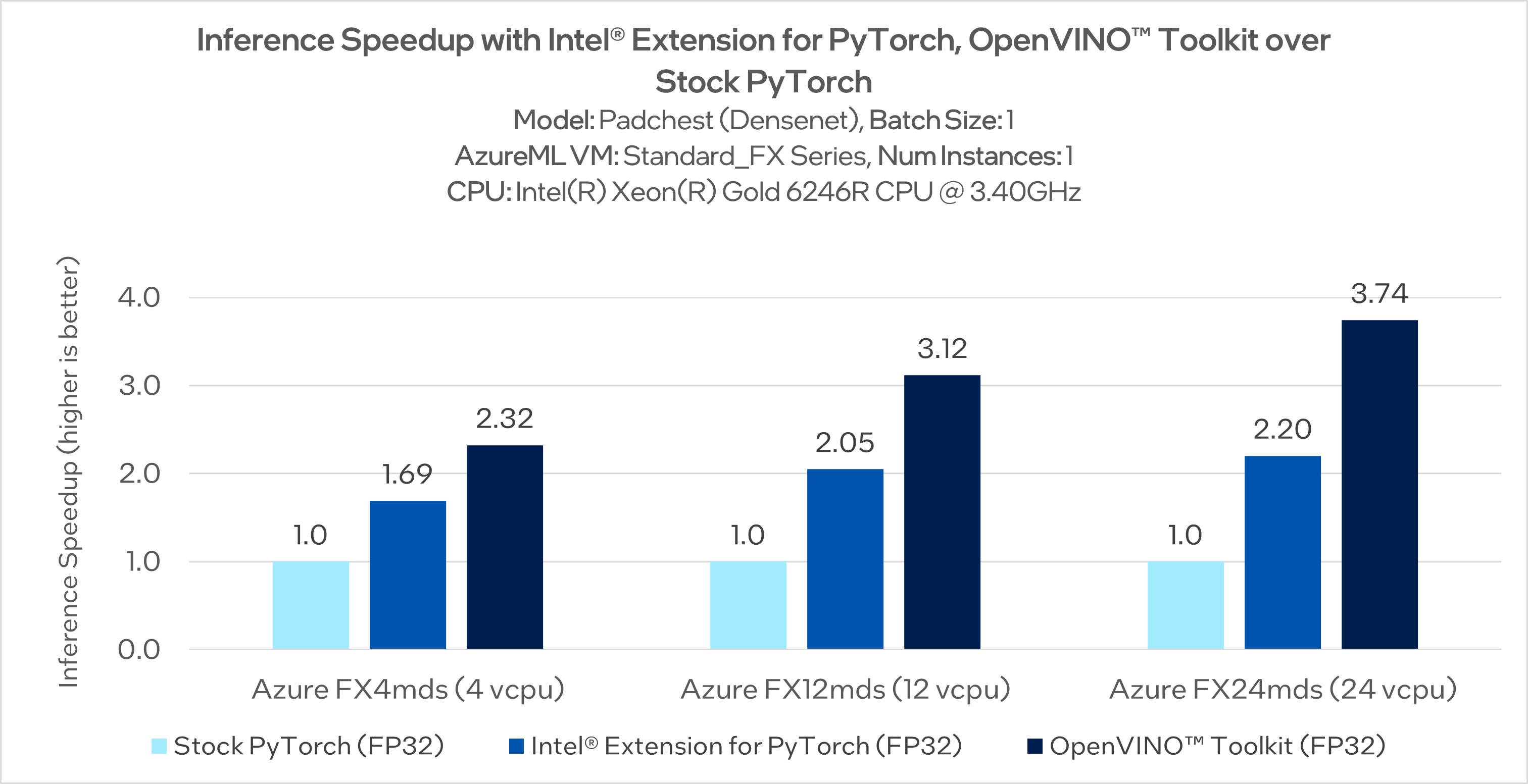

- PadChest (DenseNet, FP32) – Azure machine learning

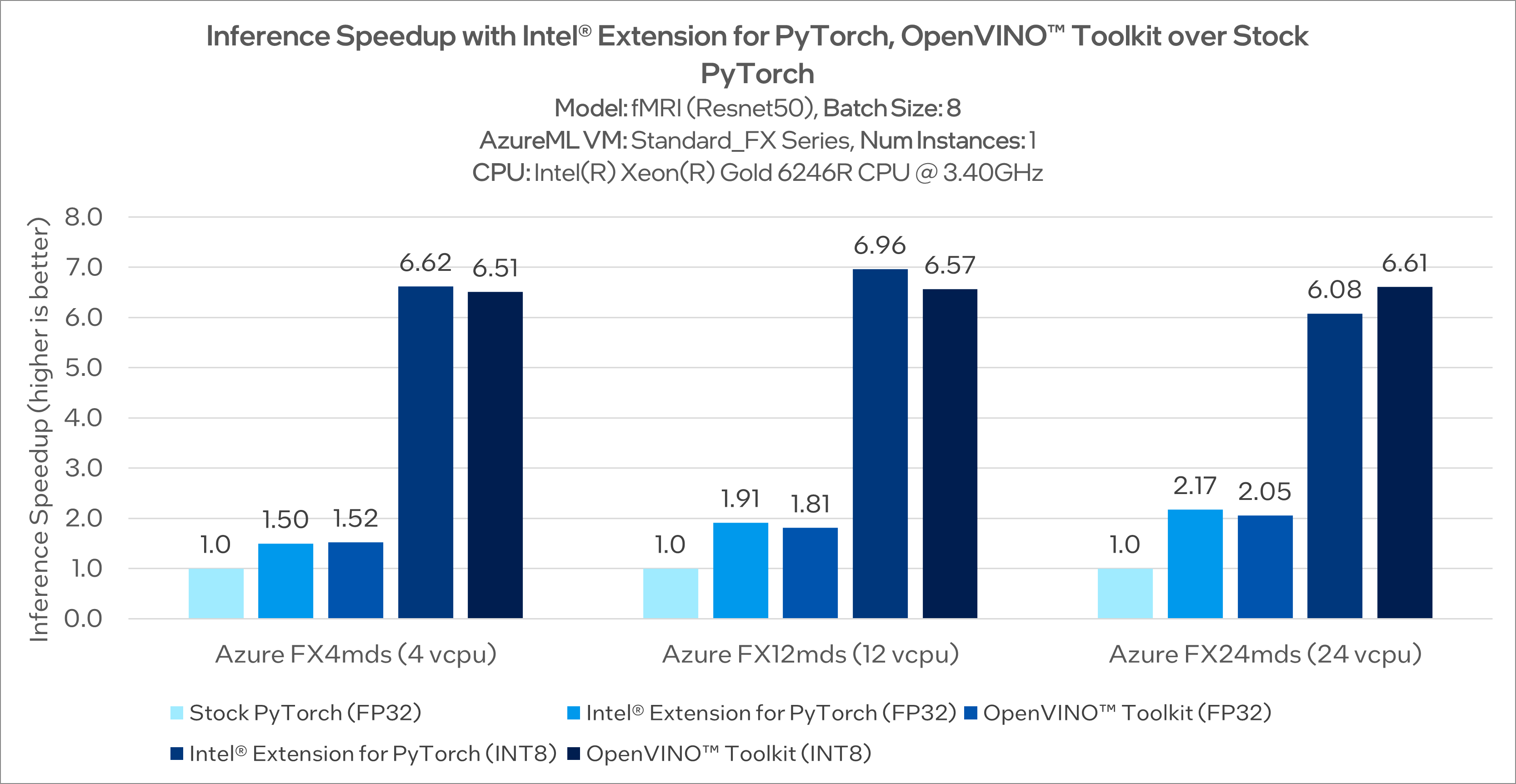

- fMRI (ResNet 50, FP32, int8) – Azure machine learning

For further optimizations, we quantized the fMRI ResNet 50 model weight precision from FP32 to int8. For Intel Extension for PyTorch, we used its graph optimization techniques. For the OpenVINO toolkit, we used the Neural Network Compression Framework (NNCF).

Quantizing the model to int8 led to an additional 4x increase in performance with an insignificant drop in accuracy.
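For readers new to quantization, the following sketch shows the basic idea of mapping FP32 values to int8 with a single shared scale. It is a conceptual illustration only and does not reflect how Intel Extension for PyTorch or NNCF implement quantization internally. The weight values and function names are made up for the example.

```python
# Conceptual illustration of symmetric per-tensor int8 quantization.
# Not the Intel Extension for PyTorch or NNCF implementation.


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.003, 0.9, -0.55]   # hypothetical FP32 weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(f"scale={scale:.4f}, max rounding error={max_error:.4f}")
```

Each value is stored in one byte instead of four, and the rounding error per value is bounded by half the scale. Whether that error is acceptable depends on the model, which is why accuracy is checked again after quantization.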

Discussion

All parties involved were pleasantly surprised with the benchmarking results. This work showcases scalable and cost-effective medical model deployment options for AI developers:

"The Intel team’s optimization of fMRI and PadChest models using Intel Extension for PyTorch and OpenVINO toolkit powered by oneAPI, leading to ~6x increase in performance tailored for medical imaging, showcases best practices that do more than just accelerate running times. These enhancements not only cater to the unique demands of medical image processing but also offer the potential to reduce overall costs and bolster scalability."

— Santamaria-Pang Alberto, principal applied data scientist, Health AI, Microsoft*

At Intel, we are excited by the results so far. As the next steps, we plan to:

- Optimize and benchmark several other medical use cases such as multi-organ segmentation on an abdominal CT.

- Prepare and run PadChest and fMRI Azure machine learning deployment on a Microsoft Nuance* Precision Imaging Network (PIN) platform.

- Publish the details of this work in a series of blog posts.

Conclusion

Azure and Intel are working together to accelerate PadChest and fMRI model performance on CPU-based Azure machine learning instances by taking advantage of Intel's optimization tools, specifically Intel® Extension for PyTorch* and OpenVINO™ toolkit. The results show that with Intel optimizations, AI developers have the option to deploy their models on CPU-based Azure machine learning instances that give their end users the desired performance and response times.

Learn More

Intel AI and Machine Learning Ecosystem Developer Resources

AI and Machine Learning Portfolio

System Configurations

Test Dates:

- fMRI (OpenVINO toolkit): August 18, 2023

- fMRI (Intel Extension for PyTorch): June 29, 2023

- int8: fMRI (OpenVINO toolkit): August 18, 2023

Hardware:

- CPU: Intel® Xeon® Gold 6246R CPU at 3.40 GHz

- CPU cores: 4, 12, or 24 at 2 threads per core; 2, 6, or 12 cores per socket

- Operating system: Ubuntu* 20.04.6 LTS (Focal Fossa)

- Kernel: 5.15.0-1038-azure

- Memory/Node: 84 GB, 252 GB, 512 GB

Software:

| | PyTorch (Stock) | Intel Extension for PyTorch | OpenVINO Toolkit |
| --- | --- | --- | --- |
| Framework or Toolkit | 1.13.1+cpu | 1.13.100 | 2023.0.0 |
| Topology or Machine Learning Algorithm | PadChest (DenseNet), fMRI (ResNet 50) | PadChest (DenseNet), fMRI (ResNet 50) | PadChest (DenseNet), fMRI (ResNet 50) |
| Libraries | Intel® oneAPI Deep Neural Network Library (oneDNN) | oneDNN | oneDNN |
| Dataset (size, shape) | PadChest: (1, 1, 224, 224) | PadChest: (1, 1, 224, 224) | PadChest: (1, 1, 224, 224) |
| Precision | FP32 | FP32 | FP32 |

Get the Software

Intel® Distribution of OpenVINO™ Toolkit

Optimize models trained using popular frameworks like TensorFlow*, PyTorch*, and Caffe*, and deploy across a mix of Intel® hardware and environments.

Intel® oneAPI Deep Neural Network Library (oneDNN)

Improve deep learning (DL) application and framework performance on CPUs and GPUs with highly optimized implementations of DL building blocks.

PyTorch* Optimizations from Intel

Intel is one of the largest contributors to PyTorch*, providing regular upstream optimizations to the PyTorch deep learning framework that provide superior performance on Intel® architectures. The Intel® AI Analytics Toolkit (AI Kit) includes the latest binary version of PyTorch tested to work with the rest of the kit, along with Intel® Extension for PyTorch*, which adds the newest Intel optimizations and usability features.