Intel® oneAPI Data Analytics Library Developer Guide and Reference

Cross-entropy Loss

Cross-entropy loss is an objective function minimized in the process of logistic regression training when a dependent variable takes more than two values.

Details

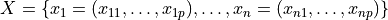

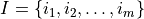

Given n feature vectors  of np-dimensional feature vectors, a vector of class labels

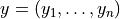

of np-dimensional feature vectors, a vector of class labels  , where

, where  describes the class, to which the feature vector

describes the class, to which the feature vector  belongs, where T is the number of classes, optimization solver optimizes cross-entropy loss objective function by argument

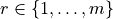

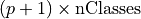

belongs, where T is the number of classes, optimization solver optimizes cross-entropy loss objective function by argument  , it is a matrix of size

, it is a matrix of size  . The cross entropy loss objective function

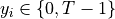

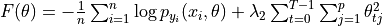

. The cross entropy loss objective function  has the following format

has the following format  where

where

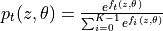

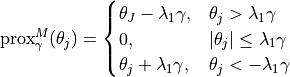

, with

, with  and

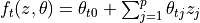

and  ,

,  ,

,

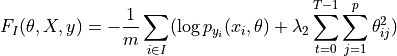

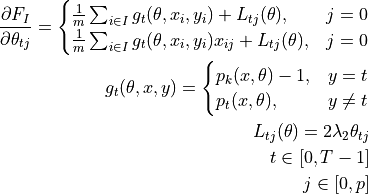

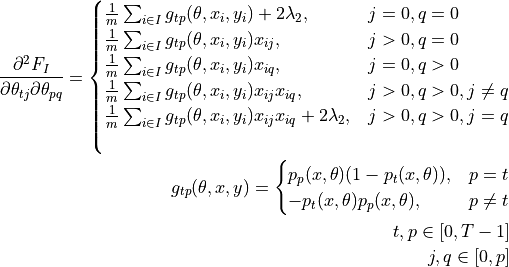
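To make the definition concrete, the following pure-Python sketch evaluates $K(\theta, X, y)$ over all $n$ terms. It is a minimal illustration under stated assumptions, not the oneDAL implementation. $\theta$ is assumed to be stored as a list of $T$ rows $[\theta_{t0}, \theta_{t1}, \ldots, \theta_{tp}]$ and class labels are the integers $0, \ldots, T-1$. The function names `class_probabilities` and `cross_entropy_value` are hypothetical. The largest score is subtracted before exponentiation, a common stability trick that leaves $p_t$ unchanged.

```python
import math


def class_probabilities(theta, x):
    """Softmax probabilities p_t(x, theta) for t = 0, ..., T-1.

    theta: list of T rows, each [theta_t0, theta_t1, ..., theta_tp].
    x: feature vector [x_1, ..., x_p].
    """
    # f_t(x, theta) = theta_t0 + sum_j theta_tj * x_j
    scores = [row[0] + sum(w * xj for w, xj in zip(row[1:], x)) for row in theta]
    # Subtracting the largest score avoids overflow in exp() and does not
    # change the normalized probabilities.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_value(theta, X, y, lam1=0.0, lam2=0.0):
    """K(theta, X, y) over all n terms: mean negative log-probability of the
    true class plus L1 and L2 penalties on theta_tj for j >= 1 (the
    intercepts theta_t0 are not penalized)."""
    n = len(X)
    nll = -sum(math.log(class_probabilities(theta, xi)[yi]) for xi, yi in zip(X, y)) / n
    l1 = sum(abs(w) for row in theta for w in row[1:])
    l2 = sum(w * w for row in theta for w in row[1:])
    return nll + lam1 * l1 + lam2 * l2


# Example: T = 3 classes, p = 1 feature, all parameters zero.
theta0 = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
print(cross_entropy_value(theta0, [[1.0], [2.0]], [0, 2]))  # about 1.0986, i.e. log(3)
```

With all entries of $\theta$ equal to zero, every class gets probability $1/T$. The unregularized value is therefore $\log T$.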

For a given set of indices  ,

,  ,

,  , the value and the gradient of the sum of functions in the argument X respectively have the format:

, the value and the gradient of the sum of functions in the argument X respectively have the format:

where

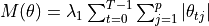

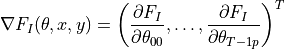

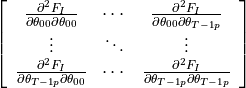

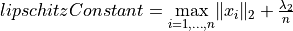
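The gradient formula can be checked numerically. The sketch below is hypothetical pure Python, not the library's code. It computes $\partial K / \partial \theta_{tj}$ over a batch of zero-based indices and falls back to all terms when no batch is given. It assumes the L1 term is excluded from the gradient because that term is not differentiable at zero. The helper `smooth_batch_value` evaluates the matching smooth part of $K$, so the gradient can be compared against central finite differences. The names and the row layout $[\theta_{t0}, \ldots, \theta_{tp}]$ are illustrative assumptions.

```python
import math


def class_probabilities(theta, x):
    """Softmax probabilities p_t(x, theta); theta rows are [theta_t0, ..., theta_tp]."""
    scores = [row[0] + sum(w * xj for w, xj in zip(row[1:], x)) for row in theta]
    top = max(scores)  # shift for numerical stability; probabilities are unchanged
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def smooth_batch_value(theta, X, y, lam2=0.0, indices=None):
    """Batch value of K without the L1 term (used here only to check the gradient)."""
    idx = list(range(len(X))) if indices is None else list(indices)
    nll = -sum(math.log(class_probabilities(theta, X[i])[y[i]]) for i in idx) / len(idx)
    return nll + lam2 * sum(w * w for row in theta for w in row[1:])


def cross_entropy_gradient(theta, X, y, lam2=0.0, indices=None):
    """dK/dtheta_tj over the batch I as a T x (p + 1) nested list.

    dK/dtheta_tj = (1/m) * sum_{i in I} g_t * x_ij + L_tj, where x_i0 = 1,
    g_t = p_t(x_i, theta) - [y_i == t], L_tj = 2 * lam2 * theta_tj for j >= 1
    and 0 for j = 0. The L1 term is left out (not differentiable at zero).
    """
    # No batch given: use every term.
    idx = list(range(len(X))) if indices is None else list(indices)
    m = len(idx)
    T, width = len(theta), len(theta[0])
    grad = [[0.0] * width for _ in range(T)]
    for i in idx:
        xi = [1.0] + list(X[i])  # x_i0 = 1 multiplies the intercept theta_t0
        p = class_probabilities(theta, X[i])
        for t in range(T):
            g = p[t] - (1.0 if y[i] == t else 0.0)
            for j in range(width):
                grad[t][j] += g * xi[j] / m
    # L2 penalty contributes 2 * lam2 * theta_tj; intercepts are not penalized.
    for t in range(T):
        for j in range(1, width):
            grad[t][j] += 2.0 * lam2 * theta[t][j]
    return grad
```

Because $\sum_t g_t = \sum_t p_t - 1 = 0$, the unregularized gradient entries for a fixed $j$ sum to zero across classes. This is a quick sanity check on any implementation.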

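The Hessian matrix is a symmetric matrix of size $S \times S$, where $S = T \times (p + 1)$, with the entries

$$\frac{\partial^2 K}{\partial \theta_{tj}\, \partial \theta_{lq}} = \frac{1}{m} \sum_{i \in I} p_t(x_i, \theta) \left( \delta_{tl} - p_l(x_i, \theta) \right) x_{ij}\, x_{iq} + R_{tjlq}, \qquad R_{tjlq} = \begin{cases} 2 \lambda_2, & t = l,\ j = q \geq 1 \\ 0, & \text{otherwise} \end{cases}$$

where $t, l \in \{0, \ldots, T-1\}$, $j, q \in \{0, \ldots, p\}$, $x_{i0} = 1$, and $\delta_{tl}$ is the Kronecker delta ($\delta_{tl} = 1$ if $t = l$ and $0$ otherwise).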
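A sketch of the Hessian under the same hypothetical storage layout follows. It is illustrative pure Python, not the oneDAL implementation. Entry $(t, j), (l, q)$ is placed at row $t(p+1) + j$ and column $l(p+1) + q$ of an $S \times S$ nested list, an ordering chosen here for illustration only. The labels $y_i$ are not needed, because the second derivative of $-\log p_{y_i}$ does not depend on $y_i$. Since $\sum_t p_t(\delta_{tl} - p_l) = 0$, each column sums to zero across the class blocks when $\lambda_2 = 0$. The unregularized Hessian is therefore singular, which reflects that adding the same vector to every row of $\theta$ leaves all $p_t$ unchanged.

```python
import math


def class_probabilities(theta, x):
    """Softmax probabilities p_t(x, theta); theta rows are [theta_t0, ..., theta_tp]."""
    scores = [row[0] + sum(w * xj for w, xj in zip(row[1:], x)) for row in theta]
    top = max(scores)  # shift for numerical stability; probabilities are unchanged
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_hessian(theta, X, lam2=0.0, indices=None):
    """S x S Hessian of K over the batch I, with S = T * (p + 1).

    Row t * (p + 1) + j and column l * (p + 1) + q hold
    (1/m) * sum_{i in I} p_t (delta_tl - p_l) x_ij x_iq,
    plus 2 * lam2 when t == l and j == q >= 1. The L1 term is not included.
    """
    idx = list(range(len(X))) if indices is None else list(indices)
    m = len(idx)
    T, width = len(theta), len(theta[0])
    S = T * width
    H = [[0.0] * S for _ in range(S)]
    for i in idx:
        xi = [1.0] + list(X[i])  # x_i0 = 1 for the intercept
        p = class_probabilities(theta, X[i])
        for t in range(T):
            for l in range(T):
                c = p[t] * ((1.0 if t == l else 0.0) - p[l]) / m
                for j in range(width):
                    for q in range(width):
                        H[t * width + j][l * width + q] += c * xi[j] * xi[q]
    # L2 term: 2 * lam2 on the diagonal, except for the intercept entries.
    for t in range(T):
        for j in range(1, width):
            H[t * width + j][t * width + j] += 2.0 * lam2
    return H


# Two classes, one feature, theta = 0, a single sample x = 2: p = (0.5, 0.5).
print(cross_entropy_hessian([[0.0, 0.0], [0.0, 0.0]], [[2.0]])[1][1])  # 1.0
```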

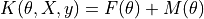

, where  is the learning rate


For more details, see [Hastie2009].

Computation

Algorithm Input

The cross entropy loss algorithm accepts the input described below. Pass the Input ID as a parameter to the methods that provide input for your algorithm. For more details, see Algorithms.

| Input ID | Input |
|---|---|
| argument | A numeric table with the input argument $\theta$ of the objective function. NOTE: The sizes of the argument, gradient, and hessian numeric tables do not depend on interceptFlag. When interceptFlag is set to false, the computation of the intercept values $\theta_{t0}$ is skipped, but the sizes of the tables should remain the same. |
| data | A numeric table of size $n \times p$ with the data $x_{ij}$. NOTE: This parameter can be an object of any class derived from NumericTable. |
| dependentVariables | A numeric table of size $n \times 1$ with the dependent variables $y_i$. NOTE: This parameter can be an object of any class derived from NumericTable, except for PackedTriangularMatrix, PackedSymmetricMatrix, and CSRNumericTable. |

Algorithm Parameters

The cross-entropy loss algorithm has the following parameters. Some of them are required only for specific values of the computation method’s parameter method:

| Parameter | Default value | Description |
|---|---|---|
| algorithmFPType | float | The floating-point type that the algorithm uses for intermediate computations. Can be float or double. |
| method | defaultDense | Performance-oriented computation method. |
| numberOfTerms | Not applicable | The number of terms in the objective function. |
| batchIndices | Not applicable | The numeric table of size $1 \times m$, where $m$ is the batch size, with a batch of indices to be used to compute the function results. If no indices are provided, the implementation uses all the terms in the computation. NOTE: This parameter can be an object of any class derived from NumericTable except PackedTriangularMatrix and PackedSymmetricMatrix. |
| resultsToCompute | gradient | The 64-bit integer flag that specifies which characteristics of the objective function to compute. Provide a single value to request one characteristic, or combine values with bitwise OR to request several characteristics. |
| interceptFlag | true | A flag that indicates a need to compute the intercept terms $\theta_{t0}$. |
| penaltyL1 | 0 | L1 regularization coefficient. |
| penaltyL2 | 0 | L2 regularization coefficient. |
| nClasses | Not applicable | The number of classes (different values of the dependent variable). |

Algorithm Output

For the output of the cross-entropy loss algorithm, see Output for objective functions.
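The resultsToCompute and batchIndices parameters follow two simple conventions. A bitmask combined with bitwise OR selects the characteristics to compute, and a missing batch means that all terms are used. The sketch below illustrates only these conventions. The bit values `VALUE`, `GRADIENT`, and `HESSIAN` and the function `plan_computation` are hypothetical; they are not oneDAL's constants or API. Indices are zero-based, as in Python, rather than the $1 \leq i_r \leq n$ convention of the formulas.

```python
# Hypothetical bit values for illustration only; they are NOT oneDAL's constants.
VALUE = 1 << 0
GRADIENT = 1 << 1
HESSIAN = 1 << 2


def plan_computation(n_terms, results_to_compute=GRADIENT, batch_indices=None):
    """Decode a results bitmask and resolve the terms to use (hypothetical helper).

    results_to_compute: characteristics combined with bitwise OR; the default
    mirrors the documented resultsToCompute default (gradient).
    batch_indices: zero-based term indices, or None to use all n_terms terms.
    """
    names = [name for name, bit in (("value", VALUE), ("gradient", GRADIENT), ("hessian", HESSIAN))
             if results_to_compute & bit]
    if batch_indices is None:
        indices = list(range(n_terms))
    else:
        indices = list(batch_indices)
        if any(not 0 <= i < n_terms for i in indices):
            raise ValueError("batch index out of range")
    return names, indices


# Request the value and the gradient for a batch of two terms out of five.
print(plan_computation(5, VALUE | GRADIENT, [4, 1]))  # (['value', 'gradient'], [4, 1])
```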