Accessing the Intel® Gaudi® Node in the Intel® Tiber™ AI Cloud

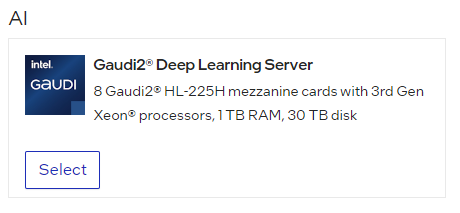

To access an Intel® Gaudi® node in the Intel® Tiber™ AI Cloud, go to the Intel® Tiber™ AI Cloud console, open the hardware instances, select the Intel® Gaudi® 2 platform for deep learning, and follow the steps to start and connect to the node.

The console provides an ssh command to log in to the node. It is advisable to add local port forwarding to that command so that a Jupyter Notebook running on the node can be opened from a local browser. For example, add the option -L 8888:localhost:8888 to the command (ssh -L 8888:localhost:8888 ...) to forward local port 8888 to port 8888 on the node.
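As a minimal illustration of the option syntax (-L local_port:destination_host:destination_port), the hypothetical Python helper below, which is not part of any Intel tool, adds the forward to an ssh command supplied as a list of words. The destination user@node.example is a placeholder for whatever the console prints.

```python
def add_local_forward(ssh_cmd, local_port=8888, remote_port=8888):
    """Return a copy of ssh_cmd with -L local_port:localhost:remote_port added.

    Hypothetical helper: ssh_cmd is the console's command split into words,
    e.g. ["ssh", "user@node.example"].
    """
    if not ssh_cmd or ssh_cmd[0] != "ssh":
        raise ValueError("expected a command starting with 'ssh'")
    # "localhost" in the forward spec is resolved on the node, so local
    # traffic on local_port reaches the Jupyter server running there.
    forward = f"{local_port}:localhost:{remote_port}"
    # Options go right after the program name, before the destination.
    return [ssh_cmd[0], "-L", forward, *ssh_cmd[1:]]


print(" ".join(add_local_forward(["ssh", "user@node.example"])))
```

With the defaults this prints ssh -L 8888:localhost:8888 user@node.example.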

Details about setting up Jupyter Notebooks on an Intel® Gaudi® Platform are available here.

Docker Setup

Once logged in to the node, run the Intel® Gaudi® Docker image (release 1.21.0 with PyTorch 2.6.0 in this example) with the docker run command below, which automatically downloads the image and starts a container from it:

docker run -itd --name Gaudi_Docker --runtime=habana -e HABANA_VISIBLE_DEVICES=all -e OMPI_MCA_btl_vader_single_copy_mechanism=none --cap-add=sys_nice --net=host --ipc=host vault.habana.ai/gaudi-docker/1.21.0/ubuntu22.04/habanalabs/pytorch-installer-2.6.0:latest
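For reference, the sketch below rebuilds the same docker run command as a Python argument list, with a comment on what each flag does. The function name gaudi_docker_run_args is hypothetical, and the flag descriptions summarize standard Docker and Open MPI behavior rather than Intel documentation. Passing the list to subprocess.run(args, check=True) would start the container; the sketch only prints it.

```python
import shlex

IMAGE = ("vault.habana.ai/gaudi-docker/1.21.0/ubuntu22.04/"
         "habanalabs/pytorch-installer-2.6.0:latest")


def gaudi_docker_run_args(image, name="Gaudi_Docker"):
    """Build the docker run command shown above as an argument list."""
    return [
        "docker", "run",
        "-itd",                  # keep STDIN open, allocate a TTY, run detached
        "--name", name,          # container name used later by docker exec
        "--runtime=habana",      # use the Habana container runtime
        "-e", "HABANA_VISIBLE_DEVICES=all",  # expose all Gaudi devices
        # Disable Open MPI's single-copy shared-memory mechanism,
        # which typically fails inside containers.
        "-e", "OMPI_MCA_btl_vader_single_copy_mechanism=none",
        "--cap-add=sys_nice",    # allow changing process scheduling priority
        "--net=host",            # share the host network stack
        "--ipc=host",            # share the host IPC namespace (shared memory)
        image,
    ]


print(shlex.join(gaudi_docker_run_args(IMAGE)))
```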

After the container starts, open a shell inside it with the following command:

docker exec -it Gaudi_Docker bash

More information on Gaudi Docker setup and validation can be found here.

Install Prerequisites

Once inside the Docker container, install the necessary libraries. Start in the home directory and install the DeepSpeed library from the HabanaAI GitHub repository:

cd ~

pip install git+https://github.com/HabanaAI/DeepSpeed.git@1.21.0

Next, install the Hugging Face Optimum for Intel® Gaudi® library (optimum-habana) and clone the Megatron-DeepSpeed GitHub examples, selecting the validated release of each:

pip install optimum-habana==1.16.0

git clone -b 1.19.0 https://github.com/HabanaAI/Megatron-DeepSpeed.git

Next, change to the Megatron-DeepSpeed directory and install the requirements for training:

cd Megatron-DeepSpeed

pip install -r megatron/core/requirements.txt

Set up the paths for Megatron-DeepSpeed:

export MEGATRON_DEEPSPEED_ROOT=`pwd`

export PYTHONPATH=$MEGATRON_DEEPSPEED_ROOT:$PYTHONPATH
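A quick way to confirm that both variables are set in the current shell is a small Python check. megatron_on_pythonpath is a hypothetical helper that assumes the variables were exported exactly as above:

```python
import os


def megatron_on_pythonpath(env=None):
    """Return True if MEGATRON_DEEPSPEED_ROOT is set and listed in PYTHONPATH."""
    env = os.environ if env is None else env
    root = env.get("MEGATRON_DEEPSPEED_ROOT")
    if not root:
        return False
    # An initially empty PYTHONPATH leaves a trailing separator; drop empty entries.
    entries = [p for p in env.get("PYTHONPATH", "").split(os.pathsep) if p]
    norm = os.path.normpath
    return norm(root) in {norm(p) for p in entries}


print("Megatron-DeepSpeed on PYTHONPATH:", megatron_on_pythonpath())
```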

Finally, set Python 3.10 as the Python version to use. The commands in the rest of this tutorial call Python through the $PYTHON environment variable, so define it as shown below. If Python 3.10 is not the default version, also replace any direct call to the python command in your model scripts with $PYTHON:

export PYTHON=/usr/bin/python3.10
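To confirm that $PYTHON points at Python 3.10, a short script can be run through the variable itself, for example as $PYTHON check_python.py, where check_python.py is a hypothetical file name:

```python
import sys


def is_python_310(version_info):
    """True if the (major, minor) part of version_info is (3, 10)."""
    return tuple(version_info[:2]) == (3, 10)


major, minor = sys.version_info[:2]
status = "OK" if is_python_310(sys.version_info) else "not 3.10"
# sys.executable is the interpreter actually running this script.
print(f"{sys.executable}: Python {major}.{minor} ({status})")
```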

Download Dataset

To download the datasets used for training Llama 2, follow the directions on the Megatron-DeepSpeed GitHub page. This tutorial uses a subset of the Oscar dataset, a multilingual corpus intended for pre-training language models and word representations.

It is possible to download the full Oscar dataset (500 GB+), or only a subset of it for a quick start. The following steps are based on the Oscar dataset repository.

First, clone the dataset repository:

cd ~

git clone https://github.com/bigscience-workshop/bigscience.git

cd bigscience/data/oscar

Next, edit the file oscar-to-jsonl.py. This example downloads the zh (Chinese) subset. In the language_subsets list, uncomment "unshuffled_deduplicated_zh" and comment out "unshuffled_deduplicated_en":

### Build/Load Datasets

# Once this part of the process completes it gets cached, so on subsequent runs it'll be much faster

language_subsets = (
    # "unshuffled_deduplicated_ar",
    # "unshuffled_deduplicated_sw",
    "unshuffled_deduplicated_zh",
    # "unshuffled_deduplicated_en",
    # "unshuffled_deduplicated_fr",
    # "unshuffled_deduplicated_pt",
    # "unshuffled_deduplicated_es",
)
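Instead of editing by hand, the language can also be switched with a small script. This is a sketch that assumes every entry in language_subsets sits on its own line in the form shown above; select_language and update_script are hypothetical helpers, and the regular expression would also change any other line in the file with the same shape:

```python
import re
from pathlib import Path

# One entry of the language_subsets tuple, commented out or not.
ENTRY = re.compile(r'^(\s*)(#\s*)?("unshuffled_deduplicated_(\w+)",)\s*$')


def select_language(source, lang):
    """Uncomment the entry for `lang` and comment out every other entry."""
    out = []
    for line in source.splitlines():
        m = ENTRY.match(line)
        if m:
            indent, _, entry, code = m.groups()
            line = f"{indent}{entry}" if code == lang else f"{indent}# {entry}"
        out.append(line)
    return "\n".join(out) + "\n"


def update_script(path="oscar-to-jsonl.py", lang="zh"):
    """Rewrite the script in place so that only `lang` is downloaded."""
    p = Path(path)
    p.write_text(select_language(p.read_text(encoding="utf-8"), lang), encoding="utf-8")
```

Calling update_script() from ~/bigscience/data/oscar would produce the edit described above.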

Run the Python script that downloads and preprocesses the data. Note the -s option, which downloads only a subset of the dataset for the purposes of this tutorial. This operation can take some time, depending on the download speed and the hardware used:

$PYTHON ./oscar-to-jsonl.py -s

When the above operation completes, the ~/bigscience/data/oscar/ directory will contain the following data files:

-rw-r--r-- 1 root root 66707628 Jul 26 00:38 oscar-0.jsonl
-rw-r--r-- 1 root root 63555928 Jul 26 00:38 oscar-1.jsonl
-rw-r--r-- 1 root root 59082488 Jul 26 00:38 oscar-2.jsonl
-rw-r--r-- 1 root root 63054515 Jul 26 00:38 oscar-3.jsonl
-rw-r--r-- 1 root root 59592060 Jul 26 00:38 oscar-4.jsonl
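Before tokenizing, it can help to sanity-check the files. The sketch below counts the records in each file and checks that each record has a text field. It assumes each line is one JSON object and that text is the key the Megatron preprocessing script reads by default, as the _text_document suffix of the tokenized file names later in this section suggests. summarize_jsonl and summarize_dir are hypothetical helpers:

```python
import json
from pathlib import Path


def summarize_jsonl(path, key="text"):
    """Return (records, records_missing_key) for a JSON Lines file."""
    records = 0
    missing = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # ignore blank lines
            records += 1
            if key not in json.loads(line):
                missing += 1
    return records, missing


def summarize_dir(directory=".", pattern="oscar-*.jsonl"):
    """Print a one-line summary for every matching file in `directory`."""
    for path in sorted(Path(directory).glob(pattern)):
        records, missing = summarize_jsonl(path)
        print(f"{path.name}: {records} records, {missing} without 'text'")
```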

The next step is to tokenize the dataset. A dataset can be tokenized in several ways; this example uses the GPT2BPETokenizer, which applies GPT-2's byte-pair encoding (BPE).

According to the directions on the Intel® Gaudi® Megatron-DeepSpeed GitHub page, the five jsonl files above can either be concatenated into a single large file and tokenized, or tokenized separately, after which the five tokenized files are merged. This tutorial processes the smaller files individually to avoid possible host out-of-memory issues.
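For the alternative approach, which this tutorial does not use, the files could be concatenated with a streaming copy so that no file is loaded fully into memory. concat_jsonl and the output name oscar-all.jsonl are hypothetical; the combined file would then be passed to a single preprocess_data.py run:

```python
import os
import shutil


def concat_jsonl(inputs, output):
    """Concatenate JSON Lines files into `output`, keeping one record per line."""
    with open(output, "wb") as out:
        for path in inputs:
            with open(path, "rb") as src:
                shutil.copyfileobj(src, out)  # streams in chunks
                # If the file did not end with a newline, add one so the
                # next file's first record starts on its own line.
                if src.tell() > 0:
                    src.seek(-1, os.SEEK_END)
                    if src.read(1) != b"\n":
                        out.write(b"\n")
```

For the five files above: concat_jsonl([f"oscar-{i}.jsonl" for i in range(5)], "oscar-all.jsonl").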

To tokenize the files, first download the GPT-2 vocabulary file (gpt2-vocab.json) and merges file (gpt2-merges.txt) used by the GPT2BPETokenizer:

wget https://s3.amazonaws.com/models.huggingface.co/bert/gpt2-vocab.json

wget https://s3.amazonaws.com/models.huggingface.co/bert/gpt2-merges.txt
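To make the role of the merges file concrete, the sketch below shows the core of byte-pair encoding: merges are ranked by their line order in the merges file, and the lowest-ranked adjacent pair is merged repeatedly until no listed pair remains. It is a simplification, not the Megatron implementation: it skips GPT-2's byte-to-unicode mapping and regex pre-tokenization, and it stops before gpt2-vocab.json would map each resulting token to an integer ID. The toy merges at the end are made up for illustration.

```python
def load_merge_ranks(lines):
    """Map each merge pair to its rank; earlier lines in the file win."""
    ranks = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line or line.startswith("#version"):
            continue  # skip the header and blank lines
        first, second = line.split()
        ranks[(first, second)] = len(ranks)
    return ranks


def bpe_encode(word, ranks):
    """Apply ranked merges to one pre-tokenized word."""
    symbols = list(word)
    while len(symbols) > 1:
        pairs = {(a, b) for a, b in zip(symbols, symbols[1:])}
        candidates = [p for p in pairs if p in ranks]
        if not candidates:
            break  # no listed merge applies any more
        best = min(candidates, key=ranks.__getitem__)
        merged, i = [], 0
        while i < len(symbols):
            # Merge every occurrence of the best-ranked pair.
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


toy = load_merge_ranks(["#version: 0.2", "l o", "lo w", "e r", "low er"])
print(bpe_encode("lower", toy))  # ['lower']
print(bpe_encode("newer", toy))  # ['n', 'e', 'w', 'er']
```

With the real file, the ranks would be loaded with load_merge_ranks(open("gpt2-merges.txt", encoding="utf-8")).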

Next, create and run the following shell script. It tokenizes the jsonl files one at a time and writes the tokenized files to the zh_tokenized directory. On the sixth line, adjust the --workers value to match the number of CPU cores available:

# tokenize individual jsonl files
# loop count will change based on number of files for a given dataset
mkdir zh_tokenized
for i in $(seq 0 4);
do
  $PYTHON $MEGATRON_DEEPSPEED_ROOT/tools/preprocess_data.py --input oscar-${i}.jsonl --output-prefix zh_tokenized/tokenized${i} --tokenizer-type GPT2BPETokenizer --vocab-file gpt2-vocab.json --merge-file gpt2-merges.txt --append-eod --workers 16
done
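The same loop can be expressed in Python, which makes it easy to derive the worker count from the machine instead of hard-coding 16. This is a dry-run sketch: tokenize_commands is a hypothetical helper that only builds and prints the commands, using the same preprocess_data.py arguments as the script above. Running each list with subprocess.run(cmd, check=True) after creating zh_tokenized would perform the tokenization. Using os.cpu_count() for --workers is an assumption and may need lowering if host memory is tight.

```python
import os
import shlex


def tokenize_commands(num_files, python="python3", root=".", workers=None,
                      out_dir="zh_tokenized"):
    """Build one preprocess_data.py command per oscar-N.jsonl file."""
    workers = workers or os.cpu_count() or 1  # default: one worker per core
    script = os.path.join(root, "tools", "preprocess_data.py")
    cmds = []
    for i in range(num_files):
        cmds.append([
            python, script,
            "--input", f"oscar-{i}.jsonl",
            "--output-prefix", f"{out_dir}/tokenized{i}",
            "--tokenizer-type", "GPT2BPETokenizer",
            "--vocab-file", "gpt2-vocab.json",
            "--merge-file", "gpt2-merges.txt",
            "--append-eod",
            "--workers", str(workers),
        ])
    return cmds


for cmd in tokenize_commands(
        5,
        python=os.environ.get("PYTHON", "python3"),
        root=os.environ.get("MEGATRON_DEEPSPEED_ROOT", ".")):
    print(shlex.join(cmd))
```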

After the above operation is completed, the “zh_tokenized” directory will contain the following files:

-rw-r--r-- 1 root root 93115006 Jul 26 00:47 tokenized0_text_document.bin
-rw-r--r-- 1 root root 166882 Jul 26 00:47 tokenized0_text_document.idx
-rw-r--r-- 1 root root 88055238 Jul 26 00:47 tokenized1_text_document.bin
-rw-r--r-- 1 root root 166882 Jul 26 00:47 tokenized1_text_document.idx
-rw-r--r-- 1 root root 82539576 Jul 26 00:47 tokenized2_text_document.bin
-rw-r--r-- 1 root root 166882 Jul 26 00:47 tokenized2_text_document.idx
-rw-r--r-- 1 root root 87806904 Jul 26 00:47 tokenized3_text_document.bin
-rw-r--r-- 1 root root 166882 Jul 26 00:47 tokenized3_text_document.idx
-rw-r--r-- 1 root root 82680922 Jul 26 00:48 tokenized4_text_document.bin
-rw-r--r-- 1 root root 166862 Jul 26 00:48 tokenized4_text_document.idx
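A short check can confirm that every input produced a non-empty .bin/.idx pair before merging. missing_token_files is a hypothetical helper that assumes the tokenizedN_text_document naming shown above:

```python
from pathlib import Path


def missing_token_files(directory, count, stem="tokenized{}_text_document"):
    """Return names of expected .bin/.idx files that are missing or empty."""
    problems = []
    for i in range(count):
        for ext in (".bin", ".idx"):
            path = Path(directory) / f"{stem.format(i)}{ext}"
            if not path.is_file() or path.stat().st_size == 0:
                problems.append(path.name)
    return problems
```

For this tutorial, missing_token_files("zh_tokenized", 5) returning an empty list means all ten files are present and non-empty.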

To complete the tokenization step, merge the tokenized files generated above into a single dataset by running the following commands:

# merge tokenized files
mkdir zh_tokenized_merged
$PYTHON $MEGATRON_DEEPSPEED_ROOT/tools/merge_datasets.py --input zh_tokenized --output-prefix zh_tokenized_merged/tokenized_text_document

This creates the zh_tokenized_merged directory, which contains the following merged files:

ls -lt zh_tokenized_merged
-rw-r--r-- 1 root root 834222 Jul 26 00:57 tokenized_text_document.idx
-rw-r--r-- 1 root root 434197646 Jul 26 00:57 tokenized_text_document.bin

To make pretraining easier, copy the gpt2-merges.txt and gpt2-vocab.json files into the zh_tokenized_merged directory. Pretraining with the GPT2BPETokenizer requires these files to be in the same directory as the data:

cp gpt2-* zh_tokenized_merged
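The copy step and a final check of the data directory can be sketched in Python as well. prepare_data_dir is a hypothetical helper, and the required file names are the four named in this section:

```python
import shutil
from pathlib import Path

REQUIRED = ("gpt2-vocab.json", "gpt2-merges.txt",
            "tokenized_text_document.bin", "tokenized_text_document.idx")


def prepare_data_dir(src_dir, data_dir):
    """Copy gpt2-* files into data_dir and return required files still missing."""
    data_dir = Path(data_dir)
    for path in Path(src_dir).glob("gpt2-*"):
        shutil.copy(path, data_dir / path.name)  # same as: cp gpt2-* data_dir
    return [name for name in REQUIRED if not (data_dir / name).is_file()]
```

Run from ~/bigscience/data/oscar, prepare_data_dir(".", "zh_tokenized_merged") should return an empty list once the merge has finished.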

This completes the dataset downloading and preprocessing steps.