As cloud computing becomes increasingly mainstream, it’s important to find new ways to address three of the most pressing challenges associated with the cloud: cost, performance and security. And while it may come as a surprise, some of the most useful innovations are being developed beneath the surface in the lower-layer architecture, near the silicon.

Innovation in the Lower Layer

At KubeCon + CloudNativeCon North America 2022, developers and innovators from the global open source community gathered to discuss these challenges and innovations. As usual, the community does a lot of the heavy lifting down in the lower layer to enable the kind of seamless, secure cloud computing experiences that end users expect. It’s hard work, with a lot of hidden complexities. But it’s possible to reduce that complexity with a few well-placed innovations.

During my first year at Intel, I quickly came to realize how important lower-layer architecture is to the cloud, including the hardware, drivers, and OS kernel. I’ve had the opportunity to dig in and see how a platform-aware cloud orchestrator function (one that’s aware of the resources available on a platform) can bring the acceleration, isolation, and security of the silicon layer to upper-layer cloud applications.

This kind of intelligence, built into the cloud orchestrator, boosts the effectiveness and efficiency of open source applications. It's all about taking cost, performance and security of the cloud to the next level, while making life as easy as possible for the open source developers making it happen.

Taking Cost, Performance and Security to the Next Level

One great example of improving costs with this kind of automated intelligence is cloud scheduling. When you look at current cloud offerings, scheduling a workload onto a node involves making sure that the node has enough CPU and memory to run the workload. But CPU and memory on a node are grouped in different non-uniform memory access (NUMA) zones—sort of like ZIP codes for the silicon layer—and communication between different NUMA zones is much slower than communication within the same NUMA zone.

So, you can greatly enhance your workload performance if the cloud scheduler takes the lower-layer NUMA topology into consideration. Resource utilization rates also improve, which can result in lower costs for end users.
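To make the idea concrete, here is a minimal sketch of what a NUMA-aware placement decision could look like. It is not the scheduler discussed at KubeCon or any Kubernetes API: the `NumaZone`, `Node`, and `pick_node` names are hypothetical, and the policy (prefer a node where the whole workload fits inside a single zone, otherwise fall back to a node that only fits when resources are summed across zones) is just one reasonable assumption.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class NumaZone:
    """Free resources in one NUMA zone (hypothetical model)."""
    zone_id: int
    free_cpus: int
    free_memory_gib: float


@dataclass
class Node:
    """A cluster node described by its NUMA zones (hypothetical model)."""
    name: str
    zones: List[NumaZone]


def fits_node(node: Node, cpus: int, memory_gib: float) -> bool:
    """Topology-unaware check: compare against totals summed over all zones."""
    total_cpus = sum(z.free_cpus for z in node.zones)
    total_mem = sum(z.free_memory_gib for z in node.zones)
    return total_cpus >= cpus and total_mem >= memory_gib


def best_single_zone(node: Node, cpus: int, memory_gib: float) -> Optional[NumaZone]:
    """Return the tightest-fitting zone that can hold the whole workload."""
    candidates = [
        z for z in node.zones
        if z.free_cpus >= cpus and z.free_memory_gib >= memory_gib
    ]
    if not candidates:
        return None
    # Tightest fit leaves larger zones free for larger workloads.
    return min(candidates, key=lambda z: (z.free_cpus - cpus,
                                          z.free_memory_gib - memory_gib))


def pick_node(nodes: List[Node], cpus: int,
              memory_gib: float) -> Optional[Tuple[str, Optional[int]]]:
    """Prefer single-zone placement; fall back to a cross-zone fit.

    Returns (node name, zone id), where zone id is None for a cross-zone
    placement, or None if no node can run the workload at all.
    """
    fallback = None
    for node in nodes:
        zone = best_single_zone(node, cpus, memory_gib)
        if zone is not None:
            return node.name, zone.zone_id
        if fallback is None and fits_node(node, cpus, memory_gib):
            fallback = (node.name, None)
    return fallback


nodes = [
    Node("node-a", [NumaZone(0, 2, 8.0), NumaZone(1, 2, 8.0)]),
    Node("node-b", [NumaZone(0, 4, 16.0), NumaZone(1, 0, 0.0)]),
]
placement = pick_node(nodes, cpus=4, memory_gib=8.0)
```

In this example both nodes have four free CPUs in total, so a topology-unaware scheduler could pick either one. The sketch chooses `node-b`, because only there can all four CPUs come from the same NUMA zone.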

When it comes to improving performance, another area to look at is disk and network input/output (I/O). Many workloads require guaranteed disk I/O and network I/O resources in addition to CPU and memory, especially those run by customers like Pinterest* and Twitter*. (It’s like needing to know if there’s still room on a plane before you book your ticket.) To support this, the lower-layer I/O isolation technologies need to be enabled, and a software stack needs to be built from the lower-layer hardware and kernel all the way to the upper-layer workload specification.
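The "room on the plane" check can be sketched as a simple admission test. This is an illustration only, under the assumption that each node advertises a fixed disk and network bandwidth budget and each workload declares the bandwidth it needs guaranteed. The `IoBandwidth`, `IoNode`, and `admit` names are hypothetical, and actual enforcement would still depend on the hardware and kernel isolation features described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IoBandwidth:
    """Disk and network bandwidth in MB/s (hypothetical units)."""
    disk_mbps: int
    network_mbps: int


@dataclass
class IoNode:
    """A node with a fixed I/O budget and a list of granted reservations."""
    name: str
    capacity: IoBandwidth
    reserved: List[IoBandwidth] = field(default_factory=list)


def remaining(node: IoNode) -> IoBandwidth:
    """Bandwidth still unreserved on the node."""
    used_disk = sum(r.disk_mbps for r in node.reserved)
    used_net = sum(r.network_mbps for r in node.reserved)
    return IoBandwidth(node.capacity.disk_mbps - used_disk,
                       node.capacity.network_mbps - used_net)


def admit(node: IoNode, request: IoBandwidth) -> bool:
    """Reserve bandwidth only if both disk and network guarantees fit."""
    free = remaining(node)
    if request.disk_mbps > free.disk_mbps or request.network_mbps > free.network_mbps:
        return False  # Admitting would break an existing guarantee.
    node.reserved.append(request)
    return True


node = IoNode("node-a", IoBandwidth(disk_mbps=1000, network_mbps=10000))
first = admit(node, IoBandwidth(600, 4000))   # fits
second = admit(node, IoBandwidth(500, 1000))  # only 400 MB/s of disk left
third = admit(node, IoBandwidth(400, 6000))   # uses exactly what remains
```

The second request is rejected even though plenty of network bandwidth is free, because a guarantee has to hold for every resource the workload asks for.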

Lastly, developers and cloud computing experts know how important tenant isolation and security are in the cloud. Today’s cloud supports data security when data is at rest and in transit, but not when data is in use. And considering how often data is in use, this is an important gap to fill.

To solve this problem, we need encryption to take place at the hardware layer, meaning we need confidential computing technologies like Intel® Software Guard Extensions (SGX) and Intel® Trust Domain Extensions (TDX) to enable a trusted execution environment around data while it’s being processed.

Platform-aware scheduling, I/O isolation, and confidential computing are just a few of the many areas we’ve been tackling, in close collaboration with the open source community, to take the cost, performance and security of the cloud to the next level.

From Silicon to Serverless—Ongoing Innovation

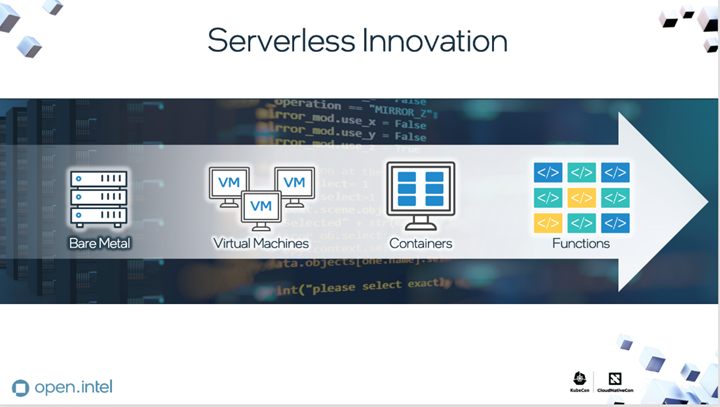

Of course, there’s still a lot more work to do to help drive innovation. The industry is moving from bare metal to serverless. Serverless enables developer velocity by offloading the instantiation and management of application runtime from the developer to the cloud platform.

While serverless offers many benefits, it also brings challenges. Two of the major ones are cold start latency (how long it takes for an app to actually start working after it’s launched) and autoscaling in response to burst traffic (to make sure computing performance doesn’t suffer when things get busy). That’s why we’ve been working on a new snapshot-based way of creating an application container that significantly reduces cold start latency. We’ve also developed a new approach that greatly accelerates autoscaling speed.

All this is just a part of Intel’s software-first approach and our commitment to the open source community. It’s our goal to make sure everyone else in the open source community benefits as much from our work as we do from theirs.

About the author

Cathy Zhang is a Senior Principal Engineer who leads Intel’s company-wide contributions to the cloud native and microservice ecosystem. She has a Ph.D. in computer engineering and has worked in this field for more than 10 years, with 18 U.S. patents and another 11 pending. Over the years, she has co-authored several Cloud Native Computing Foundation* (CNCF) whitepapers and specifications and has served as a track chair for several KubeCon and OpenStack* summits.

Photo by Aron Van de Pol on Unsplash