Developers still working with the Intel® Neural Compute Stick 2 (Intel® NCS2) are advised to transition to the Intel® Edge AI box for video analytics, which offers a range of hardware options to meet their needs.

Note: Not all of these options include the Intel® Movidius™ Myriad™ X VPU.

- The last order date for the Intel NCS2 was February 28, 2022.

- Technical support will continue until June 30, 2023.

- Warranty support will continue until June 30, 2024.

The Intel® Distribution of OpenVINO™ toolkit will continue to support the Intel NCS2 through version 2022.3. After that release, Intel NCS2 support will be maintained on the 2022.3.x Long-Term Support (LTS) release track.

For more information, see the Product Change Notification (PCN118636) posted in the Intel® Quality Document Management System (Intel® QDMS).

A Plug-and-Play Development Kit for AI Inferencing

- Build and scale with exceptional performance per watt per dollar on the Intel® Movidius™ Myriad™ X Vision Processing Unit (VPU)

- Start developing quickly on Windows® 10, Ubuntu*, or macOS*

- Develop with common frameworks and out-of-the-box sample applications

- Run inference without depending on cloud compute

- Prototype with low-cost edge devices, such as Raspberry Pi* 3 and other Arm* host devices

Intel® Distribution of OpenVINO™ Toolkit

Intel NCS2

Develop, fine-tune, and deploy convolutional neural networks (CNNs) for low-power applications that require real-time inferencing.

- Deep learning inference at the edge

- Pretrained models on Open Model Zoo

- A library of functions and preoptimized kernels for faster time to market

- Support for heterogeneous execution across computer vision hardware (CPU, GPU, VPU, and FPGA) using a common API

- Raspberry Pi hardware support

New Features

Explore the latest features and release notes for the toolkit.

- Includes Deep Learning Workbench

- Supports multi-device inference for load balancing

- Offers binary distribution through package managers and Docker* containers

- Provides new Inference Engine-centric APIs

- Supports serialized FP16 Intermediate Representation

- Explore use cases for machine translation, natural language processing, and more

Intel® Developer Cloud for the Edge

Test virtually using Intel® Developer Cloud for the Edge. Quickly prototype and develop AI applications in the cloud using the latest Intel® hardware and software tools.

Technical Specifications

Hardware

- Processor: Intel Movidius Myriad X Vision Processing Unit (VPU)

- Supported frameworks: TensorFlow*, Caffe*, Apache MXNet*, Open Neural Network Exchange (ONNX*), PyTorch*, and PaddlePaddle* via an ONNX conversion

- Connectivity: USB 3.0 Type-A

- Dimensions: 2.85 in. x 1.06 in. x 0.55 in. (72.5 mm x 27 mm x 14 mm)

- Operating temperature: 0 °C to 40 °C

Software

- Intel Distribution of OpenVINO toolkit

- Supported operating systems:
  - Ubuntu 16.04.3 LTS (64-bit)
  - CentOS* 7.4 (64-bit)
  - Windows 10 (64-bit)
  - macOS 10.14.4 (or later)
  - Raspbian (target only)
  - Other (via the open source distribution of OpenVINO™ toolkit)

Intel® Movidius™ Myriad™ X Vision Processing Unit (VPU)

The Intel® Movidius™ Myriad™ X VPU includes 16 programmable processing cores (called SHAVE cores) and a dedicated deep neural network hardware accelerator. Together, they deliver high-performance vision and AI inference at low power.

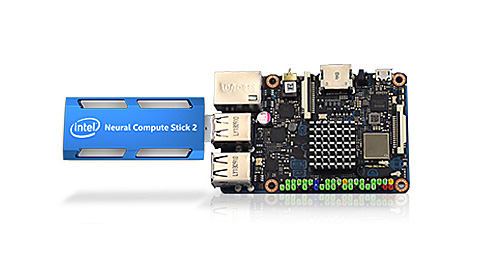

Plug-and-Play AI

Single-Board Computers

Prototype with low-cost edge devices, such as Raspberry Pi* and other Arm* host devices.

Depth-Sensing Cameras

Combine depth sensing with plug-and-play AI inferencing at the edge using an Intel® RealSense™ Depth Camera D400 series.

Take AI from Prototype to Production

Intel® AI: In Production brings together resources and partner offerings to help you deliver AI solutions at the edge.

Documentation

Optimize Networks for the Intel NCS2

Intel Distribution of OpenVINO Toolkit Preview Support for Intel NCS2 on Raspbian

Intel NCS2 and Open Source OpenVINO Toolkit

Warranty Support & Regulatory Information

Consult Intel Neural Compute Stick 2 support for initial troubleshooting steps. If the issue persists, follow these instructions to obtain warranty support:

- If you bought the product from a distributor less than 30 days before your warranty support request, contact the distributor where you made the purchase.

- If you bought the product directly from Intel, or from a distributor more than 30 days before your warranty support request, contact Intel Warranty Support.

Note: You will need to create a support account if you do not have one.