Enhance Your AI Skills Now!

Scale AI solutions across your enterprise to boost productivity and stay ahead in the market. Don't miss out on our resource collection at the end of the article.

Most developers cut their teeth through academia, tinkering, or early-stage entrepreneurship. When you accept a professional role or take a product from proof of concept to production, you are immediately confronted with the scale and difficulty of deploying a solution across an enterprise. Implementing AI at enterprise scale is like upgrading from a bike to a high-performance sports car: exciting, powerful, and in need of careful handling. In this guide, we’ll dive into how you, as a developer, can effectively implement AI in large organizations.

Understanding Enterprise AI

What is Enterprise AI?

Enterprise AI is the large-scale application of AI across various business functions to improve performance, drive growth, and create competitive advantages. Unlike small-scale or experimental AI projects, enterprise AI requires robust infrastructure, strategic alignment, and cross-functional collaboration. Implementing AI at scale can lead to significant benefits, including:

- Improved decision-making through predictive analytics

- Enhanced customer experiences with personalized services

- Operational efficiency and cost savings

- Innovation in products and services

Setting the Foundation

Assessing Business Needs and Opportunities

The first step in implementing AI is identifying the business needs and opportunities where AI can create the most impact. In contrast to academic and enthusiast projects, implementing solutions in the enterprise involves defining clear goals and estimating the return on investment of the proposed solution. Outside of research labs, enterprise solutions need to be tied directly or indirectly to revenue generation for the company. To determine this, you might embark on an analysis that involves the following:

- Identifying areas of the business with a need for improvement where AI would create a unique value add.

- Conducting a business impact analysis to determine your solution's revenue or cost reduction impacts.

- Defining clear goals from ideation to final delivery into production, so business leaders can determine whether the solution will have a timely market impact.

- Securing budget from your finance, product, and/or R&D organizations to fund your solution.
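A first-pass business impact analysis like the one described above can be sketched in a few lines. The field names, the dollar figures, and the three-year horizon below are illustrative assumptions, not values from any real project, and a finance team would likely use a more detailed model (discounting, risk adjustment, and so on).

```python
from dataclasses import dataclass


@dataclass
class BusinessCase:
    """Hypothetical inputs for a first-pass business impact estimate."""
    annual_revenue_gain: float  # expected new revenue per year
    annual_cost_savings: float  # expected cost reduction per year
    build_cost: float           # one-time cost to build and launch
    annual_run_cost: float      # yearly infrastructure and maintenance cost


def simple_roi(case: BusinessCase, years: int) -> float:
    """Return ROI over the horizon as (total benefit - total cost) / total cost."""
    total_benefit = (case.annual_revenue_gain + case.annual_cost_savings) * years
    total_cost = case.build_cost + case.annual_run_cost * years
    return (total_benefit - total_cost) / total_cost


def payback_years(case: BusinessCase) -> float:
    """Return the years needed for net yearly benefit to cover the build cost."""
    net_per_year = (case.annual_revenue_gain + case.annual_cost_savings
                    - case.annual_run_cost)
    if net_per_year <= 0:
        return float("inf")  # the project never pays for itself
    return case.build_cost / net_per_year


if __name__ == "__main__":
    # Illustrative numbers only.
    case = BusinessCase(
        annual_revenue_gain=200_000,
        annual_cost_savings=100_000,
        build_cost=300_000,
        annual_run_cost=100_000,
    )
    print(f"3-year ROI: {simple_roi(case, years=3):.0%}")
    print(f"Payback period: {payback_years(case):.1f} years")
```

Even a rough estimate like this gives business leaders a concrete number to challenge, which tends to be more useful in a budget conversation than a qualitative claim.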

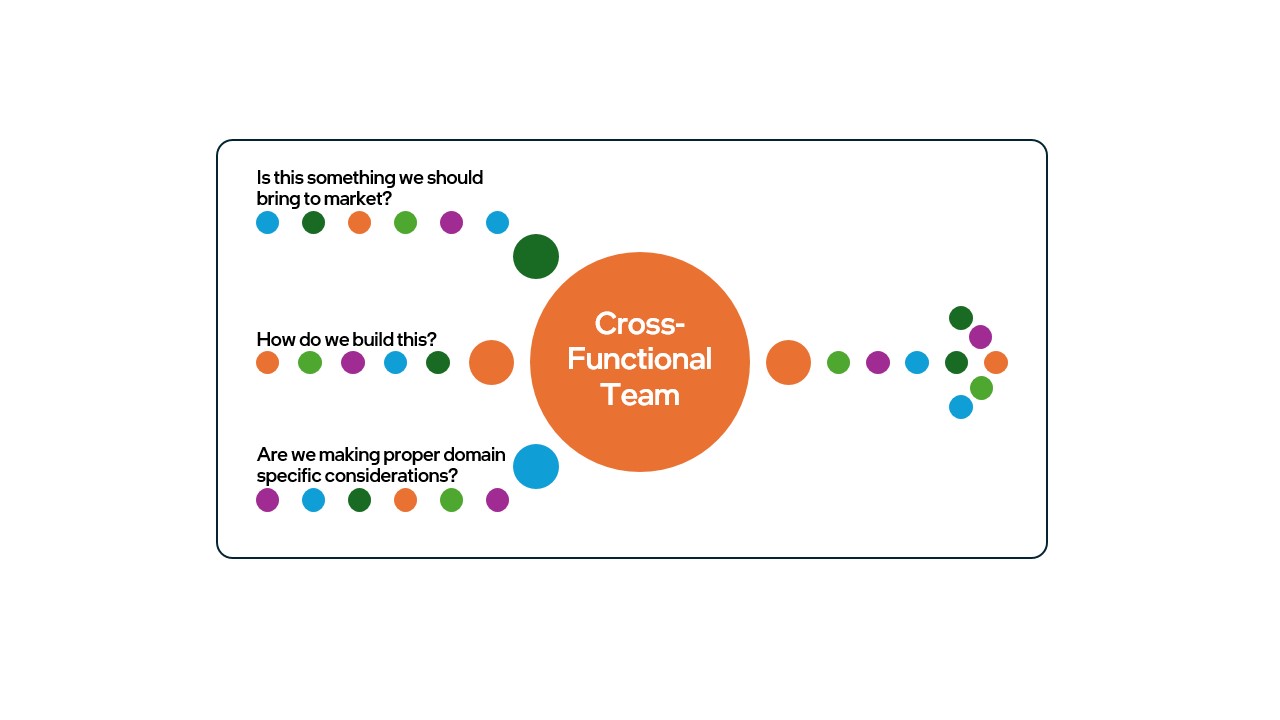

Building a Cross-Functional AI Team and Getting Alignment

Successful AI implementation requires a diverse team that includes data scientists, engineers, domain experts, and business leaders.

You should ask your enterprise AI team some key questions to determine feasibility and proper alignment before embarking on the engineering journey:

- "Is this something we should bring to market?" – This is the essential first question to ask product and business leaders when evaluating a new feature, tool, or application. It helps determine if there is genuine demand from end-users, avoiding wasted resources on initiatives that don’t meet market needs. As Eric Ries, author of The Lean Startup, famously said: “What if we found ourselves building something that nobody wanted? In that case, what did it matter if we did it on time and on budget?”

- "How do we build this?" – This is your opportunity as an engineer to contribute by defining the architecture, technology stack, and implementation strategy. It’s also a critical step in assessing the feasibility of the project and determining a realistic timeline and budget that align with the business objectives.

- "Are we considering domain-specific requirements?" – Consulting domain experts is crucial to ensure your solution aligns with the unique aspects of the industry, discipline, genre, or culture it serves. Domain expertise adds non-generic value, ensuring that the solution is meaningful and relevant to end-users, making the work genuinely impactful.

Choosing the Right AI Technologies

Once your team has alignment on business goals, the next critical step is selecting the appropriate AI technologies to implement. The landscape of AI tools and frameworks is vast, and choosing the right ones can make or break the success of your AI initiative. Here are some considerations to help guide your decision:

- Frameworks and Libraries: Select frameworks that best fit your team’s expertise and the problem you are trying to solve. For deep learning tasks, PyTorch and TensorFlow are widely used in the industry. For more traditional machine learning, Scikit-learn is popular, while libraries like XGBoost and LightGBM are great for structured data tasks.

- Cloud vs On-Premises Solutions: Many enterprises prefer cloud-based AI solutions because of their scalability and ease of deployment. Platforms like Amazon SageMaker, Google Cloud's Vertex AI (the successor to AI Platform), and Azure Machine Learning provide end-to-end services for building, training, and deploying AI models. However, for highly regulated industries, on-premises solutions might be necessary due to data privacy concerns.

- Model Interpretability Tools: As AI becomes more critical in decision-making, it's essential to understand how models are making predictions. Tools like SHAP and LIME, along with other explainable AI (XAI) libraries, can help ensure that your AI systems are transparent and interpretable by both engineers and business leaders.

- Data Infrastructure: Although not covered in detail here, the data infrastructure that supports your AI technologies is also important to consider.
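To make the interpretability point above more concrete, here is a minimal sketch of permutation importance, a simple model-agnostic technique. It is not how SHAP or LIME work internally; it is a stand-in chosen because it needs only the standard library. The function names and the idea of passing the model as a plain callable are assumptions for illustration.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], float]


def mean_abs_error(model: Model, X: List[Row], y: List[float]) -> float:
    """Average absolute difference between predictions and targets."""
    return sum(abs(model(row) - target) for row, target in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[float],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Estimate each feature's importance as the average increase in error
    when that feature's column is shuffled across rows."""
    rng = random.Random(seed)  # seeded so results are repeatable
    baseline = mean_abs_error(model, X, y)
    importances = []
    for col in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            # Rebuild the dataset with only this one column permuted.
            X_perm = [list(row[:col]) + [value] + list(row[col + 1:])
                      for row, value in zip(X, shuffled)]
            increases.append(mean_abs_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


if __name__ == "__main__":
    # Toy model that depends only on the first feature.
    X = [[float(i), float((i * 7) % 5)] for i in range(20)]
    y = [3.0 * row[0] for row in X]
    print(permutation_importance(lambda row: 3.0 * row[0], X, y))
```

A feature the model ignores scores zero, while a feature it relies on scores well above zero, which is the kind of summary both engineers and business leaders can read.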

Operationalizing AI

Getting a model into production is only the beginning. Operationalizing AI requires ongoing monitoring, management, and iteration to ensure that models continue to perform as expected. Here are some strategies to operationalize AI effectively:

- Monitoring and Alerting: Just like any software system, AI models can encounter issues in production. These might include data drift, where the input data distribution changes over time, leading to a decline in model performance. Monitoring tools like Evidently AI or Azure’s Application Insights can help detect these issues early and alert engineers.

- Model Retraining: Over time, AI models need to be retrained to keep up with changing data and business conditions. Automating this process through an MLOps pipeline helps models remain accurate and relevant with far less manual intervention.

- Explainability and Audits: In highly regulated industries such as finance or healthcare, AI models may be subject to audits. Ensure that models can be easily explained and that there is a clear audit trail of data usage, training processes, and decision-making outputs.

- Collaboration with IT and DevOps: Although not covered in detail here, working closely with IT and DevOps teams is also important for running AI in production.
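The monitoring and retraining points above can be tied together with a small drift check. The sketch below uses the Population Stability Index (PSI), one common way to compare a production sample of a feature against the training-time reference. The equal-width binning, the function names, and the 0.2 threshold (a frequently quoted rule of thumb, not a standard) are all assumptions; dedicated tools such as Evidently AI offer far more complete checks.

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], lo: float, hi: float,
                   n_bins: int, eps: float = 1e-6) -> List[float]:
    """Share of values per equal-width bin; out-of-range values go to the end bins."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), n_bins - 1)] += 1
    # eps keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               n_bins: int = 10) -> float:
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    lo, hi = min(reference), max(reference)
    ref = _bin_fractions(reference, lo, hi, n_bins)
    cur = _bin_fractions(current, lo, hi, n_bins)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def needs_retraining(reference: Sequence[float], current: Sequence[float],
                     threshold: float = 0.2) -> bool:
    """Flag the feature for review when PSI exceeds the (assumed) threshold."""
    return population_stability_index(reference, current) > threshold


if __name__ == "__main__":
    training_sample = [i / 100 for i in range(1000)]
    production_sample = [v + 5 for v in training_sample]  # simulated shift
    print(needs_retraining(training_sample, training_sample))
    print(needs_retraining(training_sample, production_sample))
```

In an MLOps pipeline, a check like this would typically run on a schedule and raise an alert or trigger a retraining job instead of printing a result.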

Security and Compliance

Security and compliance are paramount when deploying AI systems at an enterprise scale. AI systems often handle sensitive data, making them prime targets for cyberattacks. Here's how to ensure your AI initiatives are secure and compliant:

- Data Privacy: Compliance with regulations like GDPR, HIPAA, and CCPA is crucial. Ensure that your data collection, storage, and usage practices are transparent, and implement mechanisms for data anonymization or pseudonymization where necessary.

- Model Security: Adversarial attacks on AI models can lead to compromised results. Techniques such as adversarial training can make models more robust to manipulated inputs, while differential privacy methods help limit what an attacker can learn about the training data.

- Role-Based Access Control (RBAC): Implement RBAC to restrict access to sensitive data and model outputs. This ensures that only authorized personnel can view or modify AI models or datasets, adding a layer of security.
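Two of the practices above, pseudonymization and RBAC, are small enough to sketch directly. The role names, action names, and key handling below are hypothetical; a real deployment would usually rely on the organization's identity provider and a secrets manager instead of an in-memory table and a hard-coded key.

```python
import hashlib
import hmac
from typing import Dict, Set

# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"read_dataset", "train_model"},
    "ml_engineer": {"read_dataset", "train_model", "deploy_model"},
    "analyst": {"read_predictions"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC).

    The same input and key always yield the same token, so records can
    still be joined, but the original value cannot be recovered without
    the key and a guess at the input.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"),
                    hashlib.sha256).hexdigest()


if __name__ == "__main__":
    key = b"example-key-load-from-a-secrets-manager"  # placeholder, not a real secret
    print(is_allowed("analyst", "deploy_model"))
    print(is_allowed("ml_engineer", "deploy_model"))
    print(pseudonymize("customer-12345", key))
```

Denying by default, as `is_allowed` does for unknown roles and actions, is a deliberate choice: a missing entry should never grant access.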

Implementing AI at an enterprise scale is a complex but rewarding journey. By following a strategic approach and leveraging the right technologies, businesses can unlock significant value and drive innovation. As AI continues to evolve, staying ahead of trends and focusing on ethical and responsible AI practices will be key to long-term success.

Dive into our resource library

Take a look at our curated content for aspiring and current AI developers. It covers the key strategies and tools for implementing retrieval augmented generation (RAG), identifying opportunities to leverage and build microservices, and making better use of data to develop enterprise-level applications with RAG techniques, so you can build scalable and impactful AI solutions.

What you’ll learn:

- Implement retrieval augmented generation (RAG)

- Identify opportunities to leverage and build microservices

- Recognize opportunities to leverage data to build enterprise applications with RAG
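For readers new to RAG, the core idea is: retrieve the documents most relevant to a question, then pass them to a language model as context. The toy sketch below shows only the retrieval and prompt-assembly steps, using word counts and cosine similarity in place of a real embedding model and vector database. All function names and the prompt wording are assumptions, and no LLM is called.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
              for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble the augmented prompt that would be sent to a language model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Redis can store vector embeddings for search.",
        "The cafeteria opens at nine.",
        "LangChain chains retrieval and generation steps.",
    ]
    print(build_prompt("How does Redis store vector embeddings?", docs, k=1))
```

In a production pipeline, the word-count vectors would be replaced by learned embeddings stored in a vector database, and the assembled prompt would be sent to a model for generation.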

How to get started

Step 1: Watch this video on running a RAG pipeline on Intel using LangChain

Intel’s Technical Evangelist, Guy Tamir, walks you through a simple explanation and a Jupyter notebook that runs Retrieval-Augmented Generation (RAG) on Intel hardware using LangChain, accelerated by OpenVINO.

Step 2: Building a ChatQnA Application Service

This ChatQnA use case performs RAG using LangChain, Redis VectorDB, and Text Generation Inference on an Intel® Gaudi® 2 AI accelerator or Intel® Xeon® Scalable processor. The Intel Gaudi 2 accelerator supports training and inference for deep learning models, particularly LLMs.

Step 3: Join experts and other community members at the OPEA Community Days

OPEA’s mission is to offer validated, enterprise-grade GenAI reference implementations that simplify development and deployment, thereby accelerating time-to-market and the realization of business value. Join us at one of the virtual events we are hosting this fall!

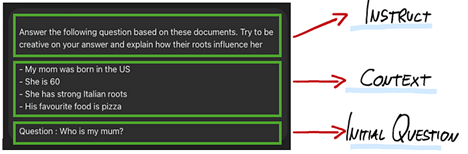

Step 4: Check out this technical article on Understanding Retrieval Augmented Generation (RAG)

In this article, Ezequiel Lanza offers a concise roadmap for developers to scale AI solutions in large organizations. Learn how to assess business needs, build the right teams, select AI technologies, and operationalize models effectively to drive real business impact.