Open source projects have pushed AI innovation further, faster. One result is generative AI (GenAI), which is evolving so quickly that its techniques and tools have fragmented. This fragmentation is a barrier to enterprise adoption of GenAI and the immense value it brings to a business. Developers tasked with realizing this value face a dizzying number of choices when incorporating GenAI. At this early stage, open source collaboration can establish a robust and concrete framework from which to construct and evaluate composable GenAI solutions. With this in mind, Intel, working with several other industry partners, is proud to bring forth the Open Platform for Enterprise AI (OPEA).

OPEA is a new sandbox-level project within the LF AI & Data Foundation. OPEA’s mission is to provide an open platform for building open, multi-provider, robust, and composable GenAI solutions that harness the best innovation across the ecosystem.

“OPEA, with the support of the broader community, will address critical pain points of RAG adoption and scale today. It will also define a platform for the next phases of developer innovation that harnesses the potential value generative AI can bring to enterprises and all our lives,” said Melissa Evers, vice president of the Software and Advanced Technology Group and general manager of Strategy to Execution at Intel Corporation.

Tenets of an OPEA implementation. (Image from opea.dev)

A Solution to GenAI Implementation Fragmentation

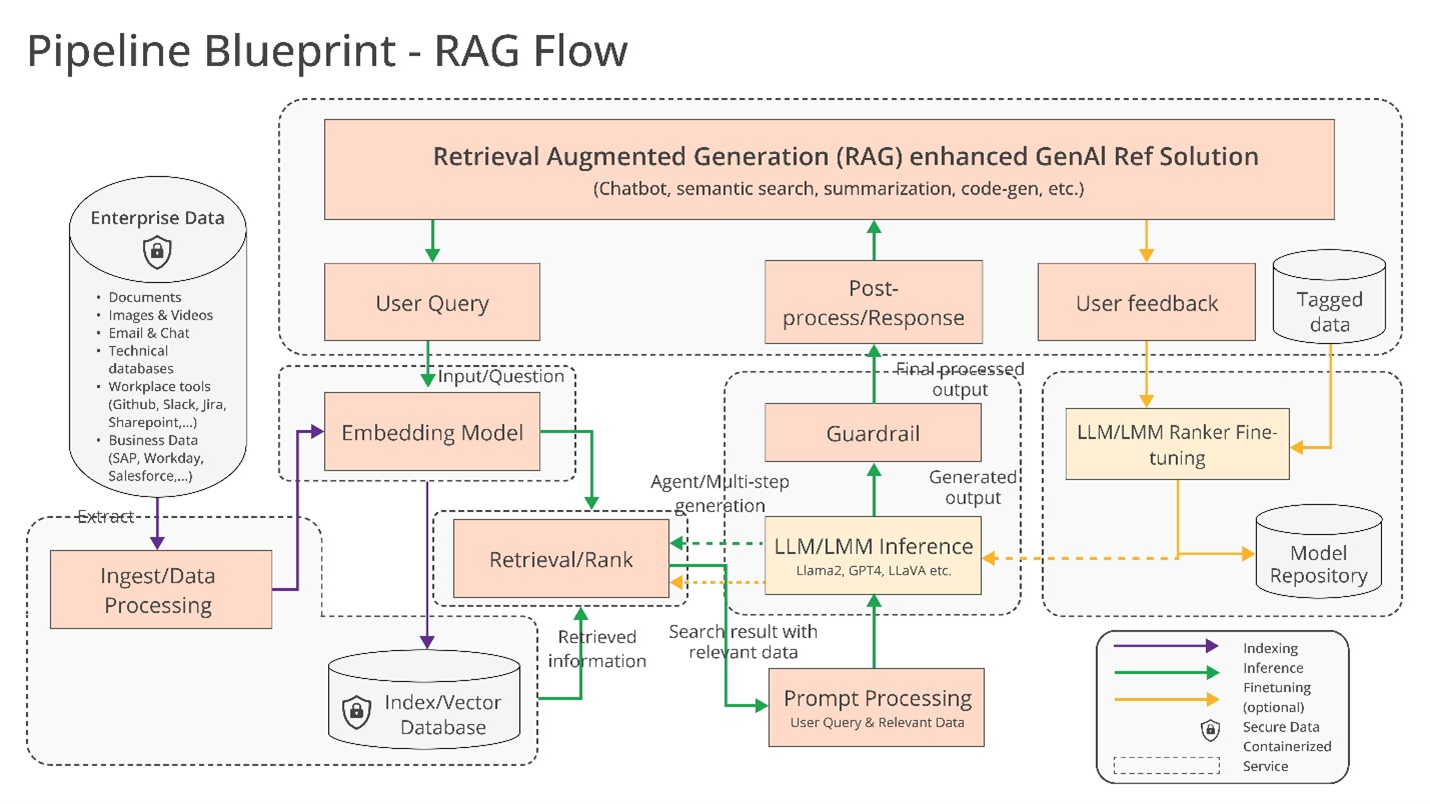

OPEA offers a holistic and straightforward view of a GenAI workflow that includes retrieval-augmented generation (RAG) as well as other functionality as needed. The building blocks (open and proprietary) of this workflow include:

- GenAI models – large language models (LLMs), large vision models (LVMs), multimodal models, etc.

- Ingest/data processing

- Embedding models/services

- Indexing/vector/graph data stores

- Retrieval/ranking

- Prompt engines

- Guardrails

- Memory systems

RAG flow reference implementation (Image from opea.dev)
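To make the building blocks above concrete, here is a minimal sketch of how they might compose into a RAG flow. It is a toy illustration, not OPEA code: every name in it (`embed`, `VectorStore`, `build_prompt`, `guardrail`, `rag_answer`, `echo_llm`) is hypothetical, the "embedding" is a simple bag-of-words count, and the "model" is a stub that echoes its retrieved context.

```python
import re
from collections import Counter
from dataclasses import dataclass, field
from math import sqrt
from typing import Callable

# All names here are hypothetical stand-ins, not OPEA APIs.


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class VectorStore:
    """In-memory index (stand-in for a vector or graph data store)."""
    docs: list = field(default_factory=list)

    def ingest(self, text: str) -> None:
        # Ingest step: store the raw text next to its embedding.
        self.docs.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> list:
        # Retrieval/ranking step: return the k most similar documents.
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list) -> str:
    """Prompt engine: put retrieved context in front of the question."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {question}"


def guardrail(text: str) -> str:
    """Toy guardrail: block any output that mentions a password."""
    return "[blocked]" if "password" in text.lower() else text


def rag_answer(question: str, store: VectorStore,
               llm: Callable[[str], str], k: int = 1) -> str:
    """Compose retrieval, prompting, generation, and guardrails."""
    prompt = build_prompt(question, store.retrieve(question, k))
    return guardrail(llm(prompt))


def echo_llm(prompt: str) -> str:
    """Placeholder model: returns the first line of retrieved context."""
    return prompt.splitlines()[1]


store = VectorStore()
store.ingest("OPEA is a sandbox project in LF AI & Data")
store.ingest("Gaudi 2 is an AI accelerator")
print(rag_answer("What is OPEA?", store, echo_llm))
```

Because each stage is a plain function or small class, any one of them (the embedding, the store, the model, the guardrail) could be swapped for a different provider without touching the others, which is the sense of "composable" used in this article.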

In the coming months, the open source community and enterprise partners will extend and evolve this framework to meet the needs of developers. As of today, Intel is placing a set of reference implementations in the OPEA GitHub repo that can be used out of the box. These implementations include frameworks for:

- A chatbot on Intel® Xeon® 6 and Intel® Gaudi® 2

- Document summarization on Intel Gaudi 2

- Visual Question Answering (VQA) on Gaudi 2

- A copilot designed for code generation in Visual Studio Code on Gaudi 2

Evaluating What Matters to Enterprise

This composable framework is only the first part of what OPEA brings to developers. It sits beside an assessment framework that Intel has already made available in the OPEA GitHub repo. This framework provides an agreed-upon system for grading and evaluating GenAI flows against vectors such as performance, trustworthiness, scalability, and resilience, to help ensure they are enterprise ready.

Let’s take a look at the assessment categories:

Performance is based on a set of black-box benchmarks from real-world use cases with grading from ‘meets benchmark’ to ‘exceeds minimum acceptable performance.’

Features is an appraisal of the mandatory and optional capabilities of system components, including interoperability, AI methods, deployment choices, and ease of use.

Trustworthiness looks at the flow’s ability to guarantee quality, security, and robustness as measured by access control and use of features like confidential computing and trusted execution environments.

Enterprise readiness focuses on what is needed to deploy a solution in enterprise environments. It is measured first by meeting the minimum requirements of the performance, features, and trustworthiness vectors. The measurement then takes into account documentation, certification, and support response rate.
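The two-stage logic described for enterprise readiness can be sketched as a simple gate followed by further checks. This is only an illustration under stated assumptions, not OPEA's actual rubric: the `Assessment` fields, the `enterprise_ready` function, and the 24-hour support threshold are all hypothetical, and support response is modeled here as a response time in hours.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """Hypothetical summary of one GenAI flow's assessment results."""
    performance_ok: bool          # meets the minimum performance requirement
    features_ok: bool             # mandatory capabilities are present
    trustworthiness_ok: bool      # meets the minimum trustworthiness requirement
    has_documentation: bool
    is_certified: bool
    support_response_hours: float  # assumed measure of support responsiveness


def enterprise_ready(a: Assessment, max_support_hours: float = 24.0) -> bool:
    """Return True only if the flow passes both stages.

    Stage 1: minimum requirements of the other three vectors.
    Stage 2: documentation, certification, and support responsiveness.
    The 24-hour default is an arbitrary placeholder threshold.
    """
    if not (a.performance_ok and a.features_ok and a.trustworthiness_ok):
        return False
    return (a.has_documentation
            and a.is_certified
            and a.support_response_hours <= max_support_hours)


candidate = Assessment(True, True, True, True, True, 8.0)
print(enterprise_ready(candidate))
```

The point of the gate is ordering: a flow with excellent documentation and support still fails if it misses the minimum bar on performance, features, or trustworthiness.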

OPEA will work with the community to offer tests based on this rubric for self-evaluation and to provide assessment and grading on request.

A Well-lit Path Through the GenAI Wilderness

OPEA can take GenAI to the next level by providing a standardized platform for assessment, development, and deployment. Already, we have a strong group of partners invested in this project, including Anyscale, Cloudera, DataStax, Domino Data Lab, Hugging Face, IBM, KX Systems, MariaDB Foundation, MinIO, Qdrant, Red Hat, Redis, SAS, Yellowbrick Data, and Zilliz.

An open ecosystem forms the foundation of our approach at Intel. We believe an open ecosystem creates a level playing field that allows multiple players to collaborate and develop innovative solutions faster and more efficiently. Open source development is key to software development. In that spirit, we share this platform with the open source community and look forward to working with you to improve and expand it to meet developers’ needs and accelerate the value GenAI brings to business.

Want to participate? Join us at: opea.dev

About the Author

Rachel Roumeliotis, Director of Open Source Strategy at Intel

Rachel has been educating technologists for over 20 years. She comes to Intel most recently from O’Reilly Media, where she was a vice president of content strategy. During her time in the technical content acquisition and creation space, she worked within a wide variety of communities, from open source to AI, as well as security and design. She chaired many technical conferences, but her favorite was OSCON (O’Reilly’s Open Source Software Conference). She chaired that event for five years before bringing O’Reilly’s conference program to the virtual world in spring of 2020. She has also worked with a number of companies like Google, IBM, and Microsoft to tell their stories to developers. She hails from Massachusetts and is a fan of ‘mystery box’ TV shows and sci-fi, horror, and fantasy books.