I am pleased to announce the latest Intel® oneAPI Toolkits update (2021.3).

This update offers improved performance, new availability through the Spack package manager for HPC developers, and expanded Anaconda Cloud distribution for Python developers. Intel’s oneAPI Toolkits provide compilers, libraries, and analysis tools that implement industry standards, including C++, Fortran, Python, MPI, OpenMP, and SYCL. They accelerate performance on Intel hardware by enabling features such as AVX-512, AMX, DL Boost, and memory and cryptography acceleration to power complex data-driven applications and workloads. The toolkits support Intel CPUs, GPUs, and FPGAs, include optimizations for the new 3rd gen Intel® Xeon® Scalable platforms (code name Ice Lake server), and are free to download and use.

New in the Update

This release (2021.3) ranks as a 'dot' update adding a generous number of enhancements that make it worthwhile to install. We’ll have one more quarterly update before introducing our next annual (major) release toward the end of this year.

Intel® Compilers and select performance libraries are now available via the Spack package manager.

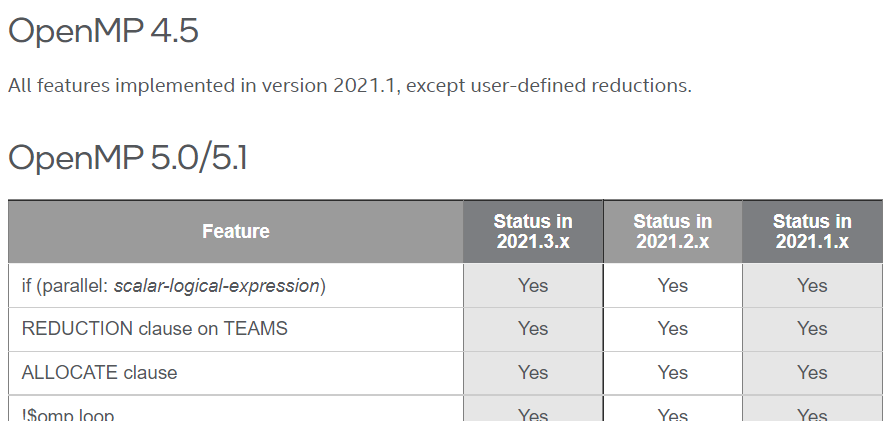

Our multiyear journey to transition our compilers to be fully LLVM-based continues. Our LLVM-based C/C++ compilers are fully ready and add further 3rd gen Intel Xeon Scalable processor (code name Ice Lake server) optimizations in this release. All our compilers support OpenMP 4.5 and a subset of OpenMP 5.1. The LLVM-based Fortran (beta) compilers have progressed to include virtually all pre-Fortran-2008 features plus much more. Details on the progress can be found in our Fortran feature status table.

Python acceleration support extends to Python 3.8 and Numba 0.53. Support for TensorFlow 2.5 and PyTorch 1.8.1 is included in this update of the AI Analytics Toolkit.

As of August 5th, an additional update of the AI Analytics Toolkit provides Intel® Extension for Scikit-learn, which speeds up machine learning algorithms on Intel CPUs and GPUs, on single and multiple nodes, through a simple one-line dynamic patch, and offers dramatic performance boosts. Support for the 3rd gen Xeon Scalable processors boosts performance for deep learning inference workloads using Intel Optimization for TensorFlow by up to 10-100x compared to the stock versions.
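The one-line dynamic patching mentioned above can be sketched roughly as follows. This is a minimal illustration, not an official recipe: it assumes the extension is installed as the `scikit-learn-intelex` package and exposes `patch_sklearn()` from the `sklearnex` module (names not given in this post), and the helper functions `enable_intel_sklearn` and `demo_kmeans` are hypothetical names introduced only for this example. The code falls back to stock scikit-learn, or skips the demo entirely, when the packages are missing.

```python
def enable_intel_sklearn() -> bool:
    """Apply the Intel Extension for Scikit-learn patch if it is installed.

    Returns True when the patch was applied, False when the extension is
    unavailable and stock scikit-learn will be used instead.
    """
    try:
        # Assumption: the extension ships as the `sklearnex` module.
        from sklearnex import patch_sklearn
    except ImportError:
        return False
    patch_sklearn()  # the "one-line" dynamic patch
    return True


def demo_kmeans():
    """Cluster two well-separated blobs; returns the number of distinct labels.

    Returns None when scikit-learn or NumPy is not installed.
    """
    try:
        import numpy as np
        # Estimators are imported after patching so the patched versions are used.
        from sklearn.cluster import KMeans
    except ImportError:
        return None

    rng = np.random.RandomState(0)
    blob_a = rng.normal(loc=0.0, scale=0.5, size=(50, 2))
    blob_b = rng.normal(loc=10.0, scale=0.5, size=(50, 2))
    data = np.vstack([blob_a, blob_b])

    model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(data)
    return len(set(model.labels_.tolist()))


accelerated = enable_intel_sklearn()
distinct_labels = demo_kmeans()
print("Intel extension active:", accelerated)
print("Distinct cluster labels:", distinct_labels)
```

The rest of an existing scikit-learn script stays unchanged; the only requirement in this sketch is that the patch runs before the estimators are imported.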

The IoT Toolkit has enhanced its Eclipse plug-in support, and added a new Yocto Project layer meta-intel-oneapi to allow easier integration of the DPC++ runtime, data collectors, libraries, etc. into Yocto Project Linux kernels.

New ray-tracing features in the Rendering Toolkit include support for structured FP16, VDB FP16, VDB Motion Blur, VDB Cubic Filtering, and the capability to handle multiple volumes in the same scene. Embree ray tracing libraries now include ARM support. A new denoising mode in Open Image Denoise delivers higher quality final frame renderings. Intel® OSPRay Studio now supports importing PCD point cloud data, which is often used to represent LiDAR scan data.

The FPGA Add-on for the oneAPI Base Toolkit adds new support for: allocating local memory that is accessible to and shared by all work items of a workgroup; viewing global memory in the System Viewer (previously known as the Graph Viewer) of static report files; and integers with widths greater than 2048 bits (more than 256 bytes). Now that’s math!

Get the Toolkits Now

You can get the updates in the same places as you got your original downloads - the oneAPI toolkit site, via containers, apt-get, Anaconda, and other distributions.

The toolkits are also available in the Intel® DevCloud, along with new 3rd gen Intel® Xeon® Scalable platforms (code name Ice Lake server) and training resources. This remote environment is a fantastic place to develop, test, and optimize code across an array of Intel CPUs and accelerators for free and without having to set up your own system. If you don't know about DevCloud yet, I recommend you check out my new blog series (#xpublog).

Boosting Performance via Anaconda’s Curated Package Repository

Anaconda, pioneers and leaders in Python data science, offer a great user experience for data science practitioners. Packages and libraries optimized for Intel oneAPI Math Kernel Library, including NumPy and SciPy, are easily accessible in Anaconda’s curated package repository. As a result of work with Anaconda, the open source Intel® Extension for Scikit-learn, which uses Intel® oneAPI Data Analytics Library and its convenient Python API daal4py, makes machine learning algorithms in Python an average of 27X faster in training and 36X faster during inferencing, and optimizes scikit-learn’s application performance. Anaconda users can now access the speed improvements of Intel Extension for Scikit-learn directly from Anaconda’s repository.

oneAPI is an XPU Upgrade for Intel Parallel Studio XE and Intel System Studio

Intel’s oneAPI Toolkits combine (and rename) multiple popular software products from Intel, including all of Intel® Parallel Studio XE and Intel® System Studio, while adding significant support for oneAPI heterogeneous programming capabilities. oneAPI is an open multivendor approach to heterogeneous programming that benefits all software developers. This approach significantly raises the bar for development tools to build applications and solutions that harness the power of CPUs, GPUs and FPGAs, together. I recently presented an overview at the IWOCL/SYCLcon conference (available on-demand) that is a good introduction to the oneAPI commitment to an open, standards-based, multivendor, performant, and productive programming solution.

oneAPI and SYCL Ecosystem Adoption Making a Real Difference

Nothing makes me happier than seeing products in use. While I cannot possibly highlight all oneAPI usage, I can share a few examples that illustrate the diversity of impact that oneAPI has in opening up access to acceleration through heterogeneous computing. Zoom uses oneAPI to access AI acceleration in their popular video conferencing application, as described in this three-minute video by Velchamy Sankarlingam. Our understanding of the universe is enhanced thanks to work at CERN openlab. Bartlomiej Borzyszkowski is using biologically-inspired computing to investigate gravitational waves. In a video interview, he talks about his use of oneAPI to implement classification methods and spiking neural networks for anomaly detection.

Our enthusiasm for the SYCL standard, and the open source DPC++ project to bring SYCL to LLVM, is shared around the world. Support extends to Intel GPUs, Intel FPGAs, x86 CPUs, AMD GPUs, NVIDIA GPUs, and Huawei Ascend AI chipsets. The National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory (Berkeley Lab), in collaboration with the Argonne Leadership Computing Facility (ALCF) at Argonne National Laboratory, engaged Codeplay Software to enhance the LLVM SYCL GPU compiler capabilities for NVIDIA® A100 GPUs. Argonne National Laboratory, in collaboration with Oak Ridge National Laboratory (ORNL), engaged Codeplay to support AMD GPU-based supercomputers within the DPC++ project.

Update Now for All the Best in oneAPI

I walked through most of the significant changes with this update; a more complete list is available in the release notes.

I encourage you to update and get the best that Intel® oneAPI Toolkits have to offer. When you care about top performance, it is always worth keeping up-to-date.

Be sure to post your questions to the community forums or check out the options for paid Priority Support if you desire more personal support.

Visit my blog for more encouragement on learning about the heterogeneous programming that is possible with oneAPI.

oneAPI is an attitude, a joy, and a journey - to help all software developers.