Intel continues to evolve its integrated GPU strategy and has entered the market of discrete and data center GPUs with products like Intel® Arc™ graphics, Intel® Arc™ Pro workstation graphics, and Intel® Data Center GPU Max Series to help creators, gamers, and AI practitioners around the world accelerate their work and unlock their full creative potential.

This year, seven new papers on photorealistic rendering, generative AI, and neural graphics, written with university collaborators across the globe, are being published at three prestigious conferences: SIGGRAPH, EGSR, and HPG.

As we enter the era of 3D perception for humans and machines, both the creation and the rendering of precise and complex visuals become a crucial foundation. New research shows how to efficiently render complex glint and spectral materials as well as the most widespread material models, ray trace dynamic scenes with faster BVH builds, and accelerate path tracing convergence under difficult lighting. Further research provides an intuitive explanation of diffusion models to make generative AI research more accessible, and shows how neural methods can reduce the complexity of conventional graphics algorithms, ushering in the era of neural graphics.

Technologies from Intel researchers, such as Intel® Xe Super Sampling (XeSS) and Intel® Embree, are regularly updated and shared with the developer community, in keeping with Intel’s open source-first mindset, to enrich the cross-vendor ecosystem.

The new building blocks presented at this year’s conferences, along with our wide offering of GPU products and our scalable cross-architecture rendering stack, will help developers and businesses render digital twins, future immersive AR and VR experiences, and synthetic data for sim2real AI training more efficiently.

Efficient Path Tracing

With Intel’s ubiquitous integrated GPUs and emerging discrete graphics, researchers are pushing the efficiency of the most demanding form of photorealistic rendering, called path tracing. In path tracing, each photon is simulated the same way it behaves in the real world: it bounces off objects and travels to its next interaction along a straight line, which is computed using ray tracing. Two papers presented at EGSR and HPG show how to handle the acceleration structures used in ray tracing more efficiently for dynamic objects and complex geometry.
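To make the role of acceleration structures more concrete: a bounding volume hierarchy (BVH) wraps geometry in nested boxes, and a ray only visits the boxes it actually crosses. The sketch below shows the textbook "slab" ray-box test that such traversals are commonly built on. It is an illustration only and is not taken from the papers; the function name `ray_hits_aabb` and its parameters are hypothetical.

```python
import math

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t * direction (t >= 0) crosses the box.

    All arguments are 3-element sequences of floats (illustrative API).
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0  # order the entry and exit distances
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False  # the per-axis intervals do not overlap
    return True

unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
toward = ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box)
away = ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, -1.0), *unit_box)
```

A BVH traversal would run this test at each node and descend only into child boxes the ray hits, which is why faster BVH construction matters for scenes whose geometry changes every frame.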

Once a photon hits a surface, it needs to be shaded: its scattering off the surface is simulated according to a complex statistical distribution model defined by the object’s material. Two more papers at HPG show how to speed up shading calculations.

The first paper presents a novel and efficient method to compute the reflection of a GGX microfacet surface, the de facto standard material model in the game, animation, and VFX industries.

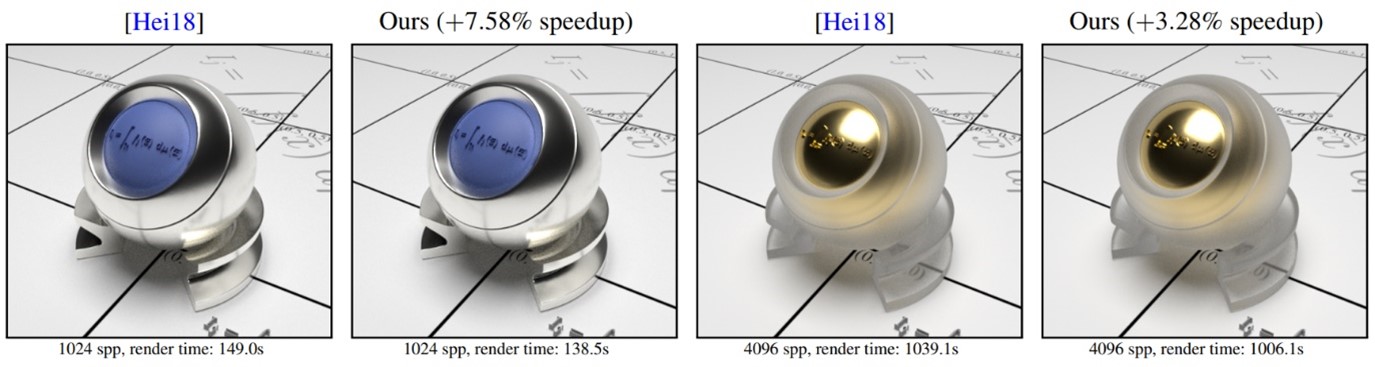
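For readers unfamiliar with GGX, its core is a compact closed-form distribution of microfacet normals. The sketch below evaluates the standard isotropic GGX (Trowbridge-Reitz) normal distribution function. It shows the well-known textbook formula only, not the new method from the paper; the function name `ggx_ndf` and the parameter name `alpha` are illustrative choices.

```python
import math

def ggx_ndf(cos_theta_h, alpha):
    """Isotropic GGX (Trowbridge-Reitz) normal distribution D(h).

    cos_theta_h: cosine of the angle between the surface normal and the half vector.
    alpha: roughness parameter (> 0); smaller values give sharper highlights.
    """
    if cos_theta_h <= 0.0:
        return 0.0  # microfacets facing away from the normal contribute nothing
    a2 = alpha * alpha
    denom = cos_theta_h * cos_theta_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

# Peak value at normal incidence for a moderately rough surface
peak = ggx_ndf(1.0, 0.5)
```

The distribution is normalized so that its projection onto the surface integrates to one over the hemisphere, which is one of the properties that makes it convenient as a building block for physically based shading.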

The second paper provides a faster and more stable method to render glinty surfaces, materials that are underused because of their computational cost.

More EGSR work in collaboration with Inria (France) shows how to edit reflectance and transmittance spectra with fine control and enables artists to use more controllable spectral materials. In collaboration with Karlsruhe Institute of Technology (KIT) (Germany), another EGSR paper presents a new way of more efficiently constructing (sampling) photon trajectories in difficult illumination scenarios.

Across the process of path tracing, the research presented in these papers demonstrates improvements in efficiency in path tracing’s main building blocks, namely ray tracing, shading, and sampling. These are important components to make photorealistic rendering with path tracing available on more affordable GPUs, such as Intel Arc GPUs, and a step toward real-time performance on integrated GPUs.

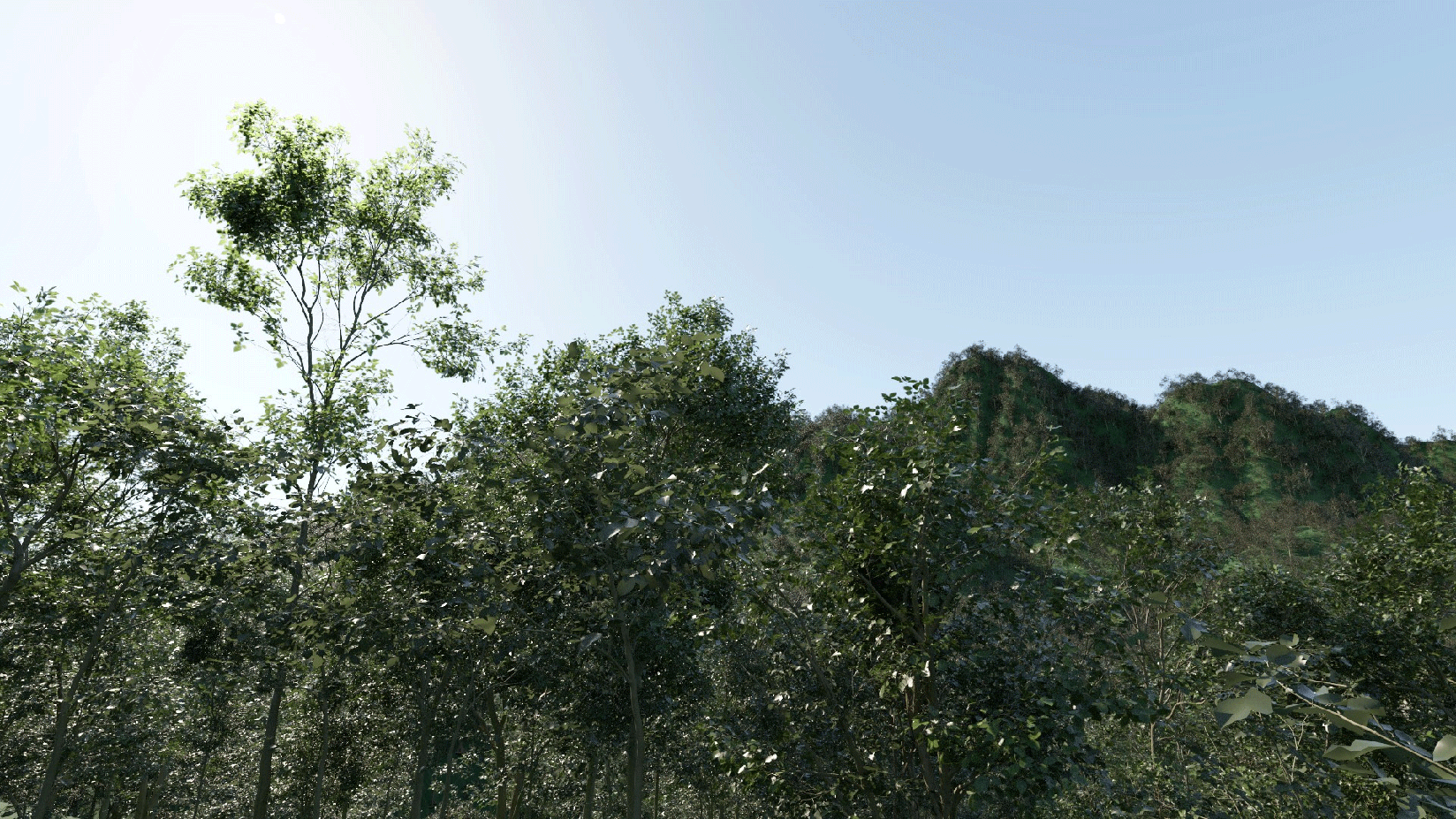

Forest render with 1 trillion triangles.

We’re excited to keep pushing these efforts for more efficient real-time path tracing that requires a much less powerful GPU and can be practical even on mid-range and integrated GPUs in the future. In the spirit of Intel’s open ecosystem software mindset, we will make this cross-vendor framework open source as a sample and a test-bed for developers and practitioners.

For customers who prioritize quality over performance, Intel also has a new offering of the Oscar* award-winning Intel® oneAPI Rendering Toolkit libraries, which are now significantly accelerated on GPUs with ray tracing and systolic hardware.

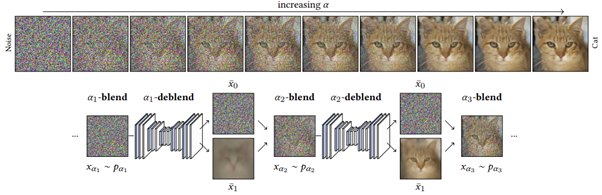

Democratizing Generative AI Research

Many recent advances in generative content creation, such as Midjourney* and Stable Diffusion*, are built on a new class of generative neural networks called diffusion models. Until recently, understanding the mathematics behind these models required advanced scientific skills and knowledge. One work to be presented at SIGGRAPH 2023 makes diffusion models more accessible to graphics and GPU practitioners by explaining the diffusion process through the familiar concept of alpha blending. This also ties diffusion models more closely to graphics and image generation, and should inspire practitioners and scientists in these areas to employ this new generative tool.

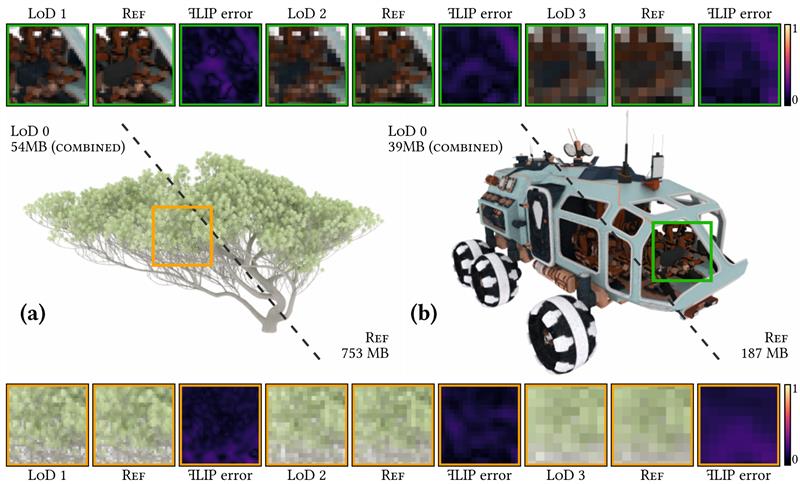
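The alpha-blending intuition can be sketched in a few lines. The example below linearly blends a noise sample with a tiny image, so that sweeping the blend factor from 0 to 1 walks from pure noise to the image. This is only an illustration of the general idea under simplifying assumptions, not the algorithm from the paper: a diffusion model has to learn how to take such steps without knowing the target image, whereas here the target is given. The names `alpha_blend`, `image`, and `noise` are hypothetical.

```python
import random

def alpha_blend(noise, image, alpha):
    """Blend two equally sized pixel lists: alpha=0 gives the noise, alpha=1 the image."""
    return [(1.0 - alpha) * n + alpha * p for n, p in zip(noise, image)]

rng = random.Random(0)                     # fixed seed so the sketch is repeatable
image = [0.2, 0.8, 0.5, 1.0]               # hypothetical 4-pixel grayscale "image"
noise = [rng.gauss(0.0, 1.0) for _ in image]

# Five blend levels from pure noise (alpha = 0) to the clean image (alpha = 1)
steps = [alpha_blend(noise, image, t / 4) for t in range(5)]
```

Each entry in `steps` is the same operation a compositor performs when cross-fading two layers, which is why the framing is familiar to graphics practitioners.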

Neural Graphics

The last work at SIGGRAPH 2023 introduces neural tools to real-time path tracing. Neural graphics is revolutionizing the graphics field, and a paper on neural level of detail is a stepping-stone toward scaling high-quality visuals across games, movies, and new visual AI needs, such as synthetic data and digital twins. The new neural level-of-detail representation achieves 70%-95% compression compared to "vanilla" path tracing, while also delivering interactive to real-time performance. This is only the beginning of a new era of neural graphics that enables higher visual quality, even on low-power GPUs.

More to Come

These are just a few highlights. Intel is working to constantly enrich the graphics ecosystem and will present multiple advances in games and professional graphics this summer.

GPU Research at Intel comprises multiple worldwide teams of world-leading experts, scientists, and engineers who focus on new technologies and ecosystem enablement for future GPU needs, including conventional and neural graphics, generative AI, and content creation.