Introduction

A number of usage models are possible given the flexible interfaces provided by the Cache Allocation Technology (CAT) feature, including prioritization of important applications and isolation of applications to reduce interference.

How Does CAT Work?

Low-level software such as an OS or VMM uses the CAT feature via the following steps:

1. Determine whether the CPU supports CAT via the CPUID instruction. As described in the Intel Software Developer’s Manual, CPUID leaf 0x10 provides detailed information on the capabilities of the CAT feature.

2. Configure each class of service (CLOS) via MSRs to define the amount of resources (cache space) available to it.

3. Associate each logical thread with an available logical CLOS.

4. As the OS/VMM swaps a thread or VCPU onto a core, update the CLOS on the core via the IA32_PQR_ASSOC MSR, which ensures that the resource usage is controlled via the bitmasks configured in step 2.
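The detection step can be illustrated with a minimal sketch that decodes raw CPUID register values. It assumes the caller has already executed CPUID (for example from a small C helper) and passes in the resulting registers; the function name `decode_cat_enumeration` and the dictionary keys are hypothetical and chosen only for illustration. The bit positions follow the CPUID leaf 0x7 and leaf 0x10 descriptions in the Intel Software Developer's Manual.

```python
def decode_cat_enumeration(leaf7_ebx, leaf10_sub0_ebx, leaf10_sub1):
    """Decode L3 CAT capabilities from raw CPUID register values.

    leaf7_ebx       -- EBX from CPUID.(EAX=07H, ECX=0)
    leaf10_sub0_ebx -- EBX from CPUID.(EAX=10H, ECX=0)
    leaf10_sub1     -- (EAX, EBX, ECX, EDX) from CPUID.(EAX=10H, ECX=1)

    Returns None when L3 CAT is not enumerated (hypothetical convention).
    """
    has_allocation = bool((leaf7_ebx >> 15) & 1)    # allocation capability bit
    has_l3_cat = bool((leaf10_sub0_ebx >> 1) & 1)   # resource ID 1 = L3 CAT
    if not (has_allocation and has_l3_cat):
        return None

    eax, ebx, ecx, edx = leaf10_sub1
    return {
        "cbm_len": (eax & 0x1F) + 1,           # EAX[4:0] holds length minus one
        "shared_ways_mask": ebx,               # ways that other agents may also use
        "cdp_supported": bool((ecx >> 2) & 1), # Code and Data Prioritization
        "num_clos": (edx & 0xFFFF) + 1,        # EDX[15:0] holds highest CLOS number
    }


if __name__ == "__main__":
    # Illustrative register values only, not read from real hardware.
    print(decode_cat_enumeration(1 << 15, 0b10, (0x13, 0x600, 0x4, 0xF)))
```

Keeping the decoding separate from the CPUID call itself makes the logic easy to check on any machine, including one without CAT.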

Higher-level software such as an orchestration framework or an administrator-level tool can make use of these hardware capabilities via OS/VMM enabling, and some examples are given in the article that describes software enabling.

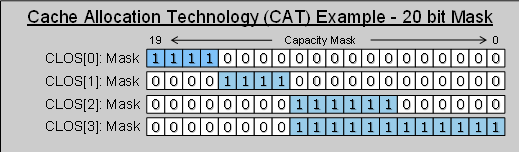

The amount of cache available to a given application is specified via a block of MSRs containing capacity bitmasks (CBMs), which provide an indication of the degree of overlap or isolation across threads:

Figure 1: Configuration of the L3 capacity bitmasks per logical class of service (CLOS), via the IA32_L3_MASK_n block of MSRs, where n corresponds to a CLOS number.
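As a rough illustration of how a capacity bitmask might be constructed, the sketch below builds a contiguous CBM and maps a CLOS number to its mask register. It assumes that IA32_L3_MASK_0 sits at MSR address 0xC90 with subsequent CLOS at consecutive addresses, and that a CBM must be a single contiguous run of 1 bits no wider than the length reported by CPUID, as the Software Developer's Manual describes for this feature. The helper names are hypothetical.

```python
IA32_L3_MASK_BASE = 0xC90  # assumed address of IA32_L3_MASK_0


def l3_mask_msr(clos):
    """Return the MSR address of IA32_L3_MASK_n for CLOS number n."""
    return IA32_L3_MASK_BASE + clos


def make_cbm(first_way, num_ways, cbm_len):
    """Build a contiguous bitmask of num_ways bits starting at bit first_way."""
    if num_ways < 1 or first_way < 0 or first_way + num_ways > cbm_len:
        raise ValueError("mask does not fit within the supported CBM length")
    return ((1 << num_ways) - 1) << first_way


def is_valid_cbm(cbm, cbm_len):
    """Check that cbm is non-zero, fits in cbm_len bits, and is contiguous."""
    if cbm <= 0 or (cbm >> cbm_len) != 0:
        return False
    lowest_bit = cbm & -cbm
    # Adding the lowest set bit to a contiguous run carries past the whole run,
    # so the sum shares no bits with the original mask.
    return ((cbm + lowest_bit) & cbm) == 0


if __name__ == "__main__":
    cbm = make_cbm(first_way=4, num_ways=8, cbm_len=20)
    print(hex(l3_mask_msr(2)), hex(cbm), is_valid_cbm(cbm, 20))
```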

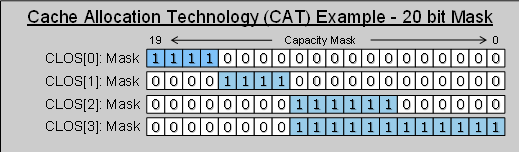

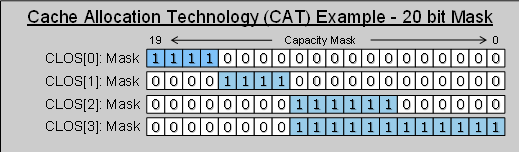

The values within the CBMs indicate the relative amount of cache available and the degree of overlap or isolation. For example, in Figure 2, CLOS[1] has less cache available than CLOS[3] and could be considered lower priority.

Figure 2: Examples of capacity bitmask overlap and isolation across multiple classes of service (CLOS).

In cases where bitmasks do not overlap, the applications or VMs do not mutually compete for space in the last-level cache; instead they use separate partitions, which can be dynamically updated at any time as resource needs change.

With overlapping bitmasks (CLOS[2] and CLOS[3] in Figure 2), it is often possible to achieve higher throughput than in the isolated cases, and relative priorities can still be preserved. This may be an appealing usage model in cases where many threads/apps/VMs are running concurrently.
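The difference between isolated and overlapping configurations can be made concrete with a small sketch that compares two capacity bitmasks. The mask values used here are hypothetical and are not taken from the figures; the function names are likewise chosen only for illustration.

```python
def way_count(cbm):
    """Number of cache ways (set bits) granted by a capacity bitmask."""
    return bin(cbm).count("1")


def describe_pair(cbm_a, cbm_b):
    """Summarize how two capacity bitmasks relate to each other."""
    shared = way_count(cbm_a & cbm_b)
    return {
        "isolated": shared == 0,                 # no ways in common
        "shared_ways": shared,                   # ways both CLOS may fill
        "exclusive_a": way_count(cbm_a & ~cbm_b),
        "exclusive_b": way_count(cbm_b & ~cbm_a),
    }


if __name__ == "__main__":
    # Two disjoint 4-way partitions: no competition for cache space.
    print(describe_pair(0x00F, 0x0F0))
    # Overlapping masks: 6 ways are shared, and the second CLOS keeps more
    # exclusive ways, so it retains a relative priority advantage.
    print(describe_pair(0x0FF, 0xFFC))
```

A summary of this kind can help when checking that an overlapped configuration still leaves the higher-priority CLOS with more total or exclusive ways than its neighbors.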

The association between a software thread and CLOS (steps 3 and 4 above) is made via the IA32_PQR_ASSOC MSR, which is defined per hardware thread (see Figure 3).

Figure 3: The current class of service (CLOS) for a thread can be specified using the IA32_PQR_ASSOC MSR, which is defined per hardware thread.
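A minimal sketch of the register layout follows, assuming the layout given in the Software Developer's Manual: IA32_PQR_ASSOC at MSR address 0xC8F, with the CLOS in bits 63:32 and the Resource Monitoring ID (RMID) in bits 9:0. The helper names are hypothetical. The `with_clos` helper shows one way a context-switch path might change the CLOS while leaving the monitoring RMID untouched.

```python
IA32_PQR_ASSOC = 0xC8F  # assumed MSR address, one instance per hardware thread

RMID_MASK = 0x3FF       # RMID occupies bits 9:0
CLOS_SHIFT = 32         # CLOS occupies bits 63:32


def encode_pqr_assoc(clos, rmid=0):
    """Pack a CLOS and RMID into a 64-bit IA32_PQR_ASSOC value."""
    if not 0 <= rmid <= RMID_MASK:
        raise ValueError("RMID out of range")
    if not 0 <= clos < (1 << 32):
        raise ValueError("CLOS out of range")
    return (clos << CLOS_SHIFT) | rmid


def decode_pqr_assoc(value):
    """Split a 64-bit IA32_PQR_ASSOC value into its CLOS and RMID fields."""
    return {"clos": (value >> CLOS_SHIFT) & 0xFFFFFFFF, "rmid": value & RMID_MASK}


def with_clos(value, clos):
    """Return value with the CLOS field replaced and the low 32 bits preserved."""
    return (value & 0xFFFFFFFF) | (clos << CLOS_SHIFT)


if __name__ == "__main__":
    value = encode_pqr_assoc(clos=2, rmid=5)
    print(hex(value), decode_pqr_assoc(value))
```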

An alternate usage method is possible for non-enabled operating systems and VMMs: CLOS are pinned to hardware threads, and software threads are then pinned to those hardware threads. However, OS/VMM enabling is recommended wherever possible in order to avoid the need to pin apps.

As a starting point for evaluation, the pinned use model can be achieved using the RDT utility from 01.org (and GitHub*). This utility works with generic Linux* operating systems to provide per-thread monitoring and control via associating Resource Monitoring IDs (RMIDs) and CLOS with each hardware thread.
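To make the pinned model more concrete, the sketch below computes the raw MSR writes that such a static configuration amounts to. It is not the RDT utility's implementation; it is an illustration that assumes a single-socket system, the MSR addresses 0xC8F (IA32_PQR_ASSOC) and 0xC90 (IA32_L3_MASK_0), and the Linux `msr` driver's `/dev/cpu/N/msr` interface, which requires root privileges and a loaded `msr` module. The function names and the example masks are hypothetical.

```python
import os
import struct

IA32_PQR_ASSOC = 0xC8F     # assumed per-hardware-thread association MSR
IA32_L3_MASK_BASE = 0xC90  # assumed address of IA32_L3_MASK_0


def plan_pinned_config(clos_masks, clos_cpus, mask_cpu=0):
    """Return a list of (cpu, msr_address, value) writes for a pinned setup.

    clos_masks -- {clos: capacity bitmask}
    clos_cpus  -- {clos: [hardware thread numbers]}
    mask_cpu   -- CPU used to program the masks (single socket assumed)
    """
    writes = []
    for clos, cbm in sorted(clos_masks.items()):
        writes.append((mask_cpu, IA32_L3_MASK_BASE + clos, cbm))
    for clos, cpus in sorted(clos_cpus.items()):
        for cpu in cpus:
            # CLOS goes in bits 63:32; this sketch leaves the RMID field at 0.
            writes.append((cpu, IA32_PQR_ASSOC, clos << 32))
    return writes


def apply_writes(writes):
    """Apply planned writes through the Linux msr driver (root required)."""
    for cpu, msr, value in writes:
        fd = os.open(f"/dev/cpu/{cpu}/msr", os.O_WRONLY)
        try:
            # The msr driver uses the file offset as the MSR address.
            os.pwrite(fd, struct.pack("<Q", value), msr)
        finally:
            os.close(fd)


if __name__ == "__main__":
    # Dry run: print the plan without touching any hardware.
    for cpu, msr, value in plan_pinned_config({1: 0x00F, 2: 0xFF0},
                                              {1: [2, 3], 2: [4]}):
        print(f"cpu {cpu}: wrmsr {msr:#x} <- {value:#x}")
```

After the hardware threads are associated with CLOS in this way, an application would still need to be pinned to the corresponding hardware threads, for example with `os.sched_setaffinity` on Linux, for the allocation to follow it.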

Usages across Application Domains

The CAT feature has wide applicability across many domains. With the flexibility of dynamic updates and both overlapped and isolated configurations, usages that span a diverse set of application domains are possible, including:

- Cloud hosting in the data center – For example, prioritizing important virtual machines (VMs) and containing “noisy neighbors.”

- Public/private cloud – Protecting an important infrastructure VM that provides networking services such as a VPN to bridge the private cloud to the public cloud.

- Data center infrastructure – Protecting virtual switches that provide local networking.

- Communications – For example, ensuring consistent performance and containing background tasks on a network appliance built atop an Intel® Xeon® processor-based server platform.

- Content distribution networks (CDNs) – For example, prioritizing key parts of the content-serving application in order to improve throughput.

- Networking – For example, containing “noisy neighbor” applications to help reduce jitter and packet loss in noisy scenarios, thereby protecting high-performance applications based on the Data Plane Development Kit (DPDK).

- Industrial control – For example, prioritizing important sections of code so that real-time requirements are more likely to be met.

Conclusion

The CAT feature enables prioritization or even isolation of important applications across a wide variety of usages. The next article in the series provides a few proof points for sample usages, followed by a discussion of the software enabling collateral available to help new users get started with the feature.