Intel® oneAPI Deep Neural Network Developer Guide and Reference

enum dnnl_alg_kind_t

Overview

Kinds of algorithms.

#include <dnnl_types.h>

    enum dnnl_alg_kind_t
    {
        dnnl_alg_kind_undef,
        dnnl_convolution_direct = 0x1,
        dnnl_convolution_winograd = 0x2,
        dnnl_convolution_auto = 0x3,
        dnnl_deconvolution_direct = 0xa,
        dnnl_deconvolution_winograd = 0xb,
        dnnl_eltwise_relu = 0x20,
        dnnl_eltwise_tanh,
        dnnl_eltwise_elu,
        dnnl_eltwise_square,
        dnnl_eltwise_abs,
        dnnl_eltwise_sqrt,
        dnnl_eltwise_linear,
        dnnl_eltwise_soft_relu,
        dnnl_eltwise_hardsigmoid,
        dnnl_eltwise_logistic,
        dnnl_eltwise_exp,
        dnnl_eltwise_gelu_tanh,
        dnnl_eltwise_swish,
        dnnl_eltwise_log,
        dnnl_eltwise_clip,
        dnnl_eltwise_clip_v2,
        dnnl_eltwise_pow,
        dnnl_eltwise_gelu_erf,
        dnnl_eltwise_round,
        dnnl_eltwise_mish,
        dnnl_eltwise_hardswish,
        dnnl_eltwise_relu_use_dst_for_bwd = 0x100,
        dnnl_eltwise_tanh_use_dst_for_bwd,
        dnnl_eltwise_elu_use_dst_for_bwd,
        dnnl_eltwise_sqrt_use_dst_for_bwd,
        dnnl_eltwise_logistic_use_dst_for_bwd,
        dnnl_eltwise_exp_use_dst_for_bwd,
        dnnl_eltwise_clip_v2_use_dst_for_bwd,
        dnnl_pooling_max = 0x1ff,
        dnnl_pooling_avg_include_padding = 0x2ff,
        dnnl_pooling_avg_exclude_padding = 0x3ff,
        dnnl_lrn_across_channels = 0xaff,
        dnnl_lrn_within_channel = 0xbff,
        dnnl_vanilla_rnn = 0x1fff,
        dnnl_vanilla_lstm = 0x2fff,
        dnnl_vanilla_gru = 0x3fff,
        dnnl_lbr_gru = 0x4fff,
        dnnl_vanilla_augru = 0x5fff,
        dnnl_lbr_augru = 0x6fff,
        dnnl_binary_add = 0x1fff0,
        dnnl_binary_mul = 0x1fff1,
        dnnl_binary_max = 0x1fff2,
        dnnl_binary_min = 0x1fff3,
        dnnl_binary_div = 0x1fff4,
        dnnl_binary_sub = 0x1fff5,
        dnnl_binary_ge = 0x1fff6,
        dnnl_binary_gt = 0x1fff7,
        dnnl_binary_le = 0x1fff8,
        dnnl_binary_lt = 0x1fff9,
        dnnl_binary_eq = 0x1fffa,
        dnnl_binary_ne = 0x1fffb,
        dnnl_binary_select = 0x1fffc,
        dnnl_resampling_nearest = 0x2fff0,
        dnnl_resampling_linear = 0x2fff1,
        dnnl_reduction_max,
        dnnl_reduction_min,
        dnnl_reduction_sum,
        dnnl_reduction_mul,
        dnnl_reduction_mean,
        dnnl_reduction_norm_lp_max,
        dnnl_reduction_norm_lp_sum,
        dnnl_reduction_norm_lp_power_p_max,
        dnnl_reduction_norm_lp_power_p_sum,
        dnnl_softmax_accurate = 0x30000,
        dnnl_softmax_log,
    };

Detailed Documentation

Kinds of algorithms.
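The enumerator list mixes explicit and implicit values. In C, an enumerator without an initializer takes the value of the previous enumerator plus one, so for example the value of dnnl_eltwise_tanh follows from dnnl_eltwise_relu. The sketch below mirrors a small subset of the listing under hypothetical `demo_alg_` names (so that it compiles without `dnnl_types.h`); it is an illustration of the language rule, not a replacement for the header.

```c
#include <stdio.h>

/* Hypothetical mirror of a few enumerators from the listing.
 * Explicit values are copied from the listing; the others are implicit. */
typedef enum {
    demo_alg_undef,                       /* implicit: 0 */
    demo_alg_eltwise_relu = 0x20,
    demo_alg_eltwise_tanh,                /* implicit: 0x21 */
    demo_alg_eltwise_elu,                 /* implicit: 0x22 */
    demo_alg_resampling_nearest = 0x2fff0,
    demo_alg_resampling_linear = 0x2fff1,
    demo_alg_reduction_max,               /* implicit: 0x2fff2 */
    demo_alg_reduction_min,               /* implicit: 0x2fff3 */
    demo_alg_softmax_accurate = 0x30000,
    demo_alg_softmax_log                  /* implicit: 0x30001 */
} demo_alg_kind_t;

/* Maps a kind to a printable name; unknown values fall through to "unknown". */
const char *demo_alg_kind_name(demo_alg_kind_t kind) {
    switch (kind) {
    case demo_alg_undef: return "undef";
    case demo_alg_eltwise_relu: return "eltwise_relu";
    case demo_alg_eltwise_tanh: return "eltwise_tanh";
    case demo_alg_eltwise_elu: return "eltwise_elu";
    case demo_alg_resampling_nearest: return "resampling_nearest";
    case demo_alg_resampling_linear: return "resampling_linear";
    case demo_alg_reduction_max: return "reduction_max";
    case demo_alg_reduction_min: return "reduction_min";
    case demo_alg_softmax_accurate: return "softmax_accurate";
    case demo_alg_softmax_log: return "softmax_log";
    }
    return "unknown";
}

/* Prints a kind as "name = 0xVALUE". */
void demo_alg_kind_print(demo_alg_kind_t kind) {
    printf("%s = 0x%x\n", demo_alg_kind_name(kind), (unsigned)kind);
}
```

Because the implicit values depend on declaration order, application code is better off comparing against the symbolic names than against numeric constants.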

Enum Values

dnnl_convolution_direct
Direct convolution.

dnnl_convolution_winograd
Winograd convolution.

dnnl_convolution_auto
Convolution algorithm (either direct or Winograd) is chosen just in time.

dnnl_deconvolution_direct
Direct deconvolution.

dnnl_deconvolution_winograd
Winograd deconvolution.

dnnl_eltwise_relu
Eltwise: ReLU.

dnnl_eltwise_tanh
Eltwise: hyperbolic tangent non-linearity (tanh).

dnnl_eltwise_elu
Eltwise: exponential linear unit (elu).

dnnl_eltwise_square
Eltwise: square.

dnnl_eltwise_abs
Eltwise: abs.

dnnl_eltwise_sqrt
Eltwise: square root.

dnnl_eltwise_linear
Eltwise: linear.

dnnl_eltwise_soft_relu
Eltwise: soft_relu.

dnnl_eltwise_hardsigmoid
Eltwise: hardsigmoid.

dnnl_eltwise_logistic
Eltwise: logistic.

dnnl_eltwise_exp
Eltwise: exponent.

dnnl_eltwise_gelu_tanh
Eltwise: tanh-based gelu.

dnnl_eltwise_swish
Eltwise: swish.

dnnl_eltwise_log
Eltwise: natural logarithm.

dnnl_eltwise_clip
Eltwise: clip.

dnnl_eltwise_clip_v2
Eltwise: clip version 2.

dnnl_eltwise_pow
Eltwise: pow.

dnnl_eltwise_gelu_erf
Eltwise: erf-based gelu.

dnnl_eltwise_round
Eltwise: round.

dnnl_eltwise_mish
Eltwise: mish.

dnnl_eltwise_hardswish
Eltwise: hardswish.

dnnl_eltwise_relu_use_dst_for_bwd
Eltwise: ReLU (dst for backward).

dnnl_eltwise_tanh_use_dst_for_bwd
Eltwise: hyperbolic tangent non-linearity (tanh) (dst for backward).

dnnl_eltwise_elu_use_dst_for_bwd
Eltwise: exponential linear unit (elu) (dst for backward).

dnnl_eltwise_sqrt_use_dst_for_bwd
Eltwise: square root (dst for backward).

dnnl_eltwise_logistic_use_dst_for_bwd
Eltwise: logistic (dst for backward).

dnnl_eltwise_exp_use_dst_for_bwd
Eltwise: exp (dst for backward).

dnnl_eltwise_clip_v2_use_dst_for_bwd
Eltwise: clip version 2 (dst for backward).

dnnl_pooling_max
Max pooling.

dnnl_pooling_avg_include_padding
Average pooling that includes padding.

dnnl_pooling_avg_exclude_padding
Average pooling that excludes padding.
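The two average-pooling kinds differ only in the divisor used for windows that overlap the padded border. A minimal 1D sketch, assuming zero padding and the usual interpretation (divide by the full kernel size when padding is included, divide by the number of in-range elements when it is excluded); `demo_avg_window` is a hypothetical helper, not a library function:

```c
/* Averages one 1D pooling window of width `kernel` that starts at index
 * `start` in a source of length `n`. Indices outside [0, n) are padding
 * and contribute zero to the sum.
 * include_padding != 0: divide by the kernel size.
 * include_padding == 0: divide by the number of in-range elements. */
double demo_avg_window(const double *src, int n, int start, int kernel,
                       int include_padding) {
    double sum = 0.0;
    int valid = 0;
    for (int k = 0; k < kernel; ++k) {
        const int i = start + k;
        if (i >= 0 && i < n) {
            sum += src[i];
            ++valid;
        }
    }
    const int denom = include_padding ? kernel : valid;
    return denom > 0 ? sum / denom : 0.0;
}
```

For the source {3, 6, 9} and a window of width 3 starting one element before the data, the sum is 9 in both cases, but the divisor is 3 with padding included and 2 with padding excluded. Windows that lie fully inside the data give identical results for both kinds.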

dnnl_lrn_across_channels
Local response normalization (LRN) across multiple channels.

dnnl_lrn_within_channel
LRN within a single channel.

dnnl_vanilla_rnn
RNN cell.

dnnl_vanilla_lstm
LSTM cell.

dnnl_vanilla_gru
GRU cell.

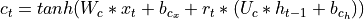

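dnnl_lbr_gru
GRU cell with linear before reset.

Modification of the original GRU cell. Differs from dnnl_vanilla_gru in how the new memory gate is calculated:

$$c_t = \tanh(W_c x_t + b_{c_x} + r_t \cdot (U_c h_{t-1} + b_{c_h}))$$

The primitive expects 4 biases on input: $[b_u, b_r, b_{c_x}, b_{c_h}]$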

dnnl_vanilla_augru
AUGRU cell.

dnnl_lbr_augru
AUGRU cell with linear before reset.

dnnl_binary_add
Binary add.

dnnl_binary_mul
Binary mul.

dnnl_binary_max
Binary max.

dnnl_binary_min
Binary min.

dnnl_binary_div
Binary div.

dnnl_binary_sub
Binary sub.

dnnl_binary_ge
Binary greater or equal.

dnnl_binary_gt
Binary greater than.

dnnl_binary_le
Binary less or equal.

dnnl_binary_lt
Binary less than.

dnnl_binary_eq
Binary equal.

dnnl_binary_ne
Binary not equal.

dnnl_binary_select
Binary select.
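The binary kinds can be read as per-element operations on two inputs. The scalar sketch below is an illustration under two assumptions that this page does not state: comparison kinds produce 1 for true and 0 for false, and select picks between two values based on a separate condition input. The `demo_bin_` names are hypothetical.

```c
/* Hypothetical local selector; not the library's enum. */
typedef enum {
    demo_bin_add, demo_bin_mul, demo_bin_max, demo_bin_min,
    demo_bin_div, demo_bin_sub,
    demo_bin_ge, demo_bin_gt, demo_bin_le, demo_bin_lt,
    demo_bin_eq, demo_bin_ne
} demo_bin_kind_t;

/* Applies one binary operation to a pair of scalars.
 * Comparisons return 1.0f for true and 0.0f for false (assumption). */
float demo_binary(demo_bin_kind_t kind, float a, float b) {
    switch (kind) {
    case demo_bin_add: return a + b;
    case demo_bin_mul: return a * b;
    case demo_bin_max: return a > b ? a : b;
    case demo_bin_min: return a < b ? a : b;
    case demo_bin_div: return a / b;
    case demo_bin_sub: return a - b;
    case demo_bin_ge: return a >= b ? 1.0f : 0.0f;
    case demo_bin_gt: return a > b ? 1.0f : 0.0f;
    case demo_bin_le: return a <= b ? 1.0f : 0.0f;
    case demo_bin_lt: return a < b ? 1.0f : 0.0f;
    case demo_bin_eq: return a == b ? 1.0f : 0.0f;
    case demo_bin_ne: return a != b ? 1.0f : 0.0f;
    }
    return 0.0f;
}

/* Select sketch: returns a when cond is non-zero, otherwise b (assumption). */
float demo_binary_select(float cond, float a, float b) {
    return cond != 0.0f ? a : b;
}
```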

dnnl_resampling_nearest
Nearest-neighbor resampling method.

dnnl_resampling_linear
Linear resampling method.

dnnl_reduction_max
Reduction using max.

dnnl_reduction_min
Reduction using min.

dnnl_reduction_sum
Reduction using sum.

dnnl_reduction_mul
Reduction using mul.

dnnl_reduction_mean
Reduction using mean.

dnnl_reduction_norm_lp_max
Reduction using lp norm.

dnnl_reduction_norm_lp_sum
Reduction using lp norm.

dnnl_reduction_norm_lp_power_p_max
Reduction using lp norm without the final pth root.

dnnl_reduction_norm_lp_power_p_sum
Reduction using lp norm without the final pth root.

dnnl_softmax_accurate
Softmax.

dnnl_softmax_log
Logsoftmax.