Overview

This tutorial describes how to configure Intel® Optane™ DC persistent memory and provision it to one or more KVM/QEMU virtual machines. Prior knowledge of persistent memory is not required.

Prerequisites

To complete this tutorial, you need the following:

- 2nd generation Intel® Xeon® Scalable processor-based platform populated with Intel® Optane™ DC persistent memory modules.

- ipmctl – A utility for configuring and managing Intel Optane DC memory modules.

- ndctl – A utility for managing the non-volatile memory device subsystem in the Linux* kernel.

- KVM/QEMU (Ubuntu*) – Kernel Virtual Machine Installation Guide.

- QEMU version 2.11.1 (any QEMU version starting from 2.6.0 should work).

- Ubuntu 18.10 or later for both the host and guest operating systems (OS).

- A network bridge named br0 to give your VM network access. See the KVM links in the References section at the end of this tutorial for instructions on how to create it.

Steps

For the virtual machines running on a KVM/QEMU server to access persistent memory, you need to configure the persistent memory in app-direct mode. You can do this in one of three ways:

- Using the ipmctl management utility at the OS level.

- Using the ipmctl management utility, but at the Unified Extensible Firmware Interface (UEFI) shell. You can get this ipmctl management utility from the server vendor’s website.

- Using the BIOS options, if available for your system.

This tutorial describes how to use the ipmctl utility at the OS level to configure Intel Optane DC persistent memory. You can also apply the steps at the UEFI level. Due to BIOS differences between vendors and platforms, consult the documentation provided by your platform vendor.

You first use the ipmctl utility to configure the persistent memory so that the operating system can see it. You then use the ndctl utility to create namespaces, on which you can create and mount filesystems.

Install the ipmctl and ndctl Tools in the Host

- Install the ndctl tool:

$ sudo apt install ndctl

- Install the ipmctl tool. Refer to the README.md on the ipmctl GitHub project page for install instructions. Not all Linux distributions provide ipmctl packages in their repositories. Packages are available for some distributions through a package repository hosted by the ipmctl project.

- Use ipmctl to show the available regions in the system. A region is a group of one or more persistent memory modules, also known as an interleaved set.

$ sudo ipmctl show -region

There are no Regions defined in the system.

$

- Create an interleaved app-direct configuration that uses all the available persistent memory in the system by running ipmctl create -goal PersistentMemoryType=AppDirect. Once the configuration goal has been created, reboot the system for the changes to take effect.

$ sudo ipmctl create -goal PersistentMemoryType=AppDirect

The following configuration will be applied:

SocketID | DimmID | MemorySize | AppDirect1Size | AppDirect2Size

==================================================================

0x0000 | 0x0001 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0011 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0021 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0101 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0111 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0121 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1001 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1011 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1021 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1101 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1111 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1121 | 0.000 GiB | 126.000 GiB | 0.000 GiB

Do you want to continue? [y/n] y

Created following region configuration goal

SocketID | DimmID | MemorySize | AppDirect1Size | AppDirect2Size

==================================================================

0x0000 | 0x0001 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0011 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0021 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0101 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0111 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0000 | 0x0121 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1001 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1011 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1021 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1101 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1111 | 0.000 GiB | 126.000 GiB | 0.000 GiB

0x0001 | 0x1121 | 0.000 GiB | 126.000 GiB | 0.000 GiB

A reboot is required to process new memory allocation goals.

$

Example: Configure the memory for app-direct using the ipmctl tool.

- After rebooting the system, run ipmctl show -region again to check your newly created app-direct regions. You should see one region per CPU socket, as shown below.

$ sudo ipmctl show -region

SocketID | ISetID             | PersistentMemoryType | Capacity    | FreeCapacity | HealthState

==========================================================================================

0x0000   | 0xdf287f483ba62ccc | AppDirect            | 756.000 GiB | 0.000 GiB    | Healthy

0x0001   | 0xa7b87f489fa62ccc | AppDirect            | 756.000 GiB | 756.000 GiB  | Healthy

Example: Using the ipmctl tool to show the configured regions.
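
When scripting these steps, it can help to summarize the region table rather than read it by eye. The following is a minimal sketch and not part of ipmctl: summarize_regions is a hypothetical helper that assumes the column layout shown above, with Capacity as the fourth |-separated field. Other ipmctl versions may print different columns.

```shell
# Summarize `ipmctl show -region` output read from stdin.
# Assumption: data rows start with a socket ID such as 0x0000 and the
# Capacity column is the 4th '|'-separated field, as in this tutorial.
summarize_regions() {
    awk -F'|' '
        $1 ~ /^ *0x/ {
            count++
            total += $4 + 0    # "756.000 GiB" is read as the number 756
        }
        END { printf "%d regions, %.3f GiB\n", count, total }
    '
}

# Example use on the host:
#   sudo ipmctl show -region | summarize_regions
```

For the two regions shown above, this would print "2 regions, 1512.000 GiB".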

Install KVM/QEMU

You are now ready to install KVM/QEMU in the system.

- Install KVM/QEMU.

$ sudo apt-get install qemu-kvm libvirt-daemon-system libvirt-clients bridge-utils

- Use the qemu-img command to create a raw virtual disk for the virtual machine (VM).

$ sudo qemu-img create -f raw /VMachines/qemu/vm.raw 20G

Formatting '/VMachines/qemu/vm.raw', fmt=raw size=21474836480
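
The size that qemu-img reports is in bytes, and the 20G argument is interpreted in binary units (GiB). As a quick check, the sketch below uses a hypothetical helper, gib_to_bytes, to reproduce the value in the output above with plain shell arithmetic.

```shell
# Convert a size in GiB to bytes using integer shell arithmetic.
# gib_to_bytes is an illustrative helper, not a qemu-img feature.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 20    # prints 21474836480
```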

Create the Namespaces

Create a namespace using the ndctl tool. Below we create a single namespace using the entire persistent memory capacity of region0.

$ sudo ndctl create-namespace --region=region0

{

"dev":"namespace0.0",

"mode":"fsdax",

"map":"dev",

"size":"744.19 GiB (799.06 GB)",

"uuid":"4bea5f4a-f257-42d4-85b8-3494a05a84c2",

"raw_uuid":"a6f65fff-1cb1-48cb-8a62-ed0b8029a5c3",

"sector_size":512,

"blockdev":"pmem0",

"numa_node":0

}

Example: Using the ndctl tool to create a namespace.

To create a namespace of a specific size (for example, 36 GiB), use the --size option:

$ sudo ndctl create-namespace --region=region0 --size=36g

{

"dev":"namespace0.0",

"mode":"fsdax",

"map":"dev",

"size":"35.44 GiB (38.05 GB)",

"uuid":"d59a9b4a-6755-4156-9b5e-d122ffbe1532",

"sector_size":512,

"align":2097152,

"blockdev":"pmem0",

"numa_node":0

}

Example: Using the ndctl tool to create a namespace with the size option for 36 GiB.

You can repeat the above command to create multiple namespaces in the same region.

The ndctl command creates a new block device under /dev. In the examples above, this is /dev/pmem0, as shown by the blockdev field.
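
The reported namespace sizes are slightly smaller than the capacity requested (35.44 GiB for a 36 GiB request, 744.19 GiB for a 756 GiB region). One plausible explanation is that, with "map":"dev", the kernel stores its per-page metadata inside the namespace itself. The sketch below assumes 64 bytes of metadata per 4 KiB page, which is an assumption about a typical x86-64 kernel rather than something ndctl reports, and ignores smaller alignment effects. The helper name fsdax_usable_gib is hypothetical.

```shell
# Estimate the usable size (in GiB) of an fsdax namespace with map=dev.
# Assumption: 64 bytes of page metadata are reserved per 4096-byte page,
# so roughly 1/64 of the requested capacity is not available for data.
fsdax_usable_gib() {
    awk -v req="$1" 'BEGIN { printf "%.2f\n", req - req * 64 / 4096 }'
}

fsdax_usable_gib 36     # prints 35.44
fsdax_usable_gib 756    # prints 744.19
```

Both estimates agree with the sizes in the ndctl output above, so the approximation is a reasonable way to plan namespace sizes in advance.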

Create and Mount the Filesystem(s)

- Create an XFS or ext4 file system using the /dev/pmem0 block device.

$ sudo mkfs.xfs /dev/pmem0

meta-data=/dev/pmem0 isize=512 agcount=4, agsize=48770944 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=0, rmapbt=0, reflink=0

data = bsize=4096 blocks=195083776, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=95255, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Example: Using the mkfs.xfs command to create a filesystem.

- Create a mount point (/pmemfs0) and mount the filesystem with the dax option.

$ sudo mkdir /pmemfs0

$ sudo mount -o dax /dev/pmem0 /pmemfs0

Example: Using the mkdir and mount commands to create the mount point and mount the filesystem.
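
After mounting, you may want to confirm that the dax option actually took effect. The following is a minimal sketch: has_dax_option is a hypothetical helper that reads lines in /proc/mounts format from stdin and checks the option list of a given mount point. It assumes the option appears as dax, or as dax=always on newer kernels.

```shell
# Succeed (exit status 0) if the given mount point is mounted with DAX.
# Reads /proc/mounts-style lines from stdin: device, mount point,
# filesystem type, comma-separated options, and two trailing numbers.
has_dax_option() {
    awk -v mp="$1" '
        $2 == mp && $4 ~ /(^|,)dax(=always)?(,|$)/ { found = 1 }
        END { exit !found }
    '
}

# Example use on the host:
#   has_dax_option /pmemfs0 < /proc/mounts && echo "DAX is enabled"
```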

Create a QEMU Guest VM and Install the OS

When creating and starting the guest virtual machine for the first time, use the following command-line arguments, which boot an Ubuntu 18.10 ISO image to install the guest operating system. Before you start, run ip a to check which bridged network devices are available. This tutorial uses a bridge named br0; hosts where libvirt manages the network typically provide virbr0 instead. (The References section at the end of this tutorial provides links to instructions for setting up the bridge.)

Use the command below to create and start the guest.

$ sudo qemu-system-x86_64 -drive file=/VMachines/qemu/vm.raw,format=raw,index=0,media=disk \

-m 4G,slots=4,maxmem=32G \

-smp 4 \

-machine pc,accel=kvm,nvdimm=on \

-enable-kvm \

-vnc :0 \

-netdev bridge,br=br0,id=net0 -device virtio-net-pci,netdev=net0 \

-object memory-backend-file,id=mem1,share,mem-path=/pmemfs0/pmem0,size=10G,align=2M \

-device nvdimm,memdev=mem1,id=nv1,label-size=2M \

-cdrom /Installers_and_images/"Operating Systems"/Ubuntu/ubuntu-18.10-server-amd64.iso \

-daemonize

Example: Using the qemu-system-x86_64 command to start the VM and install OS.

The arguments used above are:

- file= specifies the guest disk image, here the raw image created earlier in the /VMachines/qemu/ directory on the host.

- -m starts the guest with 4GB of memory and provides four slots for additional memory devices (including the virtual NVDIMM), up to a maximum of 32GB.

- -smp 4 presents four virtual CPUs to the guest as a symmetric multiprocessing (SMP) system.

- -machine defines the guest machine type to be a PC with KVM acceleration and non-volatile (persistent memory) support.

- -enable-kvm enables full KVM virtualization support.

- -vnc :0 starts a VNC server on display 0 for this guest. The server listens on TCP port 5900 (5900 plus the display number) to provide remote VNC access.

- -netdev defines the network device configuration.

- -object memory-backend-file allows the guest to access persistent memory. We use the -object memory-backend-file,id=mem1,share,mem-path=/pmemfs0/pmem0,size=10G,align=2M option to memory-map 10GB from /pmemfs0/pmem0. The 2MB alignment helps improve performance because a smaller page table is needed when huge pages are used. (Note: The ID should match a memdev entry in the -device list. Here, we use mem1. Multiple -object and -device entries can exist if they have unique identifiers and mem-path files.)

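- -device nvdimm exposes the memory backend to the guest as a virtual NVDIMM. The label-size=2M setting reserves 2MB of the backing file for the NVDIMM label area.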

- -cdrom provides a path to an ISO image from which the guest will boot.

- -daemonize runs the guest virtual machine in the background.
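
The note on -object above says that several -object and -device pairs can coexist if each has a unique identifier and backing file. As a rough sketch of what that looks like, the hypothetical helper below prints one pair per virtual NVDIMM. The IDs (mem1, nv1, ...) and backing-file names (/pmemfs0/pmem0, /pmemfs0/pmem1, ...) simply extend the naming used in this tutorial and are illustrative only.

```shell
# Print one -object/-device argument pair per virtual NVDIMM.
# Uses the global shell variables count, i, and n as scratch space.
nvdimm_args() {
    count=$1
    i=0
    while [ "$i" -lt "$count" ]; do
        n=$((i + 1))
        printf '%s\n' "-object memory-backend-file,id=mem$n,share,mem-path=/pmemfs0/pmem$i,size=10G,align=2M"
        printf '%s\n' "-device nvdimm,memdev=mem$n,id=nv$n,label-size=2M"
        i=$n
    done
}

nvdimm_args 2
```

The output is four lines that can be pasted into the qemu-system-x86_64 command in place of the single pair shown earlier. When adding devices this way, check that the slots and maxmem values passed to -m still cover the guest RAM plus all virtual NVDIMMs.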

Connect to the Guest VM Using VNC

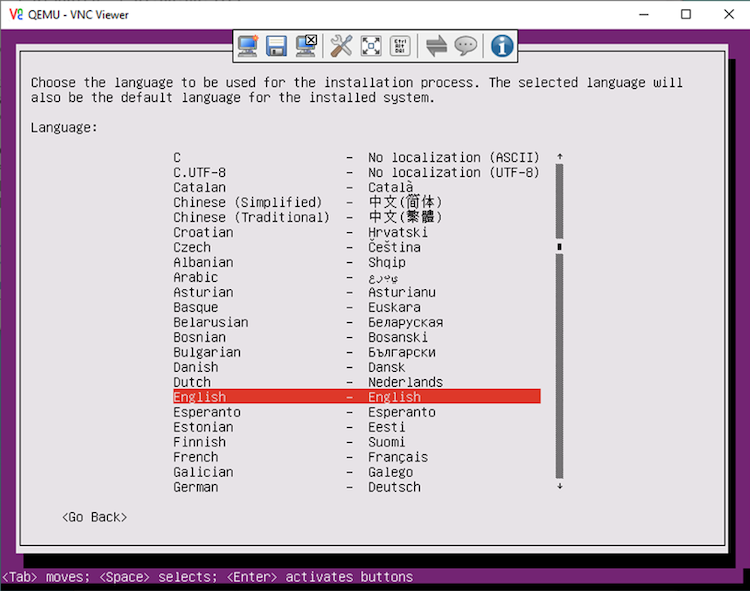

Connect to the VM with a VNC client using display :0 on the host (TCP port 5900). For the first boot of the VM, you will need to run through the OS installation, shown in Figure 1.

Figure 1. Guest VM OS installation through the VNC connection.
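
If you run several guests on the same host, each one needs its own VNC display number. The TCP port follows from the display number as 5900 plus the display. The helper vnc_port below is a hypothetical name used only to illustrate the arithmetic.

```shell
# Map a VNC display number (the N in "-vnc :N") to its TCP port.
vnc_port() {
    echo $(( 5900 + $1 ))
}

vnc_port 0    # prints 5900
vnc_port 1    # prints 5901
```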

Start the QEMU Guest VM

Once the OS has been installed on the VM, you can remove or comment out the -cdrom option when starting the guest VM, as shown below.

$ sudo qemu-system-x86_64 -drive file=/VMachines/qemu/vm.raw,format=raw,index=0,media=disk \

-m 4G,slots=4,maxmem=32G \

-smp 4 \

-machine pc,accel=kvm,nvdimm=on \

-enable-kvm \

-vnc :0 \

-netdev bridge,br=br0,id=net0 -device virtio-net-pci,netdev=net0 \

-object memory-backend-file,id=mem1,share,mem-path=/pmemfs0/pmem0,size=10G,align=2M \

-device nvdimm,memdev=mem1,id=nv1,label-size=2M \

-daemonize

Example: Using the qemu-system-x86_64 command to start the VM.

Configuring the Intel® Optane™ DC Persistent Memory Within the Guest VM

Persistent memory is attached to the VM as a special block device. With the VM booted, check that /dev/pmem0 exists. If you attached multiple virtual NVDIMMs using the -object and -device command arguments, you will see multiple persistent memory devices under /dev.

Install the ndctl Tool Within the Guest VM

You need to install the ndctl tool within your guest VM using the package manager of your favorite Linux distribution.

$ sudo apt install ndctl

Use ndctl list to check the namespace mode within the VM, as shown below. This should be raw by default under the VM.

$ sudo ndctl list

{

"dev":"namespace0.0",

"mode":"raw",

"size":10735321088,

"sector_size":512,

"blockdev":"pmem0",

"numa_node":0

}

$

Example: Check the "mode" of the namespace0.0.

As currently configured, the pmem0 device can only be used for block I/O. This is because the namespace is configured in raw mode by default, which does not support the dax option. The dax option is what allows you to memory map persistent memory and access it directly through loads and stores from user space.
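
The raw namespace size reported inside the guest (10735321088 bytes) is slightly less than the 10G given to -object on the host. The numbers are consistent with the 2M label area from the -device option being taken out of the backing file. The sketch below checks that arithmetic; nvdimm_guest_bytes is a hypothetical helper, and the subtraction reflects that assumption rather than anything ndctl prints.

```shell
# Guest-visible bytes of a virtual NVDIMM, assuming the label area is
# carved out of the backing file.
#   $1 = backing size in GiB (size=10G), $2 = label size in MiB (label-size=2M)
nvdimm_guest_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 - $2 * 1024 * 1024 ))
}

nvdimm_guest_bytes 10 2    # prints 10735321088
```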

Change the Default Namespace Type to fsdax within the Guest

- To change the mode, run ndctl create-namespace -f -e with the namespace name, and specify mode fsdax, as shown below.

$ sudo ndctl create-namespace -f -e namespace0.0 --mode=fsdax

{

"dev":"namespace0.0",

"mode":"fsdax",

"map":"dev",

"size":"9.84 GiB (10.57 GB)",

"uuid":"3ac049f8-bcf6-4e30-b74f-7ed33916e405",

"raw_uuid":"b9942cdd-502c-4e31-af51-46ac5d5a1f93",

"sector_size":512,

"blockdev":"pmem0",

"numa_node":0

}

$

Example: Change the namespace mode from raw to fsdax.

- When the command completes, you can check whether your namespace is in fsdax mode by running ndctl list -N, shown below.

$ ndctl list -N

{

"dev":"namespace0.0",

"mode":"fsdax",

"map":"dev",

"size":10565451776,

"uuid":"3ac049f8-bcf6-4e30-b74f-7ed33916e405",

"raw_uuid":"b9942cdd-502c-4e31-af51-46ac5d5a1f93",

"sector_size":512,

"blockdev":"pmem0",

"numa_node":0

}

$

Example: Confirm the namespace mode as fsdax.
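
In a provisioning script, you may prefer to test the mode rather than inspect the output by eye. The following minimal sketch extracts the "mode" field from ndctl list -N output. namespace_mode is a hypothetical helper that relies on the one-field-per-line layout shown above and reports only the first namespace. A real JSON parser would be more robust.

```shell
# Print the value of the first "mode" field found on stdin.
# Line-oriented: assumes each JSON field is on its own line, as in the
# ndctl output shown in this tutorial.
namespace_mode() {
    sed -n 's/.*"mode"[ ]*:[ ]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Example use inside the guest:
#   [ "$(ndctl list -N | namespace_mode)" = "fsdax" ] && echo "ready for DAX"
```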

Once your namespace is in fsdax mode, the namespace configuration is complete. Next, create the filesystem and mount it (see the example below) as follows:

- Create a mount point for your persistent memory.

- Create a filesystem in the device using either ext4 or xfs.

- Mount the device on the mount point you created, using the dax option.

$ sudo mkfs.xfs -f /dev/pmem0

meta-data=/dev/pmem0 isize=512 agcount=4, agsize=644864 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=0, rmapbt=0, reflink=0

data = bsize=4096 blocks=2579456, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

$ sudo mkdir /pmemfs0

$ sudo mount -o dax /dev/pmem0 /pmemfs0

$

Example: Create the filesystem and mount the pmem0 device within the guest VM so that it can access the persistent memory.

Conclusion

In this tutorial, you set up your Intel Optane DC persistent memory in app-direct mode using the ipmctl and ndctl tools, and then applied similar steps within the guest VM. Applications can now use the persistent memory through standard file APIs or the Persistent Memory Developer Kit (PMDK). The PMDK is a suite of libraries that allows developers to create a new application, or modify an existing one, to access persistent memory directly. Direct access bypasses the filesystem page cache and the kernel I/O path to provide the fastest access to persistent memory.

References

- Get Started with Intel® Optane™ DC Persistent Memory

- Quick Start Guide: Provision Intel® Optane™ DC Persistent Memory

- Provision Intel® Optane™ DC Persistent Memory in Linux*

- ipmctl is a utility for configuring and managing Intel Optane DC memory modules.

- ndctl is a utility library for managing the libnvdimm (non-volatile memory device) subsystem in the Linux kernel.

- ipmctl and ndctl Cheat Sheets

- KVM/QEMU Ubuntu Host - How to create a bridged-network

- KVM Ubuntu – How to create bridged-network configuration to be used by the QEMU

- KVM: Creating a bridged network with NetPlan on Ubuntu bionic

- Intel® Optane™ DC Persistent Memory Overview

- Configuring Intel® Optane™ DC Persistent Memory for Best Performance