This article is part three of three articles to introduce the reader to persistent memory.

- Learn More Part 1 - Introduction

- Learn More Part 2 - Persistent Memory Architecture

- Learn More Part 3 - Operating System Support for Persistent Memory

Note: This article will be making references to chapters within the Programming Persistent Memory ebook on pmem.io

Operating System Support for Persistent Memory

This article is the third in a series. It describes how operating systems manage persistent memory as a platform resource and the options applications have for using it. We first compare memory and storage in popular computer architectures and then explain how operating systems have been extended for persistent memory.

Operating System Support for Memory and Storage

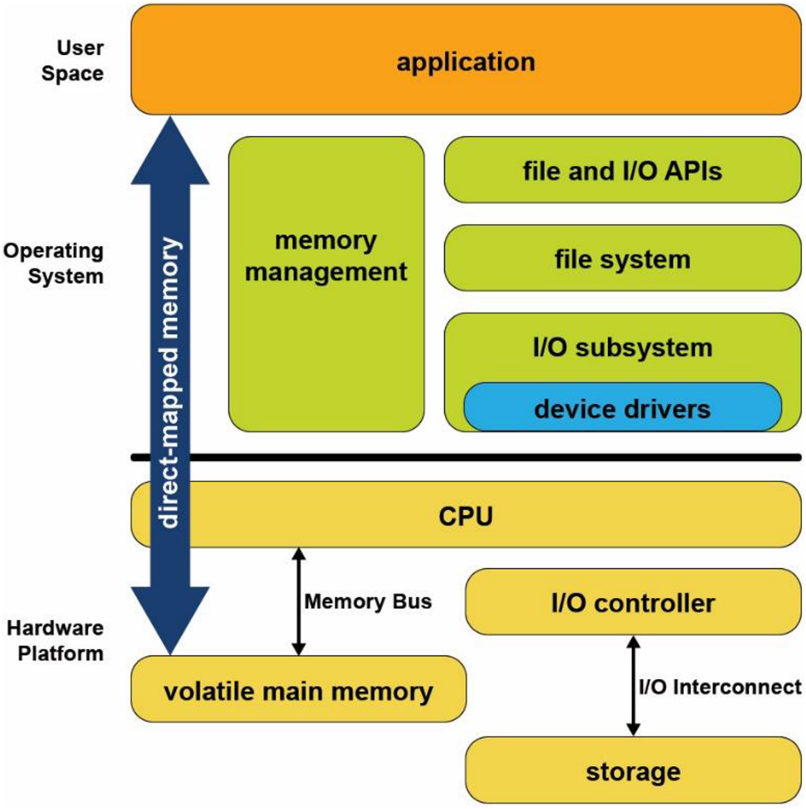

Figure 1 shows a simplified view of how operating systems manage storage and volatile memory. As shown, the volatile main memory is attached directly to the CPU through a memory bus. The operating system manages the mapping of memory regions directly into the application's visible memory address space. Storage, which usually operates at speeds much slower than the CPU, is attached through an I/O controller. The operating system handles access to the storage through device driver modules loaded into the operating system's I/O subsystem.

Combining direct application access to volatile memory with the operating system I/O access to storage devices supports the most common application programming model taught in introductory programming classes. In this model, developers allocate data structures and operate on them at byte granularity in memory. When the application wants to save data, it uses standard file API system calls to write the data to an open file. Within the operating system, the file system executes this write by performing one or more I/O operations to the storage device. Because these I/O operations are usually much slower than CPU speeds, the operating system typically suspends the application until the I/O completes.
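The conventional model described above can be sketched in a few lines of C. This is a minimal illustration, not code from the ebook: the `counter_record` type and the `save_record` and `load_record` helpers are hypothetical names introduced here. The data lives in an ordinary in-memory structure, and durability requires an explicit trip through the file API.

```c
#include <fcntl.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical record type used only for this illustration. */
struct counter_record {
	uint64_t id;
	uint64_t value;
};

/*
 * Save a record with the standard file API. Returns 0 on success, -1 on error.
 * write() copies the bytes into the kernel; fsync() blocks the caller until
 * the storage device reports that the data has been written.
 */
int
save_record(const char *path, const struct counter_record *rec)
{
	int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
	if (fd < 0)
		return -1;

	ssize_t n = write(fd, rec, sizeof(*rec));
	if (n != (ssize_t)sizeof(*rec) || fsync(fd) < 0) {
		close(fd);
		return -1;
	}
	return close(fd);
}

/* Load the record back into memory. Returns 0 on success, -1 on error. */
int
load_record(const char *path, struct counter_record *rec)
{
	int fd = open(path, O_RDONLY);
	if (fd < 0)
		return -1;

	ssize_t n = read(fd, rec, sizeof(*rec));
	close(fd);
	return n == (ssize_t)sizeof(*rec) ? 0 : -1;
}
```

An application would update the structure in memory (for example, `rec.value += 1`) and then call `save_record()` whenever the change must survive a restart. The `fsync()` call is where the program waits on the storage device.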

Since persistent memory can be accessed directly by applications and can persist data in place, it allows operating systems to support a new programming model that combines the performance of memory with the persistence of a non-volatile storage device. Fortunately for developers, while the first generation of persistent memory was under development, Microsoft Windows and Linux designers, architects, and developers collaborated in the Storage Networking Industry Association (SNIA) to define a standard programming model. Hence, the methods for using persistent memory described in this article are available in both operating systems. More details can be found in the SNIA NVM programming model specification.

Persistent Memory As Block Storage

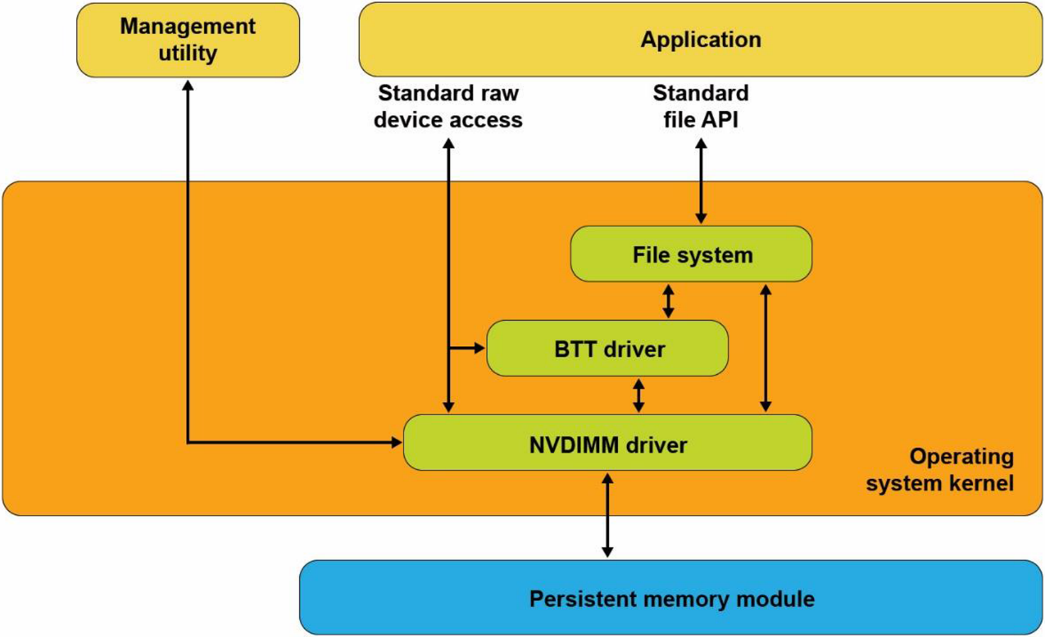

The first operating system extension for persistent memory is the ability to detect the existence of persistent memory modules and load a device driver into the operating system's I/O subsystem, as shown in Figure 2. This NVDIMM driver serves two essential functions. First, it provides an interface for management and system administrator utilities to configure and monitor the persistent memory hardware state. Second, it functions similarly to the storage device drivers.

The NVDIMM driver presents persistent memory to applications and operating system modules as a fast block storage device. This means applications, file systems, volume managers, and other storage middleware layers can use persistent memory the same way they use storage today, without modifications.

Figure 2 also shows the Block Translation Table (BTT) driver, which can be optionally configured into the I/O subsystem. Storage devices such as HDDs and SSDs present a native block size, with 512 bytes and 4 KB being two common sizes. Some storage devices, especially NVM Express SSDs, guarantee that when a power failure or server failure occurs while a block write is in flight, either all or none of the block will be written. The BTT driver provides the same guarantee when using persistent memory as a block storage device. Most applications and file systems depend on this atomic write guarantee and should be configured to use the BTT driver. However, operating systems can also bypass the BTT driver for applications that implement their own protection against partial block updates.
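The article does not describe how the BTT driver achieves all-or-nothing block writes. The sketch below is a toy in-memory model of the general indirection idea: new data is copied into a spare block, and only then is a small map entry switched over. It is an assumption-laden illustration, not the real BTT on-media layout, and every name in it (`toy_btt`, `toy_btt_write`, and so on) is hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE 512          /* logical block size presented upward */
#define NLBA       4            /* number of logical blocks (tiny, for illustration) */
#define NPHYS      (NLBA + 1)   /* one spare physical block to write into */

/* Toy model only: this is NOT the real BTT on-media format. */
struct toy_btt {
	uint8_t  media[NPHYS][BLOCK_SIZE]; /* stands in for persistent memory */
	uint32_t map[NLBA];                /* logical block -> physical block */
	uint32_t free_block;               /* the currently unused physical block */
};

void
toy_btt_init(struct toy_btt *b)
{
	memset(b, 0, sizeof(*b));
	for (uint32_t i = 0; i < NLBA; i++)
		b->map[i] = i;
	b->free_block = NLBA;
}

/*
 * Write one logical block. If crash_after_bytes < BLOCK_SIZE, simulate a
 * power failure after that many bytes were copied: the map is never updated.
 * Returns 0 if the write completed, -1 if the simulated failure interrupted it.
 */
int
toy_btt_write(struct toy_btt *b, uint32_t lba, const uint8_t *data,
    size_t crash_after_bytes)
{
	uint32_t target = b->free_block;
	size_t n = crash_after_bytes < BLOCK_SIZE ? crash_after_bytes : BLOCK_SIZE;

	/* Step 1: copy into the spare block, never over live data. */
	memcpy(b->media[target], data, n);
	if (n < BLOCK_SIZE)
		return -1; /* torn copy, but no reader can reach it */

	/* Step 2: publish with one small map update; the old block becomes spare. */
	uint32_t old = b->map[lba];
	b->map[lba] = target;
	b->free_block = old;
	return 0;
}

/* Readers always follow the map, so they see a complete old or new block. */
const uint8_t *
toy_btt_read(const struct toy_btt *b, uint32_t lba)
{
	return b->media[b->map[lba]];
}
```

In this model an interrupted write leaves the previous contents of the logical block fully intact, which is the guarantee file systems rely on. A real driver must also make the map update itself durable and recoverable, which this sketch leaves out.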

Persistent Memory-Aware File Systems

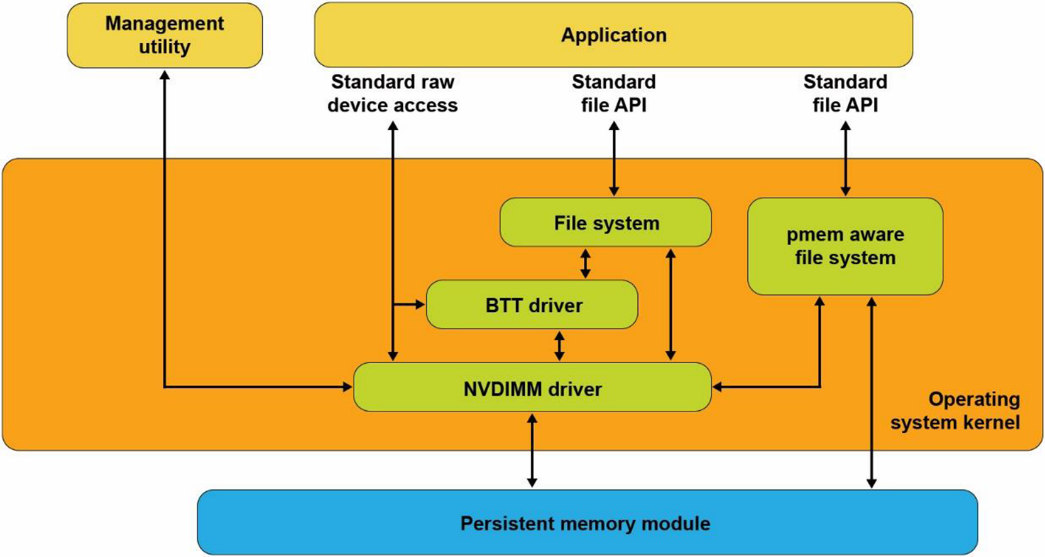

The next extension to the operating system is to make the file system aware of and be optimized for persistent memory. File systems that have been extended for persistent memory include Linux ext4 and XFS, and Microsoft Windows NTFS.

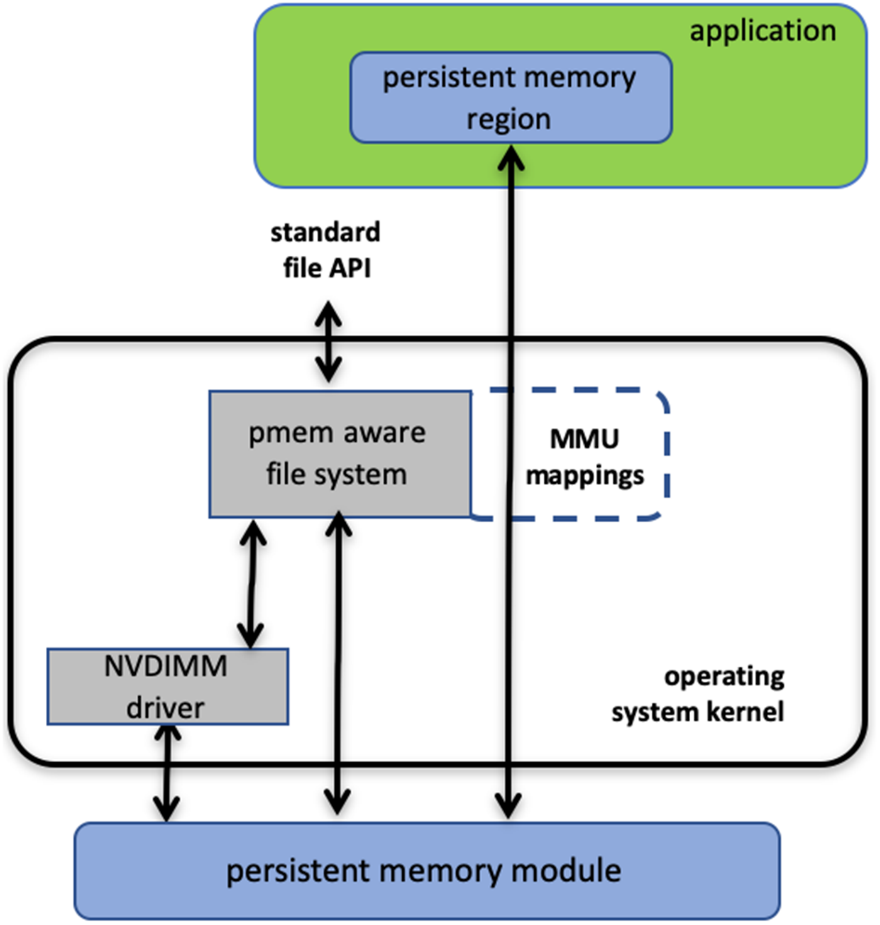

As shown in Figure 3, these file systems can either use the block driver in the I/O subsystem or bypass the I/O subsystem to use persistent memory as byte-addressable load/store memory directly. Applications that use the direct access (DAX) method achieve the fastest and shortest path to data stored in persistent memory.

In addition to eliminating the I/O operation, the DAX method enables small data writes to be executed faster than conventional block storage devices that require the file system to perform a read-modify-write operation.
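To make the read-modify-write cost concrete, the following sketch counts the bytes a block device must transfer for a small update. It is a simplified accounting model under stated assumptions: nothing is cached, every partially covered block is read before being written back whole, and the function name `block_update_bytes_moved` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Bytes transferred to and from a block device to update `len` bytes at byte
 * `offset`. Every touched block is written whole; blocks that are only
 * partially covered by the update must also be read first.
 */
uint64_t
block_update_bytes_moved(uint64_t offset, uint64_t len, uint64_t block_size)
{
	if (len == 0)
		return 0;

	uint64_t first = offset / block_size;
	uint64_t last = (offset + len - 1) / block_size;
	uint64_t touched = last - first + 1;

	int head_partial = (offset % block_size) != 0;
	int tail_partial = ((offset + len) % block_size) != 0;
	uint64_t partial;

	if (first == last)
		partial = (head_partial || tail_partial) ? 1 : 0;
	else
		partial = (uint64_t)head_partial + (uint64_t)tail_partial;

	return (partial + touched) * block_size;
}
```

Under these assumptions, changing 8 bytes inside a 4096-byte block moves 8192 bytes (one block read plus one block written), whereas with DAX the application stores the 8 bytes in place.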

These persistent memory-aware file systems continue to present the familiar, standard file APIs to applications, including the open, close, read, and write system calls. This allows applications to keep using those APIs while benefiting from the higher performance of persistent memory.

Memory-Mapped Files

Before describing the next operating system option for using persistent memory, this section reviews memory-mapped files in Linux and Windows. When memory mapping a file, the operating system adds a range to the application's virtual address space, which corresponds to a range of the file, paging file data into physical memory as required. This allows an application to access and modify file data as byte-addressable in-memory data structures. This can improve performance and simplify application development, especially for applications that make frequent, small updates to file data.

Applications memory-map a file by first opening the file, then passing the resulting file handle to the mmap() system call in Linux or, through a file mapping object, to MapViewOfFile() in Windows. Both return a pointer to the in-memory copy of a portion of the file. The code snippet below shows an example of Linux C code that memory maps a file, writes data into the file by accessing it like memory, and then uses the msync() system call to perform the I/O operation that writes the modified data to the file on the storage device. The second code sample below shows the equivalent operations on Windows. We walk through and highlight the key steps in both code samples.

#include <err.h>

#include <fcntl.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/mman.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <unistd.h>

int

main(int argc, char *argv[])

{

int fd;

struct stat stbuf;

char *pmaddr;

if (argc != 2) {

fprintf(stderr, "Usage: %s filename\n",

argv[0]);

exit(1);

}

if ((fd = open(argv[1], O_RDWR)) < 0)

err(1, "open %s", argv[1]);

if (fstat(fd, &stbuf) < 0)

err(1, "stat %s", argv[1]);

/*

* Map the file into our address space for read

* & write. Use MAP_SHARED so stores are visible

* to other programs.

*/

if ((pmaddr = mmap(NULL, stbuf.st_size,

PROT_READ|PROT_WRITE,

MAP_SHARED, fd, 0)) == MAP_FAILED)

err(1, "mmap %s", argv[1]);

/* Don't need the fd anymore because the mapping stays around */

close(fd);

/* store a string to the Persistent Memory */

strcpy(pmaddr, "This is new data written to the file");

/*

* Simplest way to flush is to call msync().

* The length needs to be rounded up to a 4k page.

*/

if (msync((void *)pmaddr, 4096, MS_SYNC) < 0)

err(1, "msync");

printf("Done.\n");

exit(0);

}

- Argument check and open(): We verify that the caller passed a file name that can be opened. Because open() is called with O_RDWR and without O_CREAT, the file must already exist.

- fstat(): We retrieve the file statistics so that we can use the file length when we memory map the file.

- mmap(): We map the file into the application's address space to allow our program to access the contents as if in memory. In the second parameter, we pass the length of the file, requesting Linux to map the full file. We map the file with both READ and WRITE access, and as SHARED so that other processes can map the same file.

- close(): We close the file descriptor, which is no longer needed once the file is mapped.

- strcpy(): We write data into the file by accessing it like memory through the pointer returned by mmap().

- msync(): We explicitly flush the newly written string to the backing storage device.
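The example flushes from the start of the mapping, which is page-aligned by construction. When flushing a range in the middle of a mapping, Linux msync() rejects a start address that is not a multiple of the page size. The helper below is a minimal sketch of one way to handle that; `flush_mapped_range` is a hypothetical name, and the page size is queried at run time rather than assumed to be 4 KB.

```c
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Flush the pages covering [addr, addr + len) of a MAP_SHARED file mapping.
 * The start address is aligned down to a page boundary and the length is
 * grown by the same amount, so the whole range is still covered.
 * Returns 0 on success, -1 on error (errno is set by msync or sysconf).
 */
int
flush_mapped_range(void *addr, size_t len)
{
	long page = sysconf(_SC_PAGESIZE);
	if (page <= 0)
		return -1;

	uintptr_t start = (uintptr_t)addr & ~((uintptr_t)page - 1);
	size_t span = len + (size_t)((uintptr_t)addr - start);

	/* MS_SYNC blocks until the affected pages have been written back. */
	return msync((void *)start, span, MS_SYNC);
}
```

With this helper, a program that modified a few bytes at `pmaddr + 5000` could call `flush_mapped_range(pmaddr + 5000, nbytes)` instead of computing the enclosing page by hand.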

The next C code example shows the equivalent operations on Windows. It memory maps a file, writes data into the file, and then uses the FlushViewOfFile() and FlushFileBuffers() system calls to flush the modified data to the file on the storage device.

#include <fcntl.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <Windows.h>

int

main(int argc, char *argv[])

{

if (argc != 2) {

fprintf(stderr, "Usage: %s filename\n",

argv[0]);

exit(1);

}

/* Open the file; OPEN_EXISTING requires that it already exists */

HANDLE fh = CreateFile(argv[1],

GENERIC_READ|GENERIC_WRITE,

0,

NULL,

OPEN_EXISTING,

FILE_ATTRIBUTE_NORMAL,

NULL);

if (fh == INVALID_HANDLE_VALUE) {

fprintf(stderr, "CreateFile, gle: 0x%08x",

GetLastError());

exit(1);

}

/*

* Get the file length for use when

* memory mapping later

* */

DWORD filelen = GetFileSize(fh, NULL);

if (filelen == 0) {

fprintf(stderr, "GetFileSize, gle: 0x%08x",

GetLastError());

exit(1);

}

/* Create a file mapping object */

HANDLE fmh = CreateFileMapping(fh,

NULL, /* security attributes */

PAGE_READWRITE,

0,

0,

NULL);

if (fmh == NULL) {

fprintf(stderr, "CreateFileMapping, gle: 0x%08x", GetLastError());

exit(1);

}

/*

* Map into our address space and get a pointer

* to the beginning

* */

char *pmaddr = (char *)MapViewOfFileEx(fmh,

FILE_MAP_ALL_ACCESS,

0,

0,

filelen,

NULL); /* hint address */

if (pmaddr == NULL) {

fprintf(stderr, "MapViewOfFileEx, gle: 0x%08x", GetLastError());

exit(1);

}

/*

* On windows must leave the file handle(s)

* open while mmaped

* */

/* Store a string to the beginning of the file */

strcpy(pmaddr, "This is new data written to the file");

/*

* Flush this page with length rounded up to 4K

* page size

* */

if (FlushViewOfFile(pmaddr, 4096) == FALSE) {

fprintf(stderr, "FlushViewOfFile, gle: 0x%08x", GetLastError());

exit(1);

}

/* Flush the complete file to backing storage */

if (FlushFileBuffers(fh) == FALSE) {

fprintf(stderr, "FlushFileBuffers, gle: 0x%08x", GetLastError());

exit(1);

}

/* Explicitly unmap before closing the file */

if (UnmapViewOfFile(pmaddr) == FALSE) {

fprintf(stderr, "UnmapViewOfFile, gle: 0x%08x", GetLastError());

exit(1);

}

CloseHandle(fmh);

CloseHandle(fh);

printf("Done.\n");

exit(0);

}

- Argument check and CreateFile(): As in the previous Linux example, we take the file name passed through argv and open the file.

- GetFileSize(): We retrieve the file size to use later when memory mapping.

- CreateFileMapping(): We take the first step to memory mapping a file by creating the file mapping object. This step does not yet map the file into our application's memory space.

- MapViewOfFileEx(): This step maps the file into our memory space.

- strcpy(): As in the previous Linux example, we write a string to the beginning of the file, accessing the file like memory.

- FlushViewOfFile(): We flush the modified memory page to the backing storage.

- FlushFileBuffers(): We flush the full file to backing storage, including any additional file metadata maintained by Windows.

- UnmapViewOfFile() and CloseHandle(): We unmap the file, close the file, then exit the program.

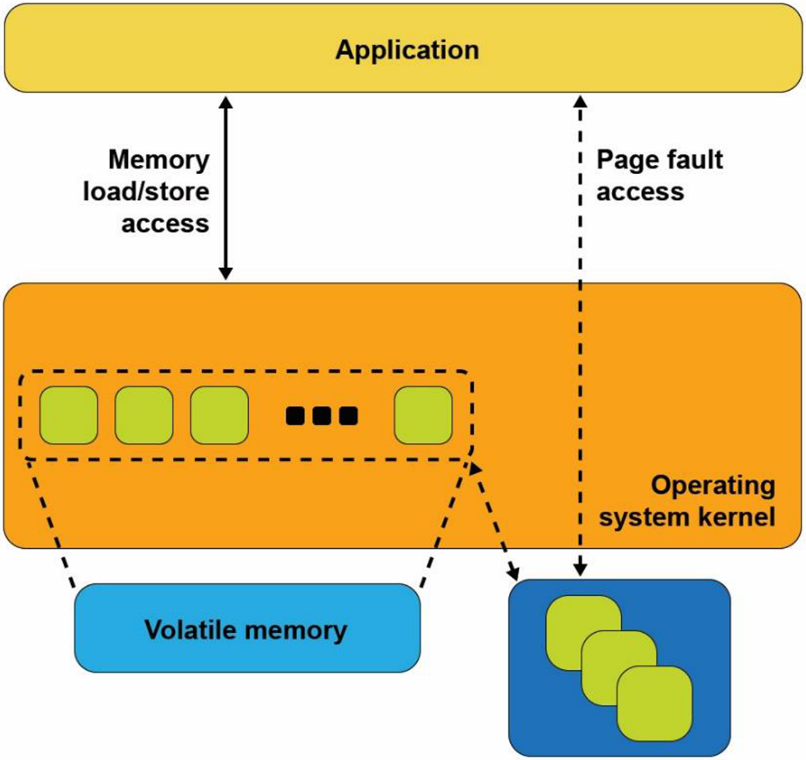

Figure 4 shows what happens inside the operating system when an application calls mmap() on Linux or CreateFileMapping() on Windows. The operating system allocates memory from its memory page cache, maps that memory into the application's address space, and creates the association with the file through a storage device driver.

When the application accesses a page of the file that is not present in memory, a page fault exception is raised to the operating system, which then reads that page into main memory through storage I/O operations. The operating system also tracks writes to those memory pages and schedules asynchronous I/O operations to write the modifications back to the primary copy of the file on the storage device. Alternatively, if the application wants to ensure updates are written back to storage before continuing, as we did in our code example, it calls msync on Linux or FlushViewOfFile on Windows to flush the changes to disk. This may cause the operating system to suspend the program until the write finishes, similar to the file-write operation described earlier.

This description of memory-mapped files using storage highlights some of the disadvantages. First, a portion of the limited kernel memory page cache in main memory is used to store a copy of the file. Second, for files that cannot fit in memory, the application may experience unpredictable pauses as the operating system moves pages between memory and storage through I/O operations. Third, updates to the in-memory copy are not persistent until written back to storage, so they can be lost in the event of a failure.

Persistent Memory Direct Access (DAX)

The persistent memory direct access feature in operating systems, referred to as DAX in Linux and Windows, uses the memory-mapped file interfaces described in the previous section but takes advantage of persistent memory's native ability to store data and be used as memory. Persistent memory can be natively mapped as application memory, eliminating the operating system's need to cache files in volatile main memory.

To use DAX, the system administrator creates a file system on the persistent memory module and mounts that file system into the operating system's file system tree. For Linux users, persistent memory devices appear as /dev/pmem* device special files. To show the persistent memory physical devices, system administrators can use the ndctl and ipmctl utilities shown in the bash window below.

# ipmctl show -dimm

DimmID | Capacity | HealthState | ActionRequired | LockState | FWVersion

==============================================================================

0x0001 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x0011 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x0021 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x0101 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x0111 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x0121 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1001 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1011 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1021 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1101 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1111 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

0x1121 | 252.4 GiB | Healthy | 0 | Disabled | 01.02.00.5367

# ipmctl show -region

SocketID | ISetID | PersistentMemoryType | Capacity | FreeCapacity | HealthState

===========================================================================================

0x0000 | 0x2d3c7f48f4e22ccc | AppDirect | 1512.0 GiB | 0.0 GiB | Healthy

0x0001 | 0xdd387f488ce42ccc | AppDirect | 1512.0 GiB | 1512.0 GiB | Healthy

Listing 3-4. Displaying persistent memory physical devices, regions, and namespaces on Linux

# ndctl list -DRN

{

"dimms":[

{

"dev":"nmem1",

"id":"8089-a2-1837-00000bb3",

"handle":17,

"phys_id":44,

"security":"disabled"

},

{

"dev":"nmem3",

"id":"8089-a2-1837-00000b5e",

"handle":257,

"phys_id":54,

"security":"disabled"

},

[...snip...]

{

"dev":"nmem8",

"id":"8089-a2-1837-00001114",

"handle":4129,

"phys_id":76,

"security":"disabled"

}

],

"regions":[

{

"dev":"region1",

"size":1623497637888,

"available_size":1623497637888,

"max_available_extent":1623497637888,

"type":"pmem",

"iset_id":-2506113243053544244,

"mappings":[

{

"dimm":"nmem11",

"offset":268435456,

"length":270582939648,

"position":5

},

{

"dimm":"nmem10",

"offset":268435456,

"length":270582939648,

"position":1

},

{

"dimm":"nmem9",

"offset":268435456,

"length":270582939648,

"position":3

},

{

"dimm":"nmem8",

"offset":268435456,

"length":270582939648,

"position":2

},

{

"dimm":"nmem7",

"offset":268435456,

"length":270582939648,

"position":4

},

{

"dimm":"nmem6",

"offset":268435456,

"length":270582939648,

"position":0

}

],

"persistence_domain":"memory_controller"

},

{

"dev":"region0",

"size":1623497637888,

"available_size":0,

"max_available_extent":0,

"type":"pmem",

"iset_id":3259620181632232652,

"mappings":[

{

"dimm":"nmem5",

"offset":268435456,

"length":270582939648,

"position":5

},

{

"dimm":"nmem4",

"offset":268435456,

"length":270582939648,

"position":1

},

{

"dimm":"nmem3",

"offset":268435456,

"length":270582939648,

"position":3

},

{

"dimm":"nmem2",

"offset":268435456,

"length":270582939648,

"position":2

},

{

"dimm":"nmem1",

"offset":268435456,

"length":270582939648,

"position":4

},

{

"dimm":"nmem0",

"offset":268435456,

"length":270582939648,

"position":0

}

],

"persistence_domain":"memory_controller",

"namespaces":[

{

"dev":"namespace0.0",

"mode":"fsdax",

"map":"dev",

"size":1598128390144,

"uuid":"06b8536d-4713-487d-891d-795956d94cc9",

"sector_size":512,

"align":2097152,

"blockdev":"pmem0"

}

]

}

]

}

When a file system is created and mounted using /dev/pmem* devices, they can be identified using the df command, as shown in this bash window below.

$ df -h /dev/pmem*

Filesystem Size Used Avail Use% Mounted on

/dev/pmem0 1.5T 77M 1.4T 1% /mnt/pmemfs0

/dev/pmem1 1.5T 77M 1.4T 1% /mnt/pmemfs1

Windows developers will use PowerShell cmdlets, as shown in the next two PowerShell windows below. In either case, assuming the administrator has granted you rights to create files, you can create one or more files in the persistent memory and then memory map those files into your application using the memory-mapping method described earlier.

PS C:\Users\Administrator> Get-PmemDisk

Number Size Health Atomicity Removable Physical device IDs Unsafe shutdowns

------ ---- ------ --------- --------- ------------------- ----------------

2 249 GB Healthy None True {1} 36

PS C:\Users\Administrator> Get-Disk 2 | Get-Partition

PartitionNumber DriveLetter Offset Size Type

--------------- ----------- ------ ---- ----

1 24576 15.98 MB Reserved

2 D 16777216 248.98 GB Basic

Managing persistent memory as files has several benefits:

- You can leverage the rich features of leading file systems to organize, manage, name, and limit access to users' persistent memory files and directories.

- You can apply the familiar file system permissions and access rights management to protect data stored in persistent memory and share persistent memory between multiple users.

- System administrators can use existing backup tools that rely on file system revision-history tracking.

- You can build on existing memory mapping APIs as described earlier, and applications that currently use memory-mapped files can use persistent memory directly without modifications.

Once a file backed by persistent memory is created and opened, an application still calls mmap() or MapViewOfFile() to get a pointer to the persistent media. The difference, shown in Figure 5, is that the persistent memory-aware file system recognizes that the file is on persistent memory and programs the memory management unit (MMU) in the CPU to map the persistent memory directly into the application's address space. Neither a copy in kernel memory nor synchronizing to storage through I/O operations is required. The application can use the pointer returned by mmap() or MapViewOfFile() to operate on its data in place, directly in the persistent memory. Because no kernel I/O operations are required and the full file is mapped into the application's memory, the application can manipulate large collections of data objects with higher and more consistent performance than files on I/O-accessed storage.
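On Linux, an application can ask the kernel directly whether a mapping has DAX semantics. The sketch below is not from the article: it uses the Linux-specific MAP_SYNC flag together with MAP_SHARED_VALIDATE, which the kernel accepts only for files on a DAX-capable file system, and falls back to an ordinary page-cache mapping otherwise. The function name `map_file_prefer_dax` is hypothetical, and the preprocessor guards cover C libraries that do not define these flags.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE
#endif
#include <stddef.h>
#include <sys/mman.h>

/*
 * Map `len` bytes of an open file for read and write.
 * *is_sync is set to 1 if the kernel granted a MAP_SYNC mapping (the file
 * supports DAX) and to 0 if an ordinary shared mapping was used instead.
 * Returns the mapping address, or MAP_FAILED if both attempts fail.
 */
void *
map_file_prefer_dax(int fd, size_t len, int *is_sync)
{
	*is_sync = 0;

#if defined(MAP_SYNC) && defined(MAP_SHARED_VALIDATE)
	/* MAP_SHARED_VALIDATE makes the kernel reject flags it cannot honor. */
	void *addr = mmap(NULL, len, PROT_READ | PROT_WRITE,
	    MAP_SHARED_VALIDATE | MAP_SYNC, fd, 0);
	if (addr != MAP_FAILED) {
		*is_sync = 1;
		return addr;
	}
#endif

	/* Not a DAX file, or the flags are unavailable: use the page cache. */
	return mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
}
```

When `is_sync` comes back as 0, the program should keep flushing with msync() as in the earlier memory-mapped file example. Libraries such as libpmem, used in the next example, hide this decision behind a single call.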

The following snippet shows a C source code example that uses DAX to write a string directly into persistent memory. This example uses libpmem, one of the persistent memory API libraries available on Linux and Windows. We describe the use of two of the functions available in libpmem in the following steps. The APIs in libpmem are common across Linux and Windows and abstract the differences between the underlying operating system APIs, so this sample code is portable across both operating system platforms.

#include <sys/types.h>

#include <sys/stat.h>

#include <fcntl.h>

#include <stdio.h>

#include <errno.h>

#include <stdlib.h>

#ifndef _WIN32

#include <unistd.h>

#else

#include <io.h>

#endif

#include <string.h>

#include <libpmem.h>

/* Using 4K of pmem for this example */

#define PMEM_LEN 4096

int

main(int argc, char *argv[])

{

char *pmemaddr;

size_t mapped_len;

int is_pmem;

if (argc != 2) {

fprintf(stderr, "Usage: %s filename\n",

argv[0]);

exit(1);

}

/* Create a pmem file and memory map it. */

if ((pmemaddr = pmem_map_file(argv[1], PMEM_LEN,

PMEM_FILE_CREATE, 0666, &mapped_len,

&is_pmem)) == NULL) {

perror("pmem_map_file");

exit(1);

}

/* Store a string to the persistent memory. */

char s[] = "This is new data written to the file";

strcpy(pmemaddr, s);

/* Flush our string to persistence. */

if (is_pmem)

pmem_persist(pmemaddr, sizeof(s));

else

pmem_msync(pmemaddr, sizeof(s));

/* Delete the mappings. */

pmem_unmap(pmemaddr, mapped_len);

printf("Done.\n");

exit(0);

}

- Platform-specific includes (#ifndef _WIN32): We handle the differences between Linux and Windows for the include files.

- #include <libpmem.h>: We include the header file for the libpmem API used in this example.

- Argument check: We take the pathname from the command-line argument.

- pmem_map_file(): The pmem_map_file function in libpmem handles opening the file and mapping it into our address space on both Windows and Linux. Since the file resides on persistent memory, the operating system programs the hardware MMU in the CPU to map the persistent memory region into our application's virtual address space. The pointer pmemaddr is set to the beginning of that region. The pmem_map_file function can be used both for memory mapping disk-based files through kernel main memory and for directly mapping persistent memory, so is_pmem is set to true if the file resides on persistent memory and false if it is mapped through main memory.

- strcpy(): We write a string into persistent memory.

- pmem_persist() or pmem_msync(): If the file resides on persistent memory, the pmem_persist function uses userspace machine instructions to ensure our string is flushed through the CPU cache levels to the power-fail safe domain and ultimately to persistent memory. If our file resided on disk-based storage, Linux msync or Windows FlushViewOfFile would be used to flush it to storage. Note that we can pass small sizes here (the size of the string written is used in this example) instead of requiring flushes at page granularity as when using msync() or FlushViewOfFile().

- pmem_unmap(): Finally, we unmap the persistent memory region.
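The granularity difference mentioned in the flush step can be put into numbers. The sketch below computes how many bytes are covered when a range is rounded out to whole flush units. It assumes a 64-byte CPU cache line, which is typical of x86-64 but is not stated in the article, and `flush_span` is a hypothetical helper rather than a libpmem function.

```c
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE_SIZE 64    /* assumed cache line size, typical of x86-64 */
#define PAGE_SIZE_4K    4096  /* page size used in the msync() examples */

/*
 * Bytes covered when [addr, addr + len) is rounded out to whole units of
 * `unit` bytes. `unit` must be a power of two.
 */
size_t
flush_span(uintptr_t addr, size_t len, size_t unit)
{
	if (len == 0)
		return 0;

	uintptr_t mask = ~((uintptr_t)unit - 1);
	uintptr_t first = addr & mask;             /* start of first unit */
	uintptr_t last = (addr + len - 1) & mask;  /* start of last unit */

	return (size_t)(last - first) + unit;
}
```

For the 37-byte string in the example (36 characters plus the terminating NUL), a flush at cache line granularity covers 64 bytes when the string starts on a line boundary and 128 bytes when it straddles two lines, compared with a full 4096-byte page for a page-granular flush.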

Summary

The image below shows the complete view of the operating system support that this article describes. As we discussed, an application can use persistent memory as a fast SSD, more directly through a persistent memory-aware file system, or mapped directly into the application's memory space with the DAX option. DAX leverages operating system services for memory-mapped files but takes advantage of the server hardware's ability to map persistent memory directly into the application's address space. This avoids the need to move data between main memory and storage.