Overview

Explore the capabilities of your developer kit by running tutorials that use the OpenVINO™ toolkit, an open-source toolkit for optimizing and deploying AI inference on Intel® platforms. The toolkit supports workloads such as computer vision, automatic speech recognition, and natural language processing, using models trained with popular frameworks such as TensorFlow* and PyTorch*.

Upon successful completion of this guide, you should be able to:

- Understand the hardware setup of the developer kit

- Get started with deep learning inference for computer vision on the CPU and integrated graphics processing unit (GPU) using the OpenVINO™ toolkit

- Know where to find tutorials and references to expand your knowledge of the OpenVINO™ toolkit

Prerequisites

| Recommended Knowledge and Experience | You are familiar with executing Linux* commands. |
| Quick Start Card (included with the kit) |
| Prepare the following |

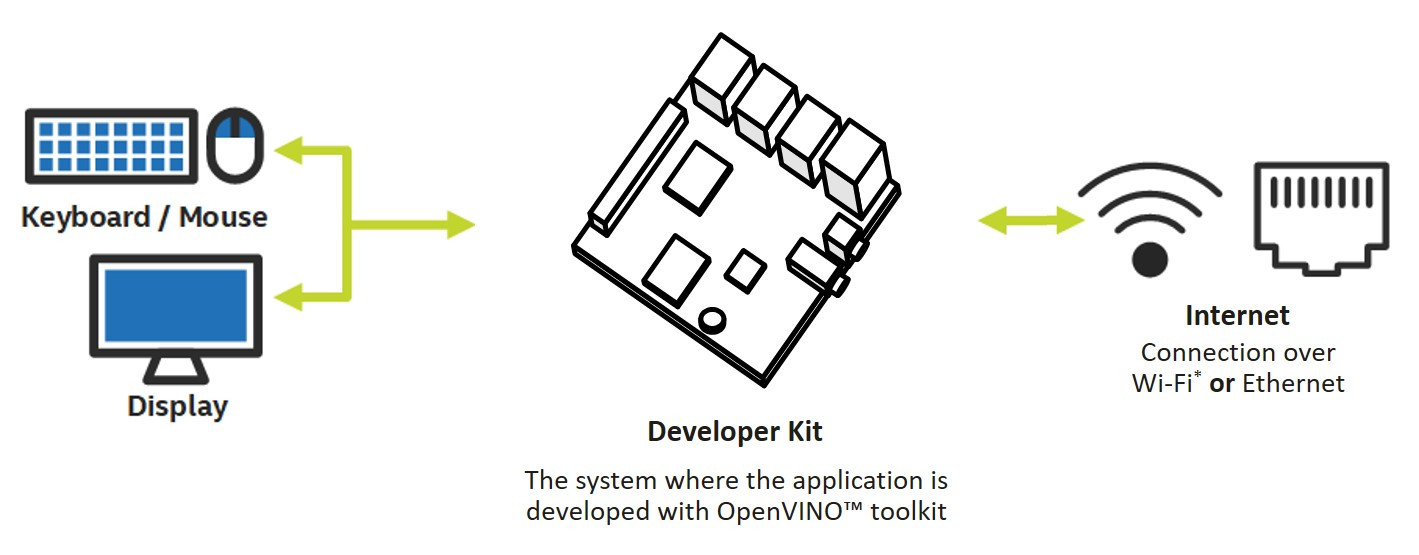

How It Works

Get Started

STEP 1: Install the Operating System

Perform the following procedure to install the operating system on the developer kit.

- On your PC, download the Ubuntu* 20.04.5 LTS image (ISO, 3.6 GB).

- Create a bootable flash drive with the Rufus* tool.

- Insert the bootable flash drive and power on the developer kit.

If the developer kit does not boot from the bootable flash drive, change the boot priority in the system BIOS.

- Follow the prompts to install the operating system with the default configurations. For detailed instructions, see the Ubuntu guide.

- Power down the developer kit and remove the USB flash drive.

- Power on the developer kit. Ubuntu should start, confirming that the installation succeeded.

- Connect the developer kit to the internet before you proceed to the next step.
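
If you download the image on a Linux PC, you can confirm that it arrived intact before writing it to the flash drive. Ubuntu publishes SHA-256 checksums for its release images in a SHA256SUMS file next to the ISO. The bash function below is a minimal sketch and is not part of Ubuntu or this guide (the name `verify_iso` is hypothetical). It compares the digest computed by `sha256sum` from GNU coreutils with the published value.

```shell
# verify_iso: hypothetical helper (not part of Ubuntu or this guide) that
# compares a downloaded image with an expected SHA-256 digest, for example
# the value listed for that image in Ubuntu's SHA256SUMS file.
# Usage: verify_iso <path-to-iso> <expected-sha256-hex>
verify_iso() {
  local iso=$1 expected actual
  # Normalize the expected digest to lowercase, as printed by sha256sum
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  if [ ! -f "$iso" ]; then
    echo "File not found: $iso" >&2
    return 2
  fi
  # sha256sum prints "<digest>  <file>"; keep only the digest field
  actual=$(sha256sum "$iso" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "Checksum OK: $iso"
  else
    echo "Checksum MISMATCH for $iso: expected $expected, got $actual" >&2
    return 1
  fi
}
```

Run it as `verify_iso <downloaded-iso> <published-digest>`. A nonzero exit status means you should repeat the download before creating the bootable drive.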

STEP 2: Install OpenVINO™ Development Tools

Users in China may have trouble downloading some of these packages. If that happens, try downloading from an alternative mirror server.

Perform the following procedure on the developer kit.

- Update your package lists.

sudo apt update

- Install the package required to create a Python* virtual environment.

sudo apt install python3-venv

- Create a Python* virtual environment. This avoids dependency conflicts with the Python* software installed on the system.

python3 -m venv openvino_env

- Activate the Python* virtual environment.

source openvino_env/bin/activate

- Upgrade pip to the latest version.

python -m pip install --upgrade pip

- Install OpenVINO™ Development Tools with the TensorFlow* 2.x, ONNX*, and PyTorch* frameworks. This step may take a while, depending on your internet connection. The installation is complete when the command prompt reappears.

pip install openvino-dev[onnx,tensorflow2,pytorch]==2022.2.0

- Verify the installation.

mo -h

You will see the help message for Model Optimizer, indicating that the installation succeeded.
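
Every later step assumes that `openvino_env` is active in the current terminal. As a quick check, the bash function below (the name `check_openvino_env` is hypothetical) tests the `VIRTUAL_ENV` variable, which the venv `activate` script exports. It also uses `command -v` to confirm that the `mo` command installed by `openvino-dev` is on the PATH. This is a minimal sketch, not an official OpenVINO tool.

```shell
# check_openvino_env: hypothetical helper that confirms the virtual
# environment from this step is active and that Model Optimizer is installed.
# It relies on VIRTUAL_ENV, which the venv "activate" script exports.
check_openvino_env() {
  if [ -z "${VIRTUAL_ENV:-}" ]; then
    echo "No virtual environment is active; run: source openvino_env/bin/activate" >&2
    return 1
  fi
  # "mo" is the Model Optimizer command installed by openvino-dev
  if ! command -v mo >/dev/null 2>&1; then
    echo "mo not found on PATH; repeat the openvino-dev installation" >&2
    return 2
  fi
  echo "OK: using $VIRTUAL_ENV"
}
```

Paste the function into the terminal, then run `check_openvino_env`. It prints `OK: using <path>` when both checks pass and returns 1 or 2 otherwise.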

STEP 3: Try Out Some Demos

Before you start, set up the packages and models you need for the demos. Perform the procedures in this section on the developer kit.

- Install the dependency packages.

sudo apt install git wget

- Clone the Open Model Zoo for OpenVINO™ Toolkit repository, which contains the demo applications.

git clone --recurse-submodules https://github.com/openvinotoolkit/open_model_zoo.git

- Install the requirements for the demos.

pip install open_model_zoo/demos/common/python
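
Both demos in this guide start a Python script whose path follows the same pattern inside the cloned repository: `open_model_zoo/demos/<demo-name>/python/<demo-name>.py`. The bash sketch below (hypothetical name `omz_demo_script`) resolves that path and fails early if the clone did not complete. That failure is easier to diagnose than an error from the `python` command. The pattern is inferred from the two demos used here and may not hold for every Open Model Zoo demo.

```shell
# omz_demo_script: hypothetical helper that prints the path of an Open Model
# Zoo Python demo script, assuming the layout used by both demos in this guide:
#   <omz-root>/demos/<demo-name>/python/<demo-name>.py
# Usage: omz_demo_script <demo-name> [omz-root]   (omz-root defaults to open_model_zoo)
omz_demo_script() {
  local demo=$1 root=${2:-open_model_zoo}
  local script="$root/demos/$demo/python/$demo.py"
  if [ ! -f "$script" ]; then
    echo "Demo script not found: $script (did the git clone complete?)" >&2
    return 1
  fi
  echo "$script"
}
```

For example, run from the directory where you cloned the repository, `omz_demo_script object_detection_demo` prints `open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py`.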

The following sections provide step-by-step instructions for running each demo. Together, the demos showcase the capabilities of Intel® platforms with the OpenVINO™ toolkit.

Demo 1: Person or Object Detection

This demo uses a pretrained deep learning model from Intel to detect people in a pre-recorded video. Inference runs on the CPU of the developer kit.

- Download the model with Model Downloader.

omz_downloader --name person-detection-retail-0013 -o models

- Download the pre-recorded video in which the model will detect people.

wget https://github.com/intel-iot-devkit/sample-videos/raw/master/one-by-one-person-detection.mp4

- Run the demo. Depending on the configuration of your developer kit, you can expect an average frame rate of about 15 fps.

python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -m=models/intel/person-detection-retail-0013/FP32/person-detection-retail-0013.xml -i=one-by-one-person-detection.mp4 -at ssd

Use the keyboard shortcut Ctrl + C to close the demo window.

You can use the same procedure to download other object detection models and run them on the same sample video. To learn more, see https://github.com/openvinotoolkit/open_model_zoo/blob/master/demos/object_detection_demo/python/README.md
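
To repeat the demo with another device or model, you can wrap the command in a function. The bash function below is a hypothetical sketch and is not part of Open Model Zoo. It works in three steps:

1. It derives the IR path from the `models/intel/<name>/FP32/<name>.xml` layout that `omz_downloader` produced in this demo.
2. It checks that both IR files (the `.xml` topology and the `.bin` weights) exist.
3. It runs the same command as above with an explicit `-d` device.

It keeps `-at ssd`, so it only fits models with the same SSD-style output. Other models may need a different `-at` value; see the demo README.

```shell
# run_detection_demo: hypothetical wrapper around the Demo 1 command.
# Assumptions: it is run from the directory that holds open_model_zoo/,
# models/, and the sample video; the model is an Intel model stored under
# models/intel/ and uses the SSD output format (-at ssd).
# Usage: run_detection_demo [model-name] [device]
# Set DRY_RUN=1 to print the command instead of running it.
run_detection_demo() {
  local model=${1:-person-detection-retail-0013}
  local device=${2:-CPU}
  local xml="models/intel/$model/FP32/$model.xml"
  # An OpenVINO IR model is a pair of files: .xml (topology) and .bin (weights)
  if [ ! -f "$xml" ] || [ ! -f "${xml%.xml}.bin" ]; then
    echo "IR files missing for $model; run: omz_downloader --name $model -o models" >&2
    return 1
  fi
  # Rebuild the positional parameters as the demo's argument list
  set -- python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py \
    -m="$xml" -i=one-by-one-person-detection.mp4 -at ssd -d "$device"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

`DRY_RUN=1 run_detection_demo` prints the Demo 1 command without running it. `run_detection_demo person-detection-retail-0013 GPU` targets the integrated GPU, which requires the GPU driver installation described in Demo 3.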

Demo 2: Automatic Speech Recognition

This demo uses a pretrained automatic speech recognition (ASR) deep learning model to transcribe a pre-recorded speech sample into text.

- Download the model with Model Downloader.

omz_downloader --name quartznet-15x5-en -o models

- Convert the model to the OpenVINO™ Toolkit Intermediate Representation (IR) format.

omz_converter --name quartznet-15x5-en -d models

- Install the required dependency package.

pip install librosa

- Download a pre-recorded audio file to use as the ASR sample.

wget http://www.fit.vutbr.cz/~motlicek/sympatex/f2bjrop1.0.wav -O sample_audio.wav

- Run the demo.

python open_model_zoo/demos/speech_recognition_quartznet_demo/python/speech_recognition_quartznet_demo.py -m=models/public/quartznet-15x5-en/FP32/quartznet-15x5-en.xml -i=sample_audio.wav

Use the keyboard shortcut Ctrl + C to stop the demo.

To learn more about the OpenVINO™ Toolkit APIs, see https://github.com/openvinotoolkit/openvino_notebooks
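
The ASR demo reads the recording from a WAV file, and the sample comes from a third-party URL. Before running the demo, you can confirm that the file really is a WAV file and see its channel count and sample rate. The bash function below (hypothetical name `wav_info`) reads those fields directly from the file header with `od`. It makes two assumptions:

- The file uses the canonical 44-byte PCM header, in which the `fmt ` chunk directly follows the RIFF header.
- The host is little-endian, such as the developer kit's x86_64 CPU.

It does not check which sample rate the demo expects.

```shell
# wav_info: hypothetical helper that prints the channel count and sample rate
# stored in a WAV header. Assumes the canonical 44-byte PCM layout and a
# little-endian host (od reads multi-byte integers in host byte order).
# Usage: wav_info <file.wav>
wav_info() {
  local f=$1 channels rate
  # Bytes 0-3 must be "RIFF" and bytes 8-11 must be "WAVE"
  if [ "$(head -c 4 "$f" 2>/dev/null)" != "RIFF" ] ||
     [ "$(dd if="$f" bs=1 skip=8 count=4 2>/dev/null)" != "WAVE" ]; then
    echo "Not a RIFF/WAVE file: $f" >&2
    return 1
  fi
  # Bytes 22-23: number of channels; bytes 24-27: sample rate in Hz
  channels=$(od -An -t u2 -j 22 -N 2 "$f" | tr -d ' ')
  rate=$(od -An -t u4 -j 24 -N 4 "$f" | tr -d ' ')
  echo "channels=$channels sample_rate=$rate"
}
```

Run `wav_info sample_audio.wav` in the directory where you downloaded the sample. If it reports that the file is not a RIFF/WAVE file, the download probably returned an error page instead of audio.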

Demo 3: Person or Object Detection Using the Integrated Graphics Processing Unit (GPU) to Run Inference

This advanced demo runs inference on the integrated GPU to detect people in a pre-recorded video.

- Download the OpenVINO™ Toolkit runtime package.

wget https://storage.openvinotoolkit.org/repositories/openvino/packages/2022.2/linux/l_openvino_toolkit_ubuntu20_2022.2.0.7713.af16ea1d79a_x86_64.tgz

- Extract the package.

tar xf l_openvino_toolkit_ubuntu20_2022.2.0.7713.af16ea1d79a_x86_64.tgz

- Create the openvino directory in the /opt/intel path.

sudo mkdir -p /opt/intel/openvino

- Move the contents of the package to the /opt/intel/openvino directory.

sudo mv l_openvino_toolkit_ubuntu20_2022.2.0.7713.af16ea1d79a_x86_64/* /opt/intel/openvino

- Navigate to the /opt/intel/openvino directory and run the setupvars.sh script to set up the environment variables.

cd /opt/intel/openvino
source setupvars.sh

- Install the OpenCL™ GPU driver.

cd install_dependencies/
sudo -E ./install_NEO_OCL_driver.sh

- Reboot the developer kit.

sudo reboot

- When the desktop appears after the reboot, open a terminal in the directory where you created the openvino_env Python* virtual environment, and activate it.

source openvino_env/bin/activate

- Download the model and the pre-recorded video. You can skip this step if you completed Demo 1 earlier.

omz_downloader --name person-detection-retail-0013 -o models
wget https://github.com/intel-iot-devkit/sample-videos/raw/master/one-by-one-person-detection.mp4

- Run inference on the integrated GPU. This is the same command as in Demo 1, with the additional option -d GPU. Depending on the configuration of your developer kit, you can expect an average frame rate of about 25 fps.

python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -m=models/intel/person-detection-retail-0013/FP32/person-detection-retail-0013.xml -i=one-by-one-person-detection.mp4 -at ssd -d GPU

Use the keyboard shortcut Ctrl + C to close the demo window.
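
If the GPU run fails, one thing to check is whether the integrated GPU is reachable from user space. The bash function below is a hypothetical check. It assumes that the GPU driver exposes a DRM render node named `renderD*` under `/dev/dri`; on Ubuntu 20.04 these nodes are typically owned by the `render` group. It reports whether such a node exists and whether the current user can open it for reading and writing.

```shell
# check_gpu_access: hypothetical helper. Assumption: the integrated GPU is
# exposed through DRM render nodes named renderD* (e.g. /dev/dri/renderD128).
# Usage: check_gpu_access [dri-dir]   (dri-dir defaults to /dev/dri)
check_gpu_access() {
  local dri=${1:-/dev/dri} node found=0
  for node in "$dri"/renderD*; do
    # An unmatched glob stays literal, so skip names that do not exist
    [ -e "$node" ] || continue
    found=1
    if [ -r "$node" ] && [ -w "$node" ]; then
      echo "OK: $node is accessible"
      return 0
    fi
  done
  if [ "$found" -eq 1 ]; then
    echo "Render node present but not accessible; check that your user is in the render group" >&2
    return 2
  fi
  echo "No render node found under $dri; is the GPU driver installed?" >&2
  return 1
}
```

You can also ask OpenVINO which devices it sees. From inside the activated virtual environment, run `python -c "from openvino.runtime import Core; print(Core().available_devices)"`. After a successful driver installation, the list should include `GPU` as well as `CPU`.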

Conclusion

You have now run computer vision inference on both the CPU and the integrated GPU of the developer kit.

In this get started guide, we have explored three demos that showcase computer vision and speech recognition capabilities on Intel® architecture.

Learn More

Explore additional documentation on the OpenVINO™ toolkit and related training resources:

OpenVINO™ toolkit is an open-source toolkit for optimizing and deploying AI inference.

Open Model Zoo for OpenVINO™ Toolkit provides a wide variety of free, pre-trained deep learning models and demo applications for you to try out deep learning in Python*, C++*, or OpenCV* Graph API (G-API).

OpenVINO™ Notebooks is a collection of ready-to-run Jupyter* notebooks for learning and experimenting with the OpenVINO™ Toolkit. The notebooks introduce OpenVINO basics and show developers how to use the available APIs for optimized deep learning inference.

Intel® OpenVINO™ AI Vision Certificate Course is an online training course that includes virtual classroom instruction and hands-on projects, where you learn to use Intel® developer tools and platforms to create your own portfolio of edge AI solutions. Build your portfolio and learn new, marketable skills to prepare yourself for jobs of the future.

Troubleshooting, Support, and Additional Information

For troubleshooting or additional help regarding your developer kit, refer to the manufacturer’s product manual or website.

OpenVINO™ Toolkit Documentation:

https://docs.openvino.ai/latest/documentation.html

OpenVINO™ Toolkit Troubleshooting:

https://docs.openvino.ai/latest/openvino_docs_get_started_guide_troubleshooting.html

OpenVINO™ GitHub:

https://github.com/openvinotoolkit/openvino