Introduction

This vehicle advanced monitoring system (VAMS) solution is intended for vehicular systems supporting the CAN protocol. The system uses the On-Board Diagnostics II (OBD-2) port of the targeted vehicle for data acquisition. VAMS is an intelligent framework that acquires raw data from a car and saves it online so that the owner can access it globally through a web portal. One possible use case is a car rental company that wants to track real-time statistics for its vehicles.

The roles of VAMS include:

- Access raw data from the targeted car using the OBD-2 port.

- Save the data to servers to be used by authorized users globally.

- Capture and process visual feed from a dash-mounted camera at edge level.

- Perform analytics on the data acquired for analysis of vehicle performance, driving conditions, etc.

- Visualize the data live in a local dashboard or through an app that can be used globally.

- Track a car in real time, capturing primary parameters such as speed, engine revolutions per minute (RPM), fuel consumption, etc.

- Act as a car’s data recorder, which can be used by insurance companies.

This article examines how to acquire edge-telemetry and then route the data to the cloud for processing.

Overview and Architecture

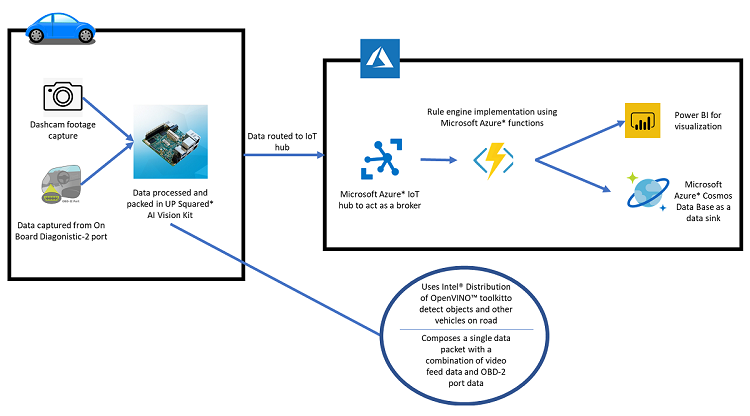

The VAMS approach consists of two major systems: one at the edge level and one at the cloud level using Microsoft Azure*. The overall architecture, shown in Figure 1, can be divided into two major components:

- Edge level: the system components within the vehicle that are responsible for data acquisition and video processing using the Intel® Distribution of OpenVINO™ toolkit.

- Cloud level: the system components that are responsible for handling the processed data and passing it through the rule engine for visualization.

Note: The main purpose for using the cloud in VAMS is to remotely monitor the vehicle. A common use case scenario might be for an owner whose vehicle is loaned or rented out.

Figure 1. Architecture

The edge system uses the ELM327 integrated circuit (IC) for OBD-2 port interaction and an UP Squared* AI Vision Kit as both the dash-cam and the primary edge computing device. A series of Python* scripts run in the background, along with a Node.js process that processes the data and routes it to the Internet of Things (IoT) hub.

Edge System

Primary processing happens at the edge level, in two processes. The first acquires data through the OBD-2 port; the second processes the live video feed. Getting data from the OBD-2 port is straightforward: the python-OBD library handles the communication, and the result is a JSON string of primary parameters.

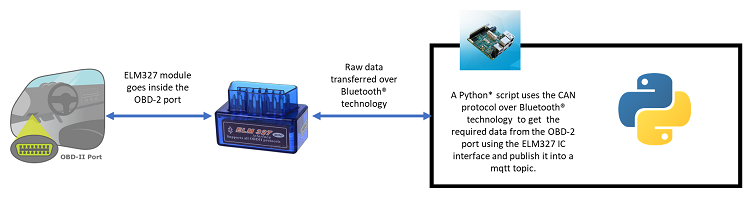

OBD-2 uses the CAN protocol for communication. The ELM327 IC interacts with the OBD-2 port over CAN to get the data. For now, the focus is on three primary parameters:

- Engine RPM

- Vehicle speed

- Engine coolant temperature

The raw data is converted into a JSON structure of key-value pairs. A single Python script is responsible for publishing the data to a topic on a locally hosted MQTT broker.

Figure 2. Edge flow

Next, the system processes video locally to determine the number of vehicles present on the road at any given time, which allows the telemetry parameters to be interpreted more effectively. For this, the Intel Distribution of OpenVINO toolkit is used to run inference. An object detection algorithm detects objects on the road by class (other cars, buses, trucks, pedestrians) to support this analysis.

The following describes how video frames are processed to generate textual data, which is merged with the telemetry data and sent to the cloud.

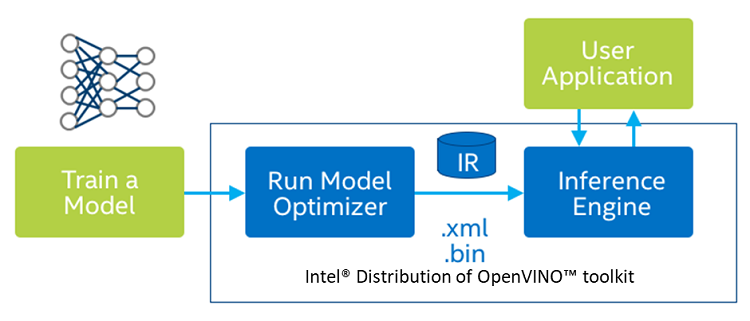

This approach uses the model optimizer to convert and optimize the model for a user application to consume. The .xml and .bin files generated by the model optimizer are consumed by the inference engine through the Python* API, which is essentially a wrapper built on top of the C++ core.

Figure 3. Diagram of Intel® Distribution of OpenVINO™ toolkit flow

For the trained model, this approach uses a single-shot detector (SSD) framework with a tuned MobileNetV1 feature extractor as the object recognition model, which has nearly 90 classes. Of those, only six are of interest, as listed in the JSON below.

["person", "bicycle", "car", "motorcycle", "bus", "truck"]
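Restricting the detector output to these six classes can be sketched as a simple filter. This is a minimal illustration, not the article's implementation: the `(label, confidence)` tuple layout, the `filter_detections` name, and the 0.5 confidence threshold are all assumptions.

```python
# Classes of interest, taken from the list above.
CLASSES_OF_INTEREST = {"person", "bicycle", "car", "motorcycle", "bus", "truck"}


def filter_detections(detections, min_confidence=0.5):
    """Keep (label, confidence) pairs for relevant classes above a threshold.

    The tuple layout and the default threshold are assumptions for this sketch.
    """
    return [
        (label, confidence)
        for label, confidence in detections
        if label in CLASSES_OF_INTEREST and confidence >= min_confidence
    ]


# Hypothetical detector output for one frame.
detections = [("car", 0.91), ("traffic light", 0.88), ("person", 0.42), ("bus", 0.67)]
kept = filter_detections(detections)
```

Here the traffic light is dropped because it is not a class of interest, and the low-confidence person is dropped by the threshold.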

Cloud System

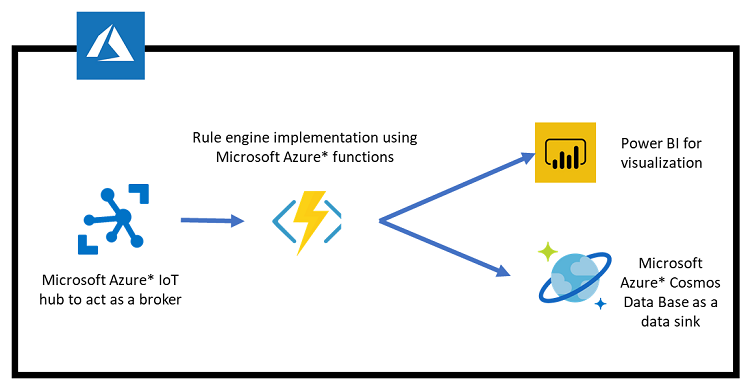

The cloud system is based on Microsoft Azure. Work is underway to port it to Amazon Web Services (AWS)*. Irrespective of the cloud service used, the overall architecture will remain the same. The process is triggered when the primary Python node sends a message to the IoT hub through MQTT. The data is then routed to an Azure function, where the rule engine is implemented, and is finally sent to the Microsoft Power BI dashboard and the Azure Cosmos DB database. The flow diagram is shown in Figure 4.

Figure 4. Cloud data flow

This version does not yet perform user-level segregation; work is underway to integrate a custom Node.js web app that provides it, along with authentication.

Getting Data from OBD-2 Port

With the hardware in place, reading data from the OBD-2 port asynchronously requires an additional Python dependency: the python-OBD library.

To read a value, OBD-2 requires sending the parameter ID (PID) that identifies it. For example, to read the vehicle speed, send PID 0D and the system returns the current speed. All the data required here is available from Mode 01 of the OBD-2 command list.
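To make the request and response exchange concrete, the sketch below decodes the data bytes of Mode 01 responses for the three primary parameters using the standard OBD-2 formulas. The `decode_pid` helper is hypothetical and exists only for illustration; the example response bytes are made up.

```python
# Illustrative decoding of Mode 01 responses; decode_pid is a hypothetical helper.

def decode_pid(pid: int, data: bytes):
    """Convert the data bytes of a Mode 01 response into a physical value."""
    if pid == 0x0C:  # engine RPM: two bytes, quarter-rpm resolution
        return (data[0] * 256 + data[1]) / 4
    if pid == 0x0D:  # vehicle speed: one byte, km/h
        return data[0]
    if pid == 0x05:  # engine coolant temperature: one byte, offset of -40 degC
        return data[0] - 40
    raise ValueError(f"unsupported PID: {pid:#04x}")


# A reply to the request "01 0D" has the form "41 0D xx":
# 0x41 marks a Mode 01 reply, 0x0D echoes the PID, and xx is the data byte.
response = bytes.fromhex("410D3C")
mode, pid, payload = response[0], response[1], response[2:]
speed_kmh = decode_pid(pid, payload)
```

In practice python-OBD hides this exchange behind named commands such as obd.commands.SPEED, as the script that follows shows.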

import time

import obd

# obd.logger.setLevel(obd.logging.DEBUG)

# Open an asynchronous connection to the ELM327 adapter on serial port COM4
connection = obd.Async("COM4", baudrate=38400, fast=False)

# Callbacks that print every new value to the console

# RPM
def new_rpm(r):
    print("RPM: ", r.value)
    print("\t")

# SPEED
def new_speed(r):
    print("SPEED: ", r.value)
    print("\t")

# COOLANT TEMPERATURE
def new_coolant_temperature(r):
    print("Coolant temperature: ", r.value)
    print("\t")

# THROTTLE POSITION
def new_throttle_position(r):
    print("THROTTLE POSITION: ", r.value)
    print("\t")

# FUEL LEVEL
def new_fuel_level(r):
    print("Fuel level: ", r.value)
    print("\t")

# Register one callback per command to watch
connection.watch(obd.commands.RPM, callback=new_rpm)
connection.watch(obd.commands.SPEED, callback=new_speed)
connection.watch(obd.commands.COOLANT_TEMP, callback=new_coolant_temperature)
connection.watch(obd.commands.THROTTLE_POS, callback=new_throttle_position)
connection.watch(obd.commands.FUEL_LEVEL, callback=new_fuel_level)

connection.start()

# The callbacks will now be fired upon receipt of new values
time.sleep(600)

connection.stop()

The sample Python script above prints the desired values asynchronously, using callbacks to receive them. To save the data, collect the values in a JSON structure that is updated from each callback.

# Shared dictionary that every callback updates
obdjson = {}

# RPM
def new_rpm(r):
    global rpm_flag
    rpmValue = r.value.magnitude
    rpm_flag = 1
    obdjson["rpm"] = rpmValue

# SPEED
def new_speed(r):
    global speed_flag
    # print("SPEED: ", r.value)
    # print("\t")
    speed_flag = 1
    obdjson["speed"] = r.value.magnitude
    print(obdjson)

# COOLANT TEMPERATURE
def new_coolant_temperature(r):
    global coolant_flag
    # print("Coolant temperature: ", r.value)
    # print("\t")
    coolant_flag = 1
    obdjson["coolant_temp"] = r.value.magnitude
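The callbacks above write into one shared dictionary and set per-parameter flags. Since an asynchronous connection delivers callbacks from a background thread, one way to make that bookkeeping explicit is a small lock-protected collector that reports when every required parameter has arrived. This is a sketch under that assumption; the `TelemetrySnapshot` class and its method names are hypothetical and not part of python-OBD.

```python
import threading


class TelemetrySnapshot:
    """Collects the latest value per parameter and reports when all are present."""

    def __init__(self, required):
        self._required = set(required)
        self._values = {}
        self._lock = threading.Lock()

    def update(self, key, value):
        """Store one reading; missing (None) values are ignored."""
        if value is None:
            return
        with self._lock:
            self._values[key] = value

    def take_if_complete(self):
        """Return the readings and reset, or None if any parameter is missing."""
        with self._lock:
            if not self._required.issubset(self._values):
                return None
            snapshot, self._values = self._values, {}
            return snapshot


snapshot = TelemetrySnapshot(["rpm", "speed", "coolant_temp"])
snapshot.update("rpm", 1726.0)
snapshot.update("speed", 60)
first = snapshot.take_if_complete()   # coolant_temp is still missing
snapshot.update("coolant_temp", 83)
second = snapshot.take_if_complete()  # all three readings are present
```

Each callback would call `update` with its own key, and whichever callback publishes the data would call `take_if_complete` first, replacing the separate flag variables.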

To publish the message to an MQTT topic, publish the JSON from any one of the callbacks.

# Assumes json is imported, client is a connected MQTT client, and
# obdjson and jstemp are module-level dictionaries.

# RPM
def new_rpm(r):
    global rpm_flag
    rpmValue = r.value.magnitude
    rpm_flag = 1
    obdjson["engine_rpm"] = rpmValue
    # Wrap the telemetry and publish it as a JSON string
    jstemp["telemetry_data"] = obdjson
    json_temp = json.dumps(jstemp)
    client.publish("vams/wb74asxxxx/status", str(json_temp), qos=1)
    print(json_temp)

# SPEED
def new_speed(r):
    global speed_flag
    # print("SPEED: ", r.value)
    # print("\t")
    speed_flag = 1
    obdjson["car_speed"] = r.value.magnitude
    # print(obdjson)

# COOLANT TEMPERATURE
def new_coolant_temperature(r):
    global coolant_flag
    # print("Coolant temperature: ", r.value)
    # print("\t")
    coolant_flag = 1
    obdjson["coolant_temperature"] = r.value.magnitude

Once the JSON is published to an MQTT topic, the primary code can subscribe to that topic and append the telemetry to the video-processed data.
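The merge step on the primary node might look like the sketch below. It is written as a plain function so that it can be called from any MQTT client's message handler. The telemetry topic is the one used in the publishing snippet; the vision topic name, the `vision_data` key, and the `handle_message` function are assumptions made for illustration.

```python
import json

TELEMETRY_TOPIC = "vams/wb74asxxxx/status"  # topic used in the publishing snippet
VISION_TOPIC = "vams/wb74asxxxx/vision"     # hypothetical topic name


def handle_message(state, topic, payload):
    """Store the latest payload per source; return a merged packet once both exist."""
    data = json.loads(payload)
    if topic == TELEMETRY_TOPIC:
        # The publisher wraps its values under "telemetry_data"
        state["telemetry_data"] = data.get("telemetry_data", data)
    elif topic == VISION_TOPIC:
        state["vision_data"] = data
    else:
        return None
    if "telemetry_data" in state and "vision_data" in state:
        return json.dumps(state, sort_keys=True)
    return None


state = {}
partial = handle_message(state, TELEMETRY_TOPIC, '{"telemetry_data": {"engine_rpm": 1726.0}}')
merged = handle_message(state, VISION_TOPIC, '{"vehicle_count": 3, "pedestrian_count": 1}')
```

Keeping only the latest value from each source means the merged packet always pairs the most recent telemetry with the most recent frame metadata, at the cost of not aligning them by timestamp.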

Video Processing and Generating Metadata json at Edge

As discussed earlier, this approach uses the Intel Distribution of OpenVINO toolkit for optimization and an SSD framework with a tuned MobileNetV1 as a feature extractor for computer vision. Both are combined with OpenCV for image pre- and post-processing. The first step is to use the sample models that are available with the Intel Distribution of OpenVINO toolkit under:

Intel\computer_vision_sdk\deployment_tools\intel_models\pedestrian-and-vehicle-detector-adas-0001

Under the FP32 subfolder are the .bin and .xml files. The .xml file describes the network topology, and the .bin file contains the weights and biases as binary data. These files are generated using the model optimizer available within the Intel Distribution of OpenVINO toolkit. For details, see the Model Optimizer Guide.

To implement the model, use the Python API, which is simply an abstraction built upon the C++ core classes. The output from this step is a JSON string with the following parameters:

{"vehicle_count": x, "pedestrian_count": y}

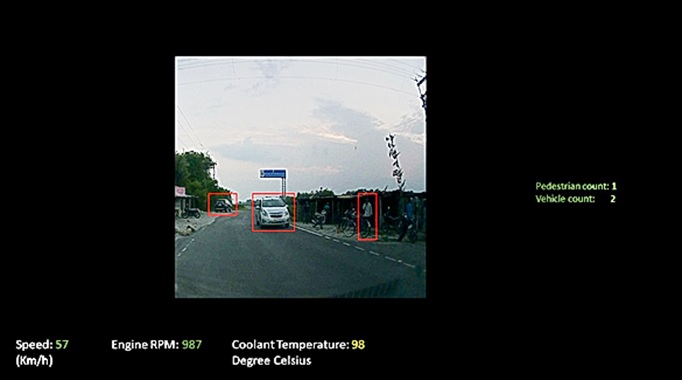

Where x is the number of vehicles on the road and y is the number of pedestrians per video frame under consideration. An example image is shown below.

Figure 5. Test video frame output obtained from a dash-cam of a Maruti Suzuki* WagonR 2017 model

These two parameters are useful for figuring out how the driver reacts to different levels of traffic density. Both are evaluated every second. The JSON is then published to a topic on the MQTT broker and picked up by the primary node.
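Producing the metadata JSON from the labels detected in one frame can be sketched as follows. The grouping is an assumption: every class of interest other than "person" is counted as a vehicle here, and the `frame_metadata` name is hypothetical.

```python
import json
from collections import Counter

# Assumption: every class of interest other than "person" counts as a vehicle.
VEHICLE_LABELS = {"bicycle", "car", "motorcycle", "bus", "truck"}
PEDESTRIAN_LABELS = {"person"}


def frame_metadata(labels):
    """Summarize the labels detected in one frame as the metadata JSON string."""
    counts = Counter(labels)
    return json.dumps({
        "vehicle_count": sum(counts[label] for label in VEHICLE_LABELS),
        "pedestrian_count": sum(counts[label] for label in PEDESTRIAN_LABELS),
    })


# Hypothetical labels detected in a single frame.
metadata = frame_metadata(["car", "car", "bus", "person"])
```

The resulting string is what would be published to the MQTT topic once per second.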

Pushing Data to the Cloud

When edge processing is completed, proceed to the primary node, which collects the data from the telemetry and vision processing nodes and forwards the data packet to the cloud using MQTT hosted on Azure.

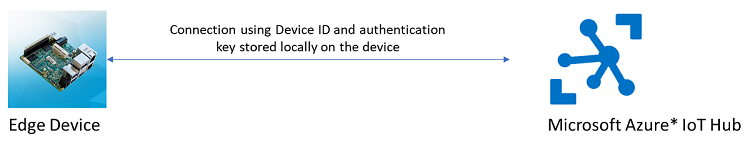

Security is handled by the Azure IoT hub, where a device can send data using its device ID and an authentication token, as shown in Figure 6.
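The article does not say which kind of token VAMS uses. One common form for Azure IoT Hub devices is a shared access signature (SAS) token derived from the device key. The sketch below shows that derivation under this assumption; the hub name, device ID, and key are placeholders, and `generate_sas_token` is an illustrative helper rather than an SDK function.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote_plus, urlencode


def generate_sas_token(resource_uri, device_key_b64, expiry_epoch):
    """Build a device SAS token that is valid until expiry_epoch (Unix seconds)."""
    # The string to sign is the URL-encoded resource URI, a newline, and the expiry
    to_sign = f"{quote_plus(resource_uri)}\n{expiry_epoch}".encode("utf-8")
    key = base64.b64decode(device_key_b64)
    digest = hmac.new(key, to_sign, hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("utf-8")
    fields = {"sr": resource_uri, "sig": signature, "se": str(expiry_epoch)}
    return "SharedAccessSignature " + urlencode(fields)


# Placeholder values for illustration only.
dummy_key = base64.b64encode(b"not-a-real-key").decode("utf-8")
token = generate_sas_token(
    "example-hub.azure-devices.net/devices/vams-device-01", dummy_key, 1700000000
)
```

When a device connects over MQTT, a token of this form is typically supplied as the password, so the device key itself never leaves the device.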

Regarding cloud connectors, multiple options are available including direct sending via connection string or the use of middleware and a protocol such as MQTT to send messages.

Figure 6. Direct secured communication device to Azure cloud

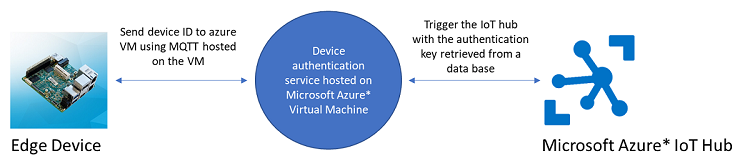

Figure 7. Indirect secured communication device to Azure cloud

The second case includes middleware: a service written in Node.js receives the device ID along with the data and retrieves the authentication key from a database (DB). It then builds the connection string and uses it to send the received data to the IoT hub.
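The lookup step of that middleware can be sketched as below. It is written in Python, like the rest of the code in this article, although the service itself is described as Node.js. The in-memory dictionary stands in for the DB, and the hub host, device ID, and key are placeholders.

```python
import base64

# Stand-in for the DB that maps device IDs to keys (placeholder values).
DEVICE_KEYS = {
    "vams-device-01": base64.b64encode(b"not-a-real-key").decode("utf-8"),
}


def connection_string_for(device_id, hub_host="example-hub.azure-devices.net"):
    """Look up the device key and build an IoT Hub device connection string."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        raise KeyError(f"unknown device: {device_id}")
    return f"HostName={hub_host};DeviceId={device_id};SharedAccessKey={key}"


conn_str = connection_string_for("vams-device-01")
```

Rejecting unknown device IDs before any connection is attempted keeps unregistered senders from reaching the hub through the middleware.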

Once the data is pushed to the cloud, visualize it and perform user-level segregation. This will allow each owner to view vehicle status (telemetry and visual data) remotely.

Additionally, data can be saved to the DB for later use. This area is still under development.

Conclusion

The above discussion details how to obtain data from the OBD-2 port of a vehicle and introduces a computer vision recipe for detecting the presence of other vehicles and pedestrians using the model optimizer and inference engine of the Intel Distribution of OpenVINO toolkit. This enables live telemetry data as well as composed custom metadata for the video frame processed. Finally, the data is routed to Azure cloud, with two options for saving it in a DB or live viewing it in a dashboard. Communication is maintained by Wi-Fi for testing but ultimately will be shifted to a cellular-based method. If the connection drops, the data is saved locally and published when the connection is re-established.
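The store-and-forward behavior mentioned above can be sketched with a bounded in-memory queue. This is only one possible design: the `StoreAndForward` class is hypothetical, the `publish` callable is assumed to return True on success, and a production version would likely persist the backlog to disk rather than hold it in memory.

```python
from collections import deque


class StoreAndForward:
    """Buffers messages while the link is down and flushes them in order."""

    def __init__(self, publish, max_buffered=1000):
        self._publish = publish  # callable that returns True on success
        # With maxlen set, the oldest message is dropped when the buffer is full
        self._pending = deque(maxlen=max_buffered)

    def send(self, message):
        """Queue a message, then try to deliver everything that is pending."""
        self._pending.append(message)
        return self.flush()

    def flush(self):
        """Deliver pending messages oldest first; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._publish(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent


link = {"up": False}
delivered = []


def fake_publish(message):
    """Simulated publisher that only succeeds while the link is up."""
    if link["up"]:
        delivered.append(message)
    return link["up"]


buffer = StoreAndForward(fake_publish)
buffer.send("packet-1")          # link down: kept locally
buffer.send("packet-2")
link["up"] = True
sent = buffer.send("packet-3")   # link restored: backlog is flushed in order
```

A message is removed from the queue only after the publisher confirms it, so a failure in the middle of a flush leaves the remaining backlog intact.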