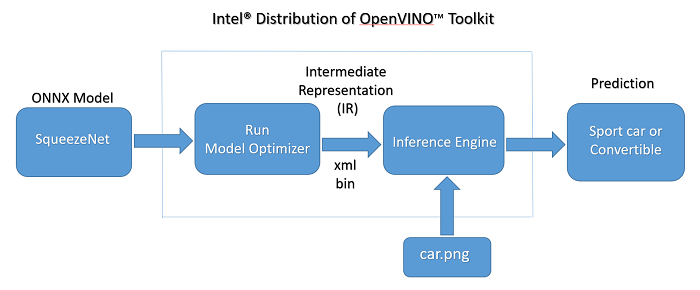

The Open Neural Network Exchange (ONNX) is an open format for representing deep learning models. This tutorial explores the use of ONNX in version R4 of the Intel® Distribution of OpenVINO™ toolkit. It converts the SqueezeNet ONNX model into the two Intermediate Representation (IR) files, .xml and .bin, and then uses the IR files in the image classification sample application to classify an input image. SqueezeNet is a lightweight Convolutional Neural Network (CNN) for image classification: it takes an image as input and classifies the major objects in it into predefined categories.

The tutorial includes instructions for building and running the classification sample application on the UP Squared* Grove* IoT Development Kit and the IEI Tank* AIoT Developer Kit. Both the UP Squared* board and the IEI Tank come preinstalled with an Ubuntu* 16.04.4 Desktop image and the Intel® Distribution of OpenVINO™ toolkit.

Figure 1 shows how the ONNX model passes through the Model Optimizer and the Inference Engine to produce the image classification result.

Figure 1. SqueezeNet image classification flow

Hardware

The hardware components used in this project are listed below:

- UP Squared* Grove* IoT Development Kit or IEI Tank* AIoT Developer Kit

- A monitor with an HDMI interface and cable

- USB keyboard and mouse

- A network connection with Internet access, or a Wi-Fi kit for the UP Squared board

Software Requirements

The software used in this project is listed below:

- Ubuntu 16.04.4 Desktop image and Intel® Distribution of OpenVINO™ toolkit R1 (version 1.265), both preinstalled on the UP Squared* and the IEI Tank.

- Intel® Distribution of OpenVINO™ toolkit R4 (version 2018.4.420). The boards ship with R1, so download the Intel® Distribution of OpenVINO™ Toolkit R4 and follow the installation instructions for Linux*. The installation creates the directory structure in Table 1.

Table 1. Directories and Key Files in the Intel® Distribution of the OpenVINO™ Toolkit R4

| Component | Location |
|---|---|
| Root directory | /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools |
| Model Optimizer | /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/model_optimizer |
| install_prerequisites_onnx.sh | /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/model_optimizer/install_prerequisites |
| Build | /home/upsquared/inference_engine_samples |
| Binary | /home/upsquared/inference_engine_samples/intel64/Release |
| Demo scripts | /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/demo |
| Classification Sample | /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/inference_engine/samples/classification_sample |
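Before moving on, you may want to confirm that your R4 installation matches the layout in Table 1. The shell function below is a minimal sketch, not part of the toolkit: check_openvino_layout is a hypothetical name, and it only checks that the Table 1 entries under the root directory exist.

```shell
# Sketch: check that an OpenVINO R4 install contains the Table 1 entries.
# check_openvino_layout is a hypothetical helper, not an OpenVINO tool.
# Usage: check_openvino_layout <deployment_tools_dir>
check_openvino_layout() {
    local root="$1" rel missing=0
    for rel in \
        model_optimizer \
        model_optimizer/install_prerequisites/install_prerequisites_onnx.sh \
        demo \
        inference_engine/samples/classification_sample; do
        if [ ! -e "$root/$rel" ]; then
            echo "missing: $root/$rel" >&2
            missing=$((missing + 1))
        fi
    done
    # Succeed only when every expected entry was found.
    [ "$missing" -eq 0 ]
}
```

For example, check_openvino_layout /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools prints nothing and returns 0 when every entry is present, and lists each missing path otherwise.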

Download the SqueezeNet ONNX Model

Download the SqueezeNet ONNX model version 1.3 and place it in ~/openvino_models/squeezenet1.3 on your UP Squared board or IEI Tank. The conversion step below expects the model file at ~/openvino_models/squeezenet1.3/model.onnx.

Convert ONNX Model to IR

- Set the environment variables.

export ROOT_DIR=/opt/intel/computer_vision_sdk_2018.4.420/deployment_tools

- Configure the Model Optimizer for ONNX.

cd $ROOT_DIR/model_optimizer/install_prerequisites

./install_prerequisites_onnx.sh

- Convert the ONNX SqueezeNet model to optimized IR files using the Model Optimizer. Because --model_name is set to squeezenet.1.3, the files squeezenet.1.3.xml and squeezenet.1.3.bin are generated in ~/openvino_models/squeezenet1.3.

cd $ROOT_DIR/model_optimizer

sudo python3 mo.py --input_model ~/openvino_models/squeezenet1.3/model.onnx --output_dir ~/openvino_models/squeezenet1.3 --framework onnx --model_name squeezenet.1.3
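The Model Optimizer names its output files after --model_name, so the conversion command should produce squeezenet.1.3.xml and squeezenet.1.3.bin. Checking that both files exist and are non-empty can catch a failed conversion early. This is a sketch; check_ir is a hypothetical helper, not a toolkit command.

```shell
# Sketch: confirm that the Model Optimizer wrote both IR files.
# check_ir is a hypothetical helper, not part of the OpenVINO toolkit.
# Usage: check_ir <output_dir> <model_name>
check_ir() {
    local dir="$1" name="$2" ext status=0
    for ext in xml bin; do
        # -s is true only for files that exist and are not empty.
        if [ -s "$dir/$name.$ext" ]; then
            echo "found $dir/$name.$ext"
        else
            echo "missing or empty: $dir/$name.$ext" >&2
            status=1
        fi
    done
    return "$status"
}
```

For this tutorial, run check_ir ~/openvino_models/squeezenet1.3 squeezenet.1.3 after the conversion.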

Build the Image Classification Application

- Update the repository list.

sudo -E apt update

- Install the prerequisite packages.

sudo -E apt -y install build-essential python3-pip virtualenv cmake libpng12-dev libcairo2-dev libpango1.0-dev libglib2.0-dev libgtk2.0-dev libswscale-dev libavcodec-dev libavformat-dev libgstreamer1.0-0 gstreamer1.0-plugins-base

- Set the environment variables.

source $ROOT_DIR/../bin/setupvars.sh

- Go to the build directory, creating it first if it does not exist.

mkdir -p ~/inference_engine_samples

cd ~/inference_engine_samples

- Generate the makefile for a release build, without debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Release $ROOT_DIR/inference_engine/samples

Or generate the makefile for a debug build, with debug information:

sudo cmake -DCMAKE_BUILD_TYPE=Debug $ROOT_DIR/inference_engine/samples

- Build only the image classification sample:

sudo make -j8 classification_sample

Or build all samples:

sudo make -j8

The build generates the classification_sample executable in the intel64/Release or intel64/Debug directory, depending on the build type.

If you change the classification sample and want to rebuild it, be sure to delete the existing classification_sample binary first: the demo_squeezenet_download_convert_run.sh script does not rebuild the sample if the binary already exists.

ls $ROOT_DIR/inference_engine/samples/build/intel64/Release/classification_sample

rm $ROOT_DIR/inference_engine/samples/build/intel64/Release/classification_sample
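Instead of deleting the binary by hand every time, a small helper can remove it only when some source file is newer than the binary. This is a sketch under assumptions: remove_if_stale is a hypothetical helper, it relies on file modification times, and it uses find -newer to compare them.

```shell
# Sketch: delete a stale classification_sample binary so that the demo
# script (which skips the build when the binary exists) rebuilds it.
# remove_if_stale is a hypothetical helper.
# Usage: remove_if_stale <binary> <source_dir>
remove_if_stale() {
    local bin="$1" src_dir="$2"
    # Nothing to do if the binary has not been built yet.
    [ -f "$bin" ] || return 0
    # find -newer lists files modified more recently than the binary;
    # -quit stops at the first match.
    if [ -n "$(find "$src_dir" -type f -newer "$bin" -print -quit)" ]; then
        rm -f "$bin"
        echo "removed stale $bin"
    fi
}
```

For example: remove_if_stale $ROOT_DIR/inference_engine/samples/build/intel64/Release/classification_sample $ROOT_DIR/inference_engine/samples/classification_sample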

Run the Classification Application

Run the application with -h to display all available options.

cd ~/inference_engine_samples/intel64/Release

upsquared@upsquared-UP-APL01:~/inference_engine_samples/intel64/Release$ ./classification_sample -h

[ INFO ] InferenceEngine:

API version ............ 1.2

Build .................. 13911

classification_sample [OPTION]

Options:

-h Print a usage message.

-i "<path>" Required. Path to a folder with images or path to an image files: a .ubyte file for LeNet and a .bmp file for the other networks.

-m "<path>" Required. Path to an .xml file with a trained model.

-l "<absolute_path>" Required for MKLDNN (CPU)-targeted custom layers. Absolute path to a shared library with the kernels impl.

Or

-c "<absolute_path>" Required for clDNN (GPU)-targeted custom kernels. Absolute path to the xml file with the kernels desc.

-pp "<path>" Path to a plugin folder.

-d "<device>" Specify the target device to infer on; CPU, GPU, FPGA or MYRIAD is acceptable. Sample will look for a suitable plugin for device specified (CPU by default)

-nt "<integer>" Number of top results (default 10)

-ni "<integer>" Number of iterations (default 1)

-pc Enables per-layer performance report

This tutorial uses the following options of the classification application:

- -d: the target device (CPU in this tutorial).

- -i: the path to an image file.

- -m: the path to the .xml file of the trained SqueezeNet classification model generated above.

The classification application expects a labels file in the same directory as the IR .xml file, with the same base name and the .labels extension. Copy the existing SqueezeNet labels file, $ROOT_DIR/demo/squeezenet1.1.labels, to squeezenet.1.3.labels in the model directory:

cp $ROOT_DIR/demo/squeezenet1.1.labels ~/openvino_models/squeezenet1.3/squeezenet.1.3.labels
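The naming rule for the labels file can be expressed as a small shell function, which is handy when you convert other models with different --model_name values. This is a sketch based on the rule described above; labels_for_model is a hypothetical helper.

```shell
# Sketch: derive the labels file path the classification sample looks for
# (same directory and base name as the .xml file, extension .labels).
# labels_for_model is a hypothetical helper.
labels_for_model() {
    # ${1%.*} strips only the last extension, so squeezenet.1.3.xml
    # becomes squeezenet.1.3 before .labels is appended.
    printf '%s.labels\n' "${1%.*}"
}
```

For example, cp $ROOT_DIR/demo/squeezenet1.1.labels "$(labels_for_model ~/openvino_models/squeezenet1.3/squeezenet.1.3.xml)" performs the same copy as the command above.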

Run the classification application:

./classification_sample -d CPU -i /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/demo/car.png -m ~/openvino_models/squeezenet1.3/squeezenet.1.3.xml
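If you run the sample repeatedly, a wrapper that checks its inputs first gives a clearer error than a failed run. The sketch below assumes the .bin file sits next to the .xml file with the same base name, as produced by the Model Optimizer step; classify_image and the CLASSIFICATION_SAMPLE override variable are hypothetical.

```shell
# Sketch: run classification_sample on one image after checking that the
# image and both IR files exist. classify_image and CLASSIFICATION_SAMPLE
# are hypothetical; by default the binary in the current directory is used.
# Usage: classify_image <image> <model.xml> [device]
classify_image() {
    local image="$1" model="$2" device="${3:-CPU}" f
    # Assumes the weights file is <model base name>.bin next to the .xml.
    for f in "$image" "$model" "${model%.*}.bin"; do
        if [ ! -f "$f" ]; then
            echo "missing input: $f" >&2
            return 1
        fi
    done
    "${CLASSIFICATION_SAMPLE:-./classification_sample}" -d "$device" -i "$image" -m "$model"
}
```

From ~/inference_engine_samples/intel64/Release, classify_image $ROOT_DIR/demo/car.png ~/openvino_models/squeezenet1.3/squeezenet.1.3.xml runs the same command as above.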

Interpret Detection Results

By default, the application outputs the top 10 inference results. To get the top 15 results instead, add the option “-nt 15” to the command line:

./classification_sample -d CPU -i /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/demo/car_1.bmp -m ~/openvino_models/squeezenet1.3/squeezenet.1.3.xml -nt 15
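When you run the sample from a script, you may want only the result rows. The sketch below assumes the rows appear on standard output in the format of the example output in this tutorial (class index, probability, the word label, then the label text); parse_results is a hypothetical helper, and other toolkit versions may format the output differently.

```shell
# Sketch: extract result rows from classification_sample output.
# Assumes rows look like this tutorial's example output:
#   <class_index> <probability> label <label text>
# parse_results is a hypothetical helper; it prints tab-separated fields.
parse_results() {
    awk '$1 ~ /^[0-9]+$/ && $3 == "label" {
        text = $0
        # Drop the index, the probability, and the literal word "label".
        sub(/^[ \t]*[0-9]+[ \t]+[0-9.eE+-]+[ \t]+label[ \t]+/, "", text)
        printf "%s\t%s\t%s\n", $1, $2, text
    }'
}
```

For example, append | parse_results | head -n 3 to the command above to keep only the three most probable classes.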

In the output, the first column is the class index, the second is the probability, and the rest of the line is the label text. The class index is zero-based, so it is one less than the corresponding line number in the labels file. In the output example below, class 817 corresponds to line 818 of the labels file, “sports car, sport car”, which is the object the deep learning model recognized with the highest probability.

labelFileName = /opt/intel/computer_vision_sdk/deployment_tools/model_optimizer/model.labels

Image /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/demo/car.png

817 0.8714160 label sports car, sport car

511 0.0519410 label convertible

479 0.0339229 label car wheel

436 0.0194652 label beach wagon, station wagon, wagon, estate car, beach waggon, station waggon, waggon

656 0.0179717 label minivan

751 0.0017786 label racer, race car, racing car

468 0.0011149 label cab, hack, taxi, taxicab

717 0.0009329 label pickup, pickup truck

581 0.0007121 label grille, radiator grille

864 0.0004010 label tow truck, tow car, wrecker
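To check a class index by hand, print line index + 1 of the labels file. In this sketch, label_for_class is a hypothetical helper.

```shell
# Sketch: look up the label for a class index printed by classification_sample.
# Class indices are zero-based, so index N is on line N+1 of the labels file.
# label_for_class is a hypothetical helper.
# Usage: label_for_class <labels_file> <class_index>
label_for_class() {
    local file="$1" index="$2"
    sed -n "$((index + 1))p" "$file"
}
```

For example, label_for_class ~/openvino_models/squeezenet1.3/squeezenet.1.3.labels 817 should print the text on line 818, which according to the output above is “sports car, sport car”.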

Run Demo Script

The classification and security barrier camera demo scripts are located in /opt/intel/computer_vision_sdk_2018.4.420/deployment_tools/demo. See the README.txt file in that directory for instructions on using the demo scripts. To create your own classification demo script, update one of the existing demo scripts with the command lines above.

Troubleshooting

If you see the error below, the environment variables are usually not set in the current terminal. Set them by running source $ROOT_DIR/../bin/setupvars.sh in the terminal window where you run cmake and the classification application (ROOT_DIR must also be exported in that terminal).

[ ERROR ] Cannot find plugin to use :Tried load plugin : MKLDNNPlugin, error: Plugin MKLDNNPlugin cannot be loaded: cannot load plugin: MKLDNNPlugin from : Cannot load library 'libMKLDNNPlugin.so': libiomp5.so: cannot open shared object file: No such file or directory, skipping
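To confirm whether the missing library is visible in the current terminal, you can search the directories listed in LD_LIBRARY_PATH. This sketch is a diagnostic aid only: find_in_ld_path is hypothetical, and it ignores the system library cache and default directories that the dynamic loader also searches.

```shell
# Sketch: report which LD_LIBRARY_PATH directory (if any) contains a library.
# find_in_ld_path is a hypothetical helper; it checks LD_LIBRARY_PATH only,
# not the ld.so cache or the default system library directories.
# Usage: find_in_ld_path <library_file_name>
find_in_ld_path() {
    local lib="$1" dir
    # Split LD_LIBRARY_PATH on ':' for the loop below.
    local IFS=':'
    for dir in $LD_LIBRARY_PATH; do
        if [ -n "$dir" ] && [ -e "$dir/$lib" ]; then
            echo "$dir/$lib"
            return 0
        fi
    done
    echo "$lib not found in LD_LIBRARY_PATH" >&2
    return 1
}
```

Running find_in_ld_path libiomp5.so before and after sourcing setupvars.sh shows whether the script added the library's directory.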

Summary

This tutorial describes how to convert a deep learning ONNX model to optimized IR files and how to use the IR files in the classification application to classify an input image. You can try other deep learning ONNX models on the UP Squared* board and the IEI Tank in the same way.


About the Author

Nancy Le is a software engineer at Intel Corporation in the Core & Visual Computing Group, working on Intel Atom® processor enablement for Intel® Internet of Things (IoT) projects.