Introduction

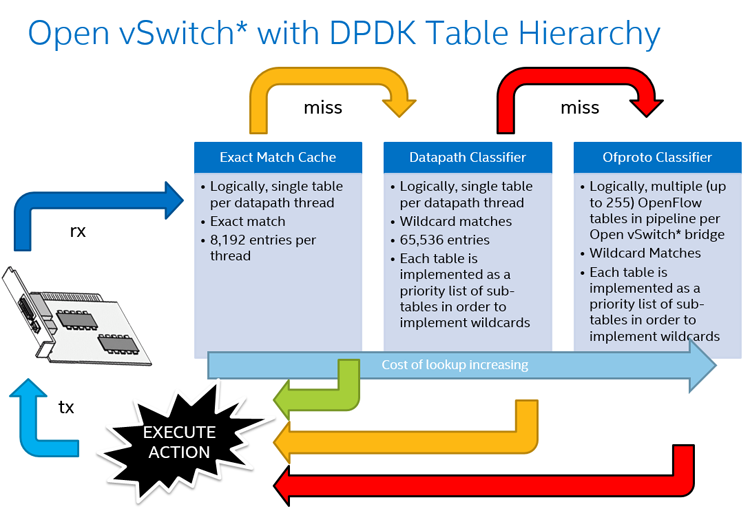

The exact-match cache (EMC) is the first and fastest mechanism Open vSwitch* (OVS) uses to determine what to do with an incoming packet. If the action for the packet cannot be found in the EMC, the search continues in the datapath classifier, and failing that the OpenFlow* flow tables are consulted. This can be thought of as similar to how a CPU checks increasingly slower levels of cache when accessing data.

Figure 1: The Open vSwitch* table hierarchy.

Exact Match Cache (EMC) Processing

Let's look at a pseudocode representation of the workflow for incoming packets as they are looked up in the EMC. The relevant code is in emc_processing() in dpif-netdev.c.

    emc_processing(IN/OUT: dp_packet_batch, OUT: netdev_flow_key[],
                   OUT: packet_batch_per_flow[])
        foreach packet in batch
            flowkey.miniflow = miniflow_extract(pkt)
            flowkey.hash = dpif_netdev_packet_get_rss_hash(pkt)
            dp_netdev_flow = emc_lookup(flowkey)
            if dp_netdev_flow
                /* EMC hit */
                Add packet to entry in packet_batch_per_flow[]
            else
                /* EMC miss */
                Write packet back to dp_packet_batch

Code block: emc_processing pseudocode.

First, incoming packets have their netdev_flow_key calculated. Conceptually, the netdev_flow_key is a concatenation of all the packet's header fields that OVS knows how to decode.

Then, this key is used to look up the EMC to find the corresponding dp_netdev_flow, which may (EMC hit) or may not (EMC miss) exist. The dp_netdev_flow structure contains an actions structure that determines how the packet should be processed, without having to perform a more expensive up-call to the datapath classifier.

Once the action for a packet has been determined—which is to say once a dp_netdev_flow for the packet has been found—that action is not executed immediately. Instead, for performance reasons, all the packets for each action are batched together and returned via the packet_batch_per_flow argument. All the packets that missed the EMC are also batched together and returned via the dp_packet_batch argument to dp_netdev_input(). There, the packets that missed the EMC are passed to the datapath classifier with fast_path_processing(), and finally, the packet batches are dispatched using packet_batch_per_flow_execute().

By batching packets in this manner, egressing packets to devices becomes more efficient as the cost of slow memory-mapped input/output (MMIO) operations is spread across multiple packets.
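The split into per-flow batches and a list of misses can be sketched in a few lines of Python. This is only an illustration of the control flow described above, not the OVS code: the dictionary-based `emc`, the `extract_key` callback, and the toy packet tuples are all assumptions made for the example.

```python
from collections import defaultdict


def emc_processing(batch, emc, extract_key):
    """Split a batch into per-flow batches (EMC hits) and a list of misses."""
    batches_per_flow = defaultdict(list)  # flow -> packets that hit that flow
    misses = []                           # packets left for the datapath classifier
    for pkt in batch:
        flow = emc.get(extract_key(pkt))  # exact-match lookup on the full key
        if flow is not None:
            batches_per_flow[flow].append(pkt)
        else:
            misses.append(pkt)
    return dict(batches_per_flow), misses


# Toy data (hypothetical): a packet is a (src, dst) tuple and is its own key.
emc = {("a", "b"): "flow-1", ("c", "d"): "flow-2"}
batch = [("a", "b"), ("x", "y"), ("c", "d"), ("a", "b")]
hits, misses = emc_processing(batch, emc, extract_key=lambda pkt: pkt)
print(hits)    # two packets batched under flow-1, one under flow-2
print(misses)  # the single packet that has no EMC entry
```

Each per-flow batch can then be handed to the action for that flow in one go, which is where the saving on per-packet output cost comes from.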

The EMC in Detail

The EMC maps from the flowkey structure described above to a dp_netdev_flow data structure. The notable items in this data structure are the flow and actions items, which indicate how a packet matching the flow should be processed. If a packet's flow is found in the EMC, the packet can be processed appropriately without any further lookup.

It's worth studying the mechanism of the EMC as it has some surprising features.

The capacity of the EMC is configurable to a power-of-2 value (2, 4, 8, 16, …) at compile time by setting EM_FLOW_HASH_SHIFT. The exact capacity is 2^EM_FLOW_HASH_SHIFT entries. So, if EM_FLOW_HASH_SHIFT is set to 13, we get an EMC with 2^13, or 8192, entries. As of OVS 2.8, each entry takes approximately 570 bytes, so at 8192 entries the EMC will take up about 4.4 MB of RAM.
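The capacity arithmetic can be checked with a short calculation. The 570-byte figure is the approximate per-entry size quoted above; the constant name `APPROX_ENTRY_BYTES` is made up for this sketch.

```python
EM_FLOW_HASH_SHIFT = 13    # compile-time setting discussed above
APPROX_ENTRY_BYTES = 570   # approximate per-entry size quoted for OVS 2.8


def emc_capacity(shift):
    """Number of EMC entries for a given EM_FLOW_HASH_SHIFT."""
    return 1 << shift


entries = emc_capacity(EM_FLOW_HASH_SHIFT)
total_bytes = entries * APPROX_ENTRY_BYTES
print(entries)                         # 8192
print(total_bytes)                     # 4669440
print(round(total_bytes / 2**20, 2))   # 4.45 (in units of 2^20 bytes)
```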

Generally (see section EMC Insertion, below), when a packet for a new flow is received it will always result in an insertion into the EMC, even if it requires an existing entry to be evicted. But surprisingly, an eviction may be required even when the EMC is holding less than its full capacity of entries.

This is because the EMC can be considered to be an n-way associative cache (similar to a modern CPU cache). If the EMC were a fully associative cache, then any flow (that is, struct dp_netdev_flow) could be stored at any location in the cache. However, n-way associative means that only n of the 8192 entries can be used to store any given flow. Currently, n is set to 2 (using #define EM_FLOW_HASH_SEGS). This means that even when the number of flows is much smaller than the size of the EMC, flows are likely to contend for the same entries. Think of n ways as meaning n possible ways to do something; in this case, n places in which the item can be inserted into the EMC.

How the n possible entries are determined for a flow is interesting. A hash is calculated from a subset of the packet’s header fields. This can be done by the network interface card (NIC) and passed to the host in the packet metadata; or, if that has not been done, OVS will calculate a hash itself (see dpif_netdev_packet_get_rss_hash()).

In any case, for this example assume that EM_FLOW_HASH_SHIFT has been set to 8 (2^8 = 256 EMC entries) and EM_FLOW_HASH_SEGS has been set to 3 (each dp_netdev_flow can be stored in any one of 3 locations out of those 256). So how are the three possible entries chosen? Say that the 32-bit hash calculated or retrieved by dpif_netdev_packet_get_rss_hash() was 0x00B177EE. Then the three locations in the EMC allowed to store the dp_netdev_flow of that packet would be 0xB1, 0x77, or 0xEE: each successive 8-bit group of the hash, starting from the least significant bits, selects one location.

In the current code, the values are different and do not align so nicely on the nibble boundaries, so the calculations are uglier, but the mechanism is identical.
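The mechanism in the example can be sketched as a mask-and-shift loop. The function name `emc_candidate_slots` is hypothetical, and the sketch assumes the segments are taken from the least significant bits upward, as in the example values.

```python
def emc_candidate_slots(hash32, shift, segs):
    """Return the candidate EMC positions for a hash.

    Each position is the next `shift`-bit group of the hash, starting from
    the least significant bits; `segs` groups are taken in total.
    """
    mask = (1 << shift) - 1
    slots = []
    for _ in range(segs):
        slots.append(hash32 & mask)
        hash32 >>= shift
    return slots


slots = emc_candidate_slots(0x00B177EE, shift=8, segs=3)
print([hex(s) for s in slots])   # ['0xee', '0x77', '0xb1']
```

With other values of `shift` and `segs` the groups no longer line up with hex digits, but the loop is unchanged.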

When is the EMC Full?

An eviction may be required even when the EMC is holding less than its full capacity of entries.

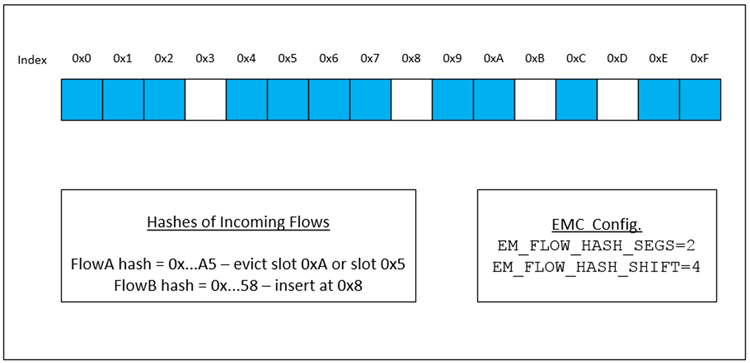

Consider an EMC small enough to depict in a single diagram. The shaded squares are used EMC slots.

Figure 2: A 75-percent full EMC with 16 slots.

Let’s say that two packets for two new flows arrive. The hashes are calculated, and due to the configuration of the EMC, only the two least significant sets of four bits are used to determine possible locations for the new flows.

FlowA has a hash ending in 0xA5. But as both slots 0xA and 0x5 are already full, one or the other is chosen randomly for eviction.

FlowB has a hash ending in 0x58. Slot 0x5 is used but 0x8 is free, so FlowB is assigned to slot 0x8.

It can be shown that for EM_FLOW_HASH_SEGS=2 the probability of an eviction being required for an incoming flow rises above 50 percent when the EMC is over 70.7 percent full. In the case of the EMC depicted here with 16 entries, that occurs with the 12th entry, making it 75 percent full and giving a 56 percent probability that a new entry will require an eviction.
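These figures follow from a simple model: if a new flow's candidate slots are treated as independent and uniformly distributed, an eviction is needed only when every candidate is occupied, so the probability is the fill fraction raised to the power of EM_FLOW_HASH_SEGS. A minimal sketch under that assumption (the function names are made up for illustration):

```python
def eviction_probability(used, capacity, segs=2):
    """Chance that all `segs` candidate slots of a new flow are occupied."""
    return (used / capacity) ** segs


def fill_threshold(segs=2, target=0.5):
    """Fill fraction at which the eviction probability reaches `target`."""
    return target ** (1 / segs)


print(round(fill_threshold(), 3))              # 0.707
print(eviction_probability(12, 16))            # 0.5625
print(round(eviction_probability(11, 16), 3))  # 0.473, still below one half
```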

EMC Matching, Insertion, and Deletion

EMC Matching

As the name EMC suggests, when a flow is looked up or stored in the EMC, it is done in an exact manner. There is no wildcard matching, omission of fields, or setting of bits to a don’t-care value.

For instance, even if an OpenFlow rule says to send all packets with a destination media access control (MAC) address of 01:FC:09:xx:xx:xx to a given port, each different matching destination MAC address encountered by OVS will result in a separate entry in the EMC.

As another example, the IPv4 time-to-live (TTL) field is a known field to OVS, and is extracted into the miniflow structure described above. If a packet in a TCP connection takes a slightly different route before traversing OVS that packet will fail to match the existing EMC entry for the other packets in that TCP connection (as it will have a different TTL value); this will result in an up-call to the datapath classifier and a new entry into the EMC just for that packet.
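Both examples can be mimicked with an ordinary dictionary keyed on the complete set of header fields. The `Key` tuple below is a hypothetical, heavily reduced stand-in for the real flow key; the point is only that any difference in any field is a different key.

```python
from typing import NamedTuple


class Key(NamedTuple):
    # Hypothetical reduced field set; the real key holds every decoded field.
    dst_mac: str
    src_ip: str
    dst_ip: str
    ttl: int


emc = {}  # exact-match table: full key -> action

first = Key("01:FC:09:00:00:01", "10.0.0.1", "10.0.0.2", ttl=64)
emc[first] = "output:2"

same_flow = Key("01:FC:09:00:00:01", "10.0.0.1", "10.0.0.2", ttl=64)
rerouted = same_flow._replace(ttl=63)                        # one extra hop
other_mac = same_flow._replace(dst_mac="01:FC:09:00:00:02")  # same OpenFlow rule

print(same_flow in emc)   # True: identical in every field
print(rerouted in emc)    # False: only the TTL differs
print(other_mac in emc)   # False: needs its own EMC entry
```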

EMC Insertion

Generally, insertion into the EMC never fails. If one of the n-way locations is free then that is used; if there is no free location then one of the n locations is chosen at random and that is used for the new entry. For performance reasons, there is no least-recently used or least-frequently used replacement mechanism employed.
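The policy described in this paragraph can be sketched as follows, reusing the slot numbers of FlowA and FlowB from the 16-slot example. This models the behavior as described here rather than the OVS source; `emc_insert` and the list-based `slots` table are assumptions for the example.

```python
import random


def emc_insert(slots, candidates, flow, rng=random):
    """Store `flow` in one of its candidate positions.

    Returns the evicted flow, or None if a free candidate was available.
    """
    for pos in candidates:
        if slots[pos] is None:       # a free candidate: no eviction needed
            slots[pos] = flow
            return None
    victim_pos = rng.choice(candidates)   # all candidates full: pick one at random
    evicted = slots[victim_pos]
    slots[victim_pos] = flow
    return evicted


slots = [None] * 16
slots[0x5] = "old-5"
slots[0xA] = "old-A"
print(emc_insert(slots, [0x5, 0x8], "FlowB"))   # None: slot 0x8 was free
print(emc_insert(slots, [0x5, 0xA], "FlowA"))   # evicts "old-5" or "old-A"
```

Choosing the victim at random needs no per-entry bookkeeping on the lookup path, which is the performance argument made above.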

That said, since OVS 2.7 there is a probabilistic insertion feature, which reduces EMC thrashing in situations where there are many more flows being handled than available EMC entries. By default, on average 1 packet in 100 will result in a flow being inserted into the EMC. Because each packet has only a 1 percent chance of triggering an insertion, a flow must comprise roughly 70 packets before it becomes more likely than not that an entry has been made in the EMC for that flow (1 - 0.99^69 ≈ 0.50).

The exact value can be changed using ovs-vsctl.

$ ovs-vsctl --no-wait set Open_vSwitch . other_config:emc-insert-inv-prob=5

This means that, on average, just one packet in every five will result in its flow being inserted into the EMC.

By this mechanism, flows with more frequent packets tend to end up in the EMC where they tend to produce more hits, and flows with less-frequent packets remain outside it.
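The effect of the setting on a single flow can be estimated by treating every packet as an independent 1-in-N trial, where N is the emc-insert-inv-prob value. That independence is a simplifying assumption of this sketch, and the function names are made up for illustration.

```python
def insertion_probability(packets, inv_prob=100):
    """Chance that at least one of `packets` packets triggers an insertion."""
    return 1 - (1 - 1 / inv_prob) ** packets


def packets_for_even_odds(inv_prob=100):
    """Smallest flow length at which an insertion is at least 50% likely."""
    n = 1
    while insertion_probability(n, inv_prob) < 0.5:
        n += 1
    return n


print(packets_for_even_odds(100))   # 69
print(packets_for_even_odds(5))     # 4
```

Short flows therefore rarely reach the EMC at the default setting, while long-lived flows are almost certain to be inserted eventually.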

Probabilistic insertion can avoid some otherwise odd effects; for example, when a port scan is being performed on an IP address. A port scan involves sending packets to each of the 64 K (65,536) ports on an IP address. As the packets of these very short flows traverse OVS, each one would otherwise result in an EMC entry being created. With the current EMC size of 8 K entries, a 64 K port scan could potentially thrash the EMC eight times over, but with the default one percent insertion probability only about 650 entries would be filled.
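The port-scan estimate can be reproduced with a small simulation in which every port receives exactly one packet and each packet independently triggers an insertion with probability 1/N. Both the one-packet-per-port model and the function name are assumptions of this sketch.

```python
import random


def simulate_port_scan(ports=65536, inv_prob=100, seed=1):
    """Count how many single-packet flows end up inserted into the EMC."""
    rng = random.Random(seed)
    return sum(1 for _ in range(ports) if rng.randrange(inv_prob) == 0)


print(65536 // 100)           # 655: expected insertions at the default setting
print(simulate_port_scan())   # one random run; lands close to the expectation
```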

EMC Sweep

Flow entries in the EMC do not have any associated last-used time or used counter. This means that a least-recently used or least-frequently used replacement mechanism cannot be used. However, unused EMC entries are still periodically removed.

This is possible because each EMC entry is associated with a dp_netdev_flow in the datapath classifier. This dp_netdev_flow does have a last-used timestamp, which is used to remove the dp_netdev_flow from the datapath classifier when it has been unused for a period of time. As part of the dp_netdev_flow removal process, all associated EMC entries are marked as dead. They are then removed periodically as part of a slow sweep carried out by the poll mode driver (PMD).
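The two-stage removal can be sketched as a dead flag set when the classifier expires a flow, followed by a later pass that clears the affected slots. The `Flow` class and `sweep` function below are hypothetical simplifications, not the OVS data structures.

```python
class Flow:
    """Hypothetical stand-in for a dp_netdev_flow."""

    def __init__(self, name):
        self.name = name
        self.dead = False   # set when the classifier removes the idle flow


def sweep(emc_slots):
    """Clear slots whose flow is marked dead; return how many were cleared."""
    cleared = 0
    for pos, flow in enumerate(emc_slots):
        if flow is not None and flow.dead:
            emc_slots[pos] = None
            cleared += 1
    return cleared


a, b = Flow("a"), Flow("b")
slots = [a, None, b, None]
b.dead = True                                   # the classifier expired flow b
print(sweep(slots))                             # 1
print([f.name if f else None for f in slots])   # ['a', None, None, None]
```

Because the expiry decision is made in the classifier, the EMC itself never has to update a timestamp or counter on a hit.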

Additional Information

There is a separate EMC for each PMD. While this can mean that the same flow has an entry in several EMCs, it avoids the overhead of taking locks on the EMC.

The Flow Key

A flow key consists of:

- All the packet header data present in the packet that OVS knows about (MAC and IP addresses, time-to-live (TTL), multiprotocol label switching (MPLS), and so on). As most possible fields are only present in specific protocols, the majority of these fields are not set at all; the values of the headers that are present are stored in a special memory-efficient form called a miniflow.

- A hash calculated from a subset of those fields. Like all hashes it is not unique for every combination of packet header data values. But it is used to drastically reduce the search space in the EMC for the packet.

The Miniflow

A packet’s miniflow is calculated by miniflow_extract(). A miniflow is a sparse representation of the header fields of a packet. To understand what sparse means in this context, consider struct flow, which has an individual member for every packet header field of every packet type that OVS knows about. Even though most fields are unused for any given packet, struct flow has a field for each and every one. For example, there is space allocated in a struct flow for both IPv4 and IPv6 addresses, even though both cannot be populated at the same time. So struct flow is a sparse data structure in that for any given packet most of its fields are not set. Due to processor caching effects it is actually more efficient to process the smaller miniflow structure than the larger flow data structure even though the former requires special accessor methods.

It's not necessary to go further into the implementation details of how miniflow implements a memory-efficient representation of the flow header fields; only know that it is memory efficient, and that this extra complexity has been introduced for performance reasons.
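For readers who want a rough mental model anyway, one generic way to store a sparse record compactly is a bitmap of which fields are present plus a packed list of only those values. The sketch below illustrates that general idea only; the field list, function names, and layout are assumptions and do not reproduce the OVS miniflow format.

```python
# Hypothetical, very short field list; struct flow has far more members.
FIELDS = ["eth_src", "eth_dst", "ipv4_src", "ipv4_dst",
          "ipv6_src", "ipv6_dst", "tp_src", "tp_dst"]


def to_miniflow(flow):
    """Pack the fields that are set into (bitmap, values) in FIELDS order."""
    bitmap, values = 0, []
    for i, name in enumerate(FIELDS):
        if name in flow:
            bitmap |= 1 << i
            values.append(flow[name])
    return bitmap, tuple(values)


def miniflow_get(mf, name):
    """Accessor: return a field's value, or None if the field is not set."""
    bitmap, values = mf
    i = FIELDS.index(name)
    if not bitmap & (1 << i):
        return None
    # The value's index is the number of set bits below position i.
    return values[bin(bitmap & ((1 << i) - 1)).count("1")]


mf = to_miniflow({"eth_src": "aa", "ipv4_src": "10.0.0.1", "tp_dst": 80})
print(mf)                             # (133, ('aa', '10.0.0.1', 80))
print(miniflow_get(mf, "tp_dst"))     # 80
print(miniflow_get(mf, "ipv6_src"))   # None
```

The accessor is the price of the compact form: a field can no longer be read at a fixed offset, which is why the text above mentions special accessor methods.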

Hash Calculation

As mentioned above, the hash is calculated from a subset of the fields present in the packet.

For IPv4 and IPv6 packets these fields are src and dst IP address, IP protocol and, if present (that is, for TCP, UDP, ICMP and SCTP), the src and dst port numbers. This hash is commonly called the 5-tuple hash.

As a corollary of this, all non-IP packets have the same hash; and therefore all contend for the same small handful of cache locations—the exact number is determined by EM_FLOW_HASH_SEGS, which is typically 2.

The calculation of the hash can also be offloaded to the NIC. In this case the hash is provided along with the other packet metadata provided by the NIC and the hash is retrieved rather than re-calculated during emc_processing(). As a side note, the hash provided by the NIC in that case is the one it uses for receive-side scaling (RSS).
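The consequences of hashing only the 5-tuple can be demonstrated with a stand-in hash. The sketch uses `zlib.crc32` purely for illustration; it is not the hash function OVS or any NIC uses, and the dictionary packet format and the constant 0 for non-IP packets are assumptions of the example.

```python
import zlib


def flow_hash(pkt):
    """Hash only the 5-tuple fields; every other field in pkt is ignored."""
    if "ip_src" not in pkt:
        return 0   # no IP fields: every such packet gets the same value
    five_tuple = (pkt["ip_src"], pkt["ip_dst"], pkt["ip_proto"],
                  pkt.get("tp_src", 0), pkt.get("tp_dst", 0))
    return zlib.crc32(repr(five_tuple).encode())


tcp_a = {"ip_src": "10.0.0.1", "ip_dst": "10.0.0.2", "ip_proto": 6,
         "tp_src": 1234, "tp_dst": 80, "ttl": 64}
tcp_a_ttl63 = dict(tcp_a, ttl=63)    # same connection, different TTL
tcp_b = dict(tcp_a, tp_dst=81)       # different destination port
arp_1 = {"eth_type": 0x0806, "eth_src": "aa:aa:aa:aa:aa:aa"}
arp_2 = {"eth_type": 0x0806, "eth_src": "bb:bb:bb:bb:bb:bb"}

print(flow_hash(tcp_a) == flow_hash(tcp_a_ttl63))   # True: TTL is not hashed
print(flow_hash(tcp_a) == flow_hash(tcp_b))         # False
print(flow_hash(arp_1) == flow_hash(arp_2))         # True: non-IP packets collide
```

The first comparison shows why a TTL change leads to a second EMC entry competing for the same candidate locations, and the last shows why all non-IP flows contend for the same few slots.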

Summary

The OVS EMC is an important mechanism that OVS uses to be a performant virtual switch. It is important for both developers and users to be aware of its key features (it is an n-way associative cache, it does not support any wildcarding, the hash calculations used are specifically designed for use with certain IP protocols) and the consequences of these. OVS also uses some complicated data structures such as the miniflow for performance reasons. While developers should be aware of the reasons for using such structures they do not need to spend much time on those details.

About the Author

Billy O’Mahony is a network software engineer with Intel. He has worked on the Open Platform for Network Functions Virtualization (OPNFV) project and accelerated software switching solutions in the user space running on Intel® architecture. His contributions to Open vSwitch with DPDK include Ingress Scheduling and RXQ/PMD Assignment.