Executive Summary

ExxonMobil IT has defined an overall IoT architecture that will enable the digital transformation of the enterprise. Intel has strategically teamed up with important ecosystem partners to help solidify IoT security. They will be supporting specific usage models and workflows required by ExxonMobil as part of their business demands. While the work on enabling the digital transformation is company focused, it is important to understand that the overall objective and resulting solution will transcend ExxonMobil. The solution will pave the way for general ecosystem scale, and result in individual features that can be enabled and used independently.

This paper will present the overall security objectives and required business workflows outlined by ExxonMobil IT that will drive the security requirements, capabilities and feature additions required to fill the gaps. The outcome of this joint effort will be a full end-to-end IoT reference architecture with hardware and device features, OS, virtualization and the management capabilities that meet ExxonMobil requirements.

Authors

Dave Hedge, ExxonMobil, Enterprise Compute Architect

Cesar Martinez Spessot, Intel Corporation, Engineering Director, Senior IoT Solutions Architect

Nicolas Oliver, Intel Corporation, Software Engineer

Marcos Carranza, Intel Corporation, Senior IoT Solutions Architect

Lakshmi Talluru, Intel Corporation, Sr. Director Digital Transformation

Don Nuyen, Intel Corporation, Account Executive

Overview

With the introduction of Internet of Things (IoT) technologies as part of digital transformation within the modern enterprise, a commonly observed trend is the emergence of a variety of point solutions that solve only specific problems (Smart Parking, Smart Building, etc.). While these point solutions introduce unique capabilities and short-term value, for the modern enterprise these solution sets present a significant number of challenges. These challenges include data ingestion method, platform offerings, gateway devices, and overall security posture. These challenges are then magnified when attempting to address the industrial market space, where operational technology (OT) and information technology (IT) meet.

The lack of standardization in the approach toward the desired capabilities increases the cost of implementation, support, and maintenance, and adversely affects the security posture of the entire platform. These shortcomings mean a higher likelihood of use case failure, limited or no interoperability between capabilities, and unclear overall return on investment (ROI). Additionally, many of these solutions are based on inappropriate models of governance that fundamentally neglect privacy and security in their design, which poses scalability challenges.

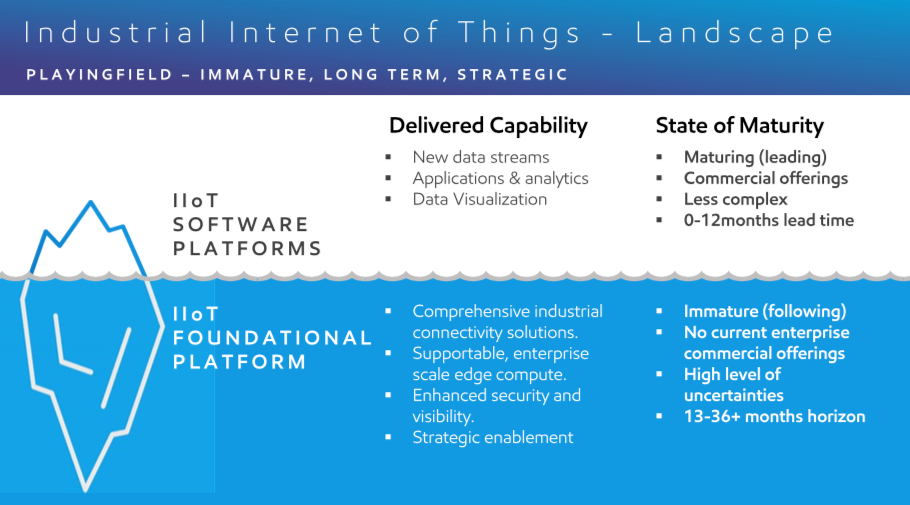

From a conceptual perspective, there are two platforms at play when looking at IoT and Industrial IoT: the Software platform and the Foundational platform. Both are crucial to the overall success of deployment and adoption for enterprise use.

The Software platform, at the highest level, represents where the lines of business would consume the data and arrive at actionable insights. This space is highly visible, and a majority of the value projected from IoT, from a business perspective, will be generated in this space as it matures quickly.

The Foundational platform provides the enabling capabilities to acquire, cleanse and move the data towards where the data will be consumed. While not as visible as the software platform, its importance from an enterprise perspective is actually higher. Without the ability to address some of the challenges listed above, scaling is unattainable, which in turn impacts the value delivered by the software platform.

In order to realize the true benefit of IoT, enterprises should adopt a platform-based approach. This approach should provide common ground for building IoT solutions and be supported by a reference architecture that describes the essential building blocks that define security, privacy, performance, and similar needs. Interfaces should be standardized, and best practices in terms of functionality and information usage need to be provided.

Edge Architecture

The cloud is a powerful tool for many OT workloads, but it can fall short at times for IIoT use cases. Issues such as latency for control plane, massive volumes of remote data, and data aggregation (while maintaining context) require a complementing capability. The continuing evolution of edge computing is providing that capability and enabling the resolution of these issues. Yet, there are several key challenges to consider:

- Edge stacks are increasing in complexity and due to a lack of manageability standards, the management of these systems often requires manual intervention. This is frequently handled internally or through third parties, which leads to non-standard system approaches that drive additional costs and complexities while reducing reliability.

- IT/OT convergence is a key challenge as well. In the past, keeping OT systems as a closed circuit with no IT integration was a common practice. That is no longer an option. In today’s competitive landscape, duplicating infrastructure in that manner is not an ideal solution.

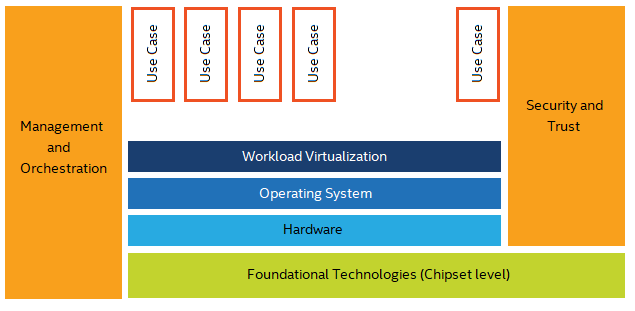

ExxonMobil IT approached Intel to assist in the development and realization of a foundational platform that would address some of the shortcomings that exist in the market for the enterprise today. Architecturally we developed a framework that we believe can enable us to adopt digital technologies in a scalable, managed and secure way. While discussing with Intel, we determined that our approach aligned nicely with the Unified Service Framework strategy developed by Intel and that the synergies could help propel the tech industry forward.

The basis of this framework is to align on an architecture that can scale to meet the needs of our business lines (use cases). These use cases are where the business logic resides and are deployable across a geographically dispersed enterprise. Aligning with Intel on our focus on security, scalability and manageability, we established a focus group across the technology industry to help deliver the components that will evolve into the underlying foundational platform.

Securing the Edge

One of the primary focus areas for the end-to-end IoT architecture defined and presented in this paper is to secure the edge. Security is a multifaceted discipline usually with emphasis on Confidentiality, Integrity and Availability as the fundamental cornerstones.

We are focusing on security with respect to a certain set of usage models, and have itemized them as they relate to the different device management life cycles.

Securing the edge here implies hardened security with respect to:

- Trustworthiness

- Protection of data at rest

- Protection of data in transit

- Protection of data in use

These are relatively broad objectives; the rest of this section will dive deeper to provide further insight and clarification.

Hardware Root of Trust and TPM 2.0 (Trusted Platform Module) are key capabilities required to secure the edge. By building a foundation of trust and security through our partnership with Intel, we are helping to bridge the technology enablement gaps that exist today in the market. By enabling the usage models that were initially intended by existing technologies we are better positioned to address real business needs.

Secure Boot

Secure Boot is a technology that became an industry standard, where the “firmware verifies that the system’s boot-loader, kernel, and potentially, user space, are signed with a cryptographic key authorized by a database stored in the firmware.”

The UEFI (Unified Extensible Firmware Interface) specification defines the above process as Secure Boot, which is “based on the Public Key Infrastructure (PKI) process to authenticate modules before they are allowed to execute. These modules can include firmware drivers, option ROMs, UEFI drivers on disk, UEFI applications, or UEFI boot loaders. Through image authentication before execution, Secure Boot reduces the risk of pre-boot malware attacks such as rootkits.”

Secure Boot relies on cryptographic signatures that are embedded into files using the Authenticode file format. The integrity of the executable is verified by checking the hash. Authenticity and trust are established by checking the signature. The signature is based on X.509 certificates, and must be trusted by the platform.

The system’s firmware has four different sets of keys:

Platform Key (PK): UEFI Secure Boot supports a single PK that establishes a trust relationship between the platform owner and the platform firmware, by controlling access to the KEK database. Each platform has a unique key. The public portion of the key is installed into the system likely at production or manufacturing time. Therefore, this key is:

- The highest level key in Secure Boot

- Usually provided by the motherboard manufacturer

- Owned by the manufacturer

Key Exchange Key (KEK): These keys establish a trust relationship between the firmware and the Operating System. Normally there are multiple KEKs on a platform; it is their private portion that is required to authorize and make changes to the ‘DB’ or ‘DBX’.

Authorized Signatures/Allowlist Database (DB): This database contains a list of public keys used to verify the authenticity of digital signatures of certificates and hashes on any given firmware or software object. For example, an image either signed with a certificate enrolled in DB or that has a hash stored in DB will be allowed to boot.

Forbidden Signatures/Denylist Database (DBX): This database contains a list of public keys that correspond to unauthorized or malicious software or firmware. It is used to invalidate EFI binaries and loadable ROMs when the platform is operating in Secure Mode. It is stored in the DBX variable, which may contain keys, signatures or hashes. In Secure Boot mode, the signature stored in the EFI binary (or a SHA-256 hash computed over the binary if it is unsigned) is compared against the entries in the database.
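The interplay between DB and DBX described above can be illustrated with a minimal sketch. It is not firmware code: the `SignatureDatabases` class, the `may_boot` function and the `signer` identifier are hypothetical names introduced here, signature validation is assumed to have already happened, and hashing the whole image stands in for the Authenticode digest that real firmware computes.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class SignatureDatabases:
    """Toy stand-in for the DB (allow) and DBX (deny) firmware variables."""
    db_hashes: Set[str] = field(default_factory=set)
    db_signers: Set[str] = field(default_factory=set)
    dbx_hashes: Set[str] = field(default_factory=set)
    dbx_signers: Set[str] = field(default_factory=set)


def image_digest(image: bytes) -> str:
    # Simplification: real firmware hashes only the Authenticode-covered
    # regions of the PE/COFF image, not the raw file bytes.
    return hashlib.sha256(image).hexdigest()


def may_boot(image: bytes, signer: Optional[str], dbs: SignatureDatabases) -> bool:
    """Return True if the image would be allowed to execute.

    `signer` is a hypothetical identifier of the certificate whose
    signature over the image was already validated, or None if unsigned.
    """
    digest = image_digest(image)
    # A match in DBX always wins, even if DB would also allow the image.
    if digest in dbs.dbx_hashes:
        return False
    if signer is not None and signer in dbs.dbx_signers:
        return False
    # Otherwise the image needs an enrolled hash or an enrolled signer in DB.
    if digest in dbs.db_hashes:
        return True
    return signer is not None and signer in dbs.db_signers
```

In this sketch, an image signed by an enrolled signer boots unless its hash was later added to DBX, which is how a revoked boot loader is blocked without removing the signer's certificate.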

Data Encryption

Data Encryption allows protecting the private data stored on each of the hosts in the IoT infrastructure. The Linux Unified Key Setup (LUKS) is the standard for Linux hard disk encryption. By providing a standard on-disk format, it facilitates compatibility among different distributions and secure management of multiple encryption keys. By using the TPM 2.0 as credential storage for disk encryption keys, we mitigate the security risk of having disk encryption keys stored in plain text on the device disk, and we remove the need to distribute keys through an insecure channel where they can be disclosed.

Additionally, a platform integrity requirement can be established by using TPM 2.0 Platform Configuration Register (PCR) Policies, where the TPM will provide the disk encryption key only if the PCR values match the ones configured at the time of key creation. Combining PCR Policies with Secure/Measured Boot allows us to prevent unsealing secrets if an unexpected boot binary or boot configuration is currently in use on the platform.
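The PCR policy idea can be modelled in a few lines. The sketch below is a software simulation only, not TPM code: `extend`, `boot` and `SealedKey` are hypothetical names, a single SHA-256 register stands in for the selected PCR set, and a real TPM compares a policy digest rather than the raw register value.

```python
import hashlib
import hmac

PCR_SIZE = 32  # size of a SHA-256 bank register


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new value = SHA-256(old value || digest of data)."""
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def boot(components) -> bytes:
    """Simulate a measured boot: extend the register once per component."""
    pcr = bytes(PCR_SIZE)  # registers start at all zeroes after reset
    for component in components:
        pcr = extend(pcr, component)
    return pcr


class SealedKey:
    """Toy model of a key sealed to an expected PCR value."""

    def __init__(self, key: bytes, expected_pcr: bytes):
        self._key = key
        self._expected_pcr = expected_pcr

    def unseal(self, current_pcr: bytes) -> bytes:
        # The key is released only if the platform booted into the
        # state that was recorded when the key was sealed.
        if not hmac.compare_digest(current_pcr, self._expected_pcr):
            raise PermissionError("PCR policy check failed")
        return self._key


# Usage: seal against a known-good boot chain, then try a modified one.
good_state = boot([b"shim", b"grub", b"kernel"])
sealed = SealedKey(b"k" * 32, good_state)
disk_key = sealed.unseal(boot([b"shim", b"grub", b"kernel"]))
```

Because each extend folds the previous value into the next, both the content and the order of the boot components affect the final register, so a replaced boot loader yields a different value and `unseal` raises `PermissionError`.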

One of the benefits of TPM 2.0 in comparison with the TPM 1.2 specification is the availability of Authorized Policies, which allow updating the authorized boot binaries and boot configuration values in the event of a platform update. In this case, a policy is signed by an authorized principal and can be verified using TPM primitives, allowing the contents of the PCRs to be different at the moment of unsealing the disk encryption keys. This solves the “PCR Fragility” problem associated with the TPM 1.2 family, which was considered a barrier to adopting TPM based security at scale.

From the Operating System, TPM based disk encryption can be configured and automated using the Clevis open-source tool. Clevis is a framework for automated encryption/decryption operations, with support for different key management techniques based on “Pins” (Tang, SSS, TPM2). The TPM2 pin uses the TPM2 Software Stack to interact with the TPM and automate the cryptographic operations used in disk encryption.
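As a hedged illustration of how such a binding might be scripted, the sketch below only assembles a `clevis luks bind` command line for the TPM2 pin and prints it; it does not run anything. The helper name `clevis_bind_command`, the device path `/dev/sda2` and the choice of PCR 7 are assumptions made for the example, and the PCR selection in a real deployment depends on the platform's boot chain.

```python
import json
import shlex
from typing import List


def clevis_bind_command(device: str, pcr_ids: List[int],
                        pcr_bank: str = "sha256") -> List[str]:
    """Build the argument list that binds a LUKS volume to the TPM2 pin.

    The JSON configuration asks Clevis to seal the LUKS passphrase
    against the given PCRs in the given bank.
    """
    config = {
        "pcr_bank": pcr_bank,
        "pcr_ids": ",".join(str(i) for i in pcr_ids),
    }
    return ["clevis", "luks", "bind", "-d", device, "tpm2", json.dumps(config)]


# Usage: print the command for review instead of executing it.
command = clevis_bind_command("/dev/sda2", [7])
print(shlex.join(command))
```

Keeping the command as an argument list avoids shell quoting problems if it is later passed to a process launcher, and printing it first lets an operator review the PCR selection before any volume is modified.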

Execution policies and Integrity protection

The goal of the Integrity Measurement Architecture is to:

- Detect if files have been accidentally or maliciously altered (both remotely and locally)

- Appraise a file’s measurement against a “good” value stored as an extended attribute

- Enforce local file integrity

These goals are complementary to the Mandatory Access Control (MAC) protections provided by Linux Security Modules (LSMs) such as SELinux and Smack, which, depending on their policies, can attempt to protect file integrity.

The following modules provide several integrity functions:

- Collect – measures a file before it is accessed.

- Store – adds the measurement to a kernel resident list and, if a hardware Trusted Platform Module (TPM) is present, extends the IMA PCR

- Attest – if present, uses the TPM to sign the IMA PCR value, to allow a remote attestation of the measurement list

- Appraise – enforces local validation of a measurement against a “good” value stored in an extended attribute of the file

- Protect – protects a file’s security extended attributes (including appraisal hash) against off-line attack.

- Audit – audits file hashes.

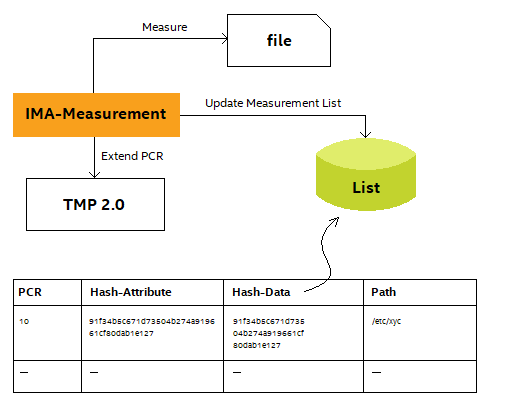

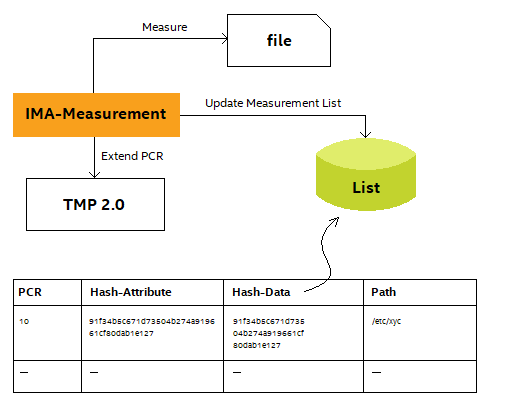

Components

IMA-measurement, one component of the kernel’s integrity subsystem, is part of the overall Integrity Measurement Architecture based on the Trusted Computing Group’s open standards. IMA-measurement maintains a runtime measurement list and, if anchored in a hardware Trusted Platform Module (TPM), an aggregate integrity value over this list. The benefit of anchoring the aggregate integrity value in the TPM is that the measurement list cannot be compromised by any software attack without being detectable. IMA-measurement can be used to attest to the system's runtime integrity. Based on these measurements, a remote party can detect whether critical system files have been modified or if malicious software has been executed.
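A minimal sketch of how a remote party could check a measurement list against the TPM-anchored aggregate follows. It is a simulation under simplifying assumptions: the entry format produced by `measure` is a reduced version of a real IMA template entry, SHA-256 is used throughout, and `quoted_aggregate` stands for a PCR value that would arrive in a signed TPM quote, whose signature check is omitted.

```python
import hashlib
import hmac
from typing import List


def measure(path: str, content: bytes) -> bytes:
    """Produce one list entry: a hash over the file digest and its path."""
    file_digest = hashlib.sha256(content).digest()
    return hashlib.sha256(file_digest + path.encode()).digest()


def extend(aggregate: bytes, entry: bytes) -> bytes:
    """Fold one entry into the running aggregate, as a PCR extend does."""
    return hashlib.sha256(aggregate + entry).digest()


def replay(measurement_list: List[bytes]) -> bytes:
    """Recompute the aggregate from the reported list, in order."""
    aggregate = bytes(32)
    for entry in measurement_list:
        aggregate = extend(aggregate, entry)
    return aggregate


def verify(measurement_list: List[bytes], quoted_aggregate: bytes) -> bool:
    """A list is trustworthy only if its replay matches the quoted value."""
    return hmac.compare_digest(replay(measurement_list), quoted_aggregate)


# Usage: the device measures two files; the verifier replays the list.
reported = [measure("/usr/bin/dockerd", b"dockerd-v1"),
            measure("/etc/docker/daemon.json", b"{}")]
quoted_aggregate = replay(reported)  # stands in for the value held in the TPM
list_is_intact = verify(reported, quoted_aggregate)
```

Dropping, reordering or altering any entry changes the replayed value, which is why software that tampers with the list after the fact cannot make it match the aggregate held in the TPM.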

IMA-appraisal, a second component of the kernel’s integrity subsystem, extends the “secure boot” concept of verifying a file’s integrity before transferring control or allowing the file to be accessed by the OS. The IMA-appraisal extension adds local integrity validation and enforcement of the measurement against a "good" value stored as an extended security.ima attribute. The initial methods for validating security.ima are hash based, which provides file data integrity, and digital signature based, which provides authenticity in addition to file data integrity.

IMA-audit, another component of the kernel’s integrity subsystem, includes file hashes in the system audit logs, which can be used to augment existing system security analytics/forensics.

The IMA-measurement, IMA-appraisal, and IMA-audit aspects of the kernel’s integrity subsystem complement each other, but can be configured and used independently of each other. IMA-measurement and IMA-appraisal allow us to extend the Hardware Root of Trust model into user space applications and configuration files, including the container system.

The benefits of IMA-appraisal are similar to those provided by Secure Boot, in the sense that each file in the Trusted Computing Base is first appraised by the Linux Kernel against a known good value before control is passed to it. For example, the dockerd binary will only be able to execute if the actual digest of the dockerd file matches the approved digest stored in the security.ima attribute. In the digital signature case, the binary will only be able to execute if the signature can be validated against a Public Signing Key stored in the Machine Owner Key infrastructure.
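The hash-based appraisal decision can be sketched as below. This models only the comparison, not the kernel mechanism: a plain dictionary stands in for the file's extended attributes, the stored value is a bare SHA-256 digest rather than the real security.ima encoding, and the `appraise` and `execute_if_trusted` helpers are hypothetical.

```python
import hashlib
import hmac
from typing import Dict


def appraise(content: bytes, xattrs: Dict[str, bytes]) -> bool:
    """Return True if the file content matches its approved digest."""
    approved = xattrs.get("security.ima")
    if approved is None:
        # In an enforcing setup a file without a reference value is rejected.
        return False
    actual = hashlib.sha256(content).digest()
    return hmac.compare_digest(actual, approved)


def execute_if_trusted(name: str, content: bytes, xattrs: Dict[str, bytes]) -> str:
    """Gate 'execution' on a successful appraisal."""
    if not appraise(content, xattrs):
        raise PermissionError(f"{name}: integrity appraisal failed")
    return f"{name}: allowed to run"


# Usage: label a binary with its digest, then appraise it before running.
dockerd = b"\x7fELF...dockerd"
labels = {"security.ima": hashlib.sha256(dockerd).digest()}
result = execute_if_trusted("dockerd", dockerd, labels)
```

The signature-based mode mentioned above would replace the digest comparison with a signature check against a trusted public key, which additionally establishes who approved the file.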

On the other hand, the benefits of IMA-measurement are similar to those provided by the Measured Boot process, where each file in the Trusted Computing Base is first measured by the Linux Kernel before control is passed to it. These measurements are stored in a special file in the Linux Security File System, which can later be used in a remote attestation scenario.

Credential storage

The TPM generates strong, secure cryptographic keys. Strong in the sense that the key is derived from a true random source and a large key space. Secure in the sense that the private key material never leaves the TPM secure boundary in plain form. The TPM stores keys in one of four hierarchies:

- Endorsement hierarchy.

- Platform hierarchy.

- Owner hierarchy, also known as storage hierarchy.

- Null hierarchy.

A hierarchy is a logical collection of entities: keys and NV data blobs. Each hierarchy has a different seed and a different authorization policy. Hierarchies differ by when their seeds are created and by who certifies their primary keys. The Owner hierarchy is reserved for the end user of the platform, and can be used as a secure symmetric/asymmetric key storage facility for any user space application or service that requires one.

An example of symmetric key storage is presented in the Data Encryption section, where a random key is stored in the TPM, and a TPM PCR Policy is used to enforce integrity state validation prior to unsealing the key.

As an example of asymmetric key storage, we present the use case for VPN credentials. VPN clients, like OpenVPN, use a combination of public and private keys and certificates (in addition to other security mechanisms such as Diffie-Hellman parameters and TLS authentication keys) to establish identity and authorization against the VPN server. By using the TPM, a private key is created internally in the NVRAM, and a Certificate Signing Request is derived from the private key using the TPM 2.0 Software Stack. The Certificate Signing Request is then signed by the organization PKI, and a Certificate is provided to the VPN client to establish authentication with the VPN Server. When establishing the connection, the VPN Client needs to prove that it owns the private part, either by interacting directly with the TPM, or indirectly through the PKCS#11 Cryptographic Token Interface.

Another example of asymmetric key storage can be described with Docker Enterprise. To allow a host to pull and run Docker Containers from a Docker Trusted Registry, it is required to use a Docker Client Bundle, which consists of a public and private key pair signed by the Docker Enterprise Certificate Authority. Similarly to the VPN Client case, a private key can be securely generated in the TPM, and a certificate signing request can be derived from the private key to be signed by the Docker Enterprise CA. Once a Certificate is issued to the client, the Docker Daemon will need to interact with the TPM to prove that it has access to the private key when establishing a TLS connection with the Docker Enterprise Service.

Data Sanitization

Data sanitization is one key element in assuring confidentiality.

Sanitization is a process to render access to target data (the data subject to the sanitization technique) on the media infeasible for a given level of recovery effort. The level of effort applied when attempting to retrieve data may range widely.[1] For example, a party might attempt simple keyboard attacks without the use of specialized tools, skills, or knowledge of the medium’s characteristics. On the other end of the spectrum, they might have extensive capabilities and be able to apply state of the art laboratory techniques in their attempts to retrieve data.

Solid State Drives (SSDs) present additional challenges to implement an effective data sanitization process, given that the internals of an SSD differ in almost every aspect from a hard drive, so assuming that the erasure techniques that work for hard drives will also work for SSDs is dangerous.

To implement an effective Data Sanitization process, we rely on the Cryptographic Erase (CE) process. This technique, used widely in Self-Encrypting Drives, leverages the encryption of target data by enabling sanitization of the target data's encryption key. This leaves only the cipher text remaining on the media, effectively sanitizing the data by preventing read-access. Without the encryption key used to encrypt the target data, the data is unrecoverable. The level of effort needed to decrypt this information without the encryption key then is the lesser of the strength of the cryptographic key or the strength of the cryptographic algorithm and mode of operation used to encrypt the data. If strong cryptography is used, sanitization of the target data is reduced to sanitization of the encryption key(s) used to encrypt the target data. Thus, with CE, sanitization may be performed with high assurance much faster than with other sanitization techniques. The encryption itself acts to sanitize the data.

By storing all the sensitive data in encrypted partitions and using the TPM 2.0 to store the disk encryption key, the Cryptographic Erase process can be applied by issuing a TPM2_CC_Clear command that will erase the content of the TPM NV RAM. After the clear operation, any attempt to unlock the encrypted partition by requesting the key from the TPM will result in an error, because the TPM does not hold the disk encryption key any more. It has to be noted that the sanitization process relies on the key being securely stored in the TPM; it should not be stored in plain text on the device disk at any time. Additionally, to prevent disclosing the key while it is in the device memory, an encrypted swap partition is used.
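The effect of Cryptographic Erase can be shown with a toy model. Everything in the sketch is illustrative: `ToyKeyStore` is a hypothetical stand-in for the TPM, its `clear` method mimics the outcome of a TPM clear rather than calling one, and the hash-based XOR keystream is a teaching device, not a cipher to deploy (a real system would rely on LUKS/dm-crypt).

```python
import hashlib
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive a keystream by hashing key, nonce and a block counter."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        out += block
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt (the operation is symmetric) with the toy keystream."""
    stream = _keystream(key, nonce, len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyKeyStore:
    """Holds the only copy of the disk key, like the TPM in the text."""

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)

    def get_key(self) -> bytes:
        if self._key is None:
            raise LookupError("key store has been cleared")
        return self._key

    def clear(self) -> None:
        # Sanitizing the key is the whole erase operation.
        self._key = None


# Usage: encrypt, confirm the data is readable, then erase the key.
store = ToyKeyStore()
nonce = secrets.token_bytes(16)
ciphertext = xor_cipher(store.get_key(), nonce, b"sensor calibration data")
plaintext = xor_cipher(store.get_key(), nonce, ciphertext)
store.clear()
```

After `clear`, the ciphertext is still on the "disk" but `get_key` raises `LookupError`, so the only remaining path to the data is an attack on the key or the cipher itself. This is also why the key must never have been written anywhere outside the store.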

Conclusion

The Foundational IoT Edge Platform presented in this document provides an enterprise grade architecture to enable digital transformation at scale, with emphasis on meeting the security requirements for protection of sensitive data at rest, in transit and in use, and for the trustworthiness of the overall system. ExxonMobil and Intel have collaborated with key ecosystem players in the IoT technology space to pave the road for a better and more secure realization of the Industry 4.0 revolution, which will benefit not only the ExxonMobil business, but also the industrial ecosystem as a whole.

[1] Joko Saputro, NIST Special Publication 800-88, Guidelines for Media Sanitization