Due to the PMEM architecture, we know that performance varies between random and sequential writes, but how does performance compare across filesystems? Is one better than the other for a certain use case?

This short article uses two FIO I/O engines, psync and libpmem, to examine the performance differences between workloads on the XFS and EXT4 filesystems. Each data point was run three times, and the maximum of the three results is the value reported (results are shared in graphs throughout the article).
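Reporting the best of three runs amounts to taking a maximum over repeated measurements of the same data point. The sketch below makes several assumptions: each run was saved with FIO's `--output-format=json` option; with `group_reporting=1`, the aggregate numbers sit in the first entry of the `jobs` list; and `bw` is reported in KiB/s. The helper names and the sample values are hypothetical. They are not the scripts or data behind the graphs in this article.

```python
import json
from pathlib import Path
from typing import Iterable, List


def run_bandwidth_kib(result: dict, direction: str) -> float:
    """Aggregate bandwidth in KiB/s for 'read' or 'write' from one fio JSON result.

    Assumes group_reporting=1, so all threads are folded into jobs[0].
    """
    return float(result["jobs"][0][direction]["bw"])


def load_runs(paths: Iterable[str]) -> List[dict]:
    """Load fio JSON output files (one file per run) from disk."""
    return [json.loads(Path(p).read_text()) for p in paths]


def best_of_runs(results: Iterable[dict], direction: str) -> float:
    """Report the maximum bandwidth across repeated runs of one data point."""
    values = [run_bandwidth_kib(r, direction) for r in results]
    if not values:
        raise ValueError("need at least one run")
    return max(values)


if __name__ == "__main__":
    # Hypothetical, trimmed-down results for three runs of a single data point.
    runs = [
        {"jobs": [{"read": {"bw": 1_150_000}, "write": {"bw": 0}}]},
        {"jobs": [{"read": {"bw": 1_210_000}, "write": {"bw": 0}}]},
        {"jobs": [{"read": {"bw": 1_190_000}, "write": {"bw": 0}}]},
    ]
    print(best_of_runs(runs, "read"))  # 1210000.0
```

Taking the maximum rather than the mean favors the best observed run, so it reports what the configuration can reach rather than its typical variance.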

This article assumes that you have a basic understanding of persistent memory concepts and are familiar with general Persistent Memory Development Kit (PMDK) features. If not, please visit Persistent Memory Programming on the Intel® Developer Zone web site, where you will find the information you need to get started.

Configuration

The system configuration used to collect the FIO performance numbers is shown below:

| Component | Configuration |
|---|---|
| Platform | Wolfpass |
| Processor | 1-node, 2x Intel® Xeon® Platinum 8280L @ 2.70GHz processors (28 cores, 28 threads), ucode: 0x5002f01 |
| Total Memory | 192 GB, 12 slots / 16 GB / 2667 MT/s DDR4 RDIMM |
| Total Persistent Memory | 3.0 TB, 12 slots / 256 GB / 2667 MT/s Intel® Optane™ persistent memory modules |
| BIOS Settings | Intel® Hyper-Threading Technology (Intel® HT Technology) - Disabled |
| Storage | 1x TB P4500 |
| OS | Ubuntu* Linux* 20.04, Kernel: 5.4.0-45 |

See the appendices for workload and configuration details. Results may vary.

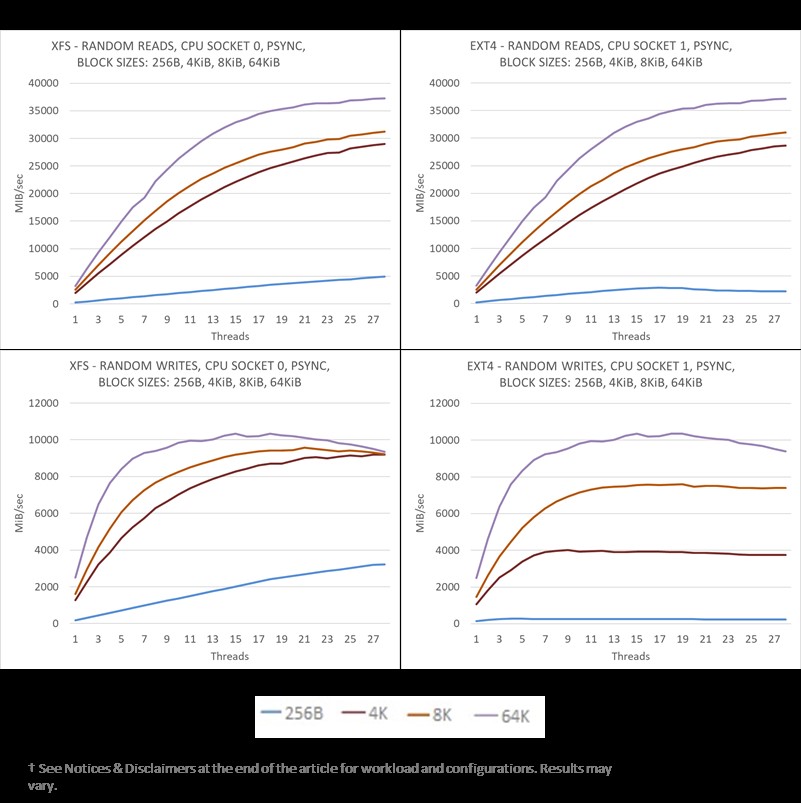

Random Reads and Writes Using Psync

Using the psync engine with four block sizes (256B, 4KiB, 8KiB, and 64KiB), we can see that random read performance is relatively consistent between the two filesystems for block sizes of 4KiB and larger (shown in the top two charts of Figure 1). For the small block size of 256B, XFS scales better beyond 15 threads.

Random writes are shown in the two lower charts of Figure 1. The performance numbers show that XFS handles random writes better than EXT4 for block sizes of 256B, 4KiB, and 8KiB. For large block sizes, such as 64KiB, both filesystems are on par.
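For readers less familiar with the psync engine, it issues each I/O as a synchronous pread(2) or pwrite(2) system call. Every access therefore passes through the filesystem. The sketch below reproduces that access shape with Python's `os.pread`/`os.pwrite` on an ordinary file, assuming iodepth=1 and uniformly random, block-aligned offsets that may repeat (as with norandommap=1). `psync_random_io` is a hypothetical helper for illustration, not FIO code.

```python
import os
import random
import tempfile


def psync_random_io(path: str, block_size: int, count: int,
                    write: bool, seed: int = 0) -> int:
    """Issue `count` block-aligned random I/Os, one pread/pwrite call each.

    Every I/O is a separate system call that goes through the filesystem, as
    with a psync job at iodepth=1. Offsets may repeat (norandommap=1 style).
    Returns the number of bytes transferred.
    """
    rng = random.Random(seed)
    nblocks = os.path.getsize(path) // block_size
    if nblocks == 0:
        raise ValueError("file is smaller than one block")
    buf = b"\xab" * block_size
    done = 0
    fd = os.open(path, os.O_RDWR)
    try:
        for _ in range(count):
            offset = rng.randrange(nblocks) * block_size  # block-aligned offset
            if write:
                done += os.pwrite(fd, buf, offset)
            else:
                done += len(os.pread(fd, block_size, offset))
    finally:
        os.close(fd)
    return done


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "file1.0.0")
        with open(path, "wb") as f:
            f.truncate(1 << 20)  # 1 MiB sparse test file
        print(psync_random_io(path, 4096, 100, write=True))   # 409600
        print(psync_random_io(path, 4096, 100, write=False))  # 409600
```

Because the number of system calls per byte grows as the block size shrinks, the 256B runs put the most per-I/O work on the filesystem.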

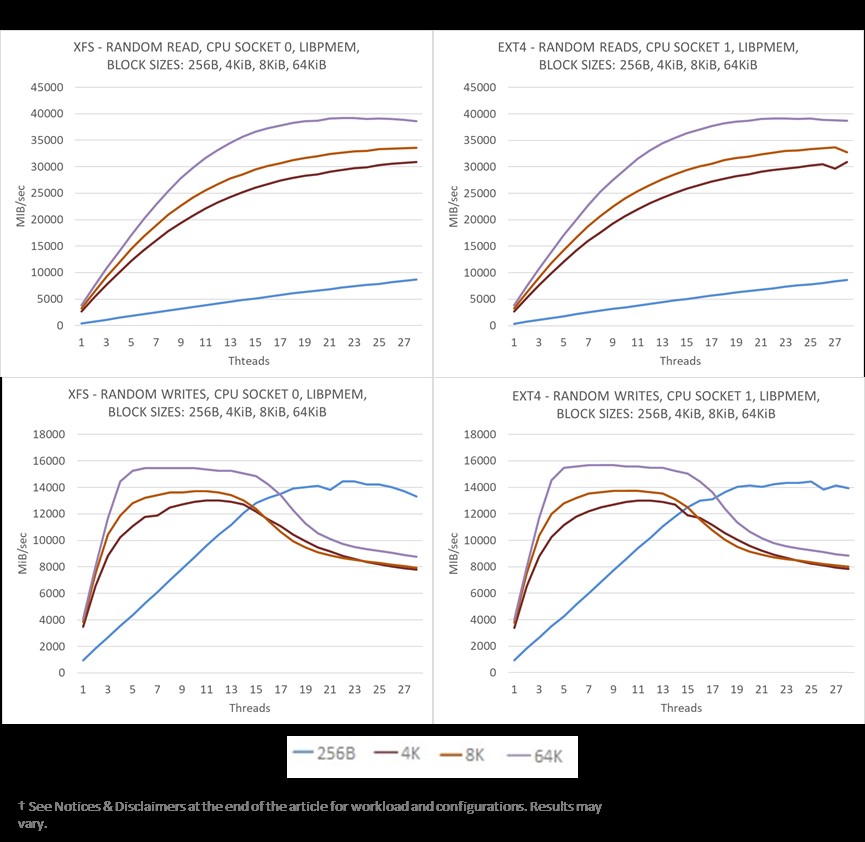

Random Reads and Writes Using Libpmem

Using the libpmem engine with the same four block sizes as in the previous test, we can see that random reads and writes perform consistently between the two filesystems (see Figure 2). This is somewhat expected. By taking advantage of Direct Access (DAX), libpmem operates in user space through memory-mapped files, bypassing the filesystem's data path (page cache and block layer) completely. Since the filesystem is out of the way (with the exception of initial checks and bookkeeping), it should not be a significant factor in the performance results.
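In contrast to the psync pattern, a memory-mapped approach maps the file once and then serves every access with plain loads and stores. The sketch below is only an analogy. Python's `mmap` module does not request MAP_SYNC, is not DAX-aware, and persists data with msync() rather than the user-space cache flushes libpmem performs (for example through `pmem_map_file()` and `pmem_persist()`). `mmap_random_io` is a hypothetical helper.

```python
import mmap
import os
import random
import tempfile


def mmap_random_io(path: str, block_size: int, count: int,
                   write: bool, seed: int = 0) -> int:
    """Random block-sized accesses through a shared memory mapping of a file.

    After the single mmap() call, each access is an ordinary memory copy into
    or out of the mapping, not a read/write system call. Returns bytes touched.
    """
    rng = random.Random(seed)
    buf = b"\xcd" * block_size
    done = 0
    with open(path, "r+b") as f:
        size = os.fstat(f.fileno()).st_size
        nblocks = size // block_size
        if nblocks == 0:
            raise ValueError("file is smaller than one block")
        with mmap.mmap(f.fileno(), size) as m:  # shared, read/write mapping
            for _ in range(count):
                off = rng.randrange(nblocks) * block_size
                if write:
                    m[off:off + block_size] = buf  # store into the mapping
                else:
                    _ = m[off:off + block_size]    # load from the mapping
                done += block_size
            if write:
                # msync(); libpmem would instead flush CPU caches from user space.
                m.flush()
    return done


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "file1.0.0")
        with open(path, "wb") as f:
            f.truncate(1 << 20)  # 1 MiB sparse test file
        print(mmap_random_io(path, 4096, 100, write=True))  # 409600
```

On a DAX-mounted filesystem the mapping points directly at persistent memory, which is why the filesystem choice drops out of the data path once the file is mapped.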

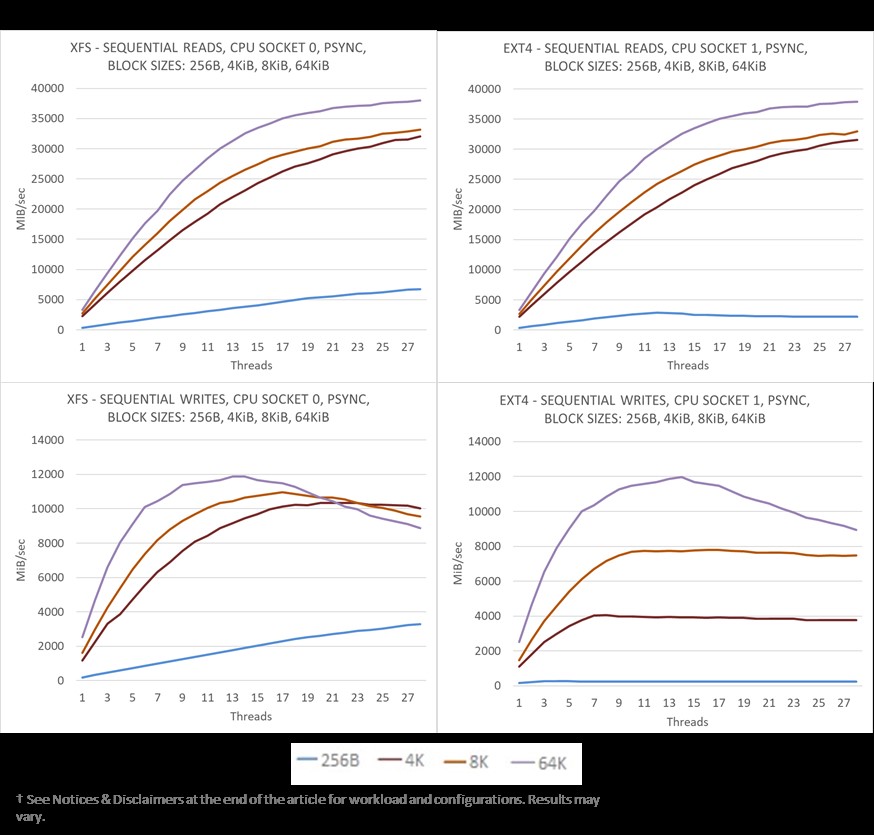

Sequential Reads and Writes Using Psync

Using the psync engine with the same block sizes, the sequential results follow the same pattern as the random results above. Sequential read performance (top two charts of Figure 3) is relatively consistent between the two filesystems for block sizes of 4KiB and larger. For the small block size of 256B, XFS scales better beyond 12 threads.

Sequential writes are shown in the two lower charts of Figure 3. The performance numbers show that XFS handles sequential writes better than EXT4 for block sizes of 256B, 4KiB, and 8KiB. For large block sizes, such as 64KiB, both filesystems are on par.
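Between the random and sequential psync runs, the main thing that changes is the order in which block offsets are visited. Here is a minimal sketch, assuming a single file and a time-based run that loops over the file until the runtime expires. `io_offsets` is a hypothetical helper, and FIO's own offset logic supports many more options.

```python
import itertools
import random
from typing import Iterator


def io_offsets(file_size: int, block_size: int, sequential: bool,
               seed: int = 0) -> Iterator[int]:
    """Yield block-aligned offsets for an endless, time-based run.

    sequential=True: 0, bs, 2*bs, ... wrapping back to 0 at end of file.
    sequential=False: uniform random blocks, repeats allowed, and no record of
    which blocks were already touched (norandommap=1 style).
    """
    nblocks = file_size // block_size
    if nblocks == 0:
        raise ValueError("file is smaller than one block")
    if sequential:
        for i in itertools.cycle(range(nblocks)):
            yield i * block_size
    else:
        rng = random.Random(seed)
        while True:
            yield rng.randrange(nblocks) * block_size


if __name__ == "__main__":
    print(list(itertools.islice(io_offsets(16384, 4096, sequential=True), 6)))
    # [0, 4096, 8192, 12288, 0, 4096]
    print(list(itertools.islice(io_offsets(16384, 4096, sequential=False), 6)))
```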

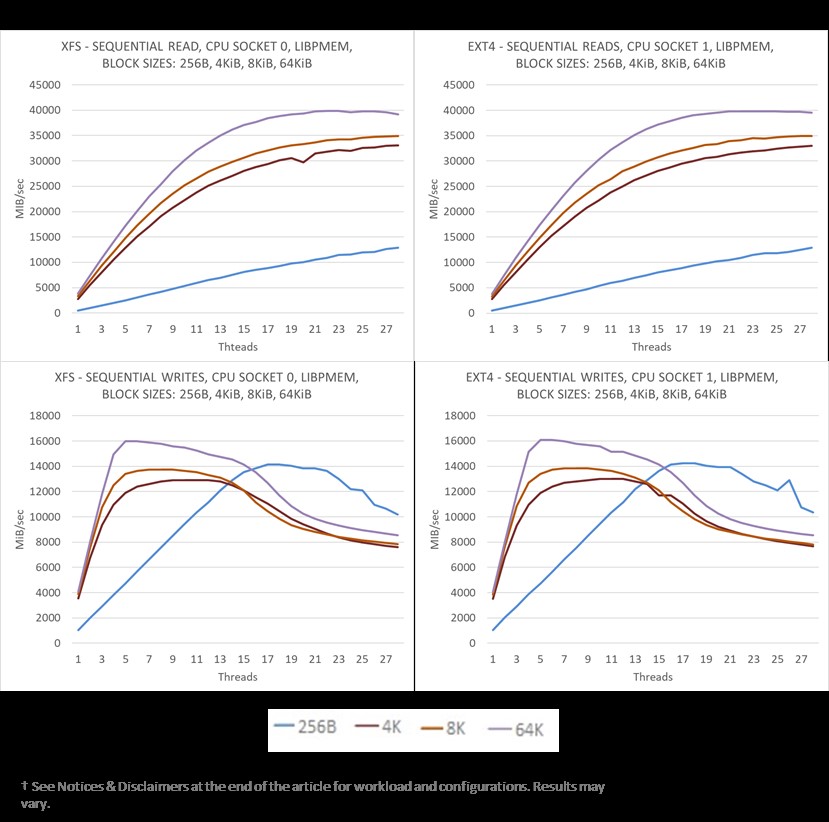

Sequential Reads and Writes Using Libpmem

In this scenario, using the libpmem engine with the same block sizes as the previous tests, sequential read and write performance is consistent across thread counts between the two filesystems (shown in Figure 4). As with random reads and writes, libpmem bypasses the filesystem during data access, so similar performance on XFS and EXT4 is expected.

Conclusion

By now, you have reviewed the read and write performance of the XFS and EXT4 filesystems using two different FIO engines: psync and libpmem. Because DAX lets the libpmem engine bypass the filesystem during data access, libpmem performance is consistent on both filesystems across the board. With the psync engine, however, XFS generally performs better. It handles both random and sequential writes better than EXT4 at block sizes of 256B, 4KiB, and 8KiB, and it scales better for 256B reads at higher thread counts. For large blocks (64KiB), both filesystems perform about the same. Hopefully, the data provided here helps you decide which filesystem best fits your application's I/O performance needs.

Appendix A: FIO Configuration

Note: The libpmem engine implementation in FIO up until commit 67719e1 is incorrect. The code path of the engine that uses non-temporal stores does not execute the SFENCE instruction at the end, so persistence is not guaranteed once the write completes. FIO versions 3.22 and earlier do not contain the needed code changes. For proper testing, either pull the latest master branch or apply the changes from the following patch.

Below is the FIO configuration file used for CPU socket 0 or 1:

```ini
[global]
name=fio-interleaved-n0
rw=${RW}
rwmixread=${RWMIX}
norandommap=1
invalidate=0
bs=${BLOCKSIZE}
numjobs=${THREADS}
time_based=1
clocksource=cpu
ramp_time=30
runtime=120
group_reporting=1
ioengine=${ENGINE}
iodepth=1
fdatasync=${FDATASYNC}
direct=${DIRECT}

[pnode0]
directory=${MOUNTPOINTSOCKET0}
size=630G
filename=file1.0.0:file1.0.1:file1.0.2:file1.0.3:file1.0.4:file1.0.5:file1.0.6:file1.0.7:file1.0.8:file1.0.9:file1.0.10:file1.0.11:file1.0.12:file1.0.13:file1.0.14:file1.0.15:file1.0.16:file1.0.17:file1.0.18:file1.0.19:file1.0.20:file1.0.21:file1.0.22:file1.0.23:file1.0.24:file1.0.25:file1.0.26:file1.0.27:file1.0.28:file1.0.29:file1.0.30:file1.0.31:file1.0.32:file1.0.33:file1.0.34:file1.0.35:file1.0.36:file1.0.37:file1.0.38:file1.0.39:file1.0.40:file1.0.41:file1.0.42:file1.0.43:file1.0.44:file1.0.45:file1.0.46:file1.0.47:file1.0.48:file1.0.49:file1.0.50:file1.0.51:file1.0.52:file1.0.53:file1.0.54:file1.0.55:file1.0.56:file1.0.57:file1.0.58:file1.0.59:file1.0.60:file1.0.61:file1.0.62
file_service_type=random
nrfiles=63
cpus_allowed=${CPUSSOCKET0}
```
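The job file relies on FIO's environment-variable expansion: each ${NAME} placeholder is filled in from the environment when FIO parses the file. A hypothetical helper for preparing one data point might look like the sketch below. It also generates the 63-entry filename list instead of typing it out. The job file name `job.fio`, the CPU range `0-27`, and every default value are assumptions for illustration, not the settings used for the published runs.

```python
import os
from typing import Dict


def fio_filenames(prefix: str = "file1.0", count: int = 63) -> str:
    """Build the colon-separated filename= value: file1.0.0:...:file1.0.62."""
    return ":".join(f"{prefix}.{i}" for i in range(count))


def fio_env(engine: str, rw: str, block_size: str, threads: int,
            mountpoint: str, cpus: str, rwmix: int = 50,
            fdatasync: int = 0, direct: int = 0) -> Dict[str, str]:
    """Values for the ${...} placeholders in the job file.

    All defaults are illustrative, not the article's settings.
    """
    return {
        "ENGINE": engine,
        "RW": rw,
        "RWMIX": str(rwmix),
        "BLOCKSIZE": block_size,
        "THREADS": str(threads),
        "FDATASYNC": str(fdatasync),
        "DIRECT": str(direct),
        "MOUNTPOINTSOCKET0": mountpoint,
        "CPUSSOCKET0": cpus,
    }


if __name__ == "__main__":
    env = {**os.environ, **fio_env("psync", "randwrite", "4k", 16, "/mnt/pmem0", "0-27")}
    # A real run would then be: subprocess.run(["fio", "job.fio"], env=env, check=True)
    print(len(fio_filenames().split(":")))  # 63
```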

Appendix B: XFS and EXT4 Configuration

Formatting and mounting are shown for one device only.

For XFS:

```
# mkfs.xfs -f -i size=2048 -d su=2m,sw=1 -m reflink=0 /dev/pmem0
# mount -t xfs -o noatime,nodiratime,nodiscard,dax /dev/pmem0 /mnt/pmem0/
# xfs_io -c "extsize 2m" /mnt/pmem0
```

For EXT4:

```
# mkfs.ext4 /dev/pmem1
# mount -o dax /dev/pmem1 /mnt/pmem1
```
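Before collecting numbers, it is worth confirming that both filesystems actually came up with DAX enabled. Without DAX, memory-mapped access goes through the page cache. The sketch below parses `/proc/mounts`-style text and assumes the option appears as plain `dax` (as on kernel 5.4) or as `dax=always` (as newer kernels may report it). The sample text and the `dax_mounts` name are hypothetical. On the test system you would pass the contents of `/proc/mounts` instead.

```python
from typing import Dict

# Hypothetical /proc/mounts excerpt; read the real file on the test system instead.
SAMPLE_MOUNTS = """\
/dev/nvme0n1p2 / ext4 rw,relatime 0 0
/dev/pmem0 /mnt/pmem0 xfs rw,noatime,nodiratime,attr2,dax,inode64,noquota 0 0
/dev/pmem1 /mnt/pmem1 ext4 rw,relatime,dax 0 0
"""


def dax_mounts(mounts_text: str) -> Dict[str, str]:
    """Map mount point -> filesystem type for entries whose options enable DAX.

    Kernel 5.4 reports a plain 'dax' option; newer kernels may show 'dax=always'.
    """
    result = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        _device, mountpoint, fstype, options = fields[:4]
        opts = options.split(",")
        if "dax" in opts or "dax=always" in opts:
            result[mountpoint] = fstype
    return result


if __name__ == "__main__":
    print(dax_mounts(SAMPLE_MOUNTS))
    # {'/mnt/pmem0': 'xfs', '/mnt/pmem1': 'ext4'}
```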

Appendix C: Volume Configuration

Creating Regions - Refer to the ipmctl guide for more information

Creation of interleaved regions:

```
# ipmctl create -f -goal
...
```

(reboot the system)

Creating Namespaces - Refer to the ndctl user guide for more information

Namespace creation is shown for one region only (you can list the available regions by running ndctl list -RuN):

```
# ndctl create-namespace -m fsdax -M mem --region=region0
```
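To confirm that a namespace ended up in fsdax mode with its page metadata in system memory (`-M mem`), you can inspect the JSON that `ndctl list -N` prints. This is a sketch that assumes the `mode`, `map`, and `blockdev` field names shown in the sample. It also assumes ndctl may print either a single object or a list. The sample output and the `fsdax_blockdevs` helper are hypothetical.

```python
import json
from typing import List

# Hypothetical output of `ndctl list -N` for one namespace; values are examples.
SAMPLE_NDCTL = """
{
  "dev": "namespace0.0",
  "mode": "fsdax",
  "map": "mem",
  "blockdev": "pmem0"
}
"""


def fsdax_blockdevs(ndctl_json: str) -> List[str]:
    """Return block devices of namespaces in fsdax mode with map=mem.

    Accepts either a single JSON object or a list of them.
    """
    data = json.loads(ndctl_json)
    namespaces = data if isinstance(data, list) else [data]
    return [ns["blockdev"] for ns in namespaces
            if ns.get("mode") == "fsdax" and ns.get("map") == "mem" and "blockdev" in ns]


if __name__ == "__main__":
    print(fsdax_blockdevs(SAMPLE_NDCTL))  # ['pmem0']
```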

The ipmctl and ndctl Cheat Sheet is a good reference too.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See configuration disclosure for configuration details. No product or component can be absolutely secure.

Configuration disclosure: Testing by Intel as of Oct 07, 2020. 1-node, 2x Intel® Xeon® Platinum 8260 processors, Wolfpass platform, Total memory 192 GB, 12 slots / 16 GB / 2667 MT/s DDR4 RDIMM, Total persistent memory 3.0 TB, 12 slots / 256 GB / 2667 MT/s Intel® Optane™ persistent memory modules, Intel® Hyper-Threading Technology (Intel® HT Technology): Disabled, Storage (boot): 1x TB P4500, ucode: 0x400001C, OS: Ubuntu* Linux* 20.04, Kernel: 5.4.0-45.

Security mitigations for the following vulnerabilities: CVE-2017-5753, CVE-2017-5715, CVE-2017-5754, CVE-2018-3640, CVE-2018-3639, CVE-2018-3615, CVE-2018-3620, CVE-2018-3646, CVE-2018-12126, CVE-2018-12130, CVE-2018-12127, CVE-2019-11091, CVE-2019-11135, CVE-2018-12207, CVE-2020-0543

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.