Documentation

A Container Storage Interface (CSI) driver that makes local Intel® Optane™ Persistent Memory available as a filesystem volume to container applications in Kubernetes*.

Overview

Intel PMEM-CSI is a CSI storage driver for container orchestrators like Kubernetes. It makes local persistent memory (PMEM) available as a filesystem volume to container applications. It can currently utilize non-volatile memory devices that can be controlled via the libndctl utility library.

PMEM-CSI implements the CSI specification version 1.x, which is only supported by Kubernetes versions >= v1.13.

Read the full PMEM-CSI documentation.

What Is Intel® Optane™ Persistent Memory?

Intel® Optane™ persistent memory is an innovative memory technology that delivers a unique combination of affordable large capacity and support for data persistence. It can help businesses fuel innovation with increased capacity and unique memory modes, lower overall TCO while maximizing VM densities, and increase memory security with automatic hardware-level encryption.

See the full documentation about Intel® Optane™ persistent memory.

Design and Architecture

The PMEM-CSI driver can operate in two different device modes: LVM and direct. This table contains an overview and comparison of those modes.

| | LVM | direct |
|---|---|---|
| Main advantage | avoids free space fragmentation¹ | simpler, somewhat faster, but free space may get fragmented¹ |
| What is served | LVM logical volume | pmem block device |
| Region affinity² | yes: one LVM volume group is created per region, and a volume has to be in one volume group | yes: a namespace can belong to one region only |
| Namespace modes | fsdax mode³ namespaces pre-created as pools | namespace in fsdax mode created directly, no need to pre-create pools |
| Limiting space usage | can leave part of the device unused during pool creation | no limits, creates namespaces on the device until it runs out of space |
| Name field in namespace | Name gets set to 'pmem-csi' to achieve own vs. foreign marking | Name gets set to VolumeID, without attempting own vs. foreign marking |
| Minimum volume size | 4 MB | 1 GB (see also the alignment adjustment below) |
| Alignment requirements | LVM creation aligns size up to the next 4 MB boundary | the driver aligns size up to the next alignment boundary. The default alignment step is 1 GB. Device(s) in interleaved mode will require a larger minimum, as the size has to be at least one alignment step. The possibly bigger alignment step is calculated as the interleave-set size multiplied by 1 GB |

To see details of these modes and footnotes, click here.
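The minimum-size and alignment rows of the table boil down to round-up arithmetic. The bash sketch below is only an illustration of those rules, not PMEM-CSI code. It assumes sizes are given in bytes, that 1 MB = 2^20 and 1 GB = 2^30 bytes (the table does not say which convention it uses), and that "interleave-set size" means the number of NVDIMMs in the set. The function names `align_up`, `lvm_size` and `direct_size` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the size alignment rules in the table.
# Assumption: 1 MB = 2^20 bytes and 1 GB = 2^30 bytes.
MB=$((1 << 20))
GB=$((1 << 30))

# Round SIZE up to the next multiple of STEP (both in bytes).
align_up() {
  local size=$1 step=$2
  echo $(( (size + step - 1) / step * step ))
}

# LVM mode: at least 4 MB, rounded up to a 4 MB boundary.
lvm_size() {
  local size=$1
  if (( size < 4 * MB )); then size=$((4 * MB)); fi
  align_up "$size" $((4 * MB))
}

# Direct mode: the step is the interleave-set size (assumed to be the
# number of NVDIMMs in the set, default 1) times 1 GB, and a volume is
# at least one step large.
direct_size() {
  local size=$1 ways=${2:-1}
  local step=$((ways * GB))
  if (( size < step )); then size=$step; fi
  align_up "$size" "$step"
}
```

For example, `lvm_size $((5 * MB))` prints 8388608 (8 MB), while `direct_size $((3 * GB)) 2` prints 4294967296: with a two-way interleave set the step is 2 GB, so a 3 GB request grows to 4 GB.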

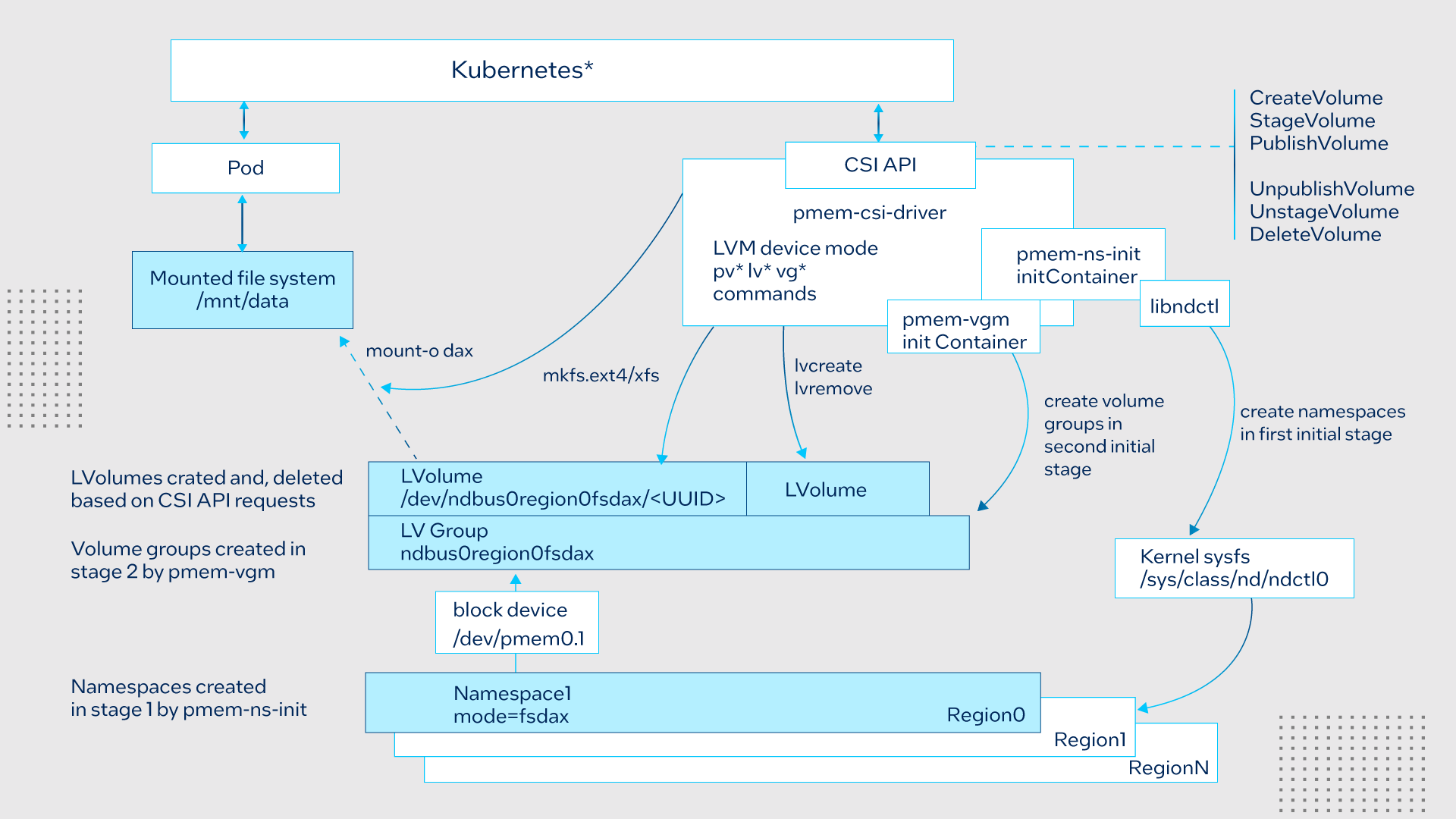

LVM device mode

In Logical Volume Management (LVM) mode, the PMEM-CSI driver uses LVM to avoid the risk of fragmentation. The LVM logical volumes are served to satisfy API requests. One volume group is created per region, ensuring the region affinity of served volumes.

During startup, the driver scans persistent memory for regions and namespaces, and tries to create more namespaces using all or part (selectable via option) of the remaining available space. Later it arranges physical volumes provided by namespaces into LVM volume groups.
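The startup steps can be approximated by hand with the standard `ndctl` and LVM tools. The bash sketch below is a rough manual equivalent for a single region, not the driver's actual implementation. The region name, the size to carve out, the resulting `/dev/pmemN` device path and the volume group name are hypothetical placeholders. By default the commands are only printed; setting `APPLY=1` executes them.

```shell
#!/usr/bin/env bash
# Illustrative manual equivalent of LVM-mode startup (not PMEM-CSI code).
# Commands are only printed unless APPLY=1 is set.
run() {
  if [[ ${APPLY:-0} == 1 ]]; then "$@"; else echo "$*"; fi
}

# Hypothetical inputs: a region, the part of its free space to use,
# the block device the new namespace shows up as, and a VG name.
setup_lvm_pool() {
  local region=$1 size=$2 dev=$3 vg=$4
  # Create an fsdax namespace in the region to serve as a pool.
  run ndctl create-namespace --mode=fsdax --region="$region" --size="$size"
  # Turn the namespace's block device into an LVM physical volume...
  run pvcreate "$dev"
  # ...and place it in one volume group per region.
  run vgcreate "$vg" "$dev"
}
```

Calling `setup_lvm_pool region0 64G /dev/pmem0 vg-region0` prints the three commands for review before anything touches the device.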

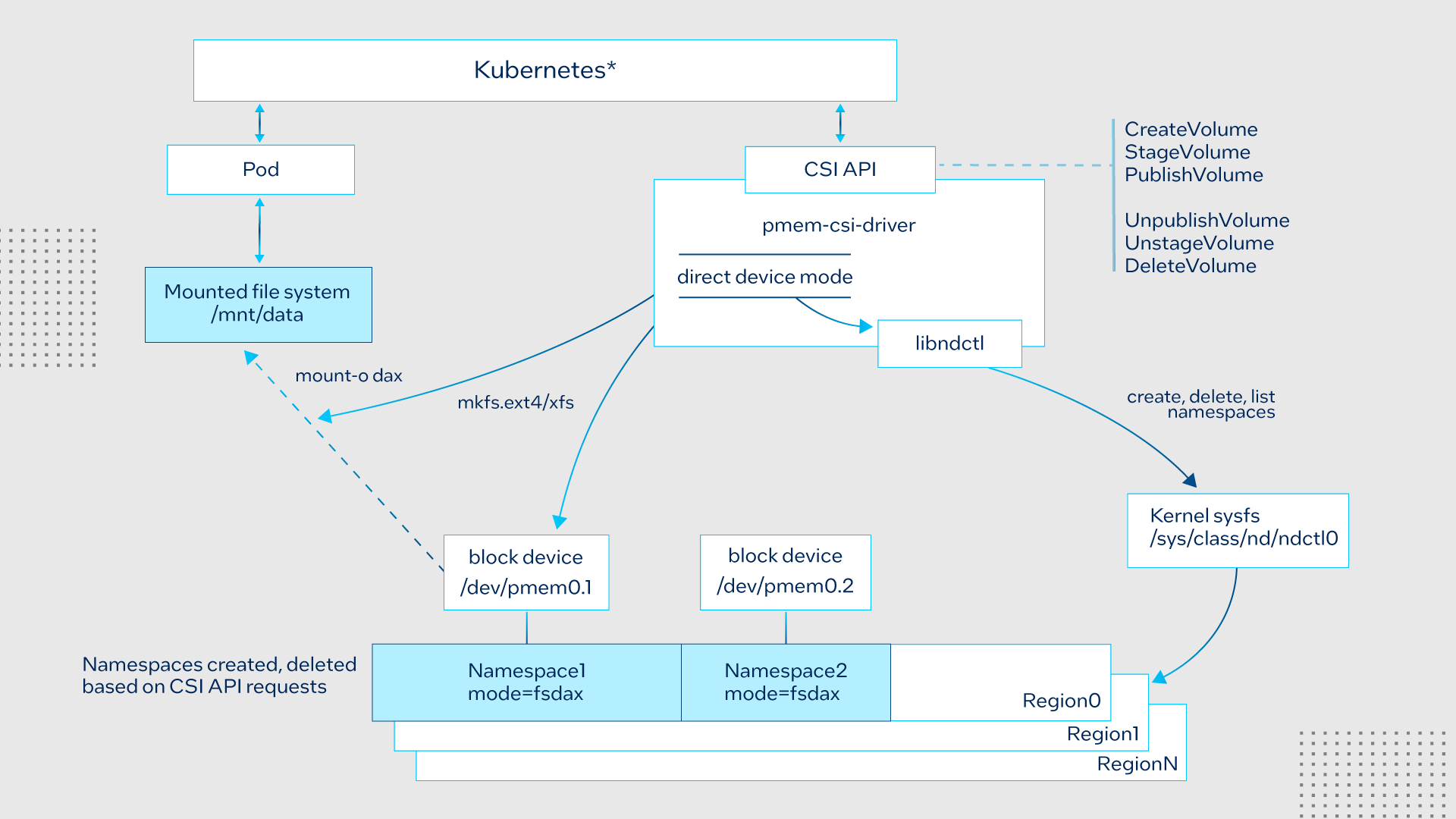

Direct device mode

The following diagram illustrates the operation in Direct device mode:

In direct device mode, the PMEM-CSI driver allocates namespaces directly from the storage device. This creates a risk of device space fragmentation, but reduces complexity and run-time overhead by avoiding an additional device-mapping layer. Direct mode also ensures the region affinity of served volumes, because a provisioned volume can belong to one region only.
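In direct mode each volume is its own namespace, named after the volume ID (see the table above). The bash sketch below shows what such a per-volume allocation could look like with `ndctl`; it is an illustration only, not the driver's code. It assumes a non-interleaved region, so the alignment step is 1 GB (taken as 2^30 bytes). The volume ID, region name and `provision_direct` helper are hypothetical. Commands are printed unless `APPLY=1` is set.

```shell
#!/usr/bin/env bash
# Illustrative per-volume provisioning in direct mode (not PMEM-CSI code).
# Commands are only printed unless APPLY=1 is set.
GB=$((1 << 30))   # assumption: 1 GB step = 2^30 bytes, no interleaving

run() {
  if [[ ${APPLY:-0} == 1 ]]; then "$@"; else echo "$*"; fi
}

# One fsdax namespace per volume, named after the (hypothetical) volume ID.
provision_direct() {
  local volume_id=$1 size=$2 region=$3
  # Round the request up to a whole alignment step, minimum one step.
  local aligned=$(( (size + GB - 1) / GB * GB ))
  if (( aligned == 0 )); then aligned=$GB; fi
  run ndctl create-namespace --mode=fsdax --region="$region" \
      --size="$aligned" --name="$volume_id"
}
```

Because every volume carves its own namespace out of the region, creating and deleting volumes of different sizes over time is what can leave free space fragmented in this mode.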

Kata Container support

Read the latest status on Kata container support here.

Driver modes

The PMEM-CSI driver supports running in different modes, which are selected by passing one of the options below to the driver’s ‘-mode’ command line option. In each mode, it starts a different set of gRPC servers (gRPC is an open source Remote Procedure Call framework) on the given driver endpoint(s).

- Controller mode: a single Controller instance should run at cluster level. When the driver is running in Controller mode, it forwards PMEM volume create/delete requests to the registered node controller servers running on the worker nodes. In this mode, the driver starts the following gRPC servers:

- Node mode: one Node instance should run on each worker node that has persistent memory devices installed. When the driver starts in this mode, it registers with the Controller driver running on the given -registryEndpoint. In this mode, the driver starts the following servers:

Read more about the driver components here.
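The split between the two driver modes can be summarized as two different command lines. The bash sketch below only composes those command lines; it is an illustration under assumptions, not the project's deployment scripts. The binary name `pmem-csi-driver`, the lowercase mode values `controller` and `node`, and the endpoint address are assumptions; only the `-mode` and `-registryEndpoint` options come from the text above, and all other flags are omitted.

```shell
#!/usr/bin/env bash
# Sketch: compose the driver command line for each mode.
# Assumptions: binary name pmem-csi-driver, mode values "controller" and
# "node"; every other flag the real driver needs is omitted here.
driver_cmdline() {
  local mode=$1 registry=${2:-}
  case "$mode" in
    controller)
      # Single cluster-wide instance that node instances register with.
      echo "pmem-csi-driver -mode=controller"
      ;;
    node)
      # Runs on each PMEM node and must know where the controller listens.
      if [[ -z $registry ]]; then
        echo "node mode needs a registry endpoint" >&2
        return 1
      fi
      echo "pmem-csi-driver -mode=node -registryEndpoint=$registry"
      ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1
      ;;
  esac
}
```

The check in the `node` branch reflects the registration step described above: a Node instance without a controller endpoint has nothing to register with.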

Communication between components

To read more about PMEM-CSI security, volume persistency, pod scheduling, and the PMEM-CSI operator, click here.

Installation and Usage

Prerequisites

Software required

The recommended minimum Linux kernel version for running the PMEM-CSI driver is 4.15. See Persistent Memory Programming for more details about supported kernel versions.

Hardware required

Persistent memory device(s) are required for operation. However, some development and testing can be done using QEMU-emulated persistent memory devices. See the “QEMU and Kubernetes” section for the commands that create such a virtual test cluster.

Persistent memory pre-provisioning

The PMEM-CSI driver needs pre-provisioned regions on the NVDIMM device(s). The driver itself intentionally leaves this to the administrator, who can then decide how much PMEM to use for PMEM-CSI and how. Beware that the PMEM-CSI driver will run without errors on a node where PMEM was not prepared for it. It will then report zero local storage for that node, something that is currently only visible in the log files.
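Because a node without prepared PMEM only shows up as zero capacity in the logs, it can help to check each node before deploying. The bash sketch below does this with `ndctl list --regions`, which prints a JSON description of each region. The helper names are hypothetical, and the check simply looks for at least one `"dev"` entry naming a region; it does not verify how much space is free.

```shell
#!/usr/bin/env bash
# Sketch: check that a node has PMEM regions before deploying PMEM-CSI.
has_pmem_regions() {
  local out
  # Fails (non-zero) if ndctl is missing or the command errors out.
  out=$(ndctl list --regions 2>/dev/null) || return 1
  # Require at least one "dev": "regionN" entry in the JSON output.
  grep -Eq '"dev" *: *"region[0-9]+"' <<<"$out"
}

check_node() {
  if has_pmem_regions; then
    echo "PMEM regions found"
  else
    echo "no PMEM regions: PMEM-CSI would report zero capacity here" >&2
    return 1
  fi
}
```

Running `check_node` on each worker node, for example over SSH, turns the silent zero-capacity case into an explicit failure.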

When running the Kubernetes cluster and PMEM-CSI on bare metal, the ipmctl utility can be used to create regions. App Direct mode has two configuration options: interleaved or non-interleaved. In the non-interleaved configuration, one region is created per NVDIMM, so a PMEM-CSI volume cannot be larger than one NVDIMM.

Example of creating regions without interleaving, using all NVDIMMs:

ipmctl create -goal PersistentMemoryType=AppDirectNotInterleaved

Example of creating regions in interleaved mode, using all NVDIMMs:

ipmctl create -goal PersistentMemoryType=AppDirect
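The two `ipmctl` commands above differ only in the goal type. The bash sketch below wraps the choice and then shows the pending goal, which the platform applies on the next reboot. The `create_appdirect_goal` helper and its `interleaved` / `not-interleaved` argument values are hypothetical. Commands are only printed unless `APPLY=1` is set, because creating a goal changes the NVDIMM configuration.

```shell
#!/usr/bin/env bash
# Sketch: choose an App Direct goal for ipmctl. Commands are only
# printed unless APPLY=1. A new goal takes effect after a reboot.
run() {
  if [[ ${APPLY:-0} == 1 ]]; then "$@"; else echo "$*"; fi
}

create_appdirect_goal() {
  local layout=$1   # hypothetical values: interleaved | not-interleaved
  case "$layout" in
    interleaved)     run ipmctl create -goal PersistentMemoryType=AppDirect ;;
    not-interleaved) run ipmctl create -goal PersistentMemoryType=AppDirectNotInterleaved ;;
    *) echo "unknown layout: $layout" >&2; return 1 ;;
  esac
  # Show the pending goal; the regions appear after the next reboot.
  run ipmctl show -goal
}
```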

Installation and setup

This section assumes that a Kubernetes cluster is already available with at least one node that has persistent memory device(s). For development or testing, it is also possible to use a cluster that runs on QEMU virtual machines; see the “QEMU and Kubernetes” section. To read the detailed steps, click here.

- Make sure that the alpha feature gates CSINodeInfo and CSIDriverRegistry are enabled

- Label the cluster nodes that provide persistent memory device(s)

- Set up deployment certificates

- Install the PMEM-CSI driver, using either the PMEM-CSI operator or the reference YAML files

- Create a custom resource
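The node-labeling step is easy to script. The bash sketch below labels a list of nodes with `kubectl label`. The label `storage=pmem` is an assumption; use whatever label the node selector in your PMEM-CSI deployment expects. The node names and the `label_pmem_nodes` helper are hypothetical. Commands are only printed unless `APPLY=1` is set.

```shell
#!/usr/bin/env bash
# Sketch: label the nodes that have PMEM so the driver's node pods are
# scheduled there. Assumption: the deployment selects storage=pmem.
# Commands are only printed unless APPLY=1 is set.
run() {
  if [[ ${APPLY:-0} == 1 ]]; then "$@"; else echo "$*"; fi
}

label_pmem_nodes() {
  local node
  for node in "$@"; do
    # --overwrite makes re-running the script harmless.
    run kubectl label node "$node" storage=pmem --overwrite
  done
}
```

For example, `label_pmem_nodes worker-1 worker-2` prints one `kubectl label` command per node.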

To see how to install and deploy PMEM-CSI from source, click here.

Application Examples

Deploy a Redis cluster through the redis-operator using QEMU-emulated persistent memory devices

Deploy memcached with PMEM as replacement for DRAM.

Install Kubernetes and PMEM-CSI on Google Cloud machines.

Demo Videos

A simple PMEM-CSI demo on Kubernetes

These instructions show how to use persistent memory (PMEM) within Kubernetes. This server is configured with Intel® Optane™ memory in a 2-2-2 configuration and has already been configured for App Direct mode using the ipmctl utility. The persistent memory has to be configured for local storage and is local to each node. This example uses LVM for the storage interface, but direct mode is also available. There are already existing volumes that were created by a previous install of the PMEM-CSI driver. The driver can reuse existing namespaces, but this demo shows how to start from a clean state.

Accelerate Redis in Kubernetes with PMEM-CSI and Intel® Optane™ Persistent Memory

This video demonstrates how to deploy PMEM-CSI to use Intel® Optane™ Persistent Memory on a multi-node Kubernetes cluster, and shows Memtier benchmark results illustrating how it can help accelerate Redis.

Events

KubeCon + CloudNativeCon Europe Virtual
