Introduction

This article shows the Intel® oneAPI Math Kernel Library (oneMKL) Data Parallel C++ usage models applied to the Monte Carlo simulation example with oneMKL Random Number Generators functionality, available, starting with the oneMKL beta release. Six types of examples are demonstrated: reference C++ based, oneMKL Data Parallel C++ based, oneMKL Data Parallel C++ extended, heterogeneous implementations as well as implementations based on using of different memory allocations techniques.

Pi-number Evaluation by Monte Carlo Simulations - Theoretical Aspects

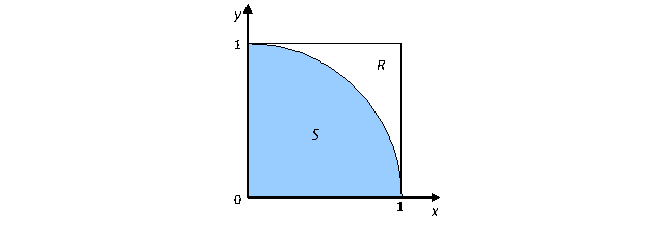

Monte Carlo simulations (methods) are a broad class of computational algorithms that use repeated random sampling to obtain numerical results [Knuth81]. The following example shows how to evaluate Pi-number using by Monte Carlo method. Consider a quadrant inscribed in a unit square as shown in the figure below.

If a point C = (x,y) is chosen from the unit square R randomly, the probability that C is within sector S equals to

Pr(C in S) = Area(S)/Area(R) = Pi/4 (1)

Consider n such points, where n is large enough, and count the number of points falling into S – k numbers. Then the probability Pr ( C in S) can be approximated by the ratio k/n, or

Pi/4 ~ k/n (2)

An approximation of Pi may be found as

Pi ~ 4k/n (3)

According to the law of large numbers, the larger the number that n is, then the more accurate the Pi approximation is. More accurately, from the Bernoulli theorem, for any ξ > 0

Pr(|k/n-4*Pi|>=ξ) <= 1/(4*n*ξ^2) (4)

If x and y coordinates of the tested point C are in [0,1] (abscissa and ordinate, respectively), then point C falls into the sector S, when

x^2 + y^2 <= 1 (5)

Pi-number evaluation by Monte Carlo simulations workflow:

- Generate n 2D points (each point is represented by two uniformly distributed over [0,1) interval random numbers ).

- Count the number of points that falls into the sector S using condition (5).

- Calculate the approximated value of Pi using formula (3).

Reference C++ Example of Pi-number Evaluation Using Monte Carlo Simulation

Let us consider a function estimate_pi that takes a number of 2D points n_points and process workflow above:

float estimate_pi( size_t n_points ) {

float estimated_pi; // Estimated value of Pi

size_t n_under_curve = 0; // Number of points fallen under the curve

// Allocate storage for random numbers

std::vector<float> x(n_points);

std::vector<float> y(n_points);

// Step 1. Generate n_points random numbers

// 1.1. Generator initialization

std::default_random_engine engine(SEED);

std::uniform_real_distribution<float> distr(0.0f, 1.0f);

// 1.2. Random number generation

for(int i = 0; i < n_points; i++) {

x[i] = distr(engine);

y[i] = distr(engine);

}

// Step 2. Count the number of points fallen under the curve

for ( int i = 0; i < n_points; i++ ) {

if (x[i] * x[i] + y[i] * y[i] <= 1.0f)

n_under_curve++;

}

// Step 3. Calculate approximated value of Pi

estimated_pi = n_under_curve / ((float)n_points) * 4.0;

return estimated_pi;

}

The current example uses random functionality from the C++ 11 standard. In Step 1, the random number generator is initialized by creating two instances: engine and distribution

// 1.1. Generator initialization

std::default_random_engine engine(SEED);

std::uniform_real_distribution<float> distr(0.0f, 1.0f);

The engine holds a state of a generator and provides independent and identically distributed random variables. Distribution describes the transform of the generator output with proper statistics and parameters. In this example, uniform_real_distribution produces random floating-point values, uniformly distributed on the interval [a, b).

Random numbers are obtained by passing the engine to an operator() of distribution. A single floating-point variable is obtained. In the following loop fills vectors x and y with random numbers:

// 1.2. Random number generation

for(int i = 0; i < n_points; i++) {

x[i] = distr(engine);

y[i] = distr(engine);

}In Step 2, condition (5) is checked to calculate the number of points that are fallen into the S sector. The result is stored in n_under_of_curver variable:

// Step 2. Count the number of points fallen under the curve

for ( int i = 0; i < n_points; i++ ) {

if (x[i] * x[i] + y[i] * y[i] <= 1.0f)

n_under_curve++;

}

In Step 3, the Pi value is calculated and returned it the main program:

estimated_pi = n_under_curve / ((float)n_points) * 4.0;

return estimated_pi;

oneMKL Data Parallel C++ Example of Pi-number Evaluation by Monte Carlo Simulations

Let us slightly modify the estimate_pi function and add the cl::sycl::queue creation. Data Parallel C++ allows you to choose a device to run on via a selector interface [DPC++ Spec reference] :

float estimate_pi(size_t n_points) {

float estimated_pi; // Estimated value of Pi

size_t n_under_curve = 0; // Number of points fallen under the curve

// Allocate storage for random numbers

cl::sycl::buffer<float, 1> x_buf(cl::sycl::range<1>{n_points});

cl::sycl::buffer<float, 1> y_buf(cl::sycl::range<1>{n_points});

// Choose device to run on and create queue

cl::sycl::gpu_selector selector;

cl::sycl::queue queue(selector);

std::cout << "Running on: " <<

queue.get_device().get_info<cl::sycl::info::device::name>() <<std::endl;

// Step 1. Generate n_points random numbers

// 1.1. Generator initialization

mkl::rng::philox4x32x10 engine(queue, SEED);

mkl::rng::uniform<float, mkl::rng::standard> distr(0.0f, 1.0f);

// 1.2. Random number generation

mkl::rng::generate(distr, engine, n_points, x_buf);

mkl::rng::generate(distr, engine, n_points, y_buf);

//Step 2. Count the number of points fallen under the curve

auto x_acc = x_buf.template get_access<cl::sycl::access::mode::read>();

auto y_acc = y_buf.template get_access<cl::sycl::access::mode::read>();

for ( int i = 0; i < n_points; i++ ) {

if (x_acc[i] * x_acc[i] + y_acc[i] * y_acc[i] <= 1.0f)

n_under_curve++;

}

// Step 3. Calculate approximated value of Pi

estimated_pi = n_under_curve / ((float)n_points) * 4.0;

return estimated_pi;

}

cl::sycl::queue is an input argument of oneMKL functionality, the library’s kernels are submitted in this queue, and no code changes are required to switch between devices (host, cpu and gpu are available for oneMKL beta).

Instead of std::vectors, cl::sycl::buffers are used as a storage for random numbers:

//reference:

std::vector<float> x(n_points);

std::vector<float> y(n_points);

//Data Parallel C++:

cl::sycl::buffer<float, 1> x_buf(cl::sycl::range<1>{n_points});

cl::sycl::buffer<float, 1> y_buf(cl::sycl::range<1>{n_points});

Buffers manage data for the host application and device kernels. The buffer class together with the accessor class are responsible for tracking memory transfers and guaranteeing data consistency across the different kernels.

In Step 1, oneMKL RNG APIs also have two entities to initialize – basic random number generator (or engine) and distribution. Step 1.1. can be represented as follows:

mkl::rng::philox4x32x10 engine(queue, SEED);

mkl::rng::uniform<float, mkl::rng::standard> distr(0.0f, 1.0f);Engines take cl::sycl::queue and an initial value (SEED) as in input of constructor. Distribution mkl::rng::uniform has template parameters for the type of output values and method used for transformation of the engine’s output (Intel® oneAPI Math Kernel Library (oneMKL)—Data Parallel C++ Developer Reference for details ) and parameters of the distribution.

mkl::rng::generate function is called at the Step 1.2. to obtain random numbers:

mkl::rng::generate(distr, engine, n_points, x_buf);

mkl::rng::generate(distr, engine, n_points, y_buf);

This function takes the distribution and engine created in the previous step, the number of elements to be generated, and storage for the result in buffers -> RNG API mkl::rng::generate() is vectorized in comparison with С++ standard API: Vector type library subroutines often perform much more efficiently than scalar type routines. The overhead expenses of scalar type routines are often comparable with the total time required for vector type computations, especially for highly optimized RNGs [VS Notes ].

auto x_acc = x_buf.template get_access<cl::sycl::access::mode::read>();

auto y_acc = y_buf.template get_access<cl::sycl::access::mode::read>();

Other steps are the same as in the Reference C++ example.

oneMKL Data Parallel C++ Example of Monte Carlo Simulation. Extended

Step 2 in the previous example can be optimized by using Data Parallel C++ Parallel STL function. This approach reduces data transfer from the device to the host which improves the performance aspect of simulations. Modified Step 2 is represented below:

auto policy = dpstd::execution::make_sycl_policy<class count>(queue);

auto x_buf_begin = dpstd::begin(x_buf);

auto y_buf_begin = dpstd::begin(y_buf);

auto zip_begin = dpstd::make_zip_iterator(x_buf_begin, y_buf_begin);

n_under_curve = std::count_if(policy, zip_begin, zip_begin + n_points,

[](auto p) {

using std::get;

float x, y;

x = get<0>(p);

y = get<1>(p);

return x*x + y*y <= 1.0f;

});Zip iterator provides a pair of random numbers as an input of the count_if function.

Other steps are the same as in the oneMKL Data Parallel C++ example.

oneMKL Data Parallel C++ Example of Monte Carlo Simulation. Unified Shared Memory (USM)-based

Data Parallel C++ support pointer-based memory management by usage:

- cl::sycl::malloc

- cl::sycl::usm_allocator

see [DPC++ USM] for more details.

- Approach I. Allocate memory by cl::sycl::malloc

cl::sycl::malloc allows to allocate memory directly on host, device or shared (to access memory from both host and device sides):

float* x = (float*) cl::sycl::malloc_shared(n_points * sizeof(float), queue.get_device(), queue.get_context());By using such memory allocation all advantages of pointers arithmetic can be used as well.

Please note: such an approach requires free memory at the end of the program.

- Approach II. Create cl::sycl::usm_allocator and work with standard or user’s containers without worrying about direct memory control:

// Create usm allocator

cl::sycl::usm_allocator<float, cl::sycl::usm::alloc::shared> allocator(queue.get_context(), queue.get_device());

// Allocate storage for random numbers

std::vector <float, cl::sycl::usm_allocator<float, cl::sycl::usm::alloc::shared>> x(n_points, allocator);

Generation is performed in the same way, but instead of cl::sycl::buffer<float, 1>, float* is used:

auto event = mkl::rng::generate(distr, engine, n_points, x.data());Each function returns cl::sycl::event that can be used for synchronization: you can call wait() or wait_and_throw() functions for these event or for entire queue. It allows controlling data-dependencies between sycl kernels manually by calling event.wait() or queue.wait() functions.

To obtain random numbers and count points on Step 2, vectors/pointers can be used natively, without the creation of host accessors:

for ( int i = 0; i < n_points; i++ ) {

if (x[i] * x[i] + y[i] * y[i] <= 1.0f)

n_under_curve++;

}

oneMKL Data Parallel C++ Example of Monte Carlo Simulation. Heterogeneous Execution

An example may be extended for a case, where part of computation goes on CPU and another one on the accelerator(s). In this case, APIs stay the same, you need to choose the device depending only on cl::sycl::queue.

Let us consider an example, where one portion of numbers are generated on Host and another one on GPU:

// Create queues for Host and GPU

cl::sycl::queue queue_gpu(cl::sycl::gpu_selector(), exception_handler);

cl::sycl::queue queue_host(cl::sycl::host_selector(), exception_handler);

Two queues are needed for parallel execution on different devices.

Memory allocation may be also done separately, for CPU part allocation may be done directly on Host:

// Create usm allocators for shared and host memory allocation

cl::sycl::usm_allocator<float, cl::sycl::usm::alloc::shared> allocator_gpu(queue_gpu.get_context(), queue_gpu.get_device());

// Allocate storage for random numbers

std::vector <float, decltype(allocator_gpu)> x(n_points, allocator_gpu);

std::vector <float> y(n_points);

oneMKL RNG engines should be constructed for the exact type of device, so two objects are required. The second engine may be initialized with another seed, or should be skipped on n_points elements to continue the sequence, produced from the state of random number generator properly, see RNGs in parallel computations in [VS Notes] for details:

mkl::rng::philox4x32x10 engine_gpu(queue_gpu, SEED);

mkl::rng::philox4x32x10 engine_host(queue_host, SEED);

mkl::rng::skip_ahead(engine_host, n_points);

Generation and post-processing may be called as previous; in this example std::count_if is used on a host with parallel execution policy:

auto event1 = mkl::rng::generate(distr, engine_gpu, n_points, x.data());

auto event2 = mkl::rng::generate(distr, engine_host, n_points, y.data());

// Wait to finish generation

event1.wait_and_throw();

event2.wait_and_throw();

n_under_curve = std::count_if(std::execution::par, dpstd::make_zip_iterator(x.begin(),y.begin()), dpstd::make_zip_iterator(x.end(),y.end()), [](auto p) {

using std::get;

float dx, dy;

dx = get<0>(p);

dy = get<1>(p);

return dx*dx + dy*dy <= 1.0f;

});

This approach allows balancing work between devices, or completes different tasks in parallel on any supported devices within a single API.

oneMKL Data Parallel C++ Exception Handling

Error handling via asynchronous exceptions could be supported in the following manner:

auto exception_handler = [] (cl::sycl::exception_list exceptions) {

for (std::exception_ptr const& e : exceptions) {

try {

std::rethrow_exception(e);

}

catch(cl::sycl::exception const& e) {

std::cout << "Caught asynchronous SYCL exception:\n"

<< e.what() << std::endl;

}

}

};

// Choose device to run on and create queue

cl::sycl::gpu_selector selector;

cl::sycl::queue queue(selector, exception_handler);

The exception handler is passed in cl::sycl::queue constructor, main DPC++ computational part (Steps 1-2) may be wrapped into the try-catch block to handle DPC++ runtime and oneMKL library exceptions.

try {

//DPC++ computational part

}

catch(cl::sycl::exception const& e) {

std::cout << "\t\tSYCL exception \n" << e.what() << std::endl;

}

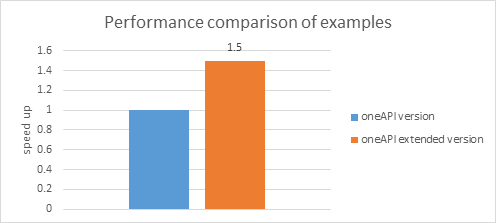

Performance Comparison

Results of the comparison between oneMKL Data Parallel C++ and Extended oneMKL Data Parallel C++ examples are represented below:

Simulation assumptions: Hardware: Intel® Core™ i7-6770HQ CPU @ 2.60GHz GPU Intel® Gen9 HD Graphics NEO; OS: Ubuntu 18.04.1 LTS; Software: Intel® oneMKL beta;

Measurement assumptions: Number of generated 2D points:10^8; Basic random number generator: mkl::rng::philox4x32x10; Random number distribution: uniform single precision; Measurement region: computational part of estimate_pi() functions (without memory allocation overhead);

Attachment:

The attachment contains six examples of the code discussed above:

- pi_mc_reference.cpp - the reference DPC++ implementation

- pi_mc_oneAPI.cpp - the initial oneMKL implementation

- pi_mc_oneAPI_extended.cpp - the extended version of such example with DPSTD

- pi_mc_oneAPI_heterogeneous.cpp - the heterogeneous version

- pi_mc_oneAPI_usm_allocator.cpp - the version of the example shows how to use usm_allocator

- pi_mc_oneAPI_usm_malloc.cpp - the version of the example shows how to use malloc_shared

How to Build the Examples on Linux* OS:

- clang++ -std=c++11 -O3 pi_mc_reference.cpp -o pi_mc_reference.out

- clang++ -fsycl -std=c++11 -O3 -DMKL_ILP64 pi_mc_oneAPI.cpp -o pi_mc_oneAPI.out -lmkl_intel_ilp64 -lmkl_sequential -lmkl_core -lmkl_sycl

- clang++ -fsycl -std=c++14 -O3 -DMKL_ILP64 pi_mc_oneAPI_extended.cpp -o pi_mc_oneAPI_extended.out

-lmkl_intel_ilp64 -lmkl_sequential -lmkl_core -lmkl_sycl - clang++ -fsycl -std=c++14 -O3 -DMKL_ILP64 pi_mc_oneAPI_heterogeneous.cpp

-o pi_mc_oneAPI_pi_mc_oneAPI_heterogeneous.out -lmkl_intel_ilp64 -lmkl_sequential -lmkl_core -lmkl_sycl -ltbb - clang++ -fsycl -std=c++11 -O3 -DMKL_ILP64 pi_mc_oneAPI_usm_allocator.cpp

-o pi_mc_oneAPI_usm_allocator.out -lmkl_intel_ilp64 -lmkl_sequential -lmkl_core -lmkl_sycl - clang++ -fsycl -std=c++11 -O3 -DMKL_ILP64 pi_mc_oneAPI_usm_malloc.cpp

-o pi_mc_oneAPI_usm_malloc.out -lmkl_intel_ilp64 -lmkl_sequential -lmkl_core -lmkl_sycl

Bibliography

[Knuth81] Knuth, Donald E. The Art of Computer Programming, Volume 2, Seminumerical Algorithms, 2nd edition, Addison-Wesley Publishing Company, Reading, Massachusetts, 1981.

[VS Notes] Intel® MKL Vector Statistics Notes, https://software.intel.com/en-us/node/810895

[Intel MKL Documentation] https://software.intel.com/en-us/mkl/documentation/view-all

[DPC++ Spec reference] https://spec.oneapi.com/oneAPI/Elements/dpcpp/dpcpp_root.html#

[oneMKL Data Parallel C++ Developer reference ] https://software.intel.com/en-us/onemkl-developer-reference-c

[PSTL documentation] https://spec.oneapi.com/oneAPI/Elements/onedpl/onedpl_root.html#extensions-to-parallel-stl

[DPC++ USM] https://github.com/intel/llvm/blob/sycl/sycl/doc/extensions/USM/USM.adoc

Authors:

Elizarova, Alina alina.elizarova@intel.com

Dyakov, Pavel pavel.dyakov@intel.com

Fedorov, Gennady Gennady.Fedorov@intel.com