As applications grow more complex, systems need more computational resources for workloads such as data encryption and data compression. The exponential growth of data demands more efficient ways to handle data in process, in motion, and at rest. Intel® QuickAssist Technology (Intel® QAT) saves cycles, time, space, and cost by offloading these compute-intensive workloads from the CPU, which frees up capacity for other work.

Ceph, a leading open-source software-defined storage application, provides excellent scalability and a centrally managed cluster with file, object, and block interfaces. To deploy more object storage devices (OSDs) on a server that can manage more disks but lacks CPU resources, we can move the encryption and compression workloads to Intel QAT. The CPU resources saved can then be used to deploy more OSDs and manage more disks. We implemented encryption and compression plugins in Ceph that use Intel QAT, so that Ceph users can easily offload the actual compression and encryption requests.

This article describes how Ceph uses Intel QAT to offload encryption and compression from the CPU. It also explains how to set up the Intel QAT configuration file and how to enable Intel QAT in Ceph.

Intel QAT introduction

Now, let's take a look at what Intel QAT is and how to configure it.

Intel QAT is a hardware and software solution that performs encryption, decryption, compression, and decompression efficiently. It exposes these operations as accelerated services, so that the actual compression and encryption requests are offloaded from the CPU.

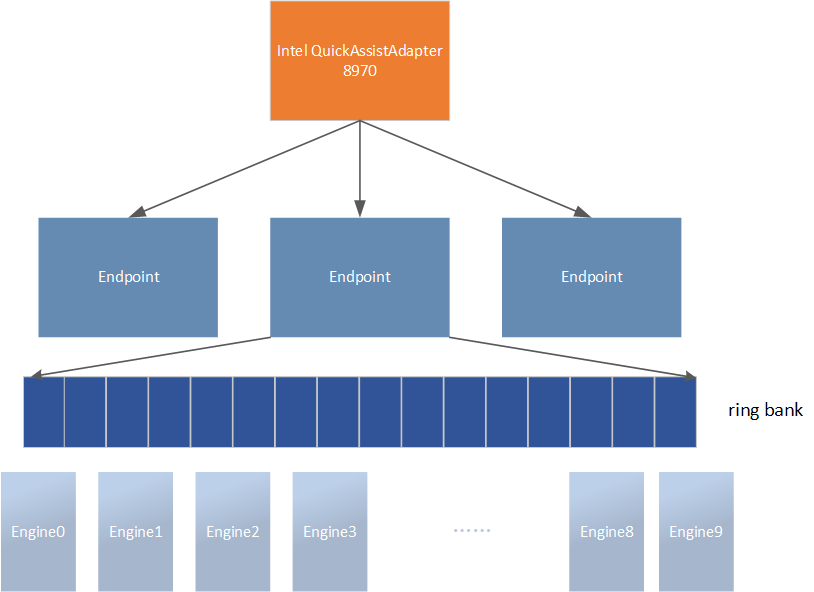

One example of an Intel QAT device is the Intel® QuickAssist Adapter 8970, a PCIe (Peripheral Component Interconnect Express) add-in card. The card has three Endpoints, which are also called Intel QAT acceleration devices. Each Endpoint has ring buffers for logical instances, and it load balances requests from all rings of a given service type across all available hardware engines of the corresponding type. The engines are the computational units.

An instance (also called a logical instance) is a channel to a specific hardware accelerator. Instances must be declared in the Intel QAT configuration file before they can be used. The number of instances that can be configured depends on several factors.

The number of rings and the types of service enabled determine the maximum number of instances of each type. In the configuration file, NumberDcInstances * NumProcesses must be less than or equal to the maximum number of compression instances that the enabled services can provide. The same rule applies to NumberCyInstances * NumProcesses for crypto instances.

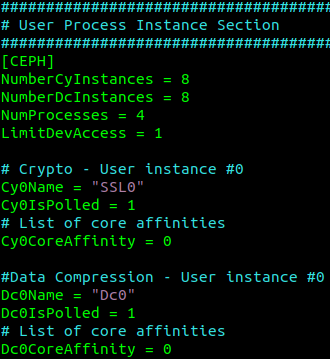

Figure 2 shows the minimum set of parameters that need to be changed in the Intel QAT configuration file. In this example, both the cy (crypto) and dc (compression) services are enabled, so at most 32 instances of each service type can be created. We share them among 4 processes and create 8 instances for each process, which uses all 32.
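The instance limit described above can be checked with a few lines of shell arithmetic before the configuration file is edited. This is only a sketch: the function name fits_instance_budget is hypothetical, and the numbers (8 instances per process, 4 processes, a limit of 32) are taken from the example in this article rather than queried from a device.

```shell
# Hypothetical helper: succeeds when instances-per-process multiplied by the
# number of processes does not exceed the per-service instance limit.
#   $1 = instances per process (NumberCyInstances or NumberDcInstances)
#   $2 = number of processes
#   $3 = maximum number of instances for this service type
fits_instance_budget() {
  [ $(( $1 * $2 )) -le "$3" ]
}

# Values from the example in the text: 8 instances x 4 processes, limit 32.
if fits_instance_budget 8 4 32; then
  echo "fits: 8 x 4 <= 32"
else
  echo "too many instances requested"
fi
```

Raising either factor (for example, 9 instances for each of 4 processes) makes the check fail, which is the situation the configuration must avoid.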

In the CEPH section, LimitDevAccess controls whether the user space processes in this section are restricted to instances on a single Endpoint: 0 means the processes can access multiple Endpoints, and 1 means each process can access only one Endpoint. CyXIsPolled and DcXIsPolled set the mode of instance X: 0 for interrupt mode, 1 for polling mode (the mode used in Ceph), and 2 for epoll mode. CyXCoreAffinity and DcXCoreAffinity specify the core to which instance X is affinitized, and they are only valid when the corresponding IsPolled value is 0 or 2.
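Writing eight crypto and eight compression instances by hand is repetitive, so a small generator can help. The sketch below prints a CEPH section in polling mode. The function name print_ceph_section is hypothetical, and the exact key spellings (for example NumProcesses and the Name keys) and the LimitDevAccess value of 1 are assumptions about the driver's file format, so compare the output with the sample configuration shipped with your driver before using it.

```shell
# Hypothetical generator for a [CEPH] user-space section.
#   $1 = instances per process, $2 = number of processes
print_ceph_section() {
  count=$1
  echo "[CEPH]"
  echo "NumberCyInstances = $count"
  echo "NumberDcInstances = $count"
  echo "NumProcesses = $2"
  echo "LimitDevAccess = 1"              # assumption: one Endpoint per process
  i=0
  while [ "$i" -lt "$count" ]; do
    for svc in Cy Dc; do
      echo "${svc}${i}Name = \"${svc}${i}\""
      echo "${svc}${i}IsPolled = 1"      # polling mode, as used in Ceph
      echo "${svc}${i}CoreAffinity = 0"  # not used when IsPolled is 1
    done
    i=$((i + 1))
  done
}

# Values from the example in the text: 8 instances for each of 4 processes.
print_ceph_section 8 4
```

The output is meant to be reviewed and merged into the device configuration file by hand; the sketch does not touch any file itself.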

Intel QAT usage in Ceph

Intel QAT encryption in Ceph

Encryption is used only in the Ceph Object Gateway (RGW). RGW implements server-side encryption in its S3 API according to the Amazon SSE-C specification, and it supports AES-256-CBC.

The Ceph code contains three implementations of AES-256-CBC: OpenSSL, ISA-L, and Intel QAT. All three implement the same plugin interface, and the plugin_crypto_accelerator parameter selects which one is used.

In the encryption module, Ceph splits each RGW object into chunks of CHUNK_SIZE bytes and sends them to the plugin for encryption. The Intel QAT encryption plugin is built directly on top of the Intel QAT user space library and submits requests in sync mode.
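To make the chunking step concrete, the sketch below counts how many chunks an object of a given size is split into. The 4096-byte chunk size is an assumption for illustration only, since this article does not state the value of CHUNK_SIZE, and the function name chunk_count is hypothetical. How Ceph handles a trailing partial chunk is not covered here.

```shell
# Hypothetical helper: number of fixed-size chunks needed to cover an object.
#   $1 = object size in bytes
#   $2 = chunk size in bytes (assumed value, see the note above)
chunk_count() {
  echo $(( ($1 + $2 - 1) / $2 ))   # integer ceiling of size / chunk size
}

# A 10000-byte object with 4096-byte chunks: two full chunks plus a
# 1808-byte remainder.
chunk_count 10000 4096   # prints 3
```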

Intel QAT compression in Ceph

Currently, Ceph's compression plugins implement a variety of compression algorithms, including brotli, lz4, snappy, zlib, and zstd. We implement the zlib algorithm using Intel QAT. These compression plugins work with RGW, the messenger, and BlueStore.

The QATzip library is used here to reimplement the above algorithms (zlib, lz4, zstd). Compared with programming against the Intel QAT user space library directly, QATzip simplifies the code while still making it easy to take full advantage of the performance that Intel QAT provides.

The Intel QAT implementation is not a separate plugin; it is part of the existing compression plugin. To offload the compression workload to Intel QAT, you only need to set the configuration parameter qat_compressor_enabled to true.

How to use

This section shows how to enable the Intel QAT plugin in Ceph. It assumes that the Intel QAT driver and QATzip have been installed successfully, that the environment variable ICP_ROOT points to the Intel QAT driver source directory, and that the environment variable QZ_ROOT points to the QATzip source directory.

Download the Intel QAT driver and QATzip:

Intel QAT driver: https://www.intel.com/content/www/us/en/download/19734/intel-quickassist-technology-driver-for-linux-hw-version-1-7.html

QATzip: https://github.com/intel/QATzip

For encryption, we should build Ceph using the parameter:

$ ./do_cmake.sh -DWITH_QAT=ON

$ cd build

$ ninja -j{ncpus}

Then add the following setting to ceph.conf:

plugin_crypto_accelerator=crypto_qat

After the radosgw process restarts, Intel QAT encryption takes effect.

Note: The Intel QAT config section name must be CEPH for encryption.

For compression, we should build Ceph using the parameters:

$ ./do_cmake.sh -DWITH_QATZIP=ON -DWITH_QAT=ON

$ cd build

$ ninja -j{ncpus}

Then add the following parameters to the Ceph configuration file (ceph.conf):

qat_compressor_enabled=true

bluestore_compression_mode=aggressive # only for BlueStore

bluestore_compression_algorithm=zlib # only for BlueStore

ms_osd_compress_mode=force # only for messenger

ms_osd_compression_algorithm=zlib # only for messenger

If the Intel QAT config section is not named SHIM, we should set the following environment variable before restarting processes such as radosgw and ceph-osd.

export QAT_SECTION_NAME=CEPH # assumes the QAT config section name is CEPH
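Whether the variable is needed can be decided by looking for a SHIM section in the device configuration file. The sketch below is one way to do that. The helper name has_qat_section, the QAT_CONF variable, and the fallback path are all hypothetical, because the real path depends on the device and on how the driver was installed.

```shell
# Hypothetical helper: succeeds when config file $1 has a section named $2.
has_qat_section() {
  grep -q "^\[$2\]" "$1"
}

# Placeholder path; point QAT_CONF at the real device configuration file.
conf=${QAT_CONF:-./qat_dev0.conf}

# Export the variable only when the file exists and has no [SHIM] section.
if [ -f "$conf" ] && ! has_qat_section "$conf" SHIM; then
  export QAT_SECTION_NAME=CEPH   # assumes the section is named CEPH
fi
```

The variable has to be present in the environment of the radosgw and ceph-osd processes themselves, so it should be set wherever those processes are started.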

Note that to use compression in RGW, we need to create a storage class associated with a pool, and then set a compression algorithm for that storage class.

$CEPH_BIN/radosgw-admin zonegroup placement add --rgw-zonegroup default --placement-id default-placement --storage-class COLD -c $conf_fn

$CEPH_BIN/radosgw-admin zone placement add --rgw-zone default --placement-id default-placement --storage-class COLD --compression zlib --data-pool default.rgw.cold.data -c $conf_fn

If we don’t want to enable compression for every OSD (OSDs use BlueStore), we can instead enable compression on a specific pool, like this:

ceph osd pool set <pool-name> compression_algorithm <algorithm>

ceph osd pool set <pool-name> compression_mode <mode>

ceph osd pool set <pool-name> compression_required_ratio <ratio>

ceph osd pool set <pool-name> compression_min_blob_size <size>

ceph osd pool set <pool-name> compression_max_blob_size <size>
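As a concrete illustration, the sketch below prints the first two of these commands for one pool so that they can be reviewed before being run. The function name is hypothetical, and the pool name mypool and the values zlib and aggressive are placeholders chosen to match the ceph.conf example earlier in this section, not tuning recommendations.

```shell
# Print (do not run) pool-level compression commands for the pool named $1.
print_pool_compression_cmds() {
  for setting in "compression_algorithm zlib" "compression_mode aggressive"; do
    echo "ceph osd pool set $1 $setting"
  done
}

pool=mypool   # placeholder pool name
print_pool_compression_cmds "$pool"
```

Once the printed commands look right, they can be run by hand on a node with admin access to the cluster.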

Summary

In this article, we explained what Intel QAT is and what some of the parameters in the Intel QAT configuration file mean. We also described where and how Intel QAT is currently used in Ceph. Because the current Intel QAT device is a separate PCIe card, whether to adopt it still requires some consideration. Once Intel QAT is integrated into the CPU, much as integrated graphics are, we should prioritize offloading more compute-intensive workloads to Intel QAT, which saves more CPU resources for other tasks.

References

• https://docs.ceph.com/en/latest/radosgw/qat-accel/

• https://docs.ceph.com/en/latest/radosgw/compression/

• https://docs.ceph.com/en/latest/radosgw/encryption/

• https://docs.ceph.com/en/latest/rados/configuration/bluestore-config-ref/#inline-compression

• https://www.intel.com/content/www/us/en/download/19734/intel-quickassist-technology-driver-for-linux-hw-version-1-7.html

• https://github.com/intel/QATzip

• https://www.intel.com/content/www/us/en/architecture-and-technology/intel-quick-assist-technology-overview.html

• https://01.org/sites/default/files/downloads//336210-021-intel-qat-programmers-guide-v1.7.pdf

• https://01.org/sites/default/files/downloads/621658-intelr-qat-debugging-guide-rev1-0_0.pdf