Quality of Service (QoS) refers to the overall performance of a network and is used in telephony and computer networks to describe the customer’s experience with the network. The most straightforward way of guaranteeing a good quality of service to the end user is to overprovision the network.

Overprovisioning a network means the network has more bandwidth available to it than the customers can consume at any given time. This simplistic approach to quality of service is also the most expensive. It allows traffic over the network to spike at peak times without eating into another customer’s minimum bandwidth. Without that headroom, the network is exposed to the “noisy neighbor” scenario, where someone on the network consumes more than their share of bandwidth, reducing the bandwidth that is available to their neighbors. In this blog post, we will take a look at four QoS rules: two that are already implemented and two that are currently in development.

Noisy Neighbor Use-Case

The “noisy neighbor” was the first use-case that the Neutron QoS team sought to address when designing the Neutron QoS extension. The resulting QoS rule was named the “QosBandwidthLimitRule” and it accepts two non-negative integers: max-kbps is the bandwidth limit measured in kilobits per second, and max-burst-kbps is the burst buffer measured in kilobits. For the best results, it is recommended that max-burst-kbps is set to at least the maximum transmission unit (MTU); for Ethernet this is 1500 bytes, or 12 kilobits.

The “QosBandwidthLimitRule” polices traffic egressing from a virtual machine. When the rule is applied to all virtual machines on the network, it prevents any individual virtual machine from dominating the available bandwidth. The “QosBandwidthLimitRule” has been implemented in the Neutron Open vSwitch, Linux bridge and single root input/output virtualization (SR-IOV) drivers. Any out-of-tree drivers will have to implement the backend for this QoS rule themselves in order to provide the driver-specific configurations.
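To make the two parameters more concrete, here is a minimal sketch of a token-bucket policer in Python. It is only an illustration of how a rate and a burst buffer interact, not the mechanism the Neutron drivers actually use; the class name `TokenBucketPolicer` and its methods are hypothetical, and one kilobit is assumed to be 1000 bits.

```python
from dataclasses import dataclass


@dataclass
class TokenBucketPolicer:
    """Toy policer (hypothetical, not Neutron code): drops packets over the limit."""

    max_kbps: int          # sustained rate in kilobits per second
    max_burst_kbits: int   # bucket depth (burst buffer) in kilobits

    def __post_init__(self):
        if self.max_kbps < 0 or self.max_burst_kbits < 0:
            raise ValueError("rate and burst must be non-negative")
        self._capacity_bits = self.max_burst_kbits * 1000
        self._tokens = float(self._capacity_bits)  # the bucket starts full
        self._last = 0.0                           # time of the previous packet

    def allow(self, now: float, packet_bytes: int) -> bool:
        """Return True if the packet may pass at time `now` (seconds)."""
        # Refill the bucket for the elapsed time, capped at the bucket depth.
        elapsed = now - self._last
        self._last = now
        refill = elapsed * self.max_kbps * 1000
        self._tokens = min(self._capacity_bits, self._tokens + refill)

        # A packet passes only if the bucket holds enough bits for all of it.
        bits = packet_bytes * 8
        if bits <= self._tokens:
            self._tokens -= bits
            return True
        return False


# A burst buffer of exactly one Ethernet MTU (1500 bytes = 12 kilobits).
policer = TokenBucketPolicer(max_kbps=1000, max_burst_kbits=12)
first = policer.allow(now=0.0, packet_bytes=1500)    # bucket is full
second = policer.allow(now=0.0, packet_bytes=1500)   # bucket is empty
third = policer.allow(now=1.0, packet_bytes=1500)    # bucket has refilled

# A burst buffer smaller than the MTU can never pass a full-size packet.
too_small = TokenBucketPolicer(max_kbps=1000, max_burst_kbits=8)
never = too_small.allow(now=10.0, packet_bytes=1500)
```

In this toy model the last case shows why the burst value should be at least the MTU: with a smaller bucket, a full-size frame can never accumulate enough tokens to pass, no matter how long the sender waits.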

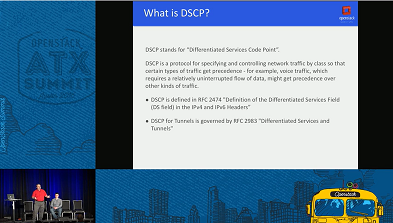

The most recent addition to the QoS rules is the “QosDscpMarkingRule”, which was merged in the Newton cycle. This rule marks the Differentiated Services Code Point (DSCP) value in the “type of service” field of the IPv4 header (RFC 2474) and the “traffic class” field of the IPv6 header on all traffic leaving a virtual machine that the rule is applied to. DSCP is a 6-bit field with 21 valid values that denote the drop priority of a packet as it crosses networks should it meet congestion. It can also be used by firewalls to match valid or invalid traffic against their access control lists. A detailed talk on the features, applications and implementation of this rule can be found in this presentation from the 2016 OpenStack Austin Summit.
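As a rough illustration of what DSCP marking means at the bit level, the Python sketch below writes a DSCP value into the upper six bits of the one-byte “type of service” / “traffic class” field and leaves the lower two bits untouched. The helper names are hypothetical, and the list of code points is an assumption: it is built from the standard class selector, assured forwarding and expedited forwarding values, which happen to add up to 21.

```python
# Standard DSCP code points (assumed to be the 21 values mentioned above):
# class selectors CS0-CS7, assured forwarding AF11-AF43, expedited forwarding.
CLASS_SELECTORS = [8 * n for n in range(8)]                    # 0, 8, ..., 56
ASSURED_FORWARDING = [8 * cls + 2 * drop                       # AFxy = 8x + 2y
                      for cls in range(1, 5)
                      for drop in range(1, 4)]                 # 10, 12, ..., 38
EXPEDITED_FORWARDING = [46]
STANDARD_DSCP = sorted(CLASS_SELECTORS + ASSURED_FORWARDING + EXPEDITED_FORWARDING)


def set_dscp(tos_byte: int, dscp: int) -> int:
    """Return the ToS / traffic class byte with its upper six bits set to dscp."""
    if not 0 <= tos_byte <= 0xFF:
        raise ValueError("tos_byte must fit in one byte")
    if dscp not in STANDARD_DSCP:
        raise ValueError(f"{dscp} is not one of the standard code points")
    # DSCP occupies bits 7..2; the low two bits are preserved as they were.
    return (dscp << 2) | (tos_byte & 0b11)


def get_dscp(tos_byte: int) -> int:
    """Extract the 6-bit DSCP value from the ToS / traffic class byte."""
    return (tos_byte & 0xFF) >> 2


marked = set_dscp(0b00000001, 46)   # mark with expedited forwarding (46)
```

Here `marked` is `0b10111001`: the six DSCP bits carry 46, and the original low two bits are unchanged.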

At present, only one of each of the two rules can be used on a virtual machine. The rules are added to a QoS Policy and bound to a Neutron port or network. The QoS Policy applied to the port takes priority over the Policy applied to the network. There is work in Neutron to develop a “Neutron Common Classifier” which would allow features to classify traffic in a uniform way. This feature could allow more than one of each type of rule to be applied if the rules were to target specific traffic from a port in the future.
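The binding rules in the paragraph above can be sketched as a small Python model. This is a simplified illustration, not Neutron's data model: the `QosPolicy` class, the rule type strings and the `effective_policy` helper are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class QosPolicy:
    """Hypothetical stand-in for a QoS policy: at most one rule per rule type."""

    name: str
    rules: Dict[str, dict] = field(default_factory=dict)  # rule type -> parameters

    def add_rule(self, rule_type: str, **params) -> None:
        # Only one rule of each type may be present in a policy.
        if rule_type in self.rules:
            raise ValueError(f"policy {self.name!r} already has a {rule_type} rule")
        self.rules[rule_type] = params


def effective_policy(port_policy: Optional[QosPolicy],
                     network_policy: Optional[QosPolicy]) -> Optional[QosPolicy]:
    """A policy bound to the port takes priority over the network's policy."""
    return port_policy if port_policy is not None else network_policy


network_default = QosPolicy("network-default")
network_default.add_rule("bandwidth_limit", max_kbps=3000, max_burst_kbps=300)

port_override = QosPolicy("port-override")
port_override.add_rule("bandwidth_limit", max_kbps=10000, max_burst_kbps=1000)
port_override.add_rule("dscp_marking", dscp_mark=26)

# A port with its own policy uses it; a port without one inherits the network's.
with_override = effective_policy(port_override, network_default)
inherited = effective_policy(None, network_default)
```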

New QoS Rules in Development

After the OpenStack Austin Summit in April of 2016, the QoS community listed a few new QoS rules it wishes to introduce into Neutron over the Newton cycle. The “QosIngressBandwidthLimitRule” addresses the same “noisy neighbor” scenario as described above. However, this rule polices traffic as it enters a virtual machine rather than as it leaves. In this scenario, the “noisy neighbor” isn’t generating the traffic that is congesting the network, but receiving it. This rule can be implemented by extending the original “QosBandwidthLimitRule” to include a directionality field.

The other QoS rule in development is the “QosEgressBandwidthMinimumRule”. This rule takes a different approach to the “noisy neighbor” problem: rather than policing excess traffic and effectively partitioning the bandwidth between your virtual machines, it seeks to guarantee that every virtual machine can use at least a minimum amount of bandwidth before any policing begins. This allows more active virtual machines to spike due to an increase in workload, so long as they don’t cause other virtual machines to drop below their minimum bandwidth guarantee.
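The idea of a minimum guarantee can be illustrated with a short Python sketch. It is purely conceptual and makes no claim about how the rule is or will be implemented in Neutron: the function name `allocate` is hypothetical, and sharing the leftover capacity equally among virtual machines that still want more is an assumption made for the example.

```python
from typing import Dict


def allocate(capacity_kbps: float,
             demands: Dict[str, float],
             minimums: Dict[str, float]) -> Dict[str, float]:
    """Give each VM its guaranteed minimum first, then share what is left."""
    # Step 1: every VM gets what it asks for, up to its guaranteed minimum.
    alloc = {vm: min(demands[vm], minimums[vm]) for vm in demands}
    spare = capacity_kbps - sum(alloc.values())
    if spare < 0:
        raise ValueError("the guaranteed minimums oversubscribe the link")

    # Step 2: split the spare capacity equally among VMs that want more,
    # repeating until the spare capacity or the unmet demand runs out.
    hungry = {vm for vm in demands if demands[vm] > alloc[vm]}
    while spare > 1e-9 and hungry:
        share = spare / len(hungry)
        for vm in sorted(hungry):
            extra = min(share, demands[vm] - alloc[vm])
            alloc[vm] += extra
            spare -= extra
        hungry = {vm for vm in hungry if demands[vm] - alloc[vm] > 1e-9}
    return alloc


# VM "a" spikes, VM "b" has a modest demand; both are guaranteed 200 kbps.
result = allocate(
    capacity_kbps=1000,
    demands={"a": 900, "b": 300},
    minimums={"a": 200, "b": 200},
)
```

In this example the busy virtual machine is allowed to spike to 700 kbps, but only after the quieter one has received everything it asked for, which is more than its 200 kbps guarantee.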

With the introduction of these new rules and the two currently implemented, cloud administrators are given greater control of the bandwidth and traffic prioritization of their clouds, allowing them to provision the networks to best suit their (and their customers’) requirements.