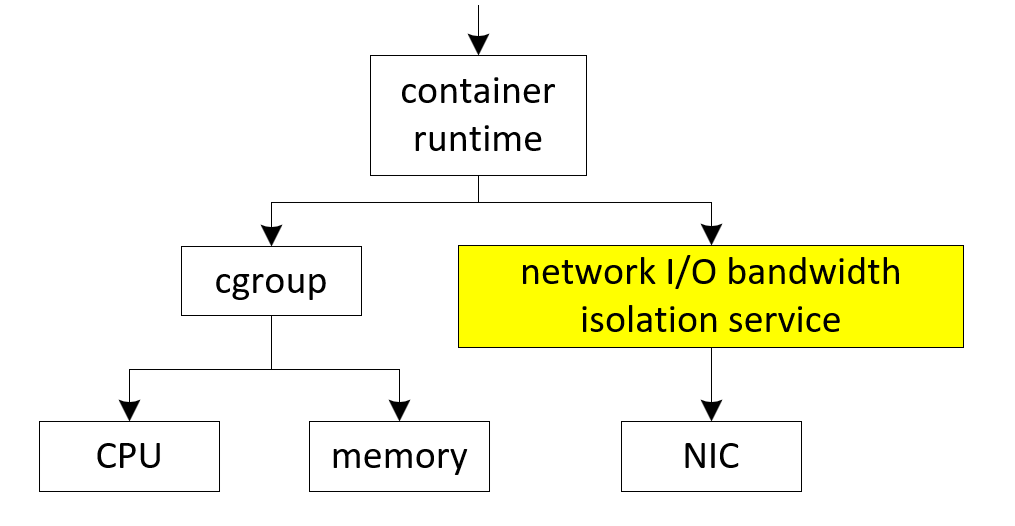

Figure 1 illustrates the container runtime stack with the network I/O bandwidth isolation service. Unlike resources such as CPU and memory, which are isolated via cgroups, network I/O isolation is controlled by a dedicated network I/O service. The isolation logic is implemented through a plugin mechanism so that different types of network interface cards (NICs) can be supported.

Using the native resource quality of service (QoS) definition from Kubernetes (k8s) [1], we divide workloads into three classes, according to their requested and limited network I/O bandwidth:

- Guaranteed (GA): The requested bandwidth of Guaranteed workloads should be guaranteed when running with other workloads.

- Burstable (BT): Burstable workloads have a lower-bound resource guarantee based on their request but cannot exceed their limit when one is set.

- Best effort (BE): Best effort workloads are the lowest priority and can use resources that aren’t specifically assigned to workloads in other QoS classes.
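As a rough illustration, a workload's class can be derived from its bandwidth request and limit in the same way Kubernetes derives pod QoS from CPU and memory settings. The sketch below assumes that rule carries over unchanged to network bandwidth, including the Kubernetes convention that a missing request defaults to the limit. The function name `qos_class` and the Mbit/s parameters are hypothetical and are not part of the service's API.

```python
from typing import Optional


def qos_class(request_mbps: Optional[float], limit_mbps: Optional[float]) -> str:
    """Map a bandwidth request/limit pair to "GA", "BT", or "BE" (assumed rule)."""
    # Neither value set: the workload only gets leftover bandwidth.
    if request_mbps is None and limit_mbps is None:
        return "BE"
    # Assumption borrowed from Kubernetes: a missing request defaults to the limit.
    if request_mbps is None:
        request_mbps = limit_mbps
    # Request equal to limit: the full amount is reserved for the workload.
    if request_mbps == limit_mbps:
        return "GA"
    # Anything else has a lower-bound guarantee and, optionally, a cap.
    return "BT"
```

For example, `qos_class(50, 100)` yields `"BT"`, while `qos_class(100, 100)` yields `"GA"`.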

We propose a network I/O bandwidth isolation service in which users only need to specify each workload’s requested network I/O resource and its QoS class; the service then isolates network bandwidth across all workloads. The service sets each workload’s network I/O resource boundary and collects each workload’s real-time traffic. Moreover, Application Device Queues (ADQ) technology (see ADQ NIC I/O isolation solution) can reduce I/O latency and improve network performance by allocating dedicated hardware traffic classes (TCs) to workload QoS classes.

This article introduces the design of the network I/O bandwidth isolation service. We provide two plugins that implement the service: a software-based generic plugin and a hardware-backed ADQ-enabled plugin.

Architecture

The network I/O service runs as a server and receives requests from clients through an RPC framework such as gRPC. The service plugin handles all requests from the upper layer. We currently implement two plugins for controlling application bandwidth, chosen according to whether the NIC supports ADQ technology: a generic plugin and an ADQ plugin. The plugin framework is extensible, so users can also develop their own plugins on top of it. Seven functional interfaces are currently defined: GetServiceInfo, RegisterApp, UnRegisterApp, SetAppLimit, GetAppLimit, Subscribe, and Configure. Each plugin implements its own handlers for these interfaces; here we mainly introduce the implementations in the generic plugin and the ADQ plugin. In both plugins, the GetServiceInfo interface returns a response containing the maximum network I/O bandwidth and information about the NICs, such as the maximum number of queues. The I/O service monitors traffic, and the Subscribe interface returns the real-time traffic.

As defined earlier, each workload falls into one of three classes (GA, BT, or BE) according to its network I/O QoS. A plugin may support bandwidth control per application, per class, or both. In response to the Configure interface, both plugins return a groupSettable parameter that indicates whether the plugin supports class-level limits.
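To make the plugin contract more concrete, here is a minimal sketch of how the seven interfaces could be expressed as an abstract base class, together with a toy in-memory plugin. Only the interface names and the groupSettable flag come from the text; the Python method names, argument types, return shapes, and the `InMemoryPlugin` class are assumptions made for illustration, and the real service exposes these operations over gRPC rather than as direct method calls.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterator


class NetIOPlugin(ABC):
    """Hypothetical plugin contract; one method per functional interface."""

    @abstractmethod
    def get_service_info(self) -> dict: ...

    @abstractmethod
    def configure(self, class_limits_mbps: Dict[str, float]) -> dict: ...

    @abstractmethod
    def register_app(self, app_id: str, qos: str) -> None: ...

    @abstractmethod
    def unregister_app(self, app_id: str) -> None: ...

    @abstractmethod
    def set_app_limit(self, app_id: str, mbps: float) -> None: ...

    @abstractmethod
    def get_app_limit(self, app_id: str) -> float: ...

    @abstractmethod
    def subscribe(self) -> Iterator[Dict[str, float]]: ...


class InMemoryPlugin(NetIOPlugin):
    """Toy plugin that only records state; it never touches a NIC."""

    def __init__(self, max_mbps: float, max_queues: int) -> None:
        self.max_mbps = max_mbps
        self.max_queues = max_queues
        self.apps: Dict[str, str] = {}
        self.limits: Dict[str, float] = {}
        self.class_limits: Dict[str, float] = {}

    def get_service_info(self) -> dict:
        return {"max_bandwidth_mbps": self.max_mbps, "max_queues": self.max_queues}

    def configure(self, class_limits_mbps: Dict[str, float]) -> dict:
        self.class_limits.update(class_limits_mbps)
        # Report that this plugin accepts class-level limits.
        return {"groupSettable": True}

    def register_app(self, app_id: str, qos: str) -> None:
        self.apps[app_id] = qos

    def unregister_app(self, app_id: str) -> None:
        self.apps.pop(app_id, None)
        self.limits.pop(app_id, None)

    def set_app_limit(self, app_id: str, mbps: float) -> None:
        if app_id not in self.apps:
            raise KeyError(app_id)
        self.limits[app_id] = mbps

    def get_app_limit(self, app_id: str) -> float:
        return self.limits[app_id]

    def subscribe(self) -> Iterator[Dict[str, float]]:
        # A real plugin would stream measured traffic; this one reports zeros.
        yield {app_id: 0.0 for app_id in self.apps}
```

A new plugin for another NIC type would subclass the same base class and replace the bookkeeping with calls into the relevant driver or traffic-control tooling.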

Generic NIC I/O isolation solution

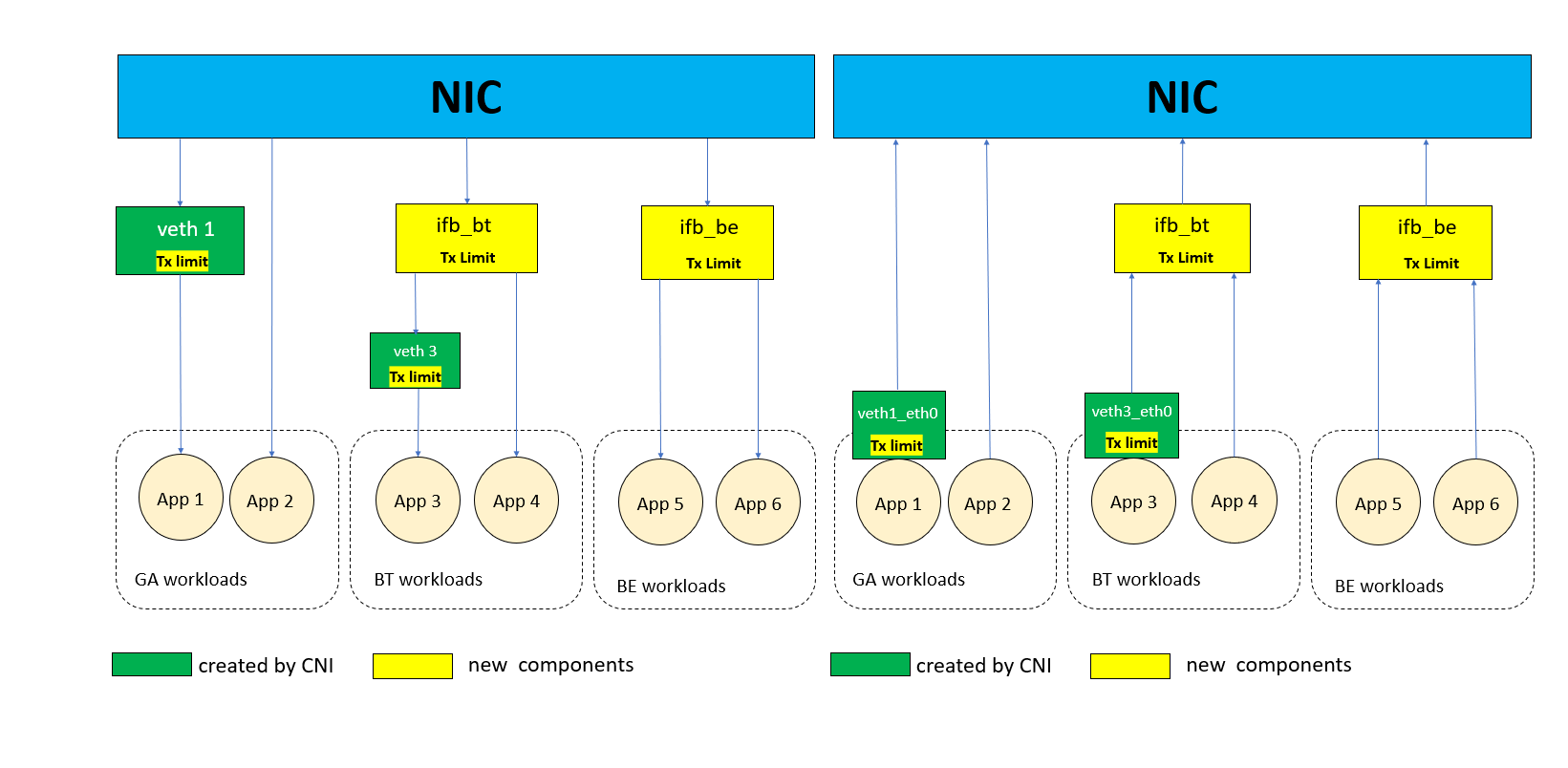

Most NIC models do not support ADQ. For these, we designed a generic I/O isolation service plugin. As previously mentioned, we divide workloads into three classes according to their network I/O QoS: GA (guaranteed), BT (burstable), and BE (best effort). For GA pods, we throttle bandwidth via traffic control on each pod individually. BE pods are gathered into a class by redirecting their traffic through an additional intermediate functional block (IFB) device, so all BE pods share the class-level bandwidth. BT pods support both class-level and pod-level limits.

We provide four APIs for the client: Configure, RegisterApp, UnRegisterApp, and SetLimit.

Configure:

Configure applies class-level settings for the BT and BE classes. Their bandwidth limits are enforced by configuring the qdisc of each class’s IFB device in both the ingress and egress directions.

RegisterApp:

RegisterApp redirects the traffic of BT and BE pods to the IFB device of their class, in both the ingress and egress directions. It adds a filter that links the pod’s network interface to the corresponding IFB device.
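The IFB redirection described here can be sketched with standard `ip` and `tc` commands. The helper below only builds the command strings and does not execute them. The device names, the catch-all `u32` match, and the function names are assumptions chosen for illustration, and only one direction (packets arriving on the pod-facing device) is covered; the plugin's actual rules are not given in this article.

```python
from typing import List


def create_ifb_cmds(ifb: str) -> List[str]:
    """Commands that create one IFB device for a class and bring it up."""
    return [
        f"ip link add {ifb} type ifb",
        f"ip link set dev {ifb} up",
    ]


def redirect_to_ifb_cmds(dev: str, ifb: str) -> List[str]:
    """Commands that send every IP packet arriving on `dev` through `ifb`."""
    return [
        # Attach the special ingress qdisc (handle ffff:) to the device.
        f"tc qdisc add dev {dev} handle ffff: ingress",
        # Match all IP packets and redirect them to the class IFB device.
        f"tc filter add dev {dev} parent ffff: protocol ip u32 match u32 0 0 "
        f"action mirred egress redirect dev {ifb}",
    ]


if __name__ == "__main__":
    # Hypothetical names: "ifb-be" for the BE class, "veth1234" for one pod.
    for cmd in create_ifb_cmds("ifb-be") + redirect_to_ifb_cmds("veth1234", "ifb-be"):
        print(cmd)
```

Because every pod of a class is redirected to the same IFB device, a single qdisc on that device is enough to cap the whole class.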

UnRegisterApp:

UnRegisterApp removes the ingress and egress qdisc rules from GA pods, which removes their bandwidth limits. For BT and BE pods, it also removes the corresponding qdisc rules and filters, detaching the pods from their classes.

SetLimit:

SetLimit sets the pod-level bandwidth limit for GA pods and the class-level bandwidth limit for BE pods; BT pods support both levels. For a pod-level limit, it adds a qdisc on the veth pair for the ingress direction and a qdisc on the veth inside the container network for the egress direction. For a class-level limit, it applies the given value to the corresponding IFB device.
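Both limit levels come down to putting a rate-limiting qdisc on a device: a pod's veth for a pod-level limit, or a class's IFB device for a class-level limit. The sketch below assumes a Token Bucket Filter (TBF) qdisc, which the ADQ plugin description names for pod veth interfaces; this article does not state which qdisc the generic plugin uses. The function name, device names, and the burst and latency defaults are illustrative only and would need tuning for high rates.

```python
def tbf_limit_cmd(dev: str, rate_mbit: int, burst_kbit: int = 32, latency_ms: int = 400) -> str:
    """Build a tc command that caps `dev` at `rate_mbit` using a TBF qdisc."""
    if rate_mbit <= 0:
        raise ValueError("rate_mbit must be positive")
    # "replace" creates the root qdisc if absent and updates it otherwise,
    # so the same command works for the first limit and for later changes.
    return (
        f"tc qdisc replace dev {dev} root tbf "
        f"rate {rate_mbit}mbit burst {burst_kbit}kbit latency {latency_ms}ms"
    )
```

With hypothetical device names, `tbf_limit_cmd("veth1234", 200)` would express a 200 Mbit/s pod-level limit and `tbf_limit_cmd("ifb-be", 500)` a 500 Mbit/s limit shared by the BE class.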

ADQ NIC I/O isolation solution

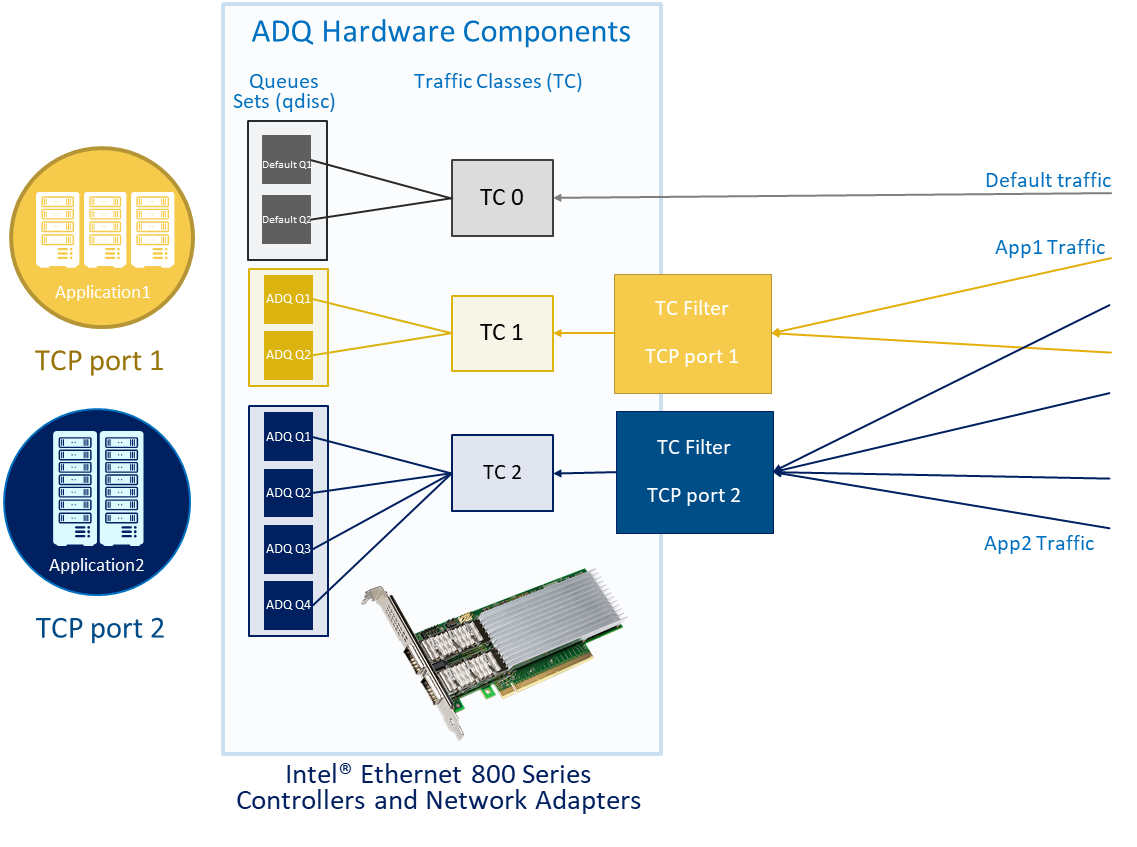

ADQ-enabled plugin implementation

Intel® Ethernet 800 Series Network Adapters feature Application Device Queues (ADQ) [2], a technology designed to prioritize application traffic to deliver the performance required for high-priority, network-intensive workloads. By performing application-specific queuing and steering, ADQ can improve response time predictability, reduce latency, and improve throughput for critical applications.

In the context of network I/O resource isolation, ADQ helps to ensure that critical workloads receive high performance and guaranteed network I/O resources. By preventing other traffic from contending for resources with a chosen workload’s traffic, the performance of critical workloads becomes more predictable and less prone to jitter. Additionally, workload-specific outgoing network bandwidth can be rate-limited with hardware acceleration, which can be used to divide or prioritize network bandwidth for specific workloads.

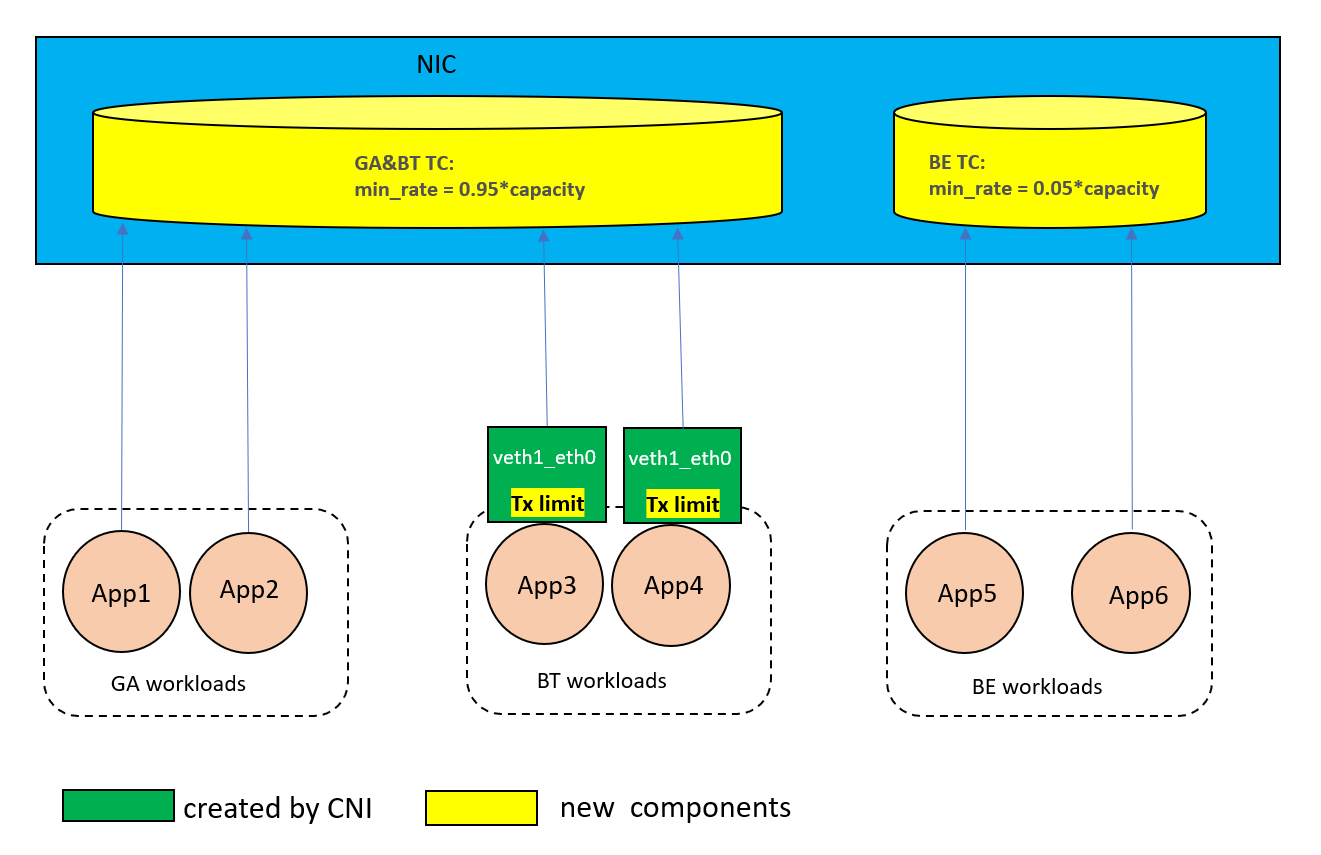

The generic I/O isolation solution can only set the maximum bandwidth for a single workload or a class of workloads. ADQ hardware additionally supports setting a minimum bandwidth for traffic classes (TCs) in the egress direction, which ensures that a TC keeps at least that bandwidth when competing with other TCs. As a result, class-level traffic control in the egress direction can be offloaded to hardware, while ingress-direction traffic control is still handled in software by adding a qdisc on the veth pair of each workload.

We divide the queues of a NIC into two traffic classes (TCs): a guaranteed and burstable (GA/BT) TC for all GA and BT workloads, and a best effort (BE) TC for all BE workloads. When the TCs are initialized, a large proportion of network I/O resources (95%, for example) is reserved for the GA/BT TC by setting the TC’s min_rate parameter. This means that when BE workloads contend with GA/BT workloads for network I/O resources, the GA/BT TC is guaranteed at least 95% of the NIC’s maximum speed. Each TC can borrow bandwidth from the other TC when the other has unused network I/O resources.
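The min_rate reservation is simple arithmetic, and it can be written out together with the kind of `tc` mqprio command commonly used to create hardware traffic classes. The sketch below only builds a command string. The queue counts, the device name, the choice to give the BE TC the remaining share as its own min_rate, and the function names are assumptions for illustration; the exact command issued by the ADQ plugin is not given in this article.

```python
from typing import Tuple


def split_min_rate(nic_mbit: int, ga_bt_percent: int) -> Tuple[int, int]:
    """Return (GA/BT min_rate, BE min_rate) in Mbit/s for a given NIC speed."""
    if not 0 <= ga_bt_percent <= 100:
        raise ValueError("ga_bt_percent must be between 0 and 100")
    ga_bt = nic_mbit * ga_bt_percent // 100
    # Assumption: the BE TC is given whatever share is left over.
    return ga_bt, nic_mbit - ga_bt


def mqprio_cmd(dev: str, ga_bt_queues: int, be_queues: int,
               nic_mbit: int, ga_bt_percent: int) -> str:
    """Build a tc command creating TC0 (GA/BT) and TC1 (BE) with min_rate set."""
    ga_bt, be = split_min_rate(nic_mbit, ga_bt_percent)
    return (
        f"tc qdisc add dev {dev} root mqprio num_tc 2 map 0 1 "
        # TC0 takes the first queues; TC1 starts right after them.
        f"queues {ga_bt_queues}@0 {be_queues}@{ga_bt_queues} "
        f"hw 1 mode channel shaper bw_rlimit min_rate {ga_bt}mbit {be}mbit"
    )
```

On a 25 Gbit/s NIC with a 95% reservation, `split_min_rate(25000, 95)` gives 23750 Mbit/s for the GA/BT TC and 1250 Mbit/s for the BE TC.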

The ADQ plugin supports the service’s gRPC dynamic configuration requests. It controls workloads at the class level using ADQ TCs in hardware rather than an IFB device. The ADQ plugin handles the client’s gRPC requests differently from the generic plugin in the following ways.

Configure:

There is no need to create IFB devices for the BE class. Instead, the plugin creates a GA/BT TC and a BE TC on the ADQ-enabled NIC and sets min_rate for both to provide class-level I/O bandwidth isolation.

RegisterApp:

According to the app’s type, the plugin adds the app’s IP address and port to the NIC’s TC filtering rules so that the app’s ingress and egress traffic is steered to the specified queue set.
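Steering an app's traffic to a hardware TC is typically expressed with `tc` flower filters. The helper below builds one ingress and one egress rule as strings and does not run them. It assumes TCP traffic, an existing `clsact` qdisc on the device (`tc qdisc add dev <dev> clsact`), and an mqprio priority map in which priority N selects TC N. The function name and all example values are hypothetical, and the plugin's real rules may differ.

```python
from typing import List


def adq_filter_cmds(dev: str, ip: str, port: int, tc_index: int) -> List[str]:
    """Build tc flower rules that bind an app's IP/port to hardware TC `tc_index`."""
    if not 0 < port < 65536:
        raise ValueError("port out of range")
    return [
        # Ingress: match traffic destined to the app and steer it in hardware.
        f"tc filter add dev {dev} protocol ip ingress prio 1 flower "
        f"dst_ip {ip}/32 ip_proto tcp dst_port {port} skip_sw hw_tc {tc_index}",
        # Egress: tag the app's outgoing packets with the priority of its TC.
        f"tc filter add dev {dev} protocol ip egress prio 1 flower "
        f"src_ip {ip}/32 ip_proto tcp src_port {port} "
        f"action skbedit priority {tc_index}",
    ]
```

For a BE app at a hypothetical address, `adq_filter_cmds("eth0", "10.0.0.5", 8080, 1)` would place its traffic in TC 1, while GA and BT apps would use index 0.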

UnRegisterApp:

The plugin removes the app’s TC filter rule from the NIC’s TC filter list and removes the Token Bucket Filter (TBF) qdisc from the pod’s veth pair interfaces.

SetLimit:

The SetLimit interface handles the ingress direction in the same way as the generic plugin. For the egress direction, ADQ’s TC does not currently support dynamically adjusting the bandwidth rate limit after TC initialization. Therefore, the ADQ plugin’s SetLimit interface currently only supports limiting the maximum bandwidth of each pod by adding a TBF qdisc on the pod’s veth pair. Each BT workload’s maximum egress bandwidth is set at the pod level via the SetLimit interface, so that it does not affect the I/O bandwidth of GA workloads.

ADQ performance data

We use a Netperf workload running TCP_RR to test class-level bandwidth throttling with the ADQ-disabled solution and the ADQ-enabled solution. With 50 threads of TCP requests running simultaneously, class-level throttling with the ADQ-enhanced solution increases throughput by nearly 110% while reducing average latency and P99 latency by nearly 50% and 80%, respectively. The standard deviation of latency drops to less than 1% compared to the non-ADQ solution [3]. As a result, the ADQ-enabled approach significantly improves workload throughput and predictability and significantly reduces latency.

The improvements in throughput, latency, and predictability also become more pronounced as the number of network I/O threads per workload increases. The ADQ-enhanced solution can therefore be expected to deliver larger gains in multi-workload cluster environments.

Conclusion and result

In this article, we introduced the network I/O bandwidth isolation service and the two plugins we implemented. The service supports both generic NICs and NICs enhanced with ADQ, which makes it possible to isolate network I/O resources between multiple workloads in a cloud-native environment. We divide workloads into three classes (GA, BT, and BE) and guarantee the network I/O bandwidth of workloads with different priorities based on their requested and limited network resources.

Here is an example of how it works in a real usage scenario: suppose several BT and BE workloads are running and the network I/O bandwidth of the host is fully occupied. If we add more BT workloads to the server at this point, the bandwidth of the running BE workloads is reduced so that it can be shared with the BT workloads. Alternatively, if we add a GA workload that consumes the remaining available bandwidth, the bandwidth usage of both BT and BE workloads is reduced. Users can therefore mark a workload as GA to guarantee its network resources with the highest priority.

References

[1] https://kubernetes.io/docs/concepts/workloads/pods/pod-qos/

[2] https://www.intel.com/content/www/us/en/architecture-and-technology/ethernet/adq-resource-center.html