Overview

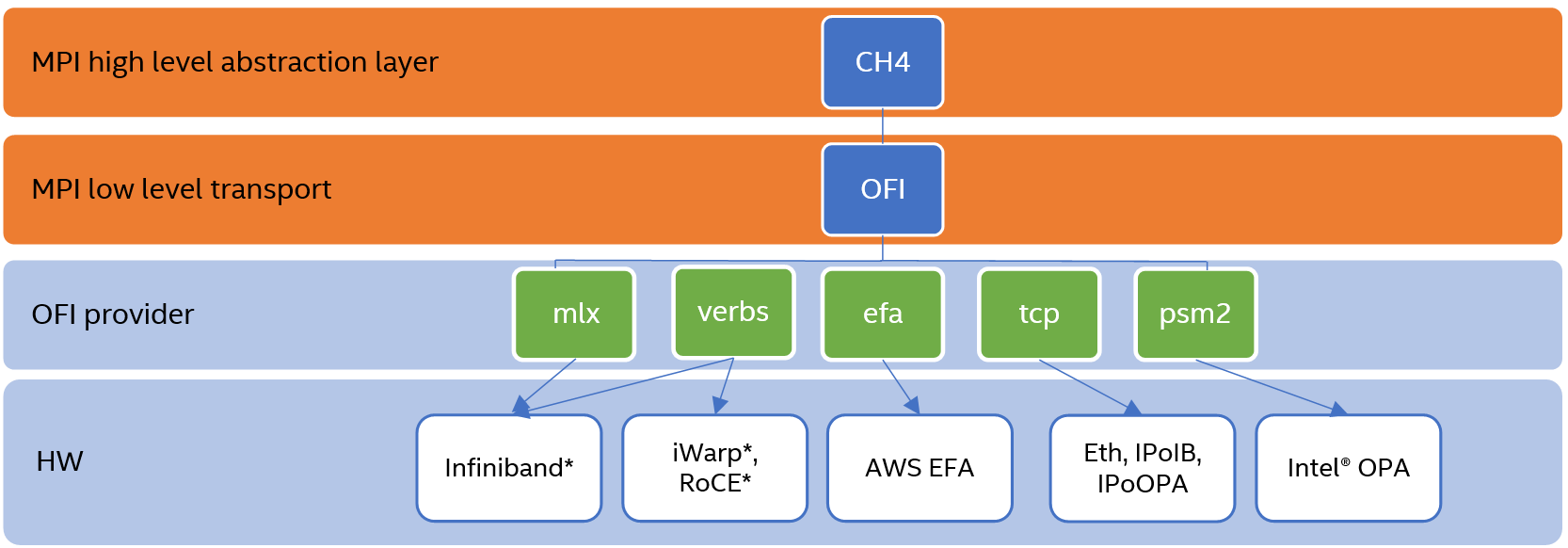

With the 2019 release, the Intel® MPI Library switched from the Open Fabrics Alliance* (OFA) framework to the Open Fabrics Interfaces* (OFI) framework and currently supports libfabric*. The DAPL, TMI, and OFA fabrics are deprecated. The Intel MPI Library package contains the libfabric library, which is used when the mpivars.sh/csh/bat script executes.

The following diagram shows the Intel MPI Library software stack:

What Is Libfabric?

Libfabric is a low-level communication abstraction for high-performance networks. It hides most transport and hardware implementation details from middleware and applications to provide high-performance portability between diverse fabrics. See the OFI web site for more details, including a project overview and detailed API documentation.

Using the Intel MPI Library Distribution of Libfabric

By default, mpivars.sh/csh/bat (or vars.sh/bat for oneAPI) for Linux* or Windows* sets the environment to the version of libfabric shipped with the Intel MPI Library. To disable this, set the I_MPI_OFI_LIBRARY_INTERNAL environment variable to 0 or pass -ofi_internal=0 to the script (the default is -ofi_internal=1):

source ./mpivars.sh -ofi_internal=1

I_MPI_DEBUG=4 mpirun -n 1 IMB-MPI1 barrier

Output:

[0] MPI startup(): libfabric version: 1.9.0a1-impi

[0] MPI startup(): libfabric provider: mlx

source ./mpivars.sh -ofi_internal=0

I_MPI_DEBUG=4 mpirun -n 1 IMB-MPI1 barrier

Output:

[0] MPI startup(): libfabric version: 1.10.0a1

[0] MPI startup(): libfabric provider: verbs;ofi_rxm

Note: You can use I_MPI_DEBUG=4 to display the libfabric version.
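
To check from a script which libfabric build was picked up, one option is to parse the startup line shown in the output above. The following is a minimal sketch: the helper name libfabric_version is hypothetical, and treating an -impi suffix as the marker of the bundled build is an assumption drawn only from the sample output in this section.

```shell
#!/bin/sh
# Hypothetical helper: extract the libfabric version from I_MPI_DEBUG=4 output on stdin.
libfabric_version() {
    sed -n 's/^.*libfabric version: *//p' | head -n 1
}

# Sample line copied from the output above. In practice, pipe in the output of:
#   I_MPI_DEBUG=4 mpirun -n 1 IMB-MPI1 barrier
sample='[0] MPI startup(): libfabric version: 1.9.0a1-impi'
version=$(printf '%s\n' "$sample" | libfabric_version)

# Assumption: the bundled build carries an "-impi" suffix, as in the sample output.
case "$version" in
    *-impi) origin="bundled with Intel MPI" ;;
    *)      origin="external" ;;
esac
echo "libfabric $version ($origin)"
```

If the suffix convention differs in your release, compare the reported version against the one installed on the system instead.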

Common OFI Controls

Choosing a Provider

To select the OFI provider from the libfabric library, use the FI_PROVIDER environment variable, which defines the name of the OFI provider to load:

export FI_PROVIDER=<name>

Alternatively, you can select the OFI provider with the Intel MPI Library environment variable:

export I_MPI_OFI_PROVIDER=<name>

Note: The I_MPI_OFI_PROVIDER is preferred over the FI_PROVIDER.
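
As an illustration, the following sketch selects a provider and then runs the same benchmark used earlier with I_MPI_DEBUG=4, so that the startup output reports which provider was loaded. The value tcp is only an example, and the check for mpirun is an added convenience, not something the library requires.

```shell
#!/bin/sh
# Select the OFI provider through the Intel MPI variable, which is preferred over FI_PROVIDER.
# "tcp" is only an example; substitute a provider available on your system.
export I_MPI_OFI_PROVIDER=tcp

# I_MPI_DEBUG=4 prints the libfabric version and provider at startup.
if command -v mpirun >/dev/null 2>&1; then
    I_MPI_DEBUG=4 mpirun -n 1 IMB-MPI1 barrier
else
    echo "mpirun not found; source the Intel MPI environment script first" >&2
fi
```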

Runtime Parameters

The environment variable FI_UNIVERSE_SIZE=<number> for RxM, MLX, and EFA providers defines the maximum number of processes used by a distributed OFI application. The provider uses this to optimize resource allocations.

Logging Interface

FI_LOG_LEVEL=<level> controls the amount of logging data that is output. The following log levels are defined:

- Warn: Warn is the least verbose setting and is intended for reporting errors or warnings.

- Trace: Trace is more verbose and is meant to include non-detailed output helpful for tracing program execution.

- Info: Info is high traffic and meant for detailed output.

- Debug: Debug is high traffic and is likely to impact application performance. Debug output is only available if the library has been compiled with debugging enabled.

See fi_fabric(7) for more details.
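
A small wrapper can guard against misspelled levels. This is a sketch only: the function name set_fi_log_level is hypothetical, and it assumes the lowercase spellings of the four levels listed above are accepted.

```shell
#!/bin/sh
# Hypothetical helper: export FI_LOG_LEVEL only for the four levels listed above.
set_fi_log_level() {
    case "$1" in
        warn|trace|info|debug) export FI_LOG_LEVEL="$1" ;;
        *) echo "unknown log level: $1" >&2; return 1 ;;
    esac
}

# Start with the least verbose level; raise it only while diagnosing a problem.
set_fi_log_level warn
echo "FI_LOG_LEVEL=$FI_LOG_LEVEL"
```

Remember that debug output appears only if the library was compiled with debugging enabled.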

Linux* OS

OFI Providers Supported by Intel MPI Library

This section describes OFI providers supported by the Intel MPI Library. Every provider has a description and parameters relevant to the Intel MPI Library, and optional notes in the form of prerequisites or limitations.

MLX

Description

The MLX provider was added in the 2019 Update 5 release as a binary in the internal libfabric distribution. The provider runs over UCX, which is currently available for Mellanox InfiniBand* hardware.

For more information on using MLX with InfiniBand, see Improve Performance and Stability with Intel MPI Library on InfiniBand*.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_MLX_INJECT_LIMIT | Sets the maximum message size for tinject/inject calls. |
| FI_MLX_ENABLE_SPAWN | Enables dynamic processes support. |
| FI_MLX_TLS | Specifies the transports available for the MLX provider. Note: The transport is chosen optimally depending on the number of ranks and other parameters. For the transports you can set in FI_MLX_TLS, see Improve Performance and Stability with Intel MPI Library on InfiniBand*. |

Prerequisites

UCX version 1.4 or above has to be installed (available as a part of MOFED or standalone package).

Limitations

The MLX provider does not support the MPI connect/accept primitives and multi-EP mode of the Intel MPI Library. The minimum UCX API is version 1.4.
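
One way to check the UCX prerequisite before launching a job is a simple version comparison. The sketch below is illustrative: version_ge is a hypothetical helper, the value of ucx_version is a placeholder to be replaced with the version installed on your system (for example, as reported by ucx_info -v), and the comparison relies on sort -V from GNU coreutils.

```shell
#!/bin/sh
# Hypothetical helper: succeed if version $1 is at least version $2.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Placeholder value; replace with the UCX version installed on your system.
ucx_version="1.8.0"

if version_ge "$ucx_version" "1.4"; then
    echo "UCX $ucx_version satisfies the MLX prerequisite"
else
    echo "UCX $ucx_version is older than 1.4" >&2
fi
```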

PSM2

Description

The PSM2 provider runs over the PSM 2.x interface supported by the Cornelis* Omni-Path Fabric.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_PSM2_INJECT_SIZE | Defines the maximum message size allowed for fi_inject and fi_tinject calls. |
| FI_PSM2_LAZY_CONN | Controls the connection mode established between PSM2 endpoints that OFI endpoints are built on top of. Note: Lazy connection mode may reduce the startup time on large systems at the expense of higher data path overhead. |

Prerequisites

The PSM2 provider requires an additional library: libpsm2, which is part of the Cornelis OPA-IFS software stack.

See the fi_psm2(7) man page for more details.

TCP

Description

The TCP provider is a general-purpose provider for the Intel MPI Library that can be used on any system that supports TCP sockets to implement the libfabric API. The provider lets you run Intel MPI Library applications over regular Ethernet, in a cloud environment that has no specific fast interconnect (e.g., GCP, Ethernet-based Azure*, and AWS* instances), or using IPoIB.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_TCP_IFACE | Specifies a particular network interface. |
| FI_TCP_PORT_LOW_RANGE | Sets the range of ports to be used by the TCP provider for its passive endpoint creation. This is useful where only a range of ports is allowed by the firewall for TCP connections. |

Prerequisites

The TCP provider has no prerequisites.

See the fi_tcp(7) man page for more details.
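
For example, a firewall-restricted setup might be configured as sketched below. The interface name and port numbers are hypothetical. FI_TCP_PORT_HIGH_RANGE is not listed in the table above; it is the companion upper-bound variable described in fi_tcp(7).

```shell
#!/bin/sh
# Hypothetical values: pick the interface and port window that match your system and firewall.
export I_MPI_OFI_PROVIDER=tcp
export FI_TCP_IFACE=eth0
export FI_TCP_PORT_LOW_RANGE=50000
# Upper bound of the port window, as documented in fi_tcp(7); not listed in the table above.
export FI_TCP_PORT_HIGH_RANGE=50100

echo "TCP provider on $FI_TCP_IFACE, ports $FI_TCP_PORT_LOW_RANGE-$FI_TCP_PORT_HIGH_RANGE"
```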

EFA

Description

The EFA provider enables applications using OFI to be run on AWS EC2 instances over AWS EFA (Elastic Fabric Adapter) hardware. For more information, see the Elastic Fabric Adapter page.

The EFA provides reliable and unreliable datagram send/receive with direct hardware access from userspace (OS bypass). The provider supports the FI_EP_RDM endpoint type on a new Scalable (unordered) Reliable Datagram protocol (SRD) for the Intel MPI Library. SRD provides support for reliable datagrams and more complete error handling than typically seen with other Reliable Datagram (RD) implementations. The EFA provider performs segmentation and reassembly of out-of-order packets to provide send-after-send ordering guarantees to applications via its FI_EP_RDM endpoint.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_EFA_EFA_CQ_READ_SIZE | Sets the number of EFA completion entries to read in one iteration of the progress engine loop. (The default is 50.) |
| FI_EFA_MR_CACHE_ENABLE | Uses the memory registration cache and in-line registration instead of a bounce buffer for IOVs larger than max_memcpy_size. (The default is true.) When disabled, only a bounce buffer is used. |
| FI_EFA_MR_CACHE_MERGE_REGIONS | Merges overlapping and adjacent memory registration regions. (The default is true.) |
| FI_EFA_MR_MAX_CACHED_COUNT | Sets the maximum number of memory registrations that can be cached at any time. |
| FI_EFA_RECVWIN_SIZE | Defines the size of the sliding receive window. (The default is 16384.) Note: May affect performance in case of a large number of send operations without waiting for send completion. |
| FI_EFA_TX_MIN_CREDITS | Defines the minimum number of credits a sender requests from a receiver. (The default is 32.) |

Prerequisites

EFA software components must be installed. For details, see the AWS EC2 User Guide.

Limitations

Set FI_UNIVERSE_SIZE to the number of expected ranks in case of runs larger than 8192 ranks (or 227 C5n instances).

See the fi_efa(7) man page for more details.
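
The limitation above can be handled in a launch script, as in this sketch. The node and rank counts are hypothetical placeholders; only the 8192-rank threshold comes from the text.

```shell
#!/bin/sh
# Hypothetical job geometry; replace with your own values.
nodes=300
ranks_per_node=36
total_ranks=$((nodes * ranks_per_node))

# Per the limitation above, runs larger than 8192 ranks need FI_UNIVERSE_SIZE set explicitly.
if [ "$total_ranks" -gt 8192 ]; then
    export FI_UNIVERSE_SIZE="$total_ranks"
fi
echo "total ranks: $total_ranks, FI_UNIVERSE_SIZE=${FI_UNIVERSE_SIZE:-unset}"
```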

Verbs

Description

The Verbs provider enables applications using OFI to be run over any verbs hardware (InfiniBand*, iWarp*/RoCE*). It uses the Linux Verbs API for network transport and provides a translation of OFI calls to appropriate verbs API calls. The verbs provider uses the RxM utility provider to implement some specific environments.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_VERBS_INLINE_SIZE | Defines the maximum message size allowed for fi_inject and fi_tinject calls. |
| FI_VERBS_IFACE | Defines the prefix or the full name of the network interface associated with the verbs device. The default value is ib. |
| FI_VERBS_MR_CACHE_ENABLE | Enables Memory Registration caching. The default value is 0. Note: Set the value to 1 to increase bandwidth for medium and large messages. |
| FI_MR_CACHE_MONITOR | Defines a default memory registration monitor. The monitor checks for virtual to physical memory address changes. Options are: |

Prerequisites

The Verbs provider requires the following additional libraries to be installed:

- IPoIB has to be available and properly set up.

- ibacm via librdmacm (version 1.0.16 or newer) is required for connection management.

Note: If you are compiling libfabric from source and want to enable Verbs support, it is essential to have the matching header files for the above two libraries. If the libraries and header files are not in the default paths, specify them in the CFLAGS, LDFLAGS, and LD_LIBRARY_PATH environment variables.

See the fi_verbs(7) man page for more details.

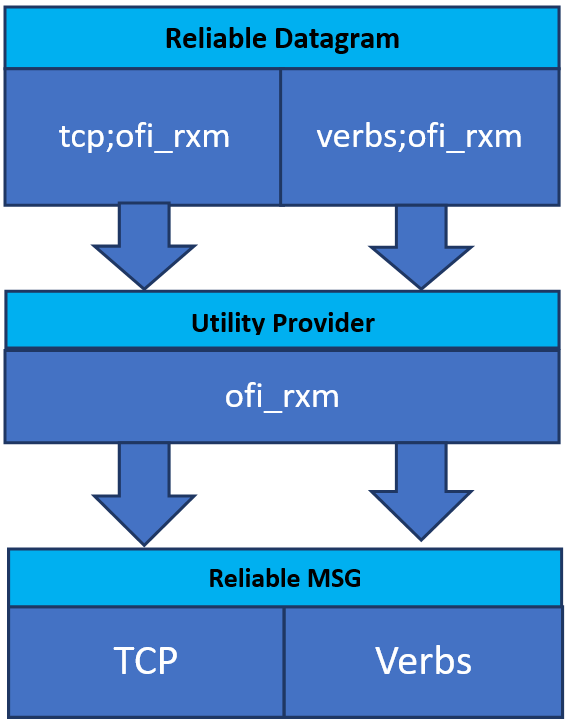

RxM

Description

The RxM provider (ofi_rxm) is a utility provider layered over the Verbs or TCP core providers. Utility providers are often used to support a specific endpoint type over a simpler endpoint type. For example, the RxM utility provider supports reliable datagram (RDM) endpoints over MSG endpoints.

Note: This utility provider is enabled automatically for a core provider that does not support the feature set requested by an application.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_OFI_RXM_BUFFER_SIZE | Defines the transmit buffer size/inject size. Messages smaller than this size are transmitted via an eager protocol. Larger messages are transmitted via the SAR or rendezvous protocol. Transmitted data is copied up to the specified size. By default, the size is 16 KB. |
| FI_OFI_RXM_USE_SRX | Controls the RxM receive path. If the variable is set to 1, RxM uses the Shared Receive Context of the core provider. The default value is 0. Note: This mode improves memory consumption, but may increase small message latency as a side effect. |
| FI_OFI_RXM_SAR_LIMIT | Sets the size limit for the RxM SAR (Segmentation and Reassembly) protocol. Messages larger than this limit are transmitted via the rendezvous protocol. The default value is 256 KB. Note: RxM SAR is not supported by iWarp. |
| FI_OFI_RXM_ENABLE_DYN_RBUF | Enables support for dynamic receive buffering if the message endpoint provider offers it. This allows received messages to be placed directly into application buffers, bypassing RxM bounce buffers. The default value is 0. |
| FI_OFI_RXM_ENABLE_DIRECT_SEND | Enables support for passing application buffers directly to the core provider. The default value is 0. |

Prerequisites

The RxM provider doesn't require any external prerequisites, but it requires a reliable message provider, like TCP or Verbs.

See the fi_rxm(7) man page for more details.
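
The interaction between FI_OFI_RXM_BUFFER_SIZE and FI_OFI_RXM_SAR_LIMIT can be pictured with a simplified model. The sketch below is not the provider's code: rxm_protocol is a hypothetical helper, the thresholds are the defaults from the table above, and the handling of messages exactly at a threshold is an assumption.

```shell
#!/bin/sh
# Simplified model of the thresholds in the table above; not the provider's actual code.
buffer_size=16384    # default FI_OFI_RXM_BUFFER_SIZE (16 KB)
sar_limit=262144     # default FI_OFI_RXM_SAR_LIMIT (256 KB)

# Hypothetical helper: print the protocol this model predicts for a message of $1 bytes.
# Assumption: a message exactly at a threshold uses the smaller-size protocol.
rxm_protocol() {
    if [ "$1" -le "$buffer_size" ]; then
        echo eager
    elif [ "$1" -le "$sar_limit" ]; then
        echo sar
    else
        echo rendezvous
    fi
}

for size in 1024 65536 1048576; do
    echo "$size bytes -> $(rxm_protocol "$size")"
done
```

Raising the buffer size moves more messages onto the eager path at the cost of larger copies, which is the trade-off the table describes.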

Other

It is possible to build the PSM and GNI providers from the ofiwg/libfabric sources and use them with the Intel MPI Library.

To run the Intel MPI Library using Intel® True Scale, follow these steps:

- Download and configure libfabric sources as described in the section Building and Installing Libfabric from the Source. During the configuration phase, make sure that the PSM provider is enabled. You should see the following after configuration:

*** Built-in providers: psm

- Force the Intel MPI Library to use your own libfabric installation as described in the section Using Your Built Libfabric.

- For the 2019 Update 6 release, you must specify extra controls:

export FI_PSM_TAGGED_RMA=0

export MPIR_CVAR_CH4_OFI_ENABLE_DATA=0

- Run the Intel MPI Library as usual.

Building and Installing Libfabric from the Source

Distribution .tar packages are available from the GitHub* Releases tab. If you are building libfabric, follow these steps:

- Download the desired release package (for example, libfabric-1.9.0.tar.bz2).

- Decompress the .tar release package (tar xvf <package_name>).

- Change into the libfabric directory.

- Run autogen.sh

- Run configure

- Run make

- Run make install as super user or root to install.
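
The steps above can be sketched as a script. This is an illustration under stated assumptions: the run and build_libfabric helpers are hypothetical, the archive is assumed to unpack into a directory named after the package, and sudo stands in for "super user or root". With DRY_RUN=1 (the default here), the commands are only printed.

```shell
#!/bin/sh
# Sketch of the build steps above. With DRY_RUN=1 the commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}
package=libfabric-1.9.0.tar.bz2   # example package name from the steps above
srcdir=${package%.tar.bz2}        # assumption: the archive unpacks into libfabric-1.9.0

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Each step runs only if the previous one succeeded.
build_libfabric() {
    run tar xvf "$package" &&
    run cd "$srcdir" &&
    run ./autogen.sh &&
    run ./configure &&
    run make &&
    run sudo make install
}

build_libfabric
```

Set DRY_RUN=0 to execute the commands once the printed sequence looks right for your system.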

Configuration Options

The configure script has many built-in options (see ./configure --help). Some useful options are:

--prefix=<directory>

By default, make install places the files in the /usr tree. The --prefix option specifies that libfabric files should be installed in the tree specified by <directory>. The executables will be located at <directory>/bin.

--with-valgrind=<directory>

Specifies the directory where Valgrind* is installed. If Valgrind is found, then Valgrind annotations are enabled. This may incur a performance penalty.

--enable-debug

Enables debug code paths. This enables various extra checks and allows the highest-verbosity logging output, which is normally compiled out of production builds.

--enable-<provider>=[yes|no|auto|dl|<directory>]

Enables the provider named <provider>. Valid options are:

- auto: This is the default if the --enable-<provider> option is not specified. The provider will be enabled if all of its requirements are satisfied. If one of the requirements cannot be satisfied, the provider is disabled.

- yes: This is the default if the --enable-<provider> option is specified. The configure script will abort if the provider cannot be enabled (e.g., due to some of its requirements not being available).

- no: Disables the provider. This is synonymous with --disable-<provider>.

- dl: Enables the provider and builds it as a loadable library.

- <directory>: Enables the provider and uses the installation given in <directory>.

--disable-<provider>

Disables the provider named <provider>.

All providers are configured to use the dl option and will be built as a set of independent binaries with their own dependencies. Meanwhile, libfabric itself will have no external dependencies. Dynamically loadable providers can be easily overridden using the FI_PROVIDER_PATH variable.

Providers built in the regular way will be statically linked to the libfabric library itself. In this case, libfabric.so will have dependencies of all built-in providers.

Examples

Consider the following example:

$ ./configure --prefix=/opt/libfabric --disable-tcp && make -j 32 && make install

You should see the following after configuration is complete:

*** Built-in providers: rxd rxm verbs

*** DSO providers:

This tells libfabric to disable the TCP provider and install libfabric in the /opt/libfabric tree. All other providers will be enabled if possible, and all providers will be built into a single library.

Alternatively:

$ ./configure --prefix=/opt/libfabric --enable-debug --enable-tcp=dl && make -j 32 && make install

You should see the following after configuration is complete:

*** Built-in providers: rxd rxm verbs sockets

*** DSO providers: tcp

This tells libfabric to enable the TCP provider as a loadable library, enable all debug code paths, and install libfabric to the /opt/libfabric tree. All other providers will be enabled if possible.

Validate Installation

The fi_info utility can be used to validate the libfabric and provider installation, as well as provide details about provider support and available interfaces. See the fi_info man page for details on using the utility, which is installed as part of the libfabric package.

A more comprehensive test suite is available via the fabtests software package. Also, for validation purposes you can use fi_pingpong, a ping-pong test for transmitting data between two processes.
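
A quick check might look like the sketch below. The provider name tcp is only an example, and the PATH hint assumes the --prefix layout described under Configuration Options, where executables are placed in <directory>/bin.

```shell
#!/bin/sh
# List what libfabric reports for one provider; "tcp" is only an example.
provider=tcp
if command -v fi_info >/dev/null 2>&1; then
    fi_info -p "$provider"
else
    echo "fi_info not found; add <ofi-install-dir>/bin to PATH" >&2
fi

# fi_pingpong needs two processes: start the server first, then point the client at it.
#   server:  fi_pingpong -p tcp
#   client:  fi_pingpong -p tcp <server-address>
```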

Using Your Built Libfabric

To use your own libfabric build, point LD_LIBRARY_PATH to the directory that contains your library and FI_PROVIDER_PATH to the directory that contains the OFI providers built as dynamic libraries:

Syntax

export LD_LIBRARY_PATH=<ofi-install-dir>/lib:$LD_LIBRARY_PATH

export FI_PROVIDER_PATH=<ofi-install-dir>/lib/libfabric
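
As a concrete instance of the syntax above, the sketch below uses /opt/libfabric, the prefix from the configure examples, as a hypothetical install directory. The existence check and the handling of an empty LD_LIBRARY_PATH are additions for illustration.

```shell
#!/bin/sh
# Hypothetical install prefix; matches the --prefix used in the configure examples.
ofi_install_dir=/opt/libfabric

# Prepend the library directory; avoid a trailing colon if LD_LIBRARY_PATH was empty.
export LD_LIBRARY_PATH="$ofi_install_dir/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
export FI_PROVIDER_PATH="$ofi_install_dir/lib/libfabric"

# Warn early if the library is not where the variables point.
if [ ! -e "$ofi_install_dir/lib/libfabric.so" ]; then
    echo "warning: $ofi_install_dir/lib/libfabric.so not found" >&2
fi
```

Running a job with I_MPI_DEBUG=4 afterwards shows whether the expected libfabric version was loaded.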

Readme

For further information on building and installing libfabric, refer to the libfabric readme file.

Windows* OS

OFI Providers Supported by Intel MPI Library

This section describes OFI providers supported by the Intel MPI Library. Every provider has a description and parameters relevant to the Intel MPI Library, and optional notes in the form of prerequisites or limitations.

TCP

Description

The TCP provider is a general-purpose provider for the Intel MPI Library that can be used on any system that supports TCP sockets to implement the libfabric API. The provider lets you run Intel MPI Library applications over regular Ethernet, in a cloud environment that has no specific fast interconnect (e.g., GCP, Ethernet-based Azure*, and AWS* instances), or using IPoIB.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_TCP_IFACE | Specifies a particular network interface. |
| FI_TCP_PORT_LOW_RANGE | Sets the range of ports to be used by the TCP provider for its passive endpoint creation. This is useful where only a range of ports is allowed by the firewall for TCP connections. |

Prerequisites

The TCP provider has no prerequisites.

See the fi_tcp(7) man page for more details.

NETDIR

Description

The NETDIR provider runs over the NetworkDirect* interface on compatible hardware.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_NETDIR_MR_CACHE | Enables memory registration cache. The default value is 0. |
| FI_NETDIR_MR_CACHE_MAX_SIZE | Sets the maximum memory registration cache size. The default value is mem/core_count/2. |

Prerequisites

The NETDIR provider requires the following prerequisites:

- IPoIB has to be properly set up.

- NetworkDirect* drivers for the adapter should be installed.

Sockets

Description

The Sockets provider is a general-purpose provider for the Intel MPI Library, used mostly on Windows, that can run on any system that supports TCP sockets and implements all libfabric provider requirements and interfaces. The provider lets you run Intel MPI Library applications in a regular Ethernet environment.

Common Control Parameters

| Name | Description |
| --- | --- |
| FI_SOCKETS_IFACE | Specifies a particular network interface. |
| FI_SOCKETS_CONN_TIMEOUT | An integer value that specifies how many milliseconds to wait for one connection establishment. |
| FI_SOCKETS_MAX_CONN_RETRY | An integer value that specifies the number of socket connection retries before reporting a failure. |

Prerequisites

The Sockets provider has no prerequisites.

See the fi_sockets(7) man page for more details.

Building and Installing Libfabric from the Source

To build libfabric on Windows, you first need the NetDirect provider. The Network Direct SDK/DDK can be obtained as a NuGet package (preferred) from NetworkDirect SPI 2.0, or downloaded from HPC Pack 2012 SDK (on the page, press the Download button and select NetworkDirect_DDK.zip).

- Extract the header files from the \NetDirect\include\ folder of the downloaded NetworkDirect_DDK.zip into <libfabricroot>\prov\netdir\NetDirect\, or add the path to the NetDirect headers to the VS include paths.

- Compile. Libfabric has 6 Visual Studio solution configurations:

  - 1-2: Debug/Release ICC (restricted support for Intel Compiler XE 15.0 only);

  - 3-4: Debug/Release v140 (VS 2015 tool set);

  - 5-6: Debug/Release v141 (VS 2017 tool set).

  Note: Make sure you choose the target that fits your compiler. By default, the library is compiled to <libfabricroot>\x64\<yourconfigchoice>

- Link your library:

  - Right-click your project and select Properties.

  - Choose C/C++ > General and add <libfabricroot>\include to "Additional Include Directories".

  - Choose Linker > Input and add <libfabricroot>\x64\<yourconfigchoice>\libfabric.lib to "Additional Dependencies".

Using Your Built Libfabric

There are two ways to use your own libfabric build on Windows OS:

- Point the PATH environment variable to the directory that contains your built DLL:

set PATH=<path_to_your_dll>;%PATH%

- Place your own build of libfabric.dll into the following target folder:

<impi_build>\intel64\libfabric\bin

References

- Open Fabrics Initiative Working Group

- "A Brief Introduction to the OpenFabrics Interfaces" by Paul Grun, Sean Hefty, Sayantan Sur, David Goodell, Robert D. Russell, Howard Pritchard, and Jeffrey M. Squyres

- 2019 OFA workshop
