Cloud native technologies empower organizations to build and run applications that take advantage of the distributed computing offered by cloud infrastructures and exploit the scale, elasticity, resiliency, and flexibility that clouds provide. The Cloud Native Computing Foundation (CNCF) [1] was founded to bring together developers, end users, and vendors to seed technologies that foster and sustain an ecosystem of open source projects and make cloud native computing ubiquitous. Kubernetes [2], originally developed by Google and now hosted by CNCF, is the de facto open-source container orchestration platform for automating the deployment, scaling, and management of containerized applications. It also provides a device plugin framework that lets vendors advertise hardware resources to the cluster through device plugins, so that those resources can be allocated to workloads (e.g. video streaming) that take advantage of them.

The global video streaming market is projected to grow from $419 billion in 2021 to $932 billion by 2028, and demand keeps rising across several channels. Schools and universities record webinars and courses to enhance learning. Businesses stream professional conferences and staff training, and promote their products and services to strengthen their brands and customer engagement. In addition, remote working and lockdowns during the COVID-19 pandemic have fueled market growth even further.

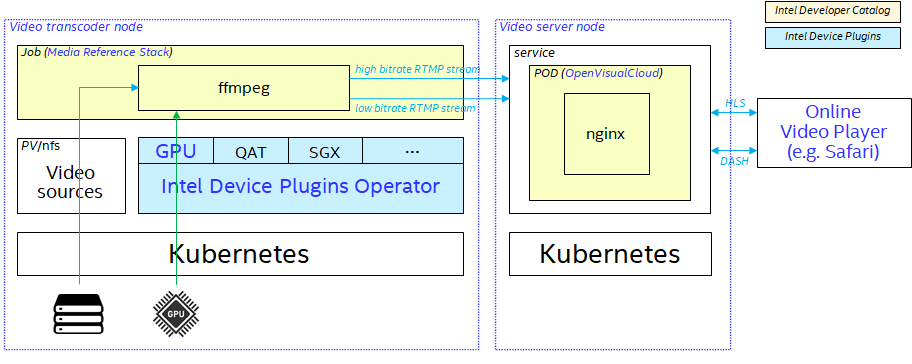

This article walks through a cloud-native video streaming example that offloads the transcoding work to Intel GPU hardware accelerators using the Intel GPU device plugin for Kubernetes [3].

Introduction

The cloud-based video streaming example consists of two nodes running the software components shown in the previous diagram. The video transcoder node on the left uses Intel GPU hardware codecs to transcode the videos and stream them to the video server node on the right. With the Intel GPU device plugin for Kubernetes, the transcoding workload is deployed only on nodes with Intel GPU hardware. Beyond the Intel GPU, Intel device plugins for Kubernetes enable cloud-native workloads to use other hardware features, for example Intel® Software Guard Extensions (Intel® SGX), which protects an application's code and data from modification inside hardened enclaves, or Intel® QuickAssist Technology (Intel® QAT), which accelerates compute-intensive operations.

On the video server node, we stream videos through the Real-Time Messaging Protocol (RTMP) module of an NGINX [4] web server orchestrated by Kubernetes. RTMP, which was initially developed by Macromedia for streaming audio and video over the Internet, provides a low-latency delivery method for high-quality video, but it is not natively supported by modern devices (e.g. smartphones, tablets, and PCs) or browsers. HTTP-based protocols such as HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP), on the other hand, are compatible with virtually any device and operating system, and they support adaptive bitrate streaming, which optimizes the delivered video quality according to the stability and speed of each client's connection.

The following Kubernetes configuration files deploy a workload running the NGINX RTMP module for live streaming. The server ingests video feeds from the video encoder/transcoder workloads over RTMP and delivers HLS and DASH fragments to online video players over HTTP. Instead of building the NGINX-RTMP server from source, we run the pre-integrated media streaming and web hosting software stack of an Open Visual Cloud [5] container image. Refer to the Intel Developer Catalog [6] for more detailed information on Open Visual Cloud software stacks.

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-conf
  namespace: demo
data:
  nginx.conf: |
    worker_processes auto;
    daemon off;
    error_log /var/www/log/error.log info;
    pid /var/run/nginx.pid;

    events {
        worker_connections 1024;
    }

    rtmp {
        server {
            listen 1935;
            chunk_size 4000;

            application live {
                live on;
                interleave on;

                hls on;
                hls_path /var/www/hls;
                hls_nested on;
                hls_fragment 3;
                hls_playlist_length 60;
                # BANDWIDTH is copied verbatim into the HLS master playlist,
                # where it must be an integer number of bits per second
                hls_variant _hi BANDWIDTH=6000000;
                hls_variant _lo BANDWIDTH=3000000;

                dash on;
                dash_path /var/www/dash;
                dash_fragment 3;
                dash_playlist_length 60;
                dash_nested on;
            }
        }
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        tcp_nopush on;
        keepalive_timeout 65;
        gzip on;

        server {
            listen 80;

            location / {
                root /var/www/html;
                index index.html index.htm;
                add_header 'Access-Control-Allow-Origin' '*' always;
                add_header 'Access-Control-Expose-Headers' 'Content-Length';
            }

            location /hls {
                alias /var/www/hls;
                add_header Cache-Control no-cache;
                add_header 'Access-Control-Allow-Origin' '*' always;
                add_header 'Access-Control-Expose-Headers' 'Content-Length';
                types {
                    application/vnd.apple.mpegurl m3u8;
                    video/mp2t ts;
                }
            }

            location /dash {
                alias /var/www/dash;
                add_header Cache-Control no-cache;
                add_header 'Access-Control-Allow-Origin' '*' always;
                add_header 'Access-Control-Expose-Headers' 'Content-Length';
                types {
                    application/dash+xml mpd;
                }
            }
        }
    }
```

File: nginx-conf.yaml

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: streaming-server
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-rtmp
  template:
    metadata:
      labels:
        app: nginx-rtmp
    spec:
      containers:
        - name: nginx-rtmp
          image: openvisualcloud/xeone3-ubuntu1804-media-nginx
          volumeMounts:
            - name: nginx-conf
              mountPath: "/usr/local/etc/nginx"
              readOnly: true
          ports:
            - name: http
              containerPort: 80
            - name: rtmp
              containerPort: 1935
          command: [ "/usr/local/sbin/nginx", "-c", "/usr/local/etc/nginx/nginx.conf" ]
      volumes:
        - name: nginx-conf
          configMap:
            name: nginx-conf
---
kind: Service
apiVersion: v1
metadata:
  name: svc-nginx-rtmp
  namespace: demo
spec:
  selector:
    app: nginx-rtmp
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80
    - name: rtmp
      protocol: TCP
      port: 1935
      targetPort: 1935
  externalIPs:
    - <External IP address for receiving RTMP streams>
```

File: streaming-server.yaml
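Given the ConfigMap above, the ingest and playback endpoints of a stream follow directly from its rtmp and http blocks. The hypothetical helper below (stream_endpoints is not part of the article) spells them out for one stream name. The master-playlist URL matches the one used later in this article; the nested per-stream paths (index.m3u8, index.mpd) reflect our reading of the hls_nested and dash_nested behavior of nginx-rtmp-module and are worth checking against its documentation. 192.0.2.10 is a documentation-only placeholder address.

```shell
#!/bin/bash
# Print the endpoints implied by nginx-conf.yaml for one stream name.
# Arguments: host (placeholder for the Service's external IP), stream name.
stream_endpoints() {
    local host=$1 name=$2 v
    for v in hi lo; do
        # Encoders publish each variant to the RTMP "live" application
        printf 'rtmp_%s %s\n' "$v" "rtmp://$host:1935/live/${name}_$v"
    done
    # hls_variant strips the _hi/_lo suffix to name the master playlist
    printf 'hls %s\n' "http://$host/hls/$name.m3u8"
    for v in hi lo; do
        # hls_nested / dash_nested: one directory per published stream
        printf 'hls_%s %s\n' "$v" "http://$host/hls/${name}_$v/index.m3u8"
        printf 'dash_%s %s\n' "$v" "http://$host/dash/${name}_$v/index.mpd"
    done
}

stream_endpoints 192.0.2.10 my-video
```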

It's good practice to isolate Kubernetes objects in namespaces inside a cluster. This tutorial collects all objects for live streaming in a namespace named demo. To start accepting streams from video encoders, create the YAML files shown above and deploy the NGINX-RTMP server with the following commands:

```shell
$ kubectl create namespace demo
$ kubectl apply -f nginx-conf.yaml
$ kubectl apply -f streaming-server.yaml
```
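Once the pod is running, a quick way to confirm that the Service accepts connections on its RTMP (1935) and HTTP (80) ports is a plain TCP probe. The sketch below is an assumption-laden helper, not part of the article: port_open and SERVER_IP are hypothetical names, and it relies on bash's /dev/tcp redirection (available when bash is built with network redirections, as on common Linux distributions) and on coreutils timeout.

```shell
#!/bin/bash
# Return success if host:port accepts a TCP connection within 3 seconds.
port_open() {
    local host=$1 port=$2
    timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# SERVER_IP is a placeholder: set it to the external IP used in
# streaming-server.yaml (defaults to localhost for a harmless dry run)
SERVER_IP=${SERVER_IP:-127.0.0.1}
for port in 1935 80; do
    if port_open "$SERVER_IP" "$port"; then
        echo "$SERVER_IP:$port accepts connections"
    else
        echo "$SERVER_IP:$port is closed or unreachable"
    fi
done
```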

With modern display technologies delivering ever better user experiences, more and more video clips are produced in ultra-high resolutions. To stream ultra-high resolution videos over the Internet, streaming servers or services usually transcode them into multiple lower-resolution variants, so that adaptive bitrate streaming protocols such as HLS or DASH can deliver the variant that best matches the bandwidth of each client's connection. Transcoding ultra-high resolution video in real time is often practical only with hardware GPU codecs. Therefore, we use the Intel GPU device plugin for Kubernetes to deploy the video transcoding workloads only to Kubernetes nodes with GPU hardware codecs, such as the video transcoder node.
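The two-variant ladder that start.sh applies later in this article can be worked through with plain arithmetic. The sketch below is a hypothetical helper (plan_variants is not part of the article's scripts) using the article's width caps (1920 and 1280) and maxrates (6000k and 3000k): wider sources are scaled down with the aspect ratio preserved, narrower ones pass through. ffmpeg computes the height itself when given -1, so this integer division is exact only for sources that scale evenly, such as 16:9 UHD.

```shell
#!/bin/bash
# Print "<variant> <width>x<height> maxrate=<rate>" for a source resolution.
plan_variants() {
    local src_w=$1 src_h=$2
    local rung name cap rate w h
    for rung in "hi 1920 6000k" "lo 1280 3000k"; do
        read -r name cap rate <<<"$rung"
        if (( src_w > cap )); then
            w=$cap
            # Integer division; exact for 16:9 sources such as 3840x2160
            h=$(( src_h * cap / src_w ))
        else
            w=$src_w
            h=$src_h
        fi
        echo "$name ${w}x${h} maxrate=$rate"
    done
}

plan_variants 3840 2160
# hi 1920x1080 maxrate=6000k
# lo 1280x720 maxrate=3000k
```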

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-cdn-bundle
  namespace: demo
spec:
  storageClassName: sc-cdn
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 1Gi
---
kind: Job
apiVersion: batch/v1
metadata:
  name: transcode
  namespace: demo
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      volumes:
        - name: cdn
          persistentVolumeClaim:
            claimName: pvc-cdn-bundle
      containers:
        - name: ffmpeg
          image: sysstacks/mers-ubuntu
          volumeMounts:
            - mountPath: /var/www/cdn
              name: cdn
          resources:
            limits:
              gpu.intel.com/i915: 1
          command: [ "/var/www/cdn/bin/start.sh" ]
```

File: transcoding.yaml

The YAML file above requests one Intel GPU for the video transcoding job through the following resource limit. Kubernetes therefore schedules the job only on a worker node in the cluster where the Intel GPU device plugin advertises an available gpu.intel.com/i915 resource:

```yaml
# ...
resources:
  limits:
    gpu.intel.com/i915: 1
# ...
```
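To see which nodes could satisfy this request, one can list the gpu.intel.com/i915 capacity each node advertises. The hypothetical helper below (nodes_with_gpu is not part of the article) filters "NAME COUNT" lines and treats a missing value as zero. It looks only at allocatable capacity, whereas the real scheduler also subtracts GPUs already requested by running pods. The kubectl command in the comment is one way to produce such lines, assuming the dots in the resource name are escaped as shown.

```shell
#!/bin/bash
# Print the names of nodes whose advertised GPU count is at least $1 (default 1).
nodes_with_gpu() {
    local want=${1:-1} name count
    while read -r name count; do
        # kubectl prints "<none>" for nodes without the resource
        [[ $count =~ ^[0-9]+$ ]] || count=0
        if (( count >= want )); then
            echo "$name"
        fi
    done
}

# On a live cluster the input could come from:
#   kubectl get nodes --no-headers \
#     -o custom-columns='NAME:.metadata.name,GPU:.status.allocatable.gpu\.intel\.com/i915'
# Hypothetical sample input:
printf '%s\n' 'transcoder-node 1' 'video-server-node <none>' | nodes_with_gpu 1
# prints transcoder-node
```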

In this transcoding job, the pre-integrated container image from the Media Reference Stack [7] is used to leverage the hardware codecs on Intel platforms. Because the job runs in an ephemeral container on whichever Intel GPU-capable node it is scheduled to, the video source files are provided separately by an NFS server outside the cluster through a Kubernetes PersistentVolume:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: sc-cdn
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-nfs
spec:
  storageClassName: sc-cdn
  capacity:
    storage: 10Gi
  accessModes:
    - ReadOnlyMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: <IP address of NFS server>
    path: <Exposed path in the NFS server e.g. /mnt/nfs>
```

File: pv.yaml
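The Job mounts the NFS export at /var/www/cdn and runs /var/www/cdn/bin/start.sh directly, and the transcoding script reads its clips from the clips directory next to its own bin directory. A hypothetical helper for preparing the export directory on the NFS server might look like the sketch below (prepare_cdn_bundle and its arguments are illustrative, not part of the article). It also shows the parameter expansion the script uses to find its root.

```shell
#!/bin/bash
# Lay out an export directory as the Job expects it once mounted:
#   <export>/bin/start.sh   (executed directly, so it must be executable)
#   <export>/clips/*.mp4    (clips read by start.sh)
prepare_cdn_bundle() {
    local export_dir=$1 script=$2
    mkdir -p "$export_dir/bin" "$export_dir/clips" || return 1
    install -m 0755 "$script" "$export_dir/bin/start.sh"
}

# start.sh strips the shortest "/bin/*" suffix from its own path:
script_path=/var/www/cdn/bin/start.sh
echo "${script_path%/bin/*}"   # prints /var/www/cdn
```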

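Set up an NFS server that exports a directory containing a clips/ subdirectory with the H.264-encoded *.mp4 video clips to be delivered. In the same exported directory, create an executable script bin/start.sh (the Job runs it directly from its mount point at /var/www/cdn) with the following content, which instructs ffmpeg to transcode the video clips into high (6000 kbit/s) and low (3000 kbit/s) bitrate streams: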

```bash
#!/bin/bash
# Stream every clip in <bundle>/clips to the NGINX-RTMP server as two
# variants (_hi and _lo), using Intel GPU (QSV) codecs when available.

RTMP_SERVER=svc-nginx-rtmp
RTMP_ENDPOINT='live'
STREAM_NAME='my-video'

VARIANT_HI_MAXRATE=6000k
VARIANT_HI_VWIDTH=1920
VARIANT_LO_MAXRATE=3000k
VARIANT_LO_VWIDTH=1280

function streaming_file {
    local file=$1
    echo "Start streaming $file ..."

    # Look for a render node exposed to this container whose PCI vendor is Intel
    local render_device=''
    local vendor_id=''
    for i in $(seq 128 255); do
        if [ -c "/dev/dri/renderD$i" ]; then
            vendor_id=$(cat "/sys/class/drm/renderD$i/device/vendor")
            if [ "$vendor_id" = '0x8086' ]; then
                # Intel GPU found
                render_device="/dev/dri/renderD$i"
                break
            fi
        fi
    done

    # Software codecs by default; arrays keep arguments with spaces intact
    local o_hwaccel=()
    local o_audio=(-map a:0 -c:a aac -ac 2)
    local o_decode=(-c:v h264)
    local o_encode=(-c:v libx264)
    local o_scaler='scale'

    if [ -n "$render_device" ]; then
        # Use hardware codecs if available
        o_hwaccel=(-hwaccel qsv -qsv_device "$render_device")
        o_decode=(-c:v h264_qsv)
        o_encode=(-c:v h264_qsv)
        o_scaler='scale_qsv'
    fi

    local o_variant_hi=(-maxrate:v "$VARIANT_HI_MAXRATE")
    local o_variant_lo=(-maxrate:v "$VARIANT_LO_MAXRATE")

    # Width of the first video stream: ffprobe prints "width=3840"
    local width=''
    width=$(ffprobe -v error -select_streams v:0 -show_entries stream=width -of default=nw=1 "$file")
    width=${width/width=/}

    if [ "$width" -gt "$VARIANT_HI_VWIDTH" ]; then
        # Scale down to FHD for variant-HI
        o_variant_hi+=(-vf "$o_scaler=$VARIANT_HI_VWIDTH:-1")
    fi
    if [ "$width" -gt "$VARIANT_LO_VWIDTH" ]; then
        # Scale down to HD for variant-LO
        o_variant_lo+=(-vf "$o_scaler=$VARIANT_LO_VWIDTH:-1")
    fi

    # One decode, two encodes, each pushed as FLV over RTMP.
    # ${STREAM_NAME}_hi avoids expanding a variable named STREAM_NAME_hi.
    ffmpeg -re "${o_hwaccel[@]}" "${o_decode[@]}" -i "$file" \
        -map v:0 "${o_variant_hi[@]}" "${o_encode[@]}" "${o_audio[@]}" \
        -f flv "rtmp://$RTMP_SERVER/$RTMP_ENDPOINT/${STREAM_NAME}_hi" \
        -map v:0 "${o_variant_lo[@]}" "${o_encode[@]}" "${o_audio[@]}" \
        -f flv "rtmp://$RTMP_SERVER/$RTMP_ENDPOINT/${STREAM_NAME}_lo"
}

# The script lives in <bundle>/bin, so the bundle root precedes "/bin/"
ROOT=${BASH_SOURCE%/bin/*}
CLIPS=$ROOT/clips

# With no clips, expand the glob to nothing instead of a literal "*.mp4"
shopt -s nullglob

while :
do
    for clip in "$CLIPS"/*.mp4; do
        streaming_file "$clip"
    done
    # Avoid a busy loop if the clips directory is empty
    sleep 1
done
```
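The render-node probe at the top of streaming_file can be exercised without a GPU if the sysfs and /dev/dri locations are parameters. The function below is a hypothetical, testable variant of that loop (find_intel_render_node and its arguments are not part of start.sh). It keeps the script's two conditions: the render node must be present in the container's /dev/dri, presumably because the device plugin mounts only the allocated GPU there while /sys/class/drm can list every GPU on the host, and its PCI vendor ID must be 0x8086 (Intel).

```shell
#!/bin/bash
# Print the first Intel render node, e.g. /dev/dri/renderD128; fail if none.
# Arguments (both optional): sysfs root, device directory.
find_intel_render_node() {
    local sysfs=${1:-/sys} devdir=${2:-/dev/dri} vendor_file name
    for vendor_file in "$sysfs"/class/drm/renderD*/device/vendor; do
        [ -r "$vendor_file" ] || continue
        # .../class/drm/renderD128/device/vendor -> renderD128
        name=${vendor_file%/device/vendor}
        name=${name##*/}
        # start.sh tests -c (character device); -e lets a fake tree stand in
        [ -e "$devdir/$name" ] || continue
        if [ "$(cat "$vendor_file")" = '0x8086' ]; then
            echo "$devdir/$name"
            return 0
        fi
    done
    return 1
}

find_intel_render_node || echo "no Intel render node; start.sh would use software codecs"
```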

Create the YAML files above, start the NFS server, and fill in the NFS server's IP address and exported path in the persistent volume configuration (pv.yaml). Then run the following commands to start the video transcoding job:

```shell
$ kubectl apply -f pv.yaml
$ kubectl apply -f transcoding.yaml
```

Enter the following URL in any HLS-capable client, such as the Safari browser or VLC media player, to receive the live stream:

http://<IP_Address_of_NGINX_RTMP_Server>/hls/my-video.m3u8
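The URL above points at the HLS master playlist, which lists one entry per variant. To check which variants a client will see, the playlist can be parsed with a few lines of bash. The sketch below (list_variants is a hypothetical name) follows the HLS rule that each #EXT-X-STREAM-INF tag is followed by the URI of the variant it describes; the sample input is hand-written for illustration, not captured server output.

```shell
#!/bin/bash
# Print "BANDWIDTH URI" for each variant in an HLS master playlist on stdin.
list_variants() {
    local line bw='' re='[:,]BANDWIDTH=([0-9]+)'
    while IFS= read -r line || [ -n "$line" ]; do
        line=${line%$'\r'}              # tolerate CRLF line endings
        case $line in
            '#EXT-X-STREAM-INF:'*)
                bw=''
                # [:,] keeps AVERAGE-BANDWIDTH from matching
                if [[ $line =~ $re ]]; then bw=${BASH_REMATCH[1]}; fi
                ;;
            '#'* | '') ;;               # other tags and blank lines
            *)
                if [ -n "$bw" ]; then echo "$bw $line"; fi
                bw=''
                ;;
        esac
    done
}

# On a live server:
#   curl -s http://<IP_Address_of_NGINX_RTMP_Server>/hls/my-video.m3u8 | list_variants
list_variants <<'EOF'
#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=6000000
my-video_hi/index.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=3000000
my-video_lo/index.m3u8
EOF
```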

Summary

Typically, a Kubernetes cluster is made up of a variety of nodes, some of which may be equipped with special hardware to accelerate specific applications. Kubernetes device plugins advertise such hardware resources so that the scheduler deploys the applications that request them only to the nodes that have them. This application note illustrates how the Intel GPU device plugin for Kubernetes deploys video transcoding services only to Kubernetes nodes equipped with Intel GPUs, which use the hardware codecs for acceleration and free CPU cycles for other workloads. For instance, the script above performs one video decoding, up to two video scaling, and two video encoding operations to transcode each clip into two streams at different bitrates. The following figures show that CPU consumption drops from 1007% to 21% when the transcoding job is deployed to a node with GPU hardware resources.
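Assuming the percentages follow the convention of tools such as top, where 100% corresponds to one fully busy logical CPU, the reported numbers work out as follows:

$$
\frac{1007\% - 21\%}{1007\%} = \frac{986}{1007} \approx 97.9\%,
\qquad
\frac{1007\%}{21\%} \approx 48
$$

In other words, roughly ten logical CPUs' worth of software transcoding shrinks to about a fifth of one CPU, close to a 48-fold reduction.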

References

1. Cloud Native Computing Foundation (https://www.cncf.io/)

2. Kubernetes - Production-Grade Container Orchestration (https://kubernetes.io/)

3. Intel Device Plugins for Kubernetes (https://github.com/intel/intel-device-plugins-for-kubernetes)

4. NGINX-based Media Streaming Server (https://github.com/arut/nginx-rtmp-module)

5. Intel Open Visual Cloud (https://www.intel.com/content/www/us/en/developer/articles/technical/open-visual-cloud.html)

6. Intel® Developer Catalog (Intel® DevCatalog) (https://www.intel.com/content/www/us/en/developer/tools/software-catalog/about.html)

7. Intel Media Reference Stack (https://www.intel.com/content/www/us/en/developer/articles/containers/media-reference-stack-on-ubuntu.html)