Introduction

In this article, we describe the mechanisms through which you can use Intel® Optane™ DC persistent memory as volatile memory, in addition to dynamic random-access memory (DRAM), for your Java* applications. We start with a brief introduction to Java memory management and OS support for Intel® Optane™ DC persistent memory modules, then dive into the Java usage mechanisms.

Java* Memory Management

The Java Virtual Machine (JVM) provides a managed runtime environment for executing Java programs on target platforms. Java programs are compiled into platform-independent bytecode, packaged into jar files or modules, and executed on the target platform by the JVM.

Memory management is a key feature provided by the JVM. The JVM manages a chunk of memory, called the Java heap, on behalf of the application. All Java objects that the application creates with the new keyword are allocated by the JVM on the Java heap. Java applications do not need to free the memory they allocate. The JVM automatically determines which objects are no longer in use and reclaims their memory through a process called garbage collection.

The default size of the Java heap varies in different JVM implementations and is usually determined based on the available memory in the system. You can also configure the Java heap on the JVM command line.
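To see which heap size a given JVM has actually chosen, the application can query the runtime. The following is a minimal sketch; the class name HeapInfo and the helper toMiB are our own illustrative names, not part of any JDK API.

```java
public class HeapInfo {

    // Converts a byte count to whole mebibytes for display.
    static long toMiB(long bytes) {
        return bytes / (1024L * 1024L);
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        // Upper bound the heap may grow to (set by -Xmx or chosen by the JVM).
        System.out.println("Max heap (MiB):          " + toMiB(rt.maxMemory()));
        // Heap memory currently committed by the JVM.
        System.out.println("Committed heap (MiB):    " + toMiB(rt.totalMemory()));
        // Unused portion of the committed heap.
        System.out.println("Free in committed (MiB): " + toMiB(rt.freeMemory()));
    }
}
```

Running it once without options and once with, for example, -Xmx2g shows the default and the configured maximum. The reported maximum can be slightly lower than the requested value, depending on the garbage collector.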

OpenJDK* is the leading free and open source implementation of the Java Platform, Standard Edition (SE) runtime and is widely adopted by the industry. The reference implementation for Java SE is also based on OpenJDK.

Intel® Optane™ DC Persistent Memory

Intel Optane DC persistent memory represents a new class of memory and storage technology architected specifically for data center usage. It offers a combination of high capacity, affordability, and persistence. By expanding affordable system memory capacity, customers can use systems enabled with this new class of memory to better optimize their workloads by moving and maintaining larger amounts of data closer to the processor and minimizing the higher latency of fetching data from system storage.

Intel® platforms with Intel Optane DC persistent memory modules can be configured in different modes depending on application requirements. In Memory Mode, persistent memory is exposed as the entire addressable memory of the system, and the DRAM acts as a layer of cache. For more control over placement of data, the Intel platform can be configured in App Direct mode where both the DRAM and the persistent memory modules are exposed to the application as addressable memory. You can find more details on configuring Intel® persistent memory in the article Preparing for the Next Generation of Memory.

All use cases and examples shown in this document use Intel Optane DC persistent memory modules in App Direct mode to provide high capacity and low-cost volatile memory.

OS Support

Operating systems (OS) such as Linux* and Windows* expose persistent memory through file systems mounted in direct access (DAX) mode. File systems that support DAX (for example, NTFS, ext4, and XFS) can be mounted on top of persistent memory. DAX gives applications direct access to Intel Optane DC persistent memory modules, bypassing the OS page cache so that reads and writes go directly to the byte-addressable persistent memory modules. File mappings (for example, using mmap on Linux) in a DAX-mounted file system map pages of Intel Optane DC persistent memory modules directly into user space.

The process of mounting persistent memory modules for use as a DAX filesystem is described in more detail in the NDCTL Getting Started guide for Linux and Creating a Windows Development Environment at pmem.io.

Usage Scenarios in Java*

You can use Intel Optane DC persistent memory in either Memory Mode or App Direct mode for your Java applications. To use Memory Mode, you only need to configure the system so that Intel Optane DC persistent memory modules are exposed as the entire addressable memory of the system, with DRAM acting as a cache. You don’t need to make any changes to your Java application or JVM configuration. In this configuration, the JVM itself, just-in-time (JIT) compiled Java application code, metadata, Java stacks, and the Java heap all reside in persistent memory.

App Direct mode, where both DRAM and the Intel Optane DC persistent memory modules are exposed to the application as addressable memory, gives you more control. In this mode, you can continue to have the JVM, JIT-compiled Java application code, stack, and metadata in DRAM and host the entire Java heap, part of the Java heap, or Java objects at a finer granularity in persistent memory.

Intel Optane DC persistent memory has different latency and bandwidth characteristics than DRAM, and the best configuration depends on the characteristics and needs of your Java application. In the following sections, we describe how you can use persistent memory at different levels of granularity in App Direct mode for your Java application.

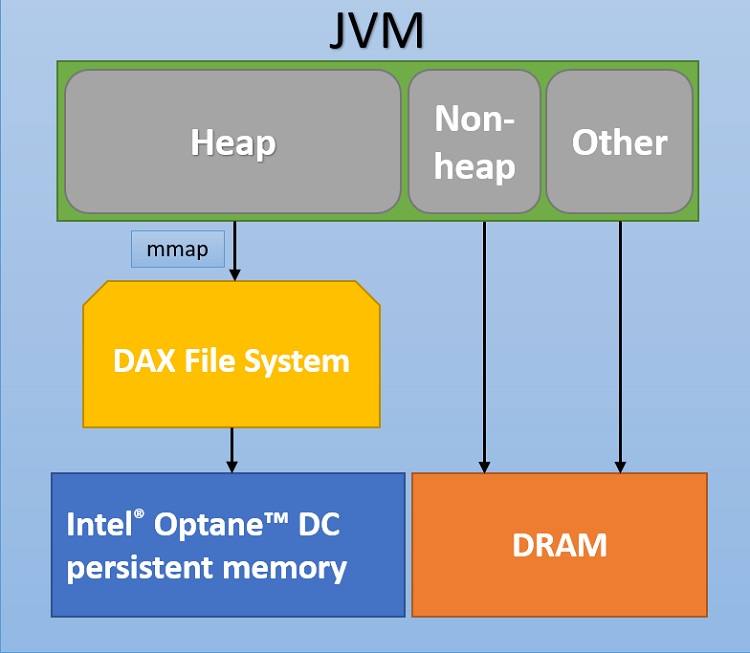

Whole Heap on Persistent Memory

The JVM allocates all Java objects that the application creates with the new keyword on the Java heap. To host the Java heap on Intel Optane DC persistent memory, you will need to use a new feature added in OpenJDK. This feature is currently available as part of OpenJDK binaries for JDK 11. To enable this feature, use the command line flag -XX:AllocateHeapAt, which directs the JVM to allocate the application’s Java heap on persistent memory. This flag takes the path where the persistent memory is mounted and creates a temporary file there to serve as the backing file for the Java heap. This is depicted in the following diagram:

Let’s look at an example in more detail on Linux.

- Intel Optane DC persistent memory module is mounted at /mnt/pmem1:

      # sudo mount -v | grep /pmem
      /dev/pmem1 on /mnt/pmem1 type ext4 (rw,relatime,dax)

- We run the MyApp class with 128 GB of heap allocated on Intel Optane DC persistent memory as shown below:

      # java -version
      openjdk version "11.0.2" 2019-01-15
      OpenJDK Runtime Environment 18.9 (build 11.0.2+9)
      OpenJDK 64-Bit Server VM 18.9 (build 11.0.2+9, mixed mode)
      # java -Xmx128g -XX:AllocateHeapAt=/mnt/pmem1 MyApp &
      [1] 13068

- The process maps file for the Java process shows 128 GB memory in the range 7ef394000000-7f1394000000 mapped to Intel Optane DC persistent memory modules:

      # cat /proc/13068/maps | grep heap
      ...
      7ef394000000-7f1394000000 rw-s 00000000 103:01 11 /mnt/pmem1/jvmheap
      ...
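The 128 GB figure can be checked from the address range in the maps entry. The sketch below parses one line in the /proc/<pid>/maps format and subtracts the start address from the end address. The class name MapsEntry and the method sizeBytes are illustrative names of our own, and the sample line is the one from the listing above with the elided parts left out.

```java
public class MapsEntry {

    // Returns the size in bytes of the address range at the start of a
    // /proc/<pid>/maps line ("start-end perms offset dev inode path").
    static long sizeBytes(String mapsLine) {
        String range = mapsLine.trim().split("\\s+")[0];
        String[] bounds = range.split("-");
        long start = Long.parseUnsignedLong(bounds[0], 16);
        long end = Long.parseUnsignedLong(bounds[1], 16);
        return end - start;
    }

    public static void main(String[] args) {
        String line = "7ef394000000-7f1394000000 rw-s 00000000 103:01 11 /mnt/pmem1/jvmheap";
        long bytes = sizeBytes(line);
        // 0x7f1394000000 - 0x7ef394000000 = 0x2000000000 = 2^37 bytes
        System.out.println(bytes + " bytes = " + (bytes >> 30) + " GiB");
        // prints "137438953472 bytes = 128 GiB"
    }
}
```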

Note that other blocks of memory managed by the JVM, such as JIT-compiled Java application code, stack, and metadata continue to be allocated in DRAM. This flag does not change the behavior of the rest of the JVM. The existing heap and garbage collection-related flags such as -Xmx, -Xms, etc. have the same semantics as before.

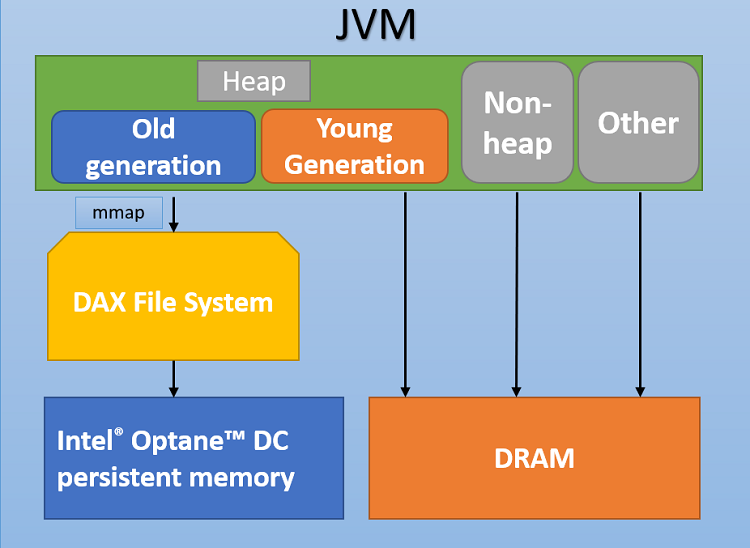

Partial Heap on Persistent Memory

Java memory management partitions the heap into two generations: young and old. New allocations are performed in the young generation, and objects stay in the young generation for a few garbage collection (GC) cycles. After an object survives a few GC cycles, it is moved into the old generation. Once moved to the old generation, the object stays there until it becomes unreachable and is collected by GC.
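The generations show up at run time as separate heap memory pools, which an application can list through the standard java.lang.management API. The following is a small sketch; the class name HeapPools is our own, and the pool names it prints depend on the garbage collector in use, so treat any specific name as collector-dependent.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.util.ArrayList;
import java.util.List;

public class HeapPools {

    // Returns the names of the memory pools that make up the Java heap.
    static List<String> heapPoolNames() {
        List<String> names = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() == MemoryType.HEAP) {
                names.add(pool.getName());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        // With a generational collector, this typically lists the young
        // generation spaces (eden, survivor) and the old generation.
        for (String name : heapPoolNames()) {
            System.out.println(name);
        }
    }
}
```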

Although each application behaves differently, memory accesses are typically more frequent in the young generation than in the old generation. At the same time, the young generation is typically a small fraction of the total heap. This means that applications can allocate a large old generation in Intel Optane DC persistent memory and place a smaller young generation in DRAM.

To host only the old generation of the Java heap on persistent memory, you will need to use a new experimental feature in OpenJDK. This feature is currently available as part of OpenJDK binaries for JDK 12. To enable the feature, use the command line flag -XX:AllocateOldGenAt, which directs the JVM to allocate the old generation of the Java heap on persistent memory. The young generation continues to be allocated on DRAM. The following diagram depicts this mapping:

Let’s look at an example on Linux:

- As before, Intel Optane DC persistent memory is mounted at /mnt/pmem1:

      # sudo mount -v | grep /pmem
      /dev/pmem1 on /mnt/pmem1 type ext4 (rw,relatime,dax)

- We run the MyApp class with the old generation placed on persistent memory:

      # java -version
      openjdk version "12" 2019-03-19
      OpenJDK Runtime Environment (build 12+33)
      OpenJDK 64-Bit Server VM (build 12+33, mixed mode, sharing)
      # java -XX:+UseG1GC -Xmx128g -Xmn16g -XX:+UnlockExperimentalVMOptions -XX:AllocateOldGenAt=/mnt/pmem1 MyApp

This option is supported for G1 and ParallelOld garbage collection algorithms. This feature maintains the GC ergonomics and works with dynamic sizing of heap generations. More details on this feature can be found in the Release Notes.
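An application can check at startup whether it was launched with one of these flags by inspecting the JVM input arguments. The following is a minimal sketch; the class name PmemFlags and the helper optionValue are illustrative names of our own, and the helper only does string matching on the arguments, it does not ask the JVM whether the option took effect.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.Optional;

public class PmemFlags {

    // Returns the value of a "-XX:<name>=<value>" option from a list of
    // JVM arguments, keeping the last occurrence if it appears more than once.
    static Optional<String> optionValue(List<String> jvmArgs, String name) {
        String prefix = "-XX:" + name + "=";
        return jvmArgs.stream()
                .filter(arg -> arg.startsWith(prefix))
                .map(arg -> arg.substring(prefix.length()))
                .reduce((first, second) -> second);
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("Whole heap at: "
                + optionValue(jvmArgs, "AllocateHeapAt").orElse("(not set)"));
        System.out.println("Old gen at:    "
                + optionValue(jvmArgs, "AllocateOldGenAt").orElse("(not set)"));
    }
}
```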

DirectByteBuffer on Persistent Memory

If you want to use persistent memory at a finer granularity than the whole heap or part of the heap, you can allocate DirectByteBuffer objects on Intel Optane DC persistent memory. ByteBuffers have been available since JDK 1.4. There are two mechanisms for doing this, described below.

DirectByteBuffer using FileChannel

A DirectByteBuffer can be created by mapping a region of a file directly into memory. You can use the functionality provided by the FileChannel class to map a DirectByteBuffer to Intel Optane DC persistent memory.

The following code demonstrates this approach:

    import java.io.IOException;
    import java.io.RandomAccessFile;
    import java.nio.ByteBuffer;
    import java.nio.channels.FileChannel;
    import java.nio.channels.FileChannel.MapMode;

    class OffHeap {
        public static void main(String[] args) throws IOException {
            // Intel DCPMM is mounted as a DAX filesystem at /mnt/pmem
            final int size = 1 << 30; // 1 GB

            // Open a file on Intel DCPMM for read/write
            RandomAccessFile pmFile = new RandomAccessFile("/mnt/pmem/myfile", "rw");
            // Get the corresponding file channel
            FileChannel pmChannel = pmFile.getChannel();
            // Map a 1 GB region of the DAX file into memory
            ByteBuffer pmembb = pmChannel.map(MapMode.READ_WRITE, 0, size);

            // Perform writes
            for (int i = 0; i < size; i++) {
                pmembb.put(i, (byte) (i & 0xff));
            }
            // Perform reads and verify
            for (int i = 0; i < size; i++) {
                if (pmembb.get(i) != (byte) (i & 0xff)) {
                    System.out.println("Error at index: " + i);
                }
            }

            // Truncate the file to 0 size
            pmChannel.truncate(0);
            // Close the file channel
            pmChannel.close();
            // Close the file
            pmFile.close();
        }
    }

In the example above, put() and get() calls on the ByteBuffer perform direct writes to and reads from persistent memory. Note that you have to use MapMode.READ_WRITE or MapMode.READ_ONLY. MapMode.PRIVATE creates a copy-on-write mapping, so writes do not go directly to persistent memory.
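The difference between the map modes can be observed on any file system, not only on a DAX mount. The sketch below writes one byte through a mapping and then reads the file back through the channel. With READ_WRITE the file changes, while with PRIVATE the write stays in a private copy. The class name MapModeDemo and the helper fileByteAfterWrite are illustrative names of our own, and the temporary file lives in the default temporary directory as a stand-in for a file on persistent memory.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.FileChannel.MapMode;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class MapModeDemo {

    // Creates a one-byte file holding 0, writes 'value' through a mapping
    // of the given mode, and returns the byte the file holds afterwards.
    static byte fileByteAfterWrite(MapMode mode, byte value) {
        try {
            Path file = Files.createTempFile("mapmode", ".bin");
            file.toFile().deleteOnExit();
            Files.write(file, new byte[] {0});
            try (FileChannel ch = FileChannel.open(file,
                    StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                MappedByteBuffer map = ch.map(mode, 0, 1);
                map.put(0, value);
                if (mode == MapMode.READ_WRITE) {
                    map.force(); // flush the shared mapping to the file
                }
                // Read the file contents back through the channel.
                ByteBuffer check = ByteBuffer.allocate(1);
                ch.read(check, 0);
                return check.get(0);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("PRIVATE:    " + fileByteAfterWrite(MapMode.PRIVATE, (byte) 42));
        System.out.println("READ_WRITE: " + fileByteAfterWrite(MapMode.READ_WRITE, (byte) 42));
    }
}
```

On Linux, the PRIVATE line reports 0 because the file is untouched, and the READ_WRITE line reports 42.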

DirectByteBuffer Using Java Native Interface (JNI)

OpenJDK also supports the creation of a DirectByteBuffer from native code via the Java Native Interface (JNI). You can use JNI to allocate a DirectByteBuffer on persistent memory. The JNI code can use libraries such as Memkind. Memkind manages different kinds of memory, including Intel Optane DC persistent memory, and supports calls such as memkind_alloc() to allocate memory blocks of different sizes. The article Use Memkind to Manage Large Volatile Memory Capacity describes how to use Memkind with Intel® Optane™ DC persistent memory. JNI provides a function called NewDirectByteBuffer, which can then wrap the natively allocated persistent memory in a DirectByteBuffer so that it can be accessed from Java code.
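On the Java side, a buffer obtained this way is used like any other direct ByteBuffer. The following sketch shows only that Java side, under stated assumptions: the native method allocate is hypothetical and has no implementation here (a real JNI library would have to provide it, for example by allocating with Memkind and returning the result of NewDirectByteBuffer), so main() uses ByteBuffer.allocateDirect as a DRAM stand-in. The class name PmemBuffers and the helper fillAndVerify are our own illustrative names.

```java
import java.nio.ByteBuffer;

public class PmemBuffers {

    // Hypothetical native method. A JNI library would allocate 'size' bytes
    // of persistent memory and wrap them with NewDirectByteBuffer.
    // No such library is part of this sketch, so main() never calls it.
    static native ByteBuffer allocate(long size);

    // Fills the buffer with a byte pattern and returns the number of
    // mismatches found when reading the pattern back.
    static int fillAndVerify(ByteBuffer buf) {
        for (int i = 0; i < buf.capacity(); i++) {
            buf.put(i, (byte) (i & 0xff));
        }
        int errors = 0;
        for (int i = 0; i < buf.capacity(); i++) {
            if (buf.get(i) != (byte) (i & 0xff)) {
                errors++;
            }
        }
        return errors;
    }

    public static void main(String[] args) {
        // Stand-in for a buffer returned from native code: 1 MB in DRAM.
        ByteBuffer buf = ByteBuffer.allocateDirect(1 << 20);
        System.out.println("direct=" + buf.isDirect() + " errors=" + fillAndVerify(buf));
    }
}
```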

Summary

In this article, we looked at the ways available today to use Intel Optane DC persistent memory in App Direct mode for Java applications. The different mechanisms give users powerful tools to control the placement of data on persistent memory at different levels of granularity. The best configuration to use depends on the application characteristics and needs.

About the Authors

Kishor Kharbas is a software engineer at Intel. He joined Intel in 2011 and has been working on Java virtual machine optimizations for Intel server platforms.

Sandhya Viswanathan is a software engineer at Intel with 25 plus years of industry experience in compilers and software development tools. She joined Intel in 2008 and has been working on Java virtual machine optimizations for Intel server platforms. She is a technical lead in the Java engineering team focusing on Java JIT compiler, runtime, and GC optimizations.