Digital displays and signs are all around you. You may have seen them cropping up at shopping centers and doctors’ offices. From video walls to AR fitting mirrors to ordering menus, digital signs are pervasive and are becoming part of the everyday shopping experience.

To develop cutting-edge display experiences, it’s important to understand the ecosystem and underlying motivations around digital signage. The economics of digital signage are based entirely on audience reach and influence, and on the ability to attribute that reach and influence to a favorable outcome such as brand or product awareness or even an actual sale. Hence, development often centers on guaranteeing delivery of media content to an audience, gathering impression metrics, and reducing maintenance costs while increasing uptime. Signs currently range from stand-alone, completely disconnected displays (which have slightly more capabilities than a standard DVD player) to smart signage with full sensor integration, and everything in between. The opportunity for innovation lies in tying technologies together to increase the value of the information gathered, engage the audience to drive more revenue, and reduce operational costs.

To accomplish these goals, you as a developer have several tools at your disposal. The key is knowing which tool to apply when, and how best to map each tool to your situation.

The Modern Digital Sign

A state-of-the-art signage player consists of the following components:

- The media player is the central command of the digital sign. It’s the primary driver of information to the audience. It ultimately controls the advertising schedule and coordinates the decoding sequences that take media stored on a drive and display the content on the screen. System integrators have a choice of several open source as well as for-pay content management systems for the media player, available across various operating systems and hardware configurations.

- A camera is used to collect a data stream that is then processed for anonymous audience data. This data may include demographics, emotional reaction of viewers, and the number of impressions (people) the sign reached. The process of extracting this useful information from a camera feed is called computer vision.

- Analytics combines the audience data with the log of what has been played, then processes that information to uncover trends and patterns. This process gives businesses insight into their audience. It allows for tailoring of the message, as well as potentially adapting when and what the player chooses to play in order to have the most revenue impact.

- Security is necessary in a deployed environment to prevent hacking and malicious intrusions. It is especially critical as more digital signs become connected to the internet and may be exposed to a wider array of attacks.

- When the player goes down, for example if the OS is locked up or the hardware becomes faulty, remote device management is the first line of defense to avoid a service call in the field. It can also play an important role in optimizing your deployment, such as saving energy by turning off the signs at night or during closures. Additionally, remote management allows for scalable updates of both the advertising content and the player software.

Media Player Implementation

There are many ways to implement the software for a player. Understanding the various hardware components involved allows you to better leverage the software available to get what you want, whether it’s better performance or saving more energy.

The CPU is the consistent element across any Intel hardware. All Intel processors will have a CPU with a common 64-bit architecture. While you can perform all of the key functionalities of the player using the CPU (scheduling, decoding, playback, and computer vision), the CPU is really ideal for scheduling, running the operating system, doing administrative tasks (related to device management), security, and perhaps a small amount of analytics.

The GPU is usually better for higher quality rendering and shading of frames, as well as computer vision applications. The GPUs in the Intel® Core™ processor family range in capability, from low to high, from Intel® HD Graphics to Intel® Iris™ graphics to Intel® Iris™ Pro graphics.

There is one often overlooked sub-block of the GPU, sometimes referred to as the Media Fixed Function block [1], which contains hardware accelerators for encoding, decoding, transcoding, and certain video processing filters. The media block is critical to digital signage applications because it allows decoding to be offloaded from the CPU and GPU cores. For example, the 7th Generation Intel® Processor family for S Platforms has hardware accelerators for decoding MPEG2, VC1/WMV9, AVC/H264, VP8, JPEG/MJPEG, and more [2]. To understand the capabilities of your particular processor, it’s best to check the datasheet.

The final component of the system is the display block, which drives the signal to the screen or monitor.

In order to understand how these all work together, below is a video analytics scenario showing how you would ideally use the various hardware components in combination with each other.

Video Analytics Example Data Flow

There are three main data flows to understand when implementing video analytics in digital signage.

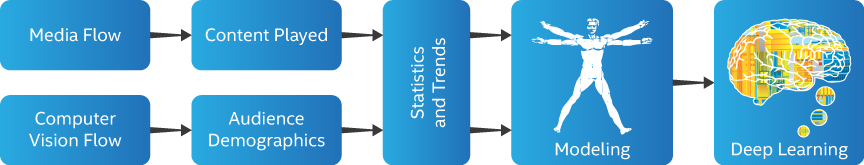

- The media flow plays the content on the screen.

- The computer vision flow gets a data stream from a camera and processes it for anonymous audience data.

- The analytics flow puts the media and computer vision flows together and extracts meaningful data.

An example of what this solution might look like in its most basic form can be found in the RAS-100 Retail Analytics Solution Pack demo kit on the Intel Solutions Directory.

Media Flow

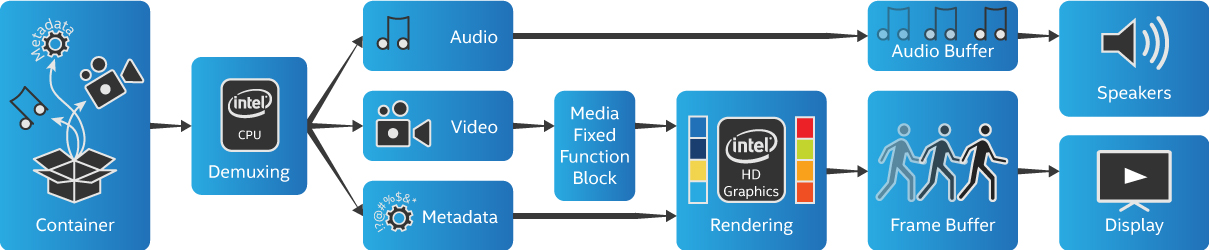

Going from left to right, the media starts out in a certain container format and is demuxed (basically split apart) by the CPU into audio, video, and metadata streams. The video stream, which is still a compressed bitstream at this point, is decoded by the Media Fixed Function block into raw frames and then rendered with any metadata. The rendered video is then put into a frame buffer that is kept in sync with the audio buffer.
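The demux and sync steps can be illustrated with a toy model. The sketch below is not the Intel® Media SDK or any real container parser; the `Packet` type, the `demux` and `frames_due` helpers, and the sample data are all hypothetical, and the payloads are placeholders rather than real encoded media. It only shows the idea of splitting interleaved packets into per-stream queues and using the audio clock to decide which video frames are due.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    """Hypothetical container packet; real containers carry far more detail."""
    stream: str     # "video", "audio", or "metadata"
    pts_ms: int     # presentation timestamp in milliseconds
    payload: bytes  # placeholder for the still-encoded data


def demux(packets):
    """Split interleaved packets into per-stream lists, preserving order."""
    streams = defaultdict(list)
    for packet in packets:
        streams[packet.stream].append(packet)
    return dict(streams)


def frames_due(video_packets, audio_clock_ms):
    """Return the video packets whose timestamp is at or before the audio clock."""
    return [p for p in video_packets if p.pts_ms <= audio_clock_ms]


# Interleaved packets as they might appear in a container file.
container = [
    Packet("video", 0, b"v0"),
    Packet("audio", 0, b"a0"),
    Packet("metadata", 0, b"title"),
    Packet("video", 40, b"v1"),
    Packet("audio", 21, b"a1"),
    Packet("video", 80, b"v2"),
]

streams = demux(container)
due = frames_due(streams["video"], audio_clock_ms=45)
print([p.pts_ms for p in due])  # [0, 40]
```

In a real player the video packets would be handed to the hardware decoder at this point, and the frame at 80 ms would wait until the audio clock catches up.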

Computer Vision Flow

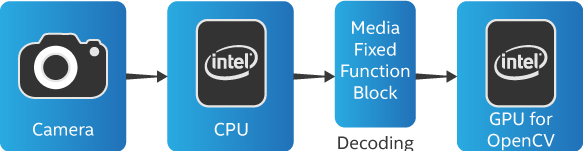

The camera sends an encoded stream (the format depends on the camera type you buy) to the CPU. The CPU unpacks the encoded stream and sends the video to the media block for decoding. The decoded frames from the media block can then be used for computer vision processing by the CPU using OpenCV or other computer vision libraries. Decoding through the media block isn’t strictly necessary; it depends on the application, since some computer vision libraries can process encoded streams.
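One way the output of this flow might be reduced to an impression count is sketched below. It assumes the computer vision library reports an opaque, anonymous track ID for every person it follows from frame to frame; that is an assumption, since no particular tracker is named here. The `count_impressions` function and the `min_dwell_s` threshold are hypothetical names chosen for illustration.

```python
def count_impressions(observations, min_dwell_s=1.0):
    """Count tracks that stayed in view for at least min_dwell_s seconds.

    observations: iterable of (timestamp_s, track_id) pairs, one per
    detection per frame. track_id is an opaque label, not an identity.
    """
    first_seen = {}
    last_seen = {}
    for timestamp_s, track_id in observations:
        # Keep the earliest and latest time each track was observed.
        first_seen[track_id] = min(first_seen.get(track_id, timestamp_s), timestamp_s)
        last_seen[track_id] = max(last_seen.get(track_id, timestamp_s), timestamp_s)
    return sum(
        1
        for track_id in first_seen
        if last_seen[track_id] - first_seen[track_id] >= min_dwell_s
    )


# Made-up detections: "a" and "c" linger, "b" only passes by.
observations = [
    (0.0, "a"), (0.5, "a"), (1.5, "a"),
    (1.0, "b"), (1.2, "b"),
    (2.0, "c"), (3.5, "c"),
]
impressions = count_impressions(observations)
print(impressions)  # 2
```

Filtering on dwell time is a design choice: it keeps passers-by who never looked at the screen from inflating the count, at the cost of missing very short glances.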

Analytics Flow

The timestamp data from the media flow and the results of the computer vision flow are combined. Analysis can then be done on a range of scales. At the local level, the player could potentially adapt the advertising based on the current live audience. Either locally or on a server, the data can be correlated for simple trends, such as which ads were seen the most or which reached the most viewers of a certain demographic. At the highest level, you can use machine learning and deep learning to infer why certain ads are performing well and to predict what impact certain ads might have. Tying all this information to ad campaigns or specific events can further increase value for advertisers.
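The simplest form of this correlation, counting which ad was on screen when each viewer was seen, could look like the sketch below. The `Play` record, the `impressions_per_ad` function, and the sample log are hypothetical; a real content management system will have its own log format, and the viewer timestamps are assumed to come from the computer vision flow.

```python
from collections import Counter
from typing import NamedTuple


class Play(NamedTuple):
    """Hypothetical play-log entry written by the media player."""
    ad_id: str
    start_s: float
    end_s: float


def impressions_per_ad(play_log, viewer_times):
    """Attribute each viewer timestamp to the ad on screen at that moment."""
    totals = Counter()
    for seen_at in viewer_times:
        for play in play_log:
            if play.start_s <= seen_at < play.end_s:
                totals[play.ad_id] += 1
                break  # a timestamp belongs to at most one play
    return totals


play_log = [Play("coffee", 0, 15), Play("shoes", 15, 30), Play("coffee", 30, 45)]
viewer_times = [3.0, 14.9, 15.0, 31.2, 44.0, 50.0]  # 50.0 falls outside the log

totals = impressions_per_ad(play_log, viewer_times)
print(totals.most_common())  # [('coffee', 4), ('shoes', 1)]
```

The nested loop is fine for a day's worth of log entries on one player; for server-side analysis across many signs, sorting both lists and sweeping them once would scale better.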

Development Options

Now that you understand a little more about how digital signage works under the hood, there are several development options for you depending on what you’re doing:

Intel® Media SDK

The Intel® Media SDK allows for hardware acceleration of encoding, decoding, RAW photo and video processing, and certain video processing filters. The Intel® Media SDK is the software API to employ when utilizing the underlying Media Fixed Function hardware. However, it’s important to know that you’re coding to an optimized framework, not directly to hardware. You should think of it more like a data stream or process that you set in motion than something you control step by step.

There are samples and tutorials that come with the Intel® Media SDK and they serve different purposes. The samples are ideal for command line feature tests (like installation verification) or evaluating new features. The tutorials are simple starting points with coding examples. So if it’s your first time using the SDK, you should start with the tutorials.

The Intel® Media SDK is also available for Linux* client platforms in the form of the Intel® Media SDK for Embedded Linux. So whether your primary operating system is Windows* or Linux*, you can take advantage of these media processing capabilities.

For Windows client:

Additional material:

- Get Amazing GPU Acceleration for Media Pipelines - Webinar Replay

- Framework for developing applications using Media SDK

- Integrating Intel® Media SDK with FFmpeg for mux/demuxing and audio encode/decode usages

OpenCV

OpenCV (http://opencv.org/) is a BSD-licensed library for computer vision applications. It is cross-platform, and a vast number of tutorials are available on the web. When a system has OpenCL (https://www.khronos.org/opencl/) installed, OpenCV can utilize resources across the CPU and GPU.

- To get started with OpenCV

- Installing the Intel SDK for OpenCL Applications

- Tips and Tricks to Heterogeneous Processing with OpenCL Webinar

Intel® Active Management Technology

Intel® Active Management Technology, or Intel® AMT, is a feature of the Intel® Core™ processor family with Intel® vPro™ technology. It is capable of out-of-band remote management even in the absence of an OS or hard disk drive. Specific advantages for a digital signage client include the ability to recover from a crashed hard drive or a non-booting OS, as well as to perform routine device maintenance such as BIOS and driver updates. Some of the common applications it allows:

- High-resolution keyboard, video, mouse (KVM) over network

- Remote power up, power down, power cycle

- Redirect of boot process to a different image (like a network share)

- Remote access to BIOS settings

There is an open source project called Meshcentral* (https://meshcentral.com/info/) which is one way to get started using Intel® AMT. There is also an application called the Intel vPro Platform Solution Manager that comes with several pre-installed plugins like KVM Remote Control and Power Management. The Intel® AMT SDK can be used to integrate remote features into an existing IT infrastructure. There is an Intel® vPro™ forum where you can learn about and ask questions concerning Intel® AMT.
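Remote power control is most useful when it is driven by a schedule. The sketch below only decides which action a sign needs based on opening hours; it does not call Intel® AMT or any management tool, and the function names, state strings, and action labels are hypothetical. Sending the resulting action to the device would be the job of whichever management tool you use.

```python
from datetime import datetime, time


def desired_power_state(now, opens, closes):
    """Return "on" during opening hours and "off" otherwise.

    Handles opening windows that cross midnight (for example 18:00 to 02:00).
    """
    current = now.time()
    if opens <= closes:
        is_open = opens <= current < closes
    else:
        is_open = current >= opens or current < closes
    return "on" if is_open else "off"


def power_action(current_state, now, opens, closes):
    """Return "power_up", "power_down", or None if no change is needed."""
    target = desired_power_state(now, opens, closes)
    if target == current_state:
        return None
    return "power_up" if target == "on" else "power_down"


# A sign still running at 23:30 in a store that closes at 22:00.
action = power_action("on", datetime(2024, 1, 8, 23, 30), time(8, 0), time(22, 0))
print(action)  # power_down
```

Keeping the decision separate from the command makes the schedule easy to test without touching any hardware.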

Conclusion

With the increased capabilities of the 7th Generation Intel® Core™ processor family, you get integrated fixed function media blocks for a number of mainstream video codecs, such as HEVC and VP9. Offloading media processing tasks from the CPU and GPU to the Media Fixed Function block helps prevent video stuttering and allows for on-the-fly quality improvements.

Whether you’re upgrading an existing low-end digital signage client or enhancing one with computer vision, the sections in this article give you a starting point for taking your development further.

For more information on developing engaging display experiences:

References

[1] /content/www/us/en/develop/videos/get-amazing-intel-gpu-acceleration-for-media-pipelines-webinar-replay.html, 16:00

[2] http://www.intel.com/content/www/us/en/processors/core/7th-gen-core-family-desktop-s-processor-lines-datasheet-vol-1.html, Section 2.4.3

Additional information

- Hardware systems on the Intel Solutions Directory (https://solutionsdirectory.intel.com/market-segment/digital-signage)

- Open Pluggable Specification (http://www.intel.com/content/www/us/en/embedded/retail/digital-signage/open-pluggable-specification/overview.html)