Introduction

Intel’s Cache Allocation Technology (CAT) helps address shared resource concerns by providing software control of where data is allocated into the last-level cache (LLC), enabling isolation and prioritization of key applications.

Originally introduced on a limited set of communications processors in the Xeon E5-2600 v3 family of server processors, the CAT feature is enhanced and now available on all SKUs starting with the Xeon E5 v4 family. The Xeon E5 v4 family provides more classes of service (CLOS) than prior-generation processors, enabling more flexible usage of CAT. It also adds CPUID-based enumeration support, so that software which uses CAT can be reused across generations with fewer changes.

This article provides an overview of the CAT feature and architecture, while the next article in the series discusses use models. Information on proof points and software support is provided in subsequent articles.

The Xeon E5 v4 family also introduces an extension to CAT called Code and Data Prioritization (CDP), which is discussed separately in a series of articles here and here.

CAT and CDP are part of a larger series of technologies called Intel Resource Director Technology (RDT). More information on the Intel RDT feature set can be found here, and an animation illustrating the key principles behind Intel RDT is posted here.

Why is Cache Allocation Technology (CAT) needed?

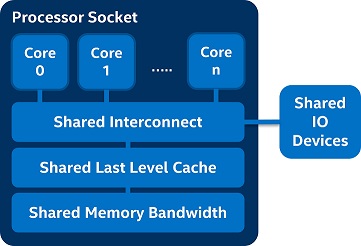

In today’s datacenter cloud environment, where multitenant VMs are ubiquitous and often run many heterogeneous types of applications, ensuring consistent performance and prioritizing important interactive applications can be a challenge. Many resources in the datacenter are shared, including the network, and within a modern multicore platform resources such as the last-level cache are shared as well:

Figure 1. Many resources within modern multi-core platforms are shared, including the last-level cache.

While these shared resources provide good performance scalability and throughput, certain applications such as background video streaming or transcoding apps can over-utilize the cache, reducing the performance of more important applications. For example, in the figure below, the “noisy neighbor” (on core zero) consumes excessive last-level cache, which is allocated on a first-come, first-served basis. This can cause performance loss in the higher-priority application shown in green on core one.

Figure 2. A “noisy neighbor” on core zero over-utilizes shared resources in the platform, causing performance inversion (though the priority app on core one is higher priority, it runs slower than expected).

Further, variability in the environment as new workloads are introduced and completed can pose a challenge in determining how long a given job will run, or whether its performance will vary over time in the case of long-running workloads.

What is Cache Allocation Technology (CAT)?

Cache Allocation Technology (CAT) provides software-programmable control over the amount of cache space that can be consumed by a given thread, app, VM, or container. This allows, for example, OSs to protect important processes, or hypervisors to prioritize important VMs even in a noisy datacenter environment.

The basic mechanisms of CAT include:

- The ability to enumerate the CAT capability and the associated LLC allocation support via CPUID.

- Interfaces for the OS/hypervisor to group applications into classes of service (CLOS) and indicate the amount of last-level cache available to each CLOS. These interfaces are based on MSRs (Model-Specific Registers).
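The two mechanisms above can be illustrated with a minimal sketch. It does not execute CPUID or touch any MSR; it only decodes register values passed in as plain integers. The field layout (CPUID leaf 0x10, sub-leaf 1) and the MSR base address follow the Intel Software Developer's Manual as best understood here and should be verified against it. The function names, the `L3CatInfo` type, and the sample register values are hypothetical, chosen for illustration only.

```python
from dataclasses import dataclass

# Base address of the per-CLOS L3 mask MSRs (IA32_L3_MASK_0), per the SDM.
IA32_L3_MASK_BASE = 0xC90


@dataclass
class L3CatInfo:
    cbm_length: int       # number of bits in each capacity bitmask
    shared_mask: int      # bits that may be shared with other platform agents
    cdp_supported: bool   # Code and Data Prioritization available
    num_clos: int         # number of classes of service


def decode_l3_cat_leaf(eax: int, ebx: int, ecx: int, edx: int) -> L3CatInfo:
    """Decode CPUID.(EAX=0x10, ECX=1) register values for L3 CAT."""
    return L3CatInfo(
        cbm_length=(eax & 0x1F) + 1,       # EAX[4:0] holds length minus one
        shared_mask=ebx,
        cdp_supported=bool(ecx & (1 << 2)),
        num_clos=(edx & 0xFFFF) + 1,       # EDX[15:0] holds highest CLOS number
    )


def l3_mask_msr(clos: int) -> int:
    """Address of the mask MSR that holds the bitmask for a given CLOS."""
    if clos < 0:
        raise ValueError("CLOS must be non-negative")
    return IA32_L3_MASK_BASE + clos


# Hypothetical register values, not read from real hardware.
info = decode_l3_cat_leaf(eax=0x13, ebx=0x000C0000, ecx=0x4, edx=0xF)
print(info)
print(f"Mask MSR for CLOS 3: {l3_mask_msr(3):#x}")
```

On a real system, an OS or hypervisor would obtain these values through privileged CPUID and MSR access rather than from hard-coded constants.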

Because software enabling support is provided, most users can use CAT through existing software patches and tools.

Key concepts of CAT are described below; subsequent articles in this series provide additional details on how CAT works, including usages, proof points, and software enabling collateral.

Key Concepts

Cache Allocation Technology introduces an intermediate construct called a Class of Service (CLOS), which acts as a resource control tag into which a thread, app, VM, or container can be grouped. Each CLOS in turn has associated resource capacity bitmasks (CBMs) indicating how much of the cache can be used by that CLOS.

Figure 3. Classes of Service (CLOS) enable flexible control over threads, apps, VMs, or containers, given software support.

Software can associate applications with CLOS as needed to support a variety of usage models, including prioritizing key applications. Full documentation about CLOS is available in the Intel® Software Developer’s Manual.
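As a rough illustration of the CLOS-as-tag idea, the sketch below groups workloads by CLOS and composes the value that privileged software would write to the `IA32_PQR_ASSOC` MSR when one of those workloads is scheduled on a logical processor. The MSR address and the placement of the CLOS in the upper 32 bits follow the Software Developer's Manual as understood here; the 10-bit RMID width is an assumption, and the workload names, CLOS assignments, and helper functions are hypothetical.

```python
from collections import defaultdict

IA32_PQR_ASSOC = 0xC8F  # MSR address as documented in the SDM


def pqr_assoc_value(clos: int, rmid: int = 0) -> int:
    """Compose the 64-bit value: CLOS in bits 63:32, RMID in the low bits."""
    if not 0 <= clos < 2**32:
        raise ValueError("CLOS must fit in 32 bits")
    if not 0 <= rmid < 2**10:
        raise ValueError("RMID is assumed to fit in 10 bits in this sketch")
    return (clos << 32) | rmid


def tasks_by_clos(assignments: dict) -> dict:
    """Invert a task -> CLOS mapping into CLOS -> list of tasks."""
    groups = defaultdict(list)
    for task, clos in assignments.items():
        groups[clos].append(task)
    return dict(groups)


# Hypothetical workloads: several tasks may share one CLOS tag.
clos_of_task = {"database": 1, "web-frontend": 1, "batch-transcode": 2}

groups = tasks_by_clos(clos_of_task)
for clos, tasks in sorted(groups.items()):
    value = pqr_assoc_value(clos)
    print(f"CLOS {clos}: {tasks} -> write {value:#x} to MSR {IA32_PQR_ASSOC:#x}")
```

Keeping the task-to-CLOS mapping separate from the per-CLOS cache limits means a workload's priority can be changed by retagging it, without reprogramming any bitmask.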

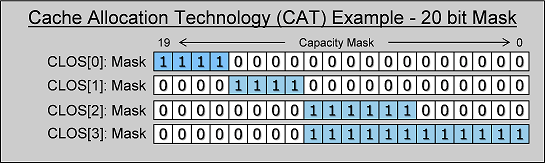

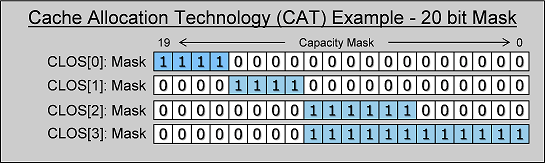

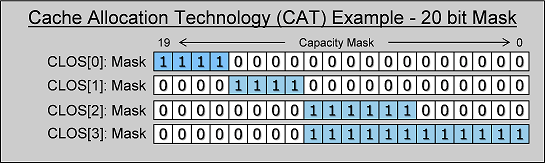

CAT also defines capacity bitmasks (CBMs) per CLOS, which control how much cache threads in a CLOS can allocate. The values within the CBMs indicate the relative amount of cache available, and the degree of overlap or isolation. As an example, in the figure below, CLOS [1] has less cache available than CLOS [3] and could be considered lower priority:

Figure 4. Examples of Capacity Bitmask (CBM) overlap and isolation across multiple Classes of Service (CLOS).
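The relationship between bitmask values, relative cache share, and overlap can be sketched in a few lines. The example below assumes an 8-bit CBM and uses made-up mask values; they are not the values from the figure. It also assumes that the set bits in a CBM must be contiguous, which is the constraint the Software Developer's Manual describes for these processors. All function names are hypothetical.

```python
def is_contiguous(cbm: int) -> bool:
    """True if the set bits of a non-zero mask form one unbroken run."""
    if cbm <= 0:
        return False
    lowest_set = (cbm & -cbm).bit_length() - 1  # index of lowest set bit
    shifted = cbm >> lowest_set                 # strip trailing zeros
    return (shifted & (shifted + 1)) == 0       # run of ones plus 1 is a power of 2


def cache_fraction(cbm: int, cbm_length: int) -> float:
    """Share of the cache that a CLOS with this mask may allocate into."""
    return bin(cbm).count("1") / cbm_length


def overlap(cbm_a: int, cbm_b: int) -> int:
    """Bits shared by two masks; zero means the two CLOS are isolated."""
    return cbm_a & cbm_b


CBM_LENGTH = 8  # assumed mask width for this illustration

# Hypothetical masks: CLOS 0 may use everything, CLOS 1-3 get disjoint slices.
masks = {0: 0xFF, 1: 0x03, 2: 0x0C, 3: 0xF0}

for clos, cbm in masks.items():
    share = cache_fraction(cbm, CBM_LENGTH)
    print(f"CLOS {clos}: mask {cbm:#04x}, contiguous={is_contiguous(cbm)}, share={share:.0%}")

print("CLOS 1 and CLOS 3 isolated:", overlap(masks[1], masks[3]) == 0)
print("CLOS 0 and CLOS 3 overlap bits:", f"{overlap(masks[0], masks[3]):#04x}")
```

In this made-up configuration CLOS 1 can allocate into a quarter of the cache and CLOS 3 into half, so CLOS 1 could be treated as the lower-priority class, while CLOS 0 overlaps all the others.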

These key concepts will be described further and illustrated with examples in subsequent articles in this series.

Conclusion

Cache Allocation Technology (CAT) enables privileged software such as an OS or VMM to control data placement in the last-level cache (LLC), enabling isolation and prioritization of important threads, apps, containers, or VMs, given software support. While an initial version of CAT was introduced on a limited set of communications processors in the Intel Xeon processor E5-2600 v3 family, the CAT feature is significantly enhanced and now available on all SKUs starting with the Intel Xeon processor E5 v4 family.

The next article in the series provides information on usage models for the CAT feature, and information on proof points and software support is provided in subsequent articles.

Notices

No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest forecast, schedule, specifications and roadmaps.

The products and services described may contain defects or errors known as errata which may cause deviations from published specifications. Current characterized errata are available on request.

Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548-4725 or by visiting www.intel.com/design/literature.htm.

Intel, the Intel logo, and Intel Xeon are trademarks of Intel Corporation in the U.S. and/or other countries.

*Other names and brands may be claimed as the property of others.

© 2016 Intel Corporation.