I must admit that I love stories about bugs, strange and unexpected software behaviors, and debugging. They provide comic relief and drama in the supposedly dry world of software development. Some bugs are trivial and embarrassing to talk about once they’ve been resolved. While other bugs turn out to be as elusive as the mythical yeti, and are never found. Finally, there are bugs give us heroic narratives in which a courageous programmer slays the dragon (does debugging) through brilliant inspiration and sheer determination. This is the story of one such bug — a very tough bug that we found in the Linux kernel. We found it by using Wind River Simics* (Simics) to run Simics itself. However, just triggering and reproducing the bug required one network, two separate machines, and at least three processor cores (the 1-2-3 of the title). It was the type of bug that required true concurrency to trigger, not just multitasking on a single processor. Finding the bug then required following a long trail through both user space and Linux kernel code.

Background

In order to run Intel® Architecture (IA) targets quickly on IA-based hosts, Simics makes use of Intel® Virtualization technology (Intel® VT-x). This is known as VMP in Simics, and it requires that you install a Linux kernel module known as VMXMON on the host. VMXMON provides the kernel-level hardware access needed to use Intel VT-x to accelerate the execution of code in the user-level Simics process.

The story of this bug begins with a one-line change to VMXMON. The change was introduced to fix a previous problem. The fix was well-vetted, correct, and necessary. In testing before deployment, we found that the new version of VMXMON not only fixed the previous problem, but it was absolutely solid.

The bug hits

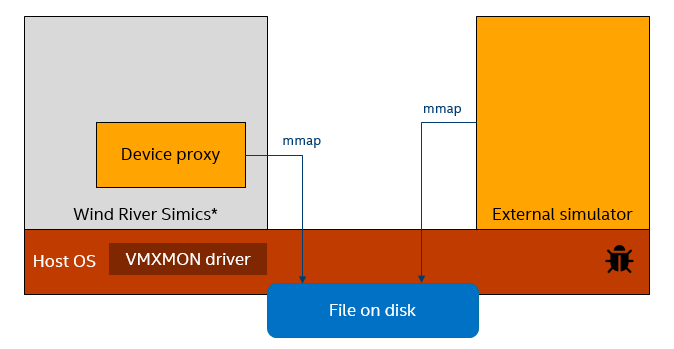

However, when we deployed the new version of VMXMON in a setup in which Simics was integrated with another simulator, we started to see failures. The combination of the new, modified, VMXMON, the same host kernel as before, and the same external simulator in the same version as before — this gave rise to an error that had not occurred before. Something in the new VMXMON had revealed a new bug — which was considered very surprising given the nature of the change. The setup that caused the issue was something like this (but not quite, as we were soon to find out):

Simics and the external simulator communicate over a file on the host. This file is opened using mmap in both simulators. When we used the newer VMXMON, we began seeing regular crashes with certain types of simulator workloads. The error was the same each time: The host Linux operating system stopped on an assert in the network file system (NFS) driver. The assert stated that a virtual memory page was considered both clean and dirty at the same time and, thus, the state of the file system was fundamentally broken, and there was nothing else to do except to assert.

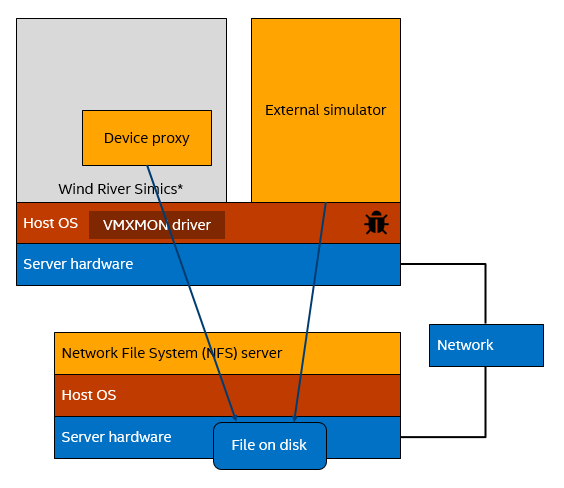

Thus, the test running on Simics and the other simulator ended up crashing the host machine, which is not a desired behavior. That the assert came from NFS made it clear that the setup was more complicated than what we initially thought. In actuality, the file was stored on an NFS file server and not locally. When the whole setup was local as shown above, the bug actually did not hit. We needed this setup to trigger it, adding a network file server:

The kernel assert provided a stack trace showing where the inconsistent state was detected, and the assert triggered. However, this obviously had little to do with the root cause of the issue. The real problem was to find the code that created the inconsistent state in the first place. Clearly, this was a perfect case for Simics reverse debugging, since it involved going back from the point at which the error was detected, to the point at which the state was changed. Being able to work backwards from an error is a tremendous advantage in identifying and resolving complicated bugs.

Reproducing the bug

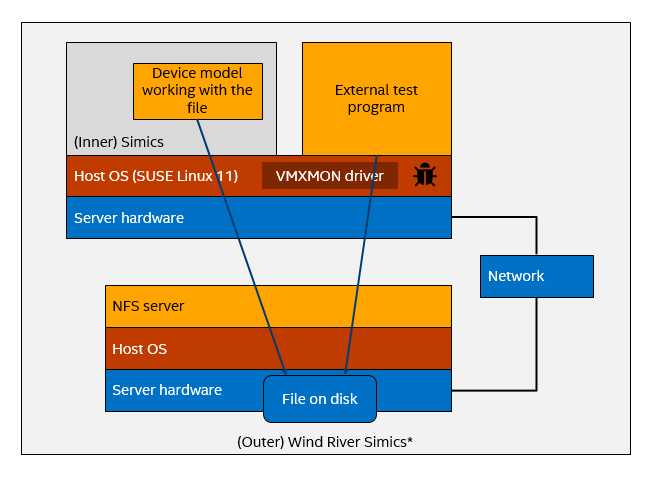

To get the bug up and running on Simics for analysis, we first simplified the setup. This helped us avoid having to include the rather complex external simulator in the setup. Fortunately, reproducing the bug turned out to be rather easy. The key was to have Simics and an external program share a file located on an NFS server. Working on the simplified reproduction setup provided some additional insights on the necessary preconditions for the bug:

- The NFS server had to be on a remote machine

- There had to be changes happening to the shared file, along with sync requests to make sure changes were written to disk and communicated to the other process

- The host Linux had to be a certain version. It turned out that the bug did not trigger on recent Linux kernel versions, but it did trigger when running on SUSE Linux Enterprise Server 11* (SUSE 11), based on a 2.6-series Linux kernel, as used on the test servers

This setup reliably reproduced the same kernel stack trace as the original bug. The same assert triggered on the same condition, and so we brought it into Simics for debugging. The Simics setup looked like shown below, with two machines on a network inside a single Simics session. Note that there is a Simics running inside of Simics, alongside the test program needed to force the error to appear. Note also that the NFS server is running on the second simulated machine.

When re-running the setup in Simics, we found that there was yet another important factor needed to trigger the bug: The machine running Simics and the external simulator had to have at least two processor cores. Without true concurrency between the software threads involved, the bug would not trigger. This is an example of a bug that would not trigger and be possible to (reverse) debug using the common strategy of serializing the execution of a multithreaded program on a single core. Such serialization is what most reverse debuggers do, since replaying the execution of a concurrent workload is hard to do deterministically unless you have a full-system simulator. For more information on ways to do reverse debugging, go to http://jakob.engbloms.se/archives/1564.

Triggering the bug in Simics was easy, and took just a few runs. In each run, the process was to:

- Take a Simics checkpoint before the run started.

- Set a bookmark to enable reverse execution.

- Run the test until the kernel crashed— which happened pretty quickly.

Analysis

At this point, the Simics debugger was attached to allow analysis of a crashed system. Debugging this on a physical machine would have been virtually impossible, since the kernel crashed as a result of the bug. A hardware debugger attached to the server might have been useful if the machine had supported connecting such a device. However, even with a debug port, that kind of setup would not be able to reverse-debug back to the root cause of the issue.

Once the Simics debugger was attached and inspecting the state of the machine, the kernel data involved in the crash turned out to be the metadata for memory pages maintained by the NFS file system and the kernel. There are two sets of flags for each page. The Linux kernel itself maintains a set of flags for all pages, used among other things to track if a page is dirty, or being written back to disk. In addition, when using NFS, NFS maintains its own flags including a separate NFS “clean/dirty” flag. In the problem case, the NFS flag was set to clean but the kernel flags indicated a dirty page. The assert triggered when the kernel detected this clean/dirty inconsistency.

To find the root cause of the change, it was a simple matter to:

- Set a breakpoint on the NFS flag for the page.

- Reverse the execution to find the last writer.

What this revealed was a race between threads in the Linux kernel.

The NFS clean flag was being set from one kernel thread of execution, as part of completing an NFS write-back of pages. The thread would clear the kernel’s dirty flag on the page, and set the write-back flag. Once the write-back had completed, the thread set the write-back flag to NFS clean and cleared the write-back flag. The time it took for the write-back to complete left a window open, in which a different thread could modify the metadata and create an inconsistency. That’s what happened here.

At the same time, running within the context of an ioctl call from a user thread, the VMXMON driver was marking pages as dirty, with the wrinkle that the pages were also locked — this caused the kernel code to take an unusual path. Finally, a different kernel thread was looking for dirty pages to write back. This thread found a page that NFS had marked clean, pending the completion of a write-back, but which VMXMON had marked as dirty in the meantime. Having found such a page, it triggered the assert that crashed the kernel.

If we take a step back to consider what happened here, we see that the bug was a race involving three different concurrent threads, and some really unlucky timing, coupled with an unusual combination of mmap and page locking. This is what happens in software, unfortunately: Software components that are robust and proven in use can still fail due to unforeseen or novel circumstances.

SUSE was notified about the issue, and created a patch for the specific, older version of Linux being used. In newer Linux kernels, this bug does not occur. Newer Linux kernel code has undergone extensive changes, and that appears to avoid the race.

Conclusions

If we look at this bug as a specimen, it is clearly not a simple “comic relief” bug. Rather, it was clearly a crippling bug for what we were trying to do, and it was time for some serious debugging. It was an heroic quest, tracking the dragon bug through the badlands of the kernel and the long trails of the network. In the end, the dragon was found, confronted, and slain.

When doing this, having the right tool was critical, and we were fortunate to be able to leverage Simics. Two properties of Simics were indispensable: 1) A debugger that allowed debugging a crashed OS. 2) Being able to repeat and reverse the execution of an entire system that contained multiple machines, with multiple processor cores, in a network and complete software stacks.

Finding and fixing this bug is a good example of the unique debug power of Simics full-system virtual platforms and tools. It’s also an example of how critical it is to simulate actual hardware concurrency in order to reveal bugs. Some bugs just do not trigger if tasks are forced to multitask on a single processor.