Introduction

Digitization continues to expand, from on-premises and cloud data centers to connected endpoints at the edge. International Data Corporation (IDC) predicts that by 2025, the global datasphere will grow to 175 zettabytes [1]. According to the global research and advisory firm Gartner [2], only around 10 percent of data is created and processed outside centralized data centers today. By 2025, about 75 percent of data will need analysis and action at the edge.

In healthcare, a growing number of providers recognize the need for a strong cloud-to-edge strategy. Here, cloud refers to traditional computing data centers, whether their hardware is off-premises or on-premises. Edge refers to infrastructure such as patient monitoring devices, workstations, and other devices that generate data away from the main data center.

According to IDC, 48 percent of healthcare providers already use edge computing. This number is likely to grow as providers shift to devices that can measure, store, and process data before uploading it to a centralized cloud. As Dr. S. Rab, chief information officer of Rush University Medical Center, points out [3], “the key benefit of edge computing is the ability of devices to compute, process, and analyze data with the same level of quality as data analyzed in the cloud but without latency.”

With these facts in mind, Intel’s healthcare team adapted the Intel® Edge Insights for Industrial (Intel® EII) [4] software stack. The stack was originally created for the industrial segment, but its stringent requirements for uptime, redundancy, latency, and security make it well suited for applications in the healthcare domain.

Intel EII is a prevalidated, ready-to-deploy software reference design that takes advantage of modern CPU microarchitecture. It helps original equipment manufacturers (OEMs), device manufacturers, and solution providers integrate data from sensor networks, operational sources, external providers, and systems more rapidly.

Intel® Edge Insights for Industrial (Intel® EII)

The Intel EII software package was created for industrial use cases and is optimized for platforms based on Intel architecture. Because it is built in a Docker* container environment, it is operating system-agnostic. As shown in Figure 1, you can run the modular Intel EII software stack on a single machine or across multiple machines. Intel EII lets machines communicate across different protocols and operating systems, which simplifies data ingestion, analysis, storage, and management.

Intel EII supports multiple edge-critical Intel® hardware components such as CPUs, graphics accelerators [5], and Intel® Movidius™ Vision Processing Units (VPUs) [6]. Its modular architecture gives OEMs, solution integrators and providers, and independent software vendors (ISVs) the flexibility to choose which modules, features, and capabilities to include and extend in customized solutions. As a result, solutions can get to market faster, which speeds up customer deployments and lets each solution make the best use of its hardware.

Figure 1. Intel Edge Insights for Industrial (Intel EII) architecture

Intel EII also protects against unauthorized data access with a two-stage process that relies on hardware-based security standards to protect certificates and configuration secrets. To develop and optimize AI and computer vision applications, you can use Intel EII together with the Intel® Distribution of OpenVINO™ toolkit [7], which maximizes performance and extends workloads across Intel® hardware, including accelerators.

Figure 2. An example of modified Intel EII architecture for healthcare deployments

As shown in Figure 1 and Figure 2, the Intel EII architecture consists of multiple modules such as time-series data ingestion, video ingestion, image store, analytics, and algorithms. At the heart of all modules is the Intel EII central data distribution bus, which uses the ZeroMQ messaging library. You can choose one, many, or none of these modules; the leanest way to use Intel EII is to take only the message bus and add your own custom modules to it.

For healthcare deployments, the time-series module can receive data from devices such as EKG machines, patient monitors, temperature sensors, and oximeters. Similarly, the video ingestion module can receive live data from endoscopy, fluoroscopy, and ultrasound machines, hospital room cameras, and so on. The image store module can receive data from a picture archiving and communication system (PACS) [8], a vendor neutral archive (VNA) [9], or other data centers where medical images are stored. The modules communicate with the data bus in parallel while feeding data to the analytics and algorithm module for interpretation and insight. The results of the analytics and algorithm module can then be published on the data bus for any existing or new module to use, or sent directly to a display device. The Intel EII modules can all run on the same machine or on different machines.
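The publish/subscribe pattern behind the data bus can be illustrated with a small in-process sketch. This is not the Intel EII message bus API: the `DataBus` class and the handler functions are hypothetical, and the real bus uses ZeroMQ to move messages between containers and machines. The sketch only shows the flow described above: an ingestion module publishes a frame with metadata on a topic, an analytics module subscribes to that topic and republishes its results, and a display module consumes the results.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

# Hypothetical in-process stand-in for the Intel EII data bus (not the EII API).
Handler = Callable[[Dict[str, Any], Any], None]


class DataBus:
    """Routes (metadata, payload) messages from publishers to topic subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, metadata: Dict[str, Any], payload: Any) -> None:
        # Deliver to every subscriber of this topic; unsubscribed topics are ignored.
        for handler in self._subscribers[topic]:
            handler(metadata, payload)


bus = DataBus()
displayed: List[Dict[str, Any]] = []


def analytics(metadata: Dict[str, Any], frame: Any) -> None:
    # Analytics module: interpret the data, then publish results for other modules.
    result = dict(metadata, mean_value=sum(frame) / len(frame))
    bus.publish("camera1_stream_results", result, frame)


def display(metadata: Dict[str, Any], frame: Any) -> None:
    # Display module: consume the analytics results.
    displayed.append(metadata)


bus.subscribe("camera1_stream", analytics)
bus.subscribe("camera1_stream_results", display)

# Ingestion module: publish a toy "frame" (a list of pixel values) with metadata.
bus.publish("camera1_stream", {"frame_id": 1}, [10, 20, 30])
print(displayed)  # [{'frame_id': 1, 'mean_value': 20.0}]
```

Each module knows only topic names, not the other modules, which is what makes it possible to add or replace modules without changing the rest of the pipeline.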

Intel EII provides a validated reference software architecture that OEMs, solution providers, and ISVs can adopt, modify, and deploy. For both the time-series and video ingestion modules, the Intel EII stack comes with sample code that can be used for testing. Similarly, the analytics and algorithm modules come with the Intel Distribution of OpenVINO toolkit, which can run Intel-optimized AI models on the data coming from other modules.

Currently, Intel EII is validated for the industrial market. However, the same stack can be easily modified and used in the healthcare domain.

Demonstrating Intel EII in Healthcare

MICCAI 2017 Robotic Instrument Segmentation

This sample application demonstrates how developers can use Intel EII to build custom parts of a solution for healthcare use cases. The example, from MICCAI 2017, performs semantic segmentation to identify the parts of robotic surgical instruments within a video frame. The identified instrument parts are then highlighted in the output, each in a different color. The code in this sample is based on the winning solution by Alexey Shvets, Alexander Rakhlin, Alexandr A. Kalinin, and Vladimir Iglovikov in the MICCAI 2017 Robotic Instrument Segmentation Challenge [10]. The model is modified from the original model on GitHub [11], which is available under an MIT license.

This sample implementation demonstrates the use of the video ingestion module, the data bus, and the analytics and algorithm module of the Intel EII stack, shown in Figure 1.

Get Started with Intel EII

- Download the Edge Insights for Industrial package.

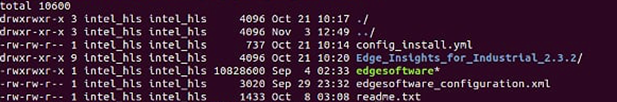

Refer to the Get Started Guide. This demonstration uses the Intel EII 2.3.2 package. - The Intel EII installation package appears as shown in Figure 3.

Figure 3. Intel EII installation package

3. After installing the Intel EII package, access the Docker modules in the following directory.

$<INSTALLATION_PATH>/edge_insights_industrial/Edge_Insights_for_Industrial_2.3.2/IEdgeInsights

Figure 4. Snapshot of the Intel EII directory with the installed Docker module

Robotic Surgical Instrument Segmentation Demonstration

This section provides a step-by-step guide on how to use Intel EII as part of a solution to a healthcare use case. The application uses the VideoIngestion, VideoAnalytics, and Visualizer services that are provided in the Intel EII software package.

The VideoIngestion module packages the video ingestion service into a Docker container. It uses GStreamer and OpenCV to provide a preconfigured ingestion pipeline that you can modify for different camera types and preprocessing algorithms. The VideoIngestion container publishes the video data, which consists of metadata and frames, to the Intel EII data bus.

The VideoAnalytics container uses the Intel EII data bus to subscribe to the input stream published by the VideoIngestion container, and it runs video analytics jobs in one or more instances. The analytics container includes the Intel Distribution of OpenVINO toolkit and the Intel® oneAPI Math Kernel Library (oneMKL) [12]. The Visualizer module displays the processed video.

Use-case-specific parameters are set in the provision configuration file, which is located at the following path.

$<INSTALLATION_PATH>/edge_insights_industrial/Edge_Insights_for_Industrial_2.3.2/IEdgeInsights/build/provision/config/eis_config.json

The default Intel EII provision configuration is for the printed circuit board (PCB) classification demonstration. This file contains the configuration for each of the following containers:

Video Ingestion

Video ingestion takes its input from a video file inside the VideoIngestion module directory, at the following installation path.

$<INSTALLATION_PATH>/edge_insights_industrial/Edge_Insights_for_Industrial_2.3.2/IEdgeInsights/VideoIngestion/test_videos/short_source.mp4

In the Video Ingestion section, change the ingestor pipeline to run the robotic instrument segmentation video.

Original:

```json
"ingestor": {
    "type": "opencv",
    "pipeline": "./test_videos/pcb_d2000.mp4",
    "loop_video": "true",
    "queue_size": 10,
    "poll_interval": 0.2
},
```

Updated version after making changes:

```json
"ingestor": {
    "loop_video": true,
    "pipeline": "./test_videos/short_source.mp4",
    "poll_interval": 0.2,
    "queue_size": 10,
    "type": "opencv"
},
```

This configuration tells Intel EII to use the OpenCV library to read the video short_source.mp4 and to loop the video for continuous processing.

Also, in the original PCB configuration file, the video stream input is preprocessed with the Python script pcb/pcb_filter.py. This script is a user-defined function (UDF) that uses the parameters n_left_px, n_right_px, n_total_px, scale_ratio, and training_mode to select input frames. The robotic surgical instrument segmentation demonstration does not need this extra preprocessing, so a placeholder UDF named dummy is used instead. Alternatively, you can remove all the elements from the udfs list and give VideoIngestion an empty UDF list.

Original:

```json
"udfs": [{
    "name": "pcb.pcb_filter",
    "type": "python",
    "training_mode": "false",
    "n_total_px": 300000,
    "n_left_px": 1000,
    "n_right_px": 1000
}]
```

Updated version after making changes:

```json
"udfs": [
    {
        "name": "dummy",
        "type": "python"
    }
]
```

If the input video frames need additional visual analytics, you can write a UDF for this purpose. For more information on creating UDFs, refer to the Video Analytics section.
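If you prefer to script the two Video Ingestion changes above instead of editing eis_config.json by hand, a minimal Python sketch could look like the following. It assumes that the VideoIngestion settings sit under a top-level "/VideoIngestion/config" key, by analogy with the "/VideoAnalytics/config" key shown in this article; check your file before relying on that. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict, List, Optional

# Assumption: VideoIngestion settings live under this top-level key, by analogy
# with the "/VideoAnalytics/config" key shown in this article. Verify it first.
VI_KEY = "/VideoIngestion/config"


def patch_video_ingestion(config: Dict[str, Any],
                          video: str = "./test_videos/short_source.mp4",
                          udfs: Optional[List[Dict[str, Any]]] = None) -> Dict[str, Any]:
    """Apply the segmentation demo's ingestor and UDF changes in place."""
    vi_config = config[VI_KEY]
    # Same ingestor settings as the updated version shown above
    vi_config["ingestor"] = {
        "loop_video": True,
        "pipeline": video,
        "poll_interval": 0.2,
        "queue_size": 10,
        "type": "opencv",
    }
    # Replace pcb.pcb_filter with the placeholder "dummy" UDF by default;
    # pass udfs=[] to use an empty UDF list instead.
    vi_config["udfs"] = [{"name": "dummy", "type": "python"}] if udfs is None else udfs
    return config


def patch_file(path: Path, **kwargs: Any) -> None:
    """Load eis_config.json, patch it, and write it back (reformats the file)."""
    config = json.loads(path.read_text())
    patch_video_ingestion(config, **kwargs)
    path.write_text(json.dumps(config, indent=4) + "\n")
```

For example, calling patch_file(Path("build/provision/config/eis_config.json")) from the IEdgeInsights directory would apply both changes, and passing udfs=[] selects the empty-list alternative. Keep a backup copy, because json.dumps rewrites the formatting of the whole file.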

Video Analytics

The Intel EII software stack uses UDFs to let you perform data preprocessing and postprocessing and to deploy data analytics or deep learning models. Intel EII supports both C++ and Python UDFs. A UDF can modify or drop a frame, or generate metadata from it. For details on writing a UDF, refer to the guide included in the Intel EII software package at the following installation path.

$<INSTALLATION_PATH>/edge_insights_industrial/Edge_Insights_for_Industrial_2.3.2/IEdgeInsights/common/video/udfs/HOWTO_GUIDE_FOR_WRITING_UDF.md
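To make the drop and metadata behavior concrete, here is a hypothetical Python UDF written against the same interface as the dummy UDF shown later in this section: a class named Udf, as in the Intel EII sample UDFs, whose process(frame, metadata) method returns a tuple of (drop the frame?, updated frame or None, metadata). The brightness check and the min_brightness parameter are illustrative assumptions, not part of Intel EII. Judging by how the segmentation UDF in this section receives model_xml, model_bin, and device from its configuration entry, a "min_brightness" key in the UDF's configuration would presumably reach the constructor the same way.

```python
import logging

import numpy as np


class Udf:
    """Hypothetical UDF: drops dark frames and tags the rest with metadata."""

    def __init__(self, min_brightness=20.0):
        self.log = logging.getLogger("BRIGHTNESS_FILTER")
        self.min_brightness = min_brightness

    def process(self, frame, metadata):
        brightness = float(np.mean(frame))
        if brightness < self.min_brightness:
            # Returning True as the first element drops this frame
            self.log.debug("Dropping frame with mean brightness %.1f", brightness)
            return True, None, None
        # Frame is unchanged (None), but new metadata is attached
        metadata["mean_brightness"] = round(brightness, 1)
        return False, None, metadata


udf = Udf(min_brightness=20.0)
print(udf.process(np.zeros((4, 4, 3), dtype=np.uint8), {}))
# (True, None, None)
print(udf.process(np.full((4, 4, 3), 128, dtype=np.uint8), {"frame_id": 7}))
# (False, None, {'frame_id': 7, 'mean_brightness': 128.0})
```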

This demonstration uses the Python UDF API in two parts:

- Use the Intel EII Python API to write the UDF that processes each frame. You can start from the dummy UDF shown here. It has two components: a constructor for initialization and a process() method that handles each ingested frame.

```python
import logging


# Intel EII instantiates the class named Udf in the UDF module
class Udf:
    """Dummy UDF that passes every frame through unchanged."""

    def __init__(self):
        """Constructor
        """
        self.log = logging.getLogger('DUMMY')
        self.log.debug(f"In {__name__}...")

    def process(self, frame, metadata):
        """Process one ingested frame.

        :param frame: frame blob
        :type frame: numpy.ndarray
        :param metadata: frame's metadata
        :type metadata: dict
        :return: (should the frame be dropped, has the frame been updated,
                  new metadata for the frame if any)
        :rtype: (bool, numpy.ndarray, dict)
        """
        self.log.debug(f"In process() method...")
        return False, None, None
```

The following imports are required for the segmentation UDF, which is a modified version of the dummy UDF.

```python
import os
import logging
import cv2
import numpy as np
import json
import threading
from openvino.inference_engine import IECore
from distutils.util import strtobool
import time
import sys
import copy
```
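The imports include distutils.util.strtobool, which ties the UDF to older Python versions: the distutils package was deprecated by PEP 632 and removed in Python 3.12. If you port the UDF to a newer Python, a small stand-in such as the following hypothetical helper could parse PROFILING_MODE instead. It accepts the same spellings that strtobool accepts, returns a bool rather than 1 or 0, and treats a missing variable as "false", which is an assumption of this sketch.

```python
import os

# Spellings accepted by distutils.util.strtobool (case-insensitive)
_TRUE = {"y", "yes", "t", "true", "on", "1"}
_FALSE = {"n", "no", "f", "false", "off", "0"}


def str_to_bool(value: str) -> bool:
    """Parse a truthy/falsy string like distutils.util.strtobool, returning a bool."""
    normalized = value.lower()
    if normalized in _TRUE:
        return True
    if normalized in _FALSE:
        return False
    raise ValueError(f"invalid truth value {value!r}")


# Treat a missing PROFILING_MODE variable as "false" instead of raising KeyError
profiling = str_to_bool(os.environ.get("PROFILING_MODE", "false"))
```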

The following script shows the changes to the frame-processing workflow: the constructor loads the OpenVINO model, and process() crops each frame, runs inference, and overlays the predicted segmentation mask.

```python
class Udf:
    """Robotic surgical instrument segmentation UDF."""

    def __init__(self, model_xml, model_bin, device):
        self.log = logging.getLogger('Robotics Surgery Tool Segmentation')
        self.model_xml = model_xml
        self.model_bin = model_bin
        self.device = device

        assert os.path.exists(self.model_xml), \
            'Model xml file missing: {}'.format(self.model_xml)
        assert os.path.exists(self.model_bin), \
            'Model bin file missing: {}'.format(self.model_bin)

        # Load OpenVINO model
        self.ie = IECore()
        self.neuralNet = self.ie.read_network(
            model=self.model_xml, weights=self.model_bin)
        if self.neuralNet is None:
            self.log.error("No valid model is given!")
            sys.exit(1)

        self.inputBlob = next(iter(self.neuralNet.input_info))
        self.outputBlob = next(iter(self.neuralNet.outputs))
        self.neuralNet.batch_size = 1

        # Check layer support before loading the network onto the CPU
        if self.device.upper() == "CPU":
            supported_layers = self.ie.query_network(self.neuralNet, "CPU")
            not_supported_layers = [l for l in self.neuralNet.layers.keys()
                                    if l not in supported_layers]
            if not_supported_layers:
                self.log.error("Following layers are not supported by "
                               "the plugin for specified device {}:\n {}".
                               format(self.device, ', '.join(not_supported_layers)))
                sys.exit(1)

        self.executionNet = self.ie.load_network(
            network=self.neuralNet, device_name=self.device.upper())

        # PROFILING_MODE comes from the environment; default to off if unset
        self.profiling = bool(strtobool(os.environ.get('PROFILING_MODE', 'false')))

    # Main segmentation algorithm
    def process(self, frame, metadata):
        """Segments the surgical instruments in the frame and overlays the
        predicted mask on the center crop of the frame.
        """
        if self.profiling is True:
            metadata['ts_rs_segmentation_entry'] = time.time()*1000

        # Center-crop the frame to the size the model expects
        cropHeight, cropWidth = (1024, 1280)
        imgHeight, imgWidth = frame.shape[0], frame.shape[1]
        startH = int((imgHeight - cropHeight) // 2)
        startW = int((imgWidth - cropWidth) // 2)
        frame1 = frame[startH:(startH+cropHeight), startW:(startW+cropWidth), :]
        orig_frame = copy.deepcopy(frame1)

        # Convert from BGR to RGB since the model expects RGB input
        frame1 = frame1[:, :, [2, 1, 0]]

        n, c, h, w = self.neuralNet.input_info[self.inputBlob].input_data.shape
        in_frame = cv2.resize(frame1, (w, h))
        # Scale to [0, 1] and change data layout from HWC to NCHW
        in_frame = np.transpose(in_frame/255.0, [2, 0, 1])
        in_frame = np.expand_dims(in_frame, 0)
        res = self.executionNet.infer(inputs={self.inputBlob: in_frame})

        # Drop the background channel and turn class probabilities into a
        # color mask; assumes the model output matches the 1024x1280 crop
        res = res[self.outputBlob]
        sliced = res[0, 1:, :, :]
        mask_frame = (np.floor(np.transpose(sliced, [1, 2, 0])*255)).astype(np.uint8)
        out_mask_frame = cv2.addWeighted(orig_frame, 1, mask_frame, 0.5, 0)

        # Paste the overlaid crop back into a full-size black frame
        out_frame = np.zeros((imgHeight, imgWidth, 3), dtype=np.uint8)
        out_frame[startH:(startH+cropHeight), startW:(startW+cropWidth), :] = out_mask_frame

        if self.profiling is True:
            metadata['ts_va_classify_exit'] = time.time()*1000
        return False, out_frame, metadata
```

- After the UDF is ready, add it to the Intel EII software stack by changing the configuration and building the UDF into the container. To do this, edit the default VideoAnalytics configuration in the eis_config.json file. You need to rerun provisioning and rebuild the containers for all the changes to take effect.

The following is the default VideoAnalytics module configuration for the PCB classifier demonstration.

```json
"/VideoAnalytics/config": {
    "encoding": {
        "type": "jpeg",
        "level": 95
    },
    "queue_size": 10,
    "max_jobs": 20,
    "max_workers": 4,
    "udfs": [{
        "name": "pcb.pcb_classifier",
        "type": "python",
        "ref_img": "common/udfs/python/pcb/ref/ref.png",
        "ref_config_roi": "common/udfs/python/pcb/ref/roi_2.json",
        "model_xml": "common/udfs/python/pcb/ref/model_2.xml",
        "model_bin": "common/udfs/python/pcb/ref/model_2.bin",
        "device": "CPU"
    }]
},
```

Make the changes shown in the following snippet so that the configuration points to the correct UDF files, model weights, and reference files. For best results, create a new folder (in this example, common/udfs/python/surgery_segmentation) and copy the edited code into it, which makes the changed code and its file names easy to find.

```json
"/VideoAnalytics/config": {
    "encoding": {
        "level": 95,
        "type": "jpeg"
    },
    "max_jobs": 20,
    "max_workers": 4,
    "queue_size": 10,
    "udfs": [
        {
            "device": "CPU",
            "model_bin": "common/udfs/python/surgery_segmentation/ref/surgical_tools_parts.bin",
            "model_xml": "common/udfs/python/surgery_segmentation/ref/surgical_tools_parts.xml",
            "name": "surgery_segmentation.surgery_segmentation",
            "type": "python"
        }
    ]
},
```

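The crop-and-overlay geometry in the segmentation UDF's process() method can be checked in isolation. The following NumPy-only sketch reproduces the center crop to 1024 × 1280 pixels, the conversion of per-class probabilities into a color mask (skipping the background channel at index 0, as res[0, 1:, :, :] does), and the paste back into a full-size black frame. The function names are hypothetical, the 1920 × 1080 frame size in the example is an assumption, and the NumPy blend approximates cv2.addWeighted(crop, 1, mask, 0.5, 0) rather than calling OpenCV. Unlike the UDF, the sketch rejects frames smaller than the crop instead of slicing with negative offsets.

```python
from typing import Tuple

import numpy as np

CROP_H, CROP_W = 1024, 1280  # crop size used by the segmentation UDF


def crop_origin(img_h: int, img_w: int) -> Tuple[int, int]:
    """Top-left corner of a centered CROP_H x CROP_W crop."""
    if img_h < CROP_H or img_w < CROP_W:
        raise ValueError("frame is smaller than the crop size")
    return (img_h - CROP_H) // 2, (img_w - CROP_W) // 2


def overlay(frame: np.ndarray, probs: np.ndarray) -> np.ndarray:
    """Blend probabilities shaped (1, 4, CROP_H, CROP_W) onto the center crop.

    Channel 0 is background and is skipped; the remaining three channels map
    onto the three color channels of the frame.
    """
    start_h, start_w = crop_origin(*frame.shape[:2])
    crop = frame[start_h:start_h + CROP_H, start_w:start_w + CROP_W, :]
    # (3, H, W) -> (H, W, 3), scaled to 0..255 as in the UDF
    mask = np.floor(np.transpose(probs[0, 1:], (1, 2, 0)) * 255).astype(np.uint8)
    # Approximates cv2.addWeighted(crop, 1, mask, 0.5, 0): add, round, saturate
    blended = np.clip(np.rint(crop.astype(np.float32) + 0.5 * mask), 0, 255)
    out = np.zeros_like(frame)
    out[start_h:start_h + CROP_H, start_w:start_w + CROP_W, :] = blended.astype(np.uint8)
    return out


print(crop_origin(1080, 1920))  # (28, 320)
```

For a 1920 × 1080 frame, the crop therefore starts 28 rows down and 320 columns in, and everything outside that window stays black in the output frame.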
Visualizer

The Visualizer Docker module can be used as-is for the robotic surgical instrument segmentation use case. Unlike the PCB classifier example, this demonstration runs the VideoIngestion, VideoAnalytics, and Visualizer containers on the same host, so the containers exchange data in Inter-Process Communication (IPC) mode. Because the default configuration of these three containers assumes that they run on different hosts and communicate in Transmission Control Protocol (TCP) mode, you need to update the corresponding services in the docker-compose.yml file, located at the following installation path.

$<INSTALLATION_PATH>/edge_insights_industrial/Edge_Insights_for_Industrial_2.3.2/IEdgeInsights/build/docker-compose.yml

To use IPC instead of TCP, make the following changes:

- In the ia_video_ingestion service, update the IPC/TCP section as follows:

```yaml
# Use IPC mode (zmq_ipc) when VideoIngestion and VideoAnalytics based
# containers are running on the same host. If they are running on diff
# host, please use TCP mode for communication.
# Eg: Stream cfg for
# IPC: zmq_ipc, <absolute_socket_directory_path>
# TCP: zmq_tcp, <publisher_host>:<publisher_port>
PubTopics: "camera1_stream"
camera1_stream_cfg: "zmq_ipc,${SOCKET_DIR}/"
# Server: "zmq_tcp,127.0.0.1:66013"
```

With these settings, the camera1_stream_cfg entry uses zmq_ipc with the socket directory ${SOCKET_DIR}, and the TCP line Server: "zmq_tcp,127.0.0.1:66013" is commented out. This enables IPC for sending the video stream to the VideoAnalytics service.

If all services run on the same node, make similar updates in the ia_video_analytics and ia_visualizer services. The following are the updated settings for IPC mode.

In ia_video_analytics:

```yaml
# Use IPC mode (zmq_ipc) when VideoIngestion and VideoAnalytics based
# containers are running on the same host. If they are running on diff
# host, please use TCP mode for communication.
# Eg: Stream cfg for
# IPC: zmq_ipc, <absolute_socket_directory_path>
# TCP: zmq_tcp, <publisher_host>:<publisher_port>
SubTopics: "VideoIngestion/camera1_stream"
camera1_stream_cfg: "zmq_ipc,${SOCKET_DIR}/"
PubTopics: "camera1_stream_results"
# camera1_stream_results_cfg: "zmq_tcp,127.0.0.1:65013"
camera1_stream_results_cfg: "zmq_ipc,${SOCKET_DIR}/"
```

In ia_visualizer:

```yaml
# Use IPC mode (zmq_ipc) when VideoIngestion, VideoAnalytics and Visualizer
# based containers are running on the same host. If they are running on diff
# host, please use TCP mode for communication.
# Eg: Stream cfg for
# IPC: zmq_ipc, <absolute_socket_directory_path>
# TCP: zmq_tcp, <publisher_host>:<publisher_port>
# For default video streaming usecase alone
SubTopics: "VideoAnalytics/camera1_stream_results"
# camera1_stream_results_cfg: "zmq_tcp,127.0.0.1:65013"
camera1_stream_results_cfg: "zmq_ipc,${SOCKET_DIR}/"
```
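The comments in these blocks describe two formats for the *_cfg values: zmq_ipc followed by an absolute socket directory, and zmq_tcp followed by host:port. A hypothetical helper such as the one below can sanity-check values before you rebuild the containers. It only parses the format documented in those comments and is not part of Intel EII. Because ${SOCKET_DIR} is substituted by Docker Compose from the environment or the .env file, the sketch also accepts it unexpanded.

```python
from typing import Dict, Union


def parse_stream_cfg(value: str) -> Dict[str, Union[str, int]]:
    """Parse 'zmq_ipc,<socket_dir>' or 'zmq_tcp,<host>:<port>'."""
    mode, _, endpoint = value.partition(",")
    mode, endpoint = mode.strip(), endpoint.strip()
    if mode == "zmq_ipc":
        # Accept absolute paths and unexpanded Compose variables like ${SOCKET_DIR}/
        if not (endpoint.startswith("/") or endpoint.startswith("${")):
            raise ValueError(f"IPC needs an absolute socket directory: {value!r}")
        return {"mode": "ipc", "socket_dir": endpoint}
    if mode == "zmq_tcp":
        host, sep, port_text = endpoint.rpartition(":")
        if not sep or not host or not port_text.isdigit():
            raise ValueError(f"TCP needs <host>:<port>: {value!r}")
        port = int(port_text)
        if not 0 < port <= 65535:
            raise ValueError(f"TCP port out of range: {port}")
        return {"mode": "tcp", "host": host, "port": port}
    raise ValueError(f"unknown mode {mode!r} in {value!r}")


print(parse_stream_cfg("zmq_ipc,${SOCKET_DIR}/"))
# {'mode': 'ipc', 'socket_dir': '${SOCKET_DIR}/'}
print(parse_stream_cfg("zmq_tcp,127.0.0.1:65013"))
# {'mode': 'tcp', 'host': '127.0.0.1', 'port': 65013}
```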

After making these changes, follow these steps to build the containers and start the service.

```sh
$ xhost +
$ cd $EII_INSTALLATION_DIRECTORY/build
$ sudo sg docker -c 'docker-compose up --build -d'
```

For more details, refer to the Intel EII documentation.

Figure 5. Examples from Intel EII ia_video_analytics container output log

Conclusions

This article is intended to give you an example of how to apply Intel EII to healthcare use cases. For more articles like this, follow Beenish Zia on LinkedIn*.

References

1. IDC: The Digitization of the World: From Edge to Core
2. Gartner: What Edge Computing Means for Infrastructure and Operations Leaders
3. HealthTech: Will Edge Computing Transform Healthcare
4. Intel® Edge Insights for Industrial (Intel® EII)
5. Graphics Accelerators
6. Intel® Movidius™ Vision Processing Units (VPUs)
7. Intel® Distribution of OpenVINO™ Toolkit
8. Picture Archiving and Communication System
9. Vendor Neutral Archive
10. MICCAI 2017 Robotic Instrument Segmentation Challenge
11. GitHub
12. Intel® oneAPI Math Kernel Library (oneMKL)