Overview

Intel® Edge Software Device Qualification (Intel® ESDQ) for Intel® Edge AI Box allows customers to run an Intel-provided test suite on the target system, enabling partners to qualify their platforms.

The following information is specific to the Intel® ESDQ for Intel® Edge AI Box package. For documentation on the Intel® ESDQ CLI binary, refer to Intel® Edge Software Device Qualification (Intel® ESDQ) CLI Overview.

Select Configure & Download to download Intel® ESDQ for Intel® Edge AI Box from the Intel® Edge AI Box page and refer to Get Started for the installation steps.

Target System Requirements

- 11th, 12th, or 13th generation Embedded Intel® Core™ processors

- Intel® Core™ Ultra Processors

- 12th generation Intel® Core™ Desktop processors with Intel® Arc™ A380 Graphics

- Intel Atom® Processor X7000 Series (formerly Alder Lake-N)

- Intel® Processor N-series (formerly Alder Lake-N)

- 4th Gen Intel® Xeon® Scalable Processors

- Operating System:

- Ubuntu* Desktop 22.04 (fresh installation)

- Preferred: Ubuntu 22.04.3 LTS (fresh installation) for Core and Core Ultra platforms

- Preferred: Ubuntu 22.04.4 LTS Server (fresh installation) for the Xeon platform

- At least 80 GB of disk space

- At least 16 GB of memory

- Direct Internet access

Ensure you have sudo access to the system and a stable Internet connection.

How It Works

The AI Box Test Module in the Intel® Edge AI Box interacts with the Intel® ESDQ CLI through a common test module interface (TMI) layer, which is part of the Intel® ESDQ binary.

The selected components from the download options will be validated via the automated test suite.

Test results will be stored in the output folder. Intel® ESDQ generates a complete test report in HTML format and detailed logs packaged as one ZIP file, which you can email to the Intel® ESDQ support team at edge.software.device.qualification@intel.com.
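When you collect results to send to the support team, a small helper can find the most recently modified ZIP archive. This is a minimal sketch that assumes bash and GNU find. The location of the output folder is not given here, so the folder is passed as an argument, and latest_report is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest .zip file under the given output folder.
# Usage: latest_report /path/to/esdq/output
latest_report() {
  local dir="$1"
  # GNU find: %T@ is the modification time in seconds since the epoch, %p is the path.
  # Sort numerically by time, keep the newest entry, then drop the timestamp field.
  find "$dir" -type f -name '*.zip' -printf '%T@ %p\n' \
    | sort -n \
    | tail -n 1 \
    | cut -d' ' -f2-
}
```

If the folder contains no ZIP files, the function prints nothing.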

AI Box Test Module

The AI Box test module is the validation framework for Intel® Edge AI Box. This module validates the installation of software packages and measures the performance of the platform using the following benchmarks:

- OpenVINO™ based neural network model benchmarks

- Media performance benchmark

- Video pipeline benchmark

- Memory benchmarks

- GPU AI frequency measurement

Note: On the Xeon platform, all of the above benchmarks run on the CPU (ensure that CPU utilization is between 25% and 75%). On other platforms, the benchmarks run on the iGPU and, if present, the dGPU.

The following telemetry data will be collected when running benchmarks:

- CPU Frequency

- CPU Utilization

- Memory Utilization

- GPU Frequency

- GPU EU Utilization

- GPU VDBox Utilization

- GPU Power

- Package Power

OpenVINO Benchmark

The following neural network models are benchmarked using the OpenVINO™ benchmark tool. Both latency and throughput are measured. Benchmark results are included in the ESDQ report.

- resnet-50-tf

- ssdlite-mobilenet-v2

- yolo-v3-tiny-tf

- yolo-v3-tf

- yolo-v4-tiny-tf

- yolo-v4-tf

- efficientnet-b0

- yolo-v5 n/s/m

- yolo-v8 n/s/m

- mobilenet_v2

Media Performance Benchmark

Media Performance Benchmark contains the following benchmarks:

- Media Encode Benchmark: Encodes video streams for each combination of video codec (H264, H265) and resolution (1080p, 4K), using random noise as the source. Measures the maximum number of video streams that can be encoded at 30 FPS.

- Media Decode Benchmark: Decodes video streams for each combination of video codec (H264, H265) and resolution (1080p, 4K). Measures the maximum number of video streams that can be decoded at 30 FPS.

- Media Decode + Compose Benchmark: Decodes video streams for each combination of video codec (H264, H265) and resolution (1080p, 4K) and composes them into a video wall. Measures the maximum number of video streams that can be decoded and composed into the video wall at 30 FPS.
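One way to picture a "maximum number of streams at 30 FPS" measurement is as a search over stream counts. The sketch below is only an illustration and is not the ESDQ implementation. It assumes that the achieved frame rate never increases as streams are added. measure_fps stands for a hypothetical command that runs N streams and prints the achieved frame rate as an integer.

```shell
#!/usr/bin/env bash
# Illustrative binary search for the largest stream count that still meets a target FPS.
# Assumes FPS is non-increasing in the number of streams.
# Usage: max_streams TARGET_FPS UPPER_BOUND MEASURE_CMD
#   MEASURE_CMD is called as "MEASURE_CMD N" and must print an integer FPS.
max_streams() {
  local target="$1" hi="$2" measure="$3"
  local lo=0 mid fps
  while [ "$lo" -lt "$hi" ]; do
    mid=$(( (lo + hi + 1) / 2 ))   # round up so the loop always makes progress
    fps=$("$measure" "$mid")
    if [ "$fps" -ge "$target" ]; then
      lo=$mid                      # mid streams meet the target; try more
    else
      hi=$(( mid - 1 ))            # mid streams are too many
    fi
  done
  echo "$lo"                       # 0 means even one stream misses the target
}
```

For example, with a stand-in defined as fake_fps() { echo $(( 240 / $1 )); }, the call max_streams 30 32 fake_fps prints 8.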

We recommend connecting two 4K monitors to the machine before running this benchmark. The composed video from the Media Decode + Compose benchmark is then displayed on each monitor.

Video Pipeline Benchmark

Video Pipeline Benchmarks include the following domain-specific proxy pipeline benchmarks:

- Smart NVR Pipeline: Measures the maximum number of AI-enabled video streams, out of a fixed number of input streams, that the platform can support while keeping the output frame rate equal to the input frame rate (20 FPS).

- Headed Visual AI Pipeline: Measures the maximum number of channels the platform can run while keeping the output frame rate equal to the input frame rate (30 FPS).

- VSaaS Gateway with Storage and AI Proxy Pipeline: Measures the maximum number of channels supported for the AI VSaaS Gateway pipeline while keeping the output frame rate equal to the input frame rate (30 FPS).

We recommend connecting a 4K monitor to the machine before running the Smart NVR Pipeline and Headed Visual AI Pipeline benchmarks. The composed video is displayed on the monitor.

Memory Benchmark

The memory benchmark measures the sustained memory bandwidth based on STREAM.
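For background, upstream STREAM computes each bandwidth figure from two values: the number of bytes a kernel is counted as moving, and the best (shortest) time over repeated trials. Take the Triad kernel, a[i] = b[i] + q * c[i], over arrays of N elements using the default 8-byte double type. STREAM counts two reads and one write per element. Copy and Scale count two arrays per element; Add and Triad count three. This section does not say which STREAM kernels the AI Box report shows, so treat the formula below as background on STREAM itself. The values of N and t_min in the example are illustrative, not measured results:

$$
B_{\mathrm{Triad}} = \frac{3 \times 8\,\mathrm{B} \times N}{t_{\min}}, \qquad
N = 10^{8},\; t_{\min} = 0.1\,\mathrm{s} \;\Rightarrow\; B_{\mathrm{Triad}} = \frac{2.4 \times 10^{9}\,\mathrm{B}}{0.1\,\mathrm{s}} = 24\,\mathrm{GB/s}
$$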

AI Frequency Measurement

The AI frequency benchmark was designed to stress the GPU for an extended period. The benchmark records the GPU frequency while it runs an inference workload using the OpenVINO™ Benchmark Tool.

Get Started

The AI Box Test Module and the Intel® ESDQ CLI tool are installed as part of the Intel® Edge AI Box development package. As a result, many of the steps are the same as those in the Get Started section of Intel® Edge AI Box.

NOTE: The screenshots may show a package version number different from the current release. See the Release Notes for information on the current release.

Prerequisite

- Perform a clean installation of Ubuntu 22.04 or higher on the target system. Ubuntu 22.04.3 LTS is preferred for Core and Core Ultra platforms, and Ubuntu 22.04.4 LTS Server is preferred for the Xeon platform.

Note for Xeon platform: AI Box expects a CPU-only configuration on the Xeon platform, and all benchmarks run on the CPU. If your system has a graphics card, remove it before installing the AI Box package.

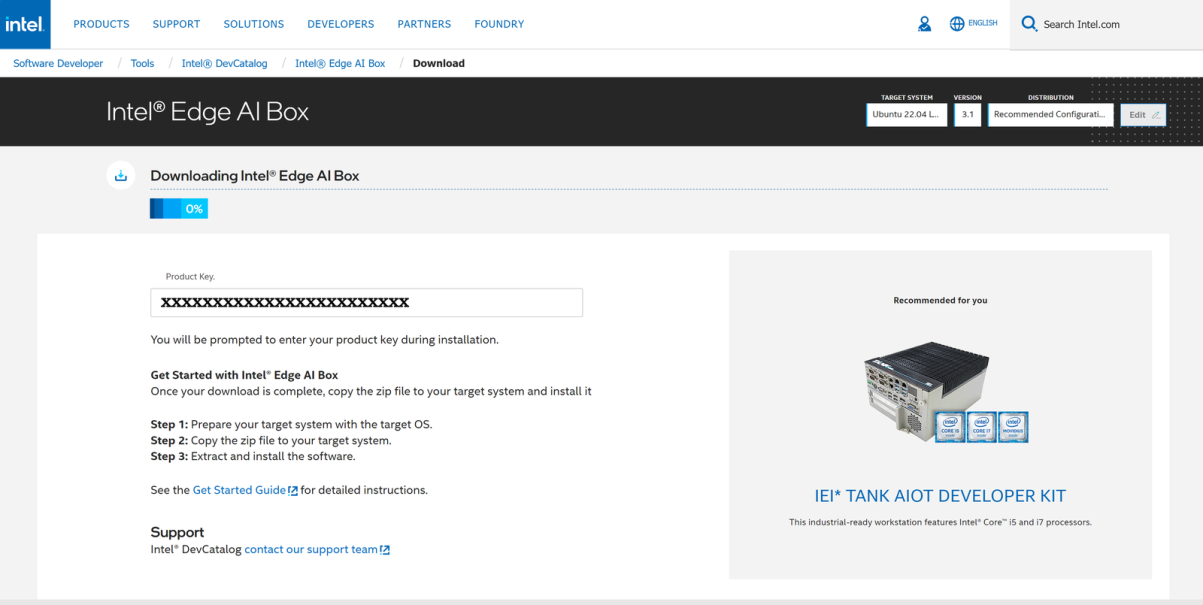

Download and Install Intel® ESDQ for Intel® Edge AI Box

- Select Configure & Download to download the Intel® Edge AI Box package.

- Transfer the downloaded package to the target Ubuntu* system and unzip:

unzip intel_edge_ai_box.zip

NOTE: Install Intel® Edge AI Box using the same user account that was created during the Ubuntu installation.

- Go to the intel_edge_ai_box/ directory:

cd intel_edge_ai_box

- Change permission of the executable edgesoftware file:

chmod 755 edgesoftware

- For Atom and Core platforms, if your OS kernel is 6.5.0-35-generic, check whether the output of echo $LC_IDENTIFICATION ends in UTF-8. If it does not, run the following commands:

sudo apt-get install locales -y

sudo locale-gen en_US.UTF-8

sudo update-locale LANG=en_US.UTF-8 LC_CTYPE=en_US.UTF-8 LC_IDENTIFICATION=en_US.UTF-8

sudo reboot

- Install the Intel® Edge AI Box package:

./edgesoftware install

- When prompted, enter the Product Key. You can enter the Product Key mentioned in the email from Intel confirming your download (or the Product Key you copied in Step 2).

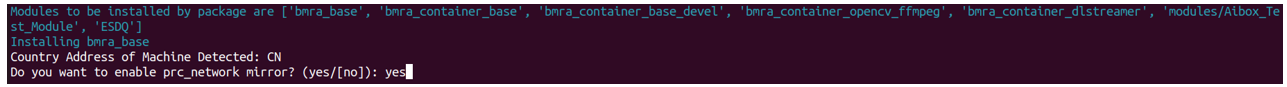

Note for People’s Republic of China (PRC) Network:

- If you are connecting from the PRC network, the following prompt appears before the modules are downloaded.

- Type No to use the default settings, or Yes to enter a local mirror URL for the pip and apt package managers.

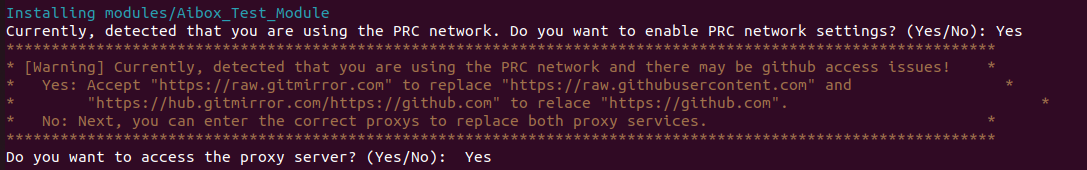

- A similar prompt appears during the BMRA base installation:

- Type Yes to avoid download failures on the PRC network. Some follow-up prompts will then appear.

- Type No to use the default GitHub mirror and Docker Hub mirror.

If you want to enable RDM and XPU Manager on the PRC network, type Yes and enter an available Docker Hub mirror.

- You can then choose whether to install XPU Manager. If you type Yes, make sure you have replaced the default Docker Hub mirror in the previous step.

- When AIBox Samples are being installed, the following prompt appears. Type Yes to use the default GitHub mirror.

- For Atom and Core platforms, the installer prompts you to reboot the system if your kernel is not 6.5.0-35-generic. After rebooting, repeat the $LC_IDENTIFICATION check described above, then run ./edgesoftware install again.

- You can choose whether to enable the RDM (Remote Device Management) feature. If you type Yes, enter the ThingsBoard server IP, port, and access token. To enable RDM on the PRC network, make sure you have replaced the default Docker Hub mirror in the previous step.

- When prompted for the BECOME password, enter your Linux* account password.

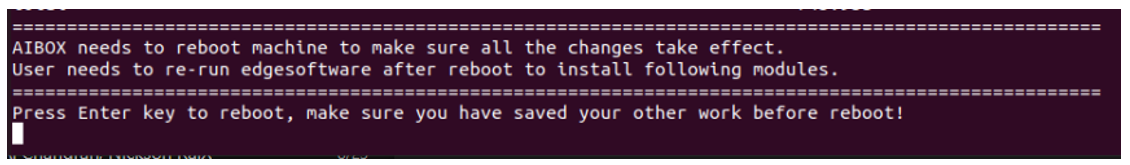

- When prompted to reboot the machine, press Enter. Save your work before rebooting.

- After rebooting, resume the installation:

cd intel_edge_aibox

./edgesoftware install

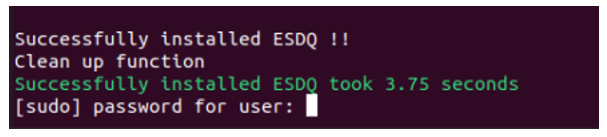

- After Intel® ESDQ is installed, you are prompted for your password. Enter the password to proceed.

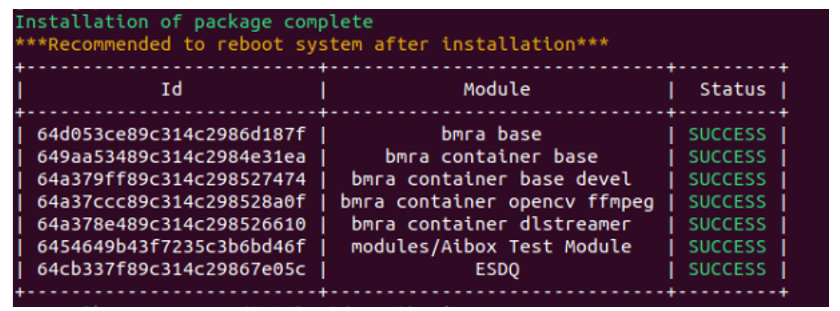

- When the installation is complete, you will see the message “Installation of package complete” and the installation status for each module.

- Reboot the system:

sudo reboot
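The $LC_IDENTIFICATION check from the installation steps applies to the 6.5.0-35-generic kernel on Atom and Core platforms. Scripting it makes it easy to repeat after each reboot. This is a minimal sketch that assumes bash. The function name needs_locale_fix is hypothetical, and it encodes only the rule described in the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 (true) when the locale commands should be run.
# Both arguments are optional and default to the running kernel and the
# current $LC_IDENTIFICATION value.
needs_locale_fix() {
  local kernel="${1:-$(uname -r)}"
  local lc_id="${2-${LC_IDENTIFICATION:-}}"

  # The documented check applies only to the 6.5.0-35-generic kernel.
  if [ "$kernel" != "6.5.0-35-generic" ]; then
    return 1
  fi

  # A value such as en_US.UTF-8 is fine; anything else needs the fix.
  case "$lc_id" in
    *UTF-8) return 1 ;;
    *) return 0 ;;
  esac
}

if needs_locale_fix; then
  echo "LC_IDENTIFICATION does not end in UTF-8: run the locale commands and reboot."
fi
```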

Run the Application

For the complete Intel® ESDQ CLI, refer to Intel® ESDQ CLI Overview. To find the available Intel® Edge AI Box tests, run the following command:

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-h"

You have the option to run an individual test or all tests together. The results from each test will be collated in the HTML report.

Note for Core Ultra platform: Some telemetry data (e.g., GPU Frequency, RCS usage) in the ESDQ report on the Core Ultra platform may be low. This is because the intel-gpu-tools package installed with apt does not fully support the Core Ultra platform. In this case, follow the steps below to build a newer intel-gpu-tools from source.

- Install dependencies:

sudo apt-get update

sudo apt-get install -y gcc flex bison pkg-config libatomic1 libpciaccess-dev libkmod-dev libprocps-dev libdw-dev zlib1g-dev liblzma-dev libcairo-dev libpixman-1-dev libudev-dev libxrandr-dev libxv-dev x11proto-dri2-dev meson libdrm-dev

sudo apt-get install -y libunwind-dev libgsl-dev libasound2-dev libxmlrpc-core-c3-dev libjson-c-dev libcurl4-openssl-dev python3-docutils valgrind peg libdrm-intel1

sudo apt-get install -y build-essential cmake git

sudo apt-get clean

- Clone the intel-gpu-tools v1.28 source code:

git clone -b v1.28 https://gitlab.freedesktop.org/drm/igt-gpu-tools.git

- Build from source (the build runs inside igt-gpu-tools, then returns to the parent directory):

cd igt-gpu-tools && ./meson.sh && make && cd ..

- The newly built tools are in igt-gpu-tools/build/tools. To check GPU usage while a benchmark is running, run the following command in a separate terminal before starting the benchmark:

sudo igt-gpu-tools/build/tools/intel_gpu_top -l

Run Full ESDQ

Run the following commands to execute all the Intel® Edge AI Box tests and generate the full report.

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-r all"

Run OpenVINO Benchmark

The OpenVINO benchmark measures the performance of commonly used neural network models on the platform. The following models are supported:

- resnet-50-tf

- ssdlite-mobilenet-v2

- yolo-v3-tiny-tf

- yolo-v3-tf

- yolo-v4-tiny-tf

- yolo-v4-tf

- efficientnet-b0

- yolo-v5 n/s/m

- yolo-v8 n/s/m

- mobilenet_v2

Note: yolo-v4-tf and yolo-v8 n/s/m are skipped for the NPU device on the Core Ultra platform because they cannot currently run on the NPU.

The following OVRunner command benchmarks all the models on the dGPU for 180 seconds.

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-r OVRunner -d dGPU -t 180"

OVRunner parameters:

- -d {CPU, GPU, iGPU, dGPU, NGPU} specifies the device type.

- -t <seconds> specifies the benchmark duration in seconds.

- -m {resnet-50-tf, ssdlite_mobilenet_v2, yolo-v3-tiny-tf, yolo-v4-tf, efficientnet-b0, yolo-v3-tf, yolo-v4-tiny-tf, yolo-v5n, yolo-v5s, yolo-v5m, yolo-v8n, yolo-v8s, yolo-v8m, mobilenet-v2-pytorch} specifies model. Do not include this parameter to run all models.

- -p {INT8, FP16} specifies model precision
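To compare both precisions on one device, you can invoke OVRunner once per precision. The sketch below uses only the documented -r, -d, -t, and -p options. The function name, the DRY_RUN switch, and running the precisions back to back are illustrative assumptions, not esdq features. The sketch assumes bash and is run from intel_edge_aibox/Intel_Edge_AI_Box_4.0.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run OVRunner for every documented precision on one device.
# Set DRY_RUN=1 to print the esdq commands instead of executing them.
# Usage: ov_sweep DEVICE SECONDS
ov_sweep() {
  local device="$1" seconds="$2" precision args

  # Accept only the device values listed for the -d option.
  case "$device" in
    CPU|GPU|iGPU|dGPU|NGPU) ;;
    *) echo "unsupported device: $device" >&2; return 2 ;;
  esac

  for precision in INT8 FP16; do
    args="-r OVRunner -d $device -t $seconds -p $precision"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf 'esdq --verbose module run aibox --arg "%s"\n' "$args"
    else
      # Stop at the first failing run and return its exit status.
      esdq --verbose module run aibox --arg "$args" || return
    fi
  done
}
```

For example, DRY_RUN=1 ov_sweep dGPU 180 prints the INT8 command followed by the FP16 command without running either of them.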

The following is an example of a resnet-50-tf benchmark result:

Run Memory Benchmark

The memory benchmark measures the sustained memory bandwidth based on STREAM. To measure memory bandwidth for media processing, invoke the MemBenchmark runner:

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-r MemBenchmark"

After the MemBenchmark runner completes, the location of the test report is displayed. The following is an example report:

Run AI Frequency Measurement

The AI inference frequency benchmark was designed to stress the GPU for an extended period. The benchmark records the GPU frequency while it runs an inference workload using the OpenVINO™ Benchmark Tool.

The following FreqRunner runner command measures GPU frequency for 10 hours.

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-r FreqRunner -t 10"

FreqRunner parameters:

- -t <hours> specifies the benchmark duration in hours.

The following is an example plot:

Run Media Performance Benchmark

The following MediaRunner command runs this benchmark for all combinations of video codec (H264, H265) and resolution (1080p, 4K) on all available devices (iGPU on Core and Core Ultra platforms, dGPU if present, and CPU on the Xeon platform).

cd intel_edge_aibox/Intel_Edge_AI_Box_4.0

esdq --verbose module run aibox --arg "-r MediaRunner"

The following is an example report:

Run Smart NVR Proxy Pipeline Benchmark

The Smart NVR pipeline benchmark consists of the following steps:

- Decodes 25 1080p H264 video channels

- Stores video stream in local storage

- Runs AI Inference on a subset of the video channels

- Composes decoded video channels into multi-view 4K video wall stream

- Encodes the multi-view video wall stream for remote viewing

- Displays the multi-view video wall streams on the attached monitor (a 4K monitor is preferred)

The following command runs the pipeline benchmark:

esdq --verbose module run aibox --arg "-r SmartAIRunner"

Run Headed Visual AI Proxy Pipeline Benchmark

The Headed Visual AI Proxy Pipeline consists of the following steps:

- Decodes a specified number of 1080p video channels

- Runs AI Inference on all video channels

- Composes all video channels into a multi-view video wall stream

- Encodes the multi-view video wall stream for remote viewing

- Displays the multi-view video wall stream on the attached monitor (a 4K monitor is preferred)

The following command runs the pipeline benchmark:

esdq --verbose module run aibox --arg "-r VisualAIRunner"

Run VSaaS Gateway with Storage and AI Proxy Pipeline Benchmark

The VSaaS Gateway with Storage and AI Proxy Pipeline consists of the following steps:

- Decodes a specified number of 1080p H264 video channels

- Stores video stream in local storage

- Runs AI inference on all video channels

- Transcodes the video channels to h265 for remote consumption

The following command runs the pipeline benchmark:

esdq --verbose module run aibox --arg "-r VsaasAIRunner"
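To run only the three proxy pipeline benchmarks, without the rest of the suite, you can chain the commands above. This is a minimal sketch that assumes bash and the same working directory as the other esdq commands (intel_edge_aibox/Intel_Edge_AI_Box_4.0). The function name and the DRY_RUN switch are hypothetical conveniences, not esdq options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the three proxy pipeline benchmarks one after another.
# Set DRY_RUN=1 to print the commands instead of executing them.
run_pipeline_benchmarks() {
  local runner
  for runner in SmartAIRunner VisualAIRunner VsaasAIRunner; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf 'esdq --verbose module run aibox --arg "-r %s"\n' "$runner"
    else
      echo "Running $runner"
      # Stop at the first failing benchmark and return its exit status.
      esdq --verbose module run aibox --arg "-r $runner" || return
    fi
  done
}
```

Stopping at the first failure keeps a broken setup from spending time on the remaining pipelines. Remove "|| return" if you prefer to collect all results regardless.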

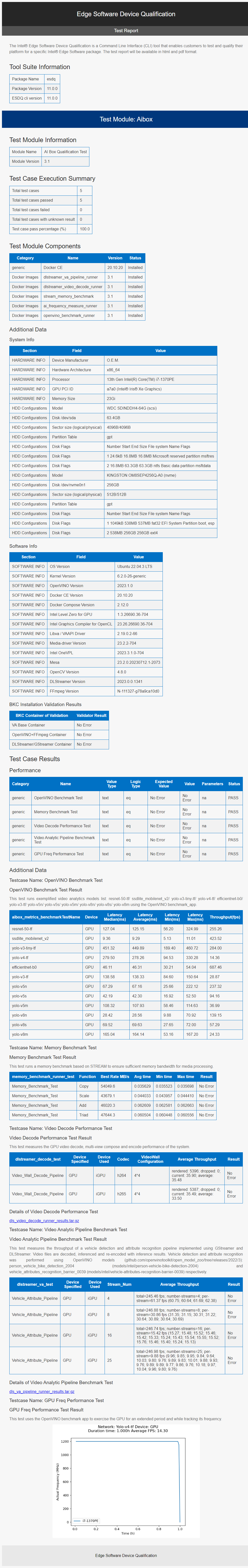

Sample Full ESDQ Report

Known Issues

[AIBOX-293]: Report.zip is empty the first time the report is generated.

[AIBOX-420]: Permission Issues Found During Installation with new user ID.

[AIBOX-623]: No Data in Intel XPU Manager Exporter on MTL Platform.

[AIBOX-624]: MODULE_STDERR Occurred During EAB4.0 Installation. For workarounds, refer to Troubleshooting.

[AIBOX-629]: GPU Telemetry data is Too Low in ESDQ Report of MTL Platform. For workarounds, refer to Run the Application.

Troubleshooting

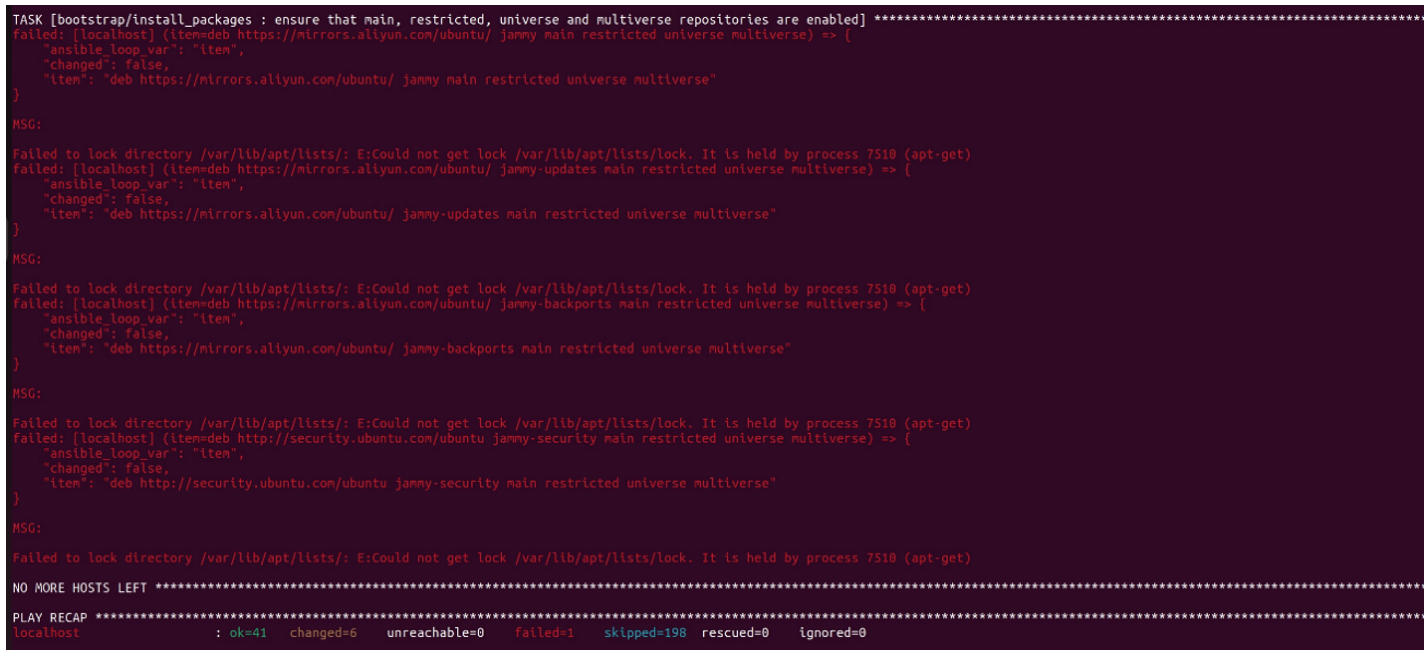

- As the system is being automatically updated in the background, you may encounter the following errors:

To resolve this issue, reboot and manually update the system. Then, rerun the installation:

sudo reboot

sudo apt update

./edgesoftware install

- If the network quality is poor, you may encounter the following error:

To resolve this issue, configure sudo so that it does not prompt for a password before you install:

sudo visudo

Navigate to “# User privilege specification” section and add the following line to grant the current user sudo privileges without requiring a password:

USER ALL=(ALL) NOPASSWD: ALL

Please replace USER with your actual username.

After adding the line, save and exit, then reinstall the AIBox package.
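As an alternative to editing the main sudoers file, you can place the same rule in a drop-in file under /etc/sudoers.d. A drop-in file is easy to delete once the installation is finished. sudo applies the last matching rule, and Ubuntu's default /etc/sudoers includes that directory at the end. A drop-in rule therefore also takes precedence over the %sudo group rule. This is a minimal sketch. The file name 99-aibox-install and the function name are hypothetical. The target directory is a parameter, so you can try the function on a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a passwordless-sudo rule for one user into a drop-in file.
# Usage (as root): write_nopasswd_dropin USERNAME [DIRECTORY]
write_nopasswd_dropin() {
  local user="$1" dir="${2:-/etc/sudoers.d}"
  # sudo skips drop-in files whose names contain a '.' or end in '~'.
  local file="$dir/99-aibox-install"

  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$file" || return
  chmod 0440 "$file"   # sudoers files are expected to be read-only
  echo "$file"
}
```

For example:

- Run sudo bash -c "$(declare -f write_nopasswd_dropin); write_nopasswd_dropin $USER" to create the file.
- Check its syntax with sudo visudo -cf /etc/sudoers.d/99-aibox-install.
- After the AIBox package is installed, delete the file with sudo rm /etc/sudoers.d/99-aibox-install.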

Refer to the Troubleshooting section of the Intel® Edge AI Box package.

If you’re unable to resolve your issues, contact the Support Forum.

Release Notes

Current Version: 4.0

New in This Release

- Added INT8 models in OpenVINO benchmark

- Added NPU support in OpenVINO benchmark

- Replaced Video Decode Performance Benchmark with Media Performance Benchmarks

- Replaced Video Analytic Pipeline Benchmark with domain-specific proxy pipeline benchmarks

- Added telemetry information in benchmark report

- Added graph results in benchmark report

- Added Intel Core Ultra and SPR-SP platform support

- Added Remote Device Management

Version: 3.1.1

- Fixed an ESDQ hang when prompting for user input for "parted"

- Fixed ESDQ Test module error with “-h”

- Fixed ESDQ Test module report generation error

Version: 3.1

New in This Release

- Changed proxy URL for PRC network.

- Fixed issues related to dependencies version updates.

Version: 3.0

New in This Release

- Updated ESDQ infrastructure to version v11.0.0

- Built the benchmarks and ran them in Video Analytics Base Library containers.

- Added support for discrete GPU.

- Added memory benchmark.

Version: 2.6

New in This Release

- Updated the test module to support Intel® Distribution of OpenVINO™ toolkit 2022.2 Samples.

- Added tests for Intel® Distribution of OpenVINO™ toolkit in a container.

- Added video decode 16 channel 1080p 4x4 video wall tests.

- Included GPU Inference Frequency Plot test.

Version: 2.5

New in This Release

- Updated the test module to support Intel® Distribution of OpenVINO™ toolkit 2022.1 Samples.

- Removed SVET, Intel® Media SDK and Yolo tests.

- Included the functionality test of Pipeline and Inference Benchmark requirements.

Version: 2.0

New in This Release

- Integrated Intel® Distribution of OpenVINO™ toolkit 2021.4.2.

- Included the functionality test of Azure IoT PnP Bridge, Intel® Distribution of OpenVINO™ toolkit TensorFlow Bridge, and Amazon Web Services Greengrass.

Known Issues

- Smart Video and AI Workload Reference Implementation test requires the user to feed in the root password. However, when Intel® ESDQ is run on a graphical interface, the system switches to console mode during SVET execution, and the control to Intel® ESDQ terminal that displays the password prompt is lost. This results in a hang situation. Log in to the system using the SSH terminal and execute the Intel® ESDQ tests to work around this issue.

Version: 1.2

New in This Release

- Latency and Throughput information included in the HTML report.

- Included the functionality test of Smart Video and AI Workload Reference Implementation.

Version: 1.0

New in This Release

- Initial features for recommended configuration.

Known Issues

- If yolo-v3-tf.xml is not downloaded, then Latency and Throughput metrics will be null in the Intel® ESDQ HTML report page.