Introduction

To address the ever-changing requirements for both cloud and network functions virtualization, the Intel® Ethernet 700 Series was designed from the ground up to provide increased flexibility and agility. One of the design goals was to take parts of the fixed pipeline used in the Intel® Ethernet 500 Series (82599, X540, and X550) and move to a programmable pipeline, allowing the Intel Ethernet 700 Series to be customized to meet a wide variety of customer requirements. This programmability has enabled over 60 unique configurations, all based on the same core silicon.

Even with so many configurations being delivered to the market, the expanding role that Intel® architecture is taking in the telecommunication market requires even more custom functionality. The most common requests are new packet classification types that are not currently supported, are customer-specific, or may not even be fully defined yet. To address this, a new capability has been enabled on the Intel Ethernet 700 Series network adapters: Dynamic Device Personalization (DDP).

This article describes how the Data Plane Development Kit (DPDK) is used to program and configure DDP profiles. It focuses on the GTPv1 profile, which can be used to enhance performance and optimize core utilization for virtualized enhanced packet core (vEPC) and multi-access edge computing (MEC) use cases.

DDP allows dynamic reconfiguration of the packet processing pipeline to meet specific use case needs on demand: new packet processing pipeline configuration profiles can be added to a network adapter at run time, without resetting or rebooting the server. Software applies these custom profiles in a nonpermanent, transaction-like mode, so the network controller's original configuration is restored after a network adapter reset, or when software rolls back the profile changes. DPDK provides APIs for handling DDP packages.

The ability to classify new packet types inline, and distribute these packets to specified queues on the device’s host interface, delivers a number of performance and core utilization optimizations:

- Removes the requirement for CPU cores on the host to perform classification and load balancing of packet types for the specified use case.

- Increases packet throughput; reduces packet latency for the use case.

If multiple network controllers are present on the server, each controller can have its own pipeline profile, applied without affecting other controllers or the software applications using them.

DDP Use Cases

By applying a DDP profile to the network controller, the following use cases can be addressed.

- New packet classification types (flow types) for offloading packet classification to the network controller:

- IP protocols in addition to TCP/UDP/SCTP; for example, IP ESP (Encapsulating Security Payload), IP AH (Authentication Header)

- UDP Protocols; for example, MPLSoUDP (MPLS over UDP) or QUIC (Quick UDP Internet Connections)

- TCP subtypes, like TCP SYN-no-ACK (Synchronize without Acknowledgment set)

- Tunnelled protocols, like PPPoE (Point-to-Point Protocol over Ethernet), GTP-C/GTP-U (GPRS Tunnelling Protocol-control plane/-user plane)

- Specific protocols, like Radio over Ethernet

- New packet types for packets identification:

- IP6 (Internet protocol version 6), GTP-U, IP4 (Internet protocol version 4), UDP, PAY4 (layer 4 payload)

- IP4, GTP-U, IP6, UDP, PAY4

- IP4, GTP-U, PAY4

- IP6, GTP-C, PAY4

- MPLS (Multiprotocol Label Switching), IP6, TCP, PAY4

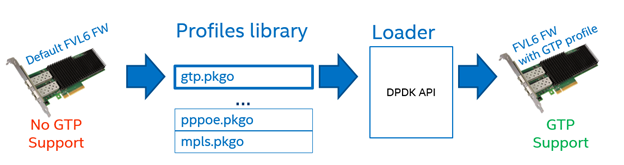

DDP GTP Example

Figure 1. Steps to download GTP profile to Intel® Ethernet 700 Series network adapter.

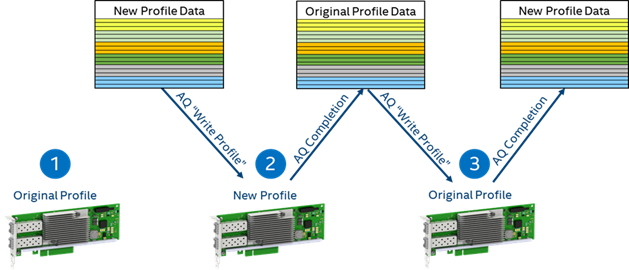

The original firmware configuration profile can be updated in transaction-like mode. After applying a new profile, the network controller reports back the previous configuration, so original functionality can be restored at runtime by rolling back changes introduced by the profile, as shown in Figure 2.

Figure 2. Processing DDP profiles.

Personalization profile processing steps, depicted in Figure 2:

- Original firmware configuration; no profile applied.

- On applying a new profile, the firmware returns the original configuration in the profile's buffer.

- Writing the returned configuration back to the hardware will restore the original state.
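The apply-and-restore cycle in Figure 2 can be modeled in a few lines of C. This is a minimal sketch, not firmware code: it assumes the pipeline configuration is a flat array of hypothetical 32-bit registers and that a profile is a list of (register, value) writes. Applying a profile swaps each new value with the current one, so the profile buffer ends up holding the original configuration; writing that buffer back (in reverse order) restores the original state.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model: the parser configuration is a small register file. */
#define NUM_REGS 8

struct reg_write {
    size_t   reg;    /* register index (hypothetical, must be < NUM_REGS) */
    uint32_t value;  /* value to write; replaced by the previous value */
};

/*
 * Apply a profile in transaction-like mode: each entry's value is written
 * to the register file and the value it replaced is stored back into the
 * same entry, so afterwards the buffer describes how to undo the change.
 */
static void apply_profile(uint32_t regs[NUM_REGS], struct reg_write *buf, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        uint32_t old = regs[buf[i].reg];
        regs[buf[i].reg] = buf[i].value;
        buf[i].value = old;
    }
}

/*
 * Roll back by writing the returned configuration in reverse order, which
 * stays correct even if the profile wrote the same register twice. The
 * swap leaves the buffer holding the profile's values again.
 */
static void rollback_profile(uint32_t regs[NUM_REGS], struct reg_write *buf, size_t n)
{
    for (size_t i = n; i-- > 0; ) {
        uint32_t cur = regs[buf[i].reg];
        regs[buf[i].reg] = buf[i].value;
        buf[i].value = cur;
    }
}
```

The swap-based design means no separate backup allocation is needed, which matches the description of the firmware returning the original configuration in the profile's own buffer.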

Firmware and Software Versions

DDP requires an Intel Ethernet 700 Series network adapter with firmware version 6.01 or newer.

Basic support for applying DDP profiles to Intel Ethernet 700 Series network adapters was added to DPDK 17.05. DPDK 17.08 and 17.11 introduced more advanced DDP APIs, including the ability to report a profile's information without loading a profile to an Intel Ethernet 700 Series network adapter first. These APIs can be used to try out new DDP profiles with DPDK without implementing full support for the protocols in the DPDK rte_flow API.

Starting with version 2.7.26, Intel® Network Adapter Driver for PCIe* 40 Gigabit Ethernet Network Connections Under Linux supports loading and rolling back DDP profiles using ethtool.

The GTPv1 DDP profile is available from: Intel® Ethernet Controller X710/XXV710/XL710 Adapters Dynamic Device Personalization GTPv1 Package. For information on additional DDP profiles, please contact your local Intel representative.

DPDK APIs

The following three calls are part of DPDK 17.08:

rte_pmd_i40e_process_ddp_package(): This function is used to download a DDP profile and register it, or to roll back a DDP profile and unregister it.

```c
int rte_pmd_i40e_process_ddp_package(
        uint8_t port,                    /* DPDK port index to download DDP package to */
        uint8_t *buff,                   /* buffer with the package in the memory */
        uint32_t size,                   /* size of the buffer */
        enum rte_pmd_i40e_package_op op  /* operation: add, remove, write profile */
);
```

rte_pmd_i40e_get_ddp_info(): This function is used to request information about a profile without downloading it to a network adapter.

```c
int rte_pmd_i40e_get_ddp_info(
        uint8_t *pkg_buff,                    /* buffer with the package in the memory */
        uint32_t pkg_size,                    /* size of the package buffer */
        uint8_t *info_buff,                   /* buffer to store information to */
        uint32_t info_size,                   /* size of the information buffer */
        enum rte_pmd_i40e_package_info type   /* type of required information */
);
```

rte_pmd_i40e_get_ddp_list(): This function is used to get the list of registered profiles.

```c
int rte_pmd_i40e_get_ddp_list(
        uint8_t port,    /* DPDK port index to get list from */
        uint8_t *buff,   /* buffer to store list of registered profiles */
        uint32_t size    /* size of the buffer */
);
```

DPDK 17.11 adds some extra DDP-related functionality:

rte_pmd_i40e_get_ddp_info(): Updated to retrieve more information about the profile.

New APIs were added to handle flow types created by DDP profiles:

rte_pmd_i40e_flow_type_mapping_update(): Used to map hardware-specific packet classification types to DPDK flow types.

```c
int rte_pmd_i40e_flow_type_mapping_update(
        uint8_t port,                                          /* DPDK port index to update map on */
        struct rte_pmd_i40e_flow_type_mapping *mapping_items,  /* array of the mapping items */
        uint16_t count,                                        /* number of PCTYPEs to map */
        uint8_t exclusive                                      /* 0 to overwrite only referred PCTYPEs */
);
```

rte_pmd_i40e_flow_type_mapping_get(): Used to retrieve current mapping of hardware-specific packet classification types to DPDK flow types.

```c
int rte_pmd_i40e_flow_type_mapping_get(
        uint8_t port,  /* DPDK port index to get mapping from */
        /* pointer to the array of RTE_PMD_I40E_FLOW_TYPE_MAX mapping items */
        struct rte_pmd_i40e_flow_type_mapping *mapping_items
);
```

rte_pmd_i40e_flow_type_mapping_reset(): Resets flow type mapping table.

```c
int rte_pmd_i40e_flow_type_mapping_reset(
        uint8_t port  /* DPDK port index to reset mapping on */
);
```
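Because each mapping item carries a PCTYPE bitmask rather than a single PCTYPE number, an application that reads the table returned by rte_pmd_i40e_flow_type_mapping_get() has to decode it. The sketch below does not link against DPDK: `struct flow_type_mapping` is a local stand-in that we assume mirrors `struct rte_pmd_i40e_flow_type_mapping` (a `flow_type` field plus a 64-bit `pctype` bitmask), and the table size of 64 is likewise an assumption; check both against the rte_pmd_i40e.h of your DPDK release.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed table size; compare with RTE_PMD_I40E_FLOW_TYPE_MAX in your DPDK. */
#define FLOW_TYPE_MAX_ASSUMED 64

/* Local stand-in for struct rte_pmd_i40e_flow_type_mapping (assumed layout). */
struct flow_type_mapping {
    uint16_t flow_type; /* DPDK (software) flow type */
    uint64_t pctype;    /* bitmask of hardware PCTYPEs: bit N = PCTYPE N */
};

/*
 * Return the flow type that hardware PCTYPE `pctype` is mapped to,
 * or -1 if no entry in the table references it.
 */
static int flow_type_for_pctype(const struct flow_type_mapping *table,
                                size_t n, unsigned pctype)
{
    if (pctype >= 64)
        return -1;
    for (size_t i = 0; i < n; i++)
        if (table[i].pctype & (UINT64_C(1) << pctype))
            return table[i].flow_type;
    return -1;
}

/* Count how many PCTYPEs a single mapping item refers to. */
static int pctype_count(const struct flow_type_mapping *item)
{
    int count = 0;
    for (uint64_t m = item->pctype; m != 0; m &= m - 1) /* clear lowest set bit */
        count++;
    return count;
}
```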

ethtool Commands

Loading DDP profiles with ethtool requires RHEL* 7.5 or later, or Linux* kernel 4.0.1 or newer.

To apply a profile, copy it first to the intel/i40e/ddp directory relative to your firmware root (usually /lib/firmware).

For example:

/lib/firmware/intel/i40e/ddp

Then use the ethtool -f|--flash option with region 100: ethtool -f <interface name> <profile name> 100

For example:

```
ethtool -f eth0 gtp.pkgo 100
```

You can roll back to the previously loaded profile by using '-' instead of a profile name: ethtool -f <interface name> - 100

For example:

```
ethtool -f eth0 - 100
```

Each rollback request removes one profile, in last-in, first-out (LIFO) order. For more details, see the driver's readme.txt.
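The LIFO rollback rule can be pictured as a stack of applied profiles. This is a hypothetical model for illustration, not driver code: `struct profile_stack`, `profile_push()` and `profile_rollback()` are names we made up, profiles are identified only by track ID, and 0 is used as a "nothing loaded" sentinel purely for convenience.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of the list of DDP profiles applied to an adapter. */
#define MAX_PROFILES 16

struct profile_stack {
    uint32_t track_id[MAX_PROFILES]; /* track IDs, oldest first */
    size_t   count;
};

/* Record a newly applied profile; returns 0 on success, -1 if full. */
static int profile_push(struct profile_stack *s, uint32_t track_id)
{
    if (s->count == MAX_PROFILES)
        return -1;
    s->track_id[s->count++] = track_id;
    return 0;
}

/*
 * One rollback request (the '-' form of the ethtool command) removes the
 * most recently applied profile. Returns its track ID, or 0 if none is loaded.
 */
static uint32_t profile_rollback(struct profile_stack *s)
{
    if (s->count == 0)
        return 0;
    return s->track_id[--s->count];
}
```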

NOTE: With ethtool, DDP profiles can be loaded only on the interface corresponding to the first physical function of the device (PF0), but the configuration is applied to all ports of the adapter.

Using DDP Profiles with testpmd

To demonstrate DDP functionality of Intel Ethernet 700 Series network adapters, we will use the GTPv1 profile with testpmd. The profile is available here: Intel® Ethernet Controller X710/XXV710/XL710 Adapters Dynamic Device Personalization GTPv1 Package. For information on additional DDP profiles please contact your local Intel representative.

Although DPDK 17.11 adds support for GTPv1 with an IPv4 payload at the rte_flow API level, we will use lower-level APIs to demonstrate how to work with the Intel Ethernet 700 Series network adapter directly for any new protocols that are added by DDP but not yet enabled in rte_flow.

For demonstration, we will need GTPv1-U packets with the following configuration:

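| Header | Field | Value |
|---|---|---|
| Outer | Source IP | 1.1.1.1 |
| Outer | Destination IP | 2.2.2.2 |
| Outer | IP Protocol | 17 (UDP) |
| Outer | GTP Source Port | 45050 |
| Outer | GTP Destination Port | 2152 |
| Outer | GTP Message type | 0xFF |
| Outer | GTP Tunnel id | 0x11111111-0xFFFFFFFF random |
| Outer | GTP Sequence number | 0x000001 |
| Inner IPv4 | Source IP | 3.3.3.1-255 random |
| Inner IPv4 | Destination IP | 4.4.4.1-255 random |
| Inner IPv4 | IP Protocol | 17 (UDP) |
| Inner IPv4 | UDP Source Port | 53244 |
| Inner IPv4 | UDP Destination Port | 57069 |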

Figure 3. GTPv1 GTP-U packets configuration.

As you can see, the outer IPv4 header provides no entropy for RSS, because its IP addresses and UDP ports are defined statically. The GTPv1 header, however, carries random tunnel endpoint identifier (TEID) values in the range 0x11111111 to 0xFFFFFFFF, and the inner IPv4 addresses have a randomly generated host part in the range 1 to 255.
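The effect of a static outer header can be checked in software with the Toeplitz hash commonly used for RSS. This is a minimal sketch under stated assumptions: it uses the widely published 40-byte Microsoft RSS verification key rather than the key the adapter is actually programmed with, so its values will not match the RSS hash values printed by testpmd. `outer_hash()` packs source address, destination address, source port, and destination port in network byte order; `gtpu_hash()` is a hypothetical helper that packs the TEID plus inner IPv4 addresses, loosely mimicking the GTP-U hash input set reported by the profile (the hardware's actual field order is not assumed to match).

```c
#include <stddef.h>
#include <stdint.h>

/* Microsoft RSS verification key (an assumption; not the adapter's key). */
static const uint8_t rss_key[40] = {
    0x6d, 0x5a, 0x56, 0xda, 0x25, 0x5b, 0x0e, 0xc2,
    0x41, 0x67, 0x25, 0x3d, 0x43, 0xa3, 0x8f, 0xb0,
    0xd0, 0xca, 0x2b, 0xcb, 0xae, 0x7b, 0x30, 0xb4,
    0x77, 0xcb, 0x2d, 0xa3, 0x80, 0x30, 0xf2, 0x0c,
    0x6a, 0x42, 0xb7, 0x3b, 0xbe, 0xac, 0x01, 0xfa,
};

/* Toeplitz hash: XOR a sliding 32-bit key window for every set input bit. */
static uint32_t toeplitz(const uint8_t *data, size_t len)
{
    uint32_t hash = 0;
    uint32_t window = (uint32_t)rss_key[0] << 24 | (uint32_t)rss_key[1] << 16 |
                      (uint32_t)rss_key[2] << 8 | rss_key[3];

    for (size_t i = 0; i < len; i++) {
        uint8_t next = (i + 4 < sizeof rss_key) ? rss_key[i + 4] : 0;
        for (int bit = 7; bit >= 0; bit--) {
            if (data[i] & (1u << bit))
                hash ^= window;
            /* slide the window by one bit, pulling in the next key bit */
            window = window << 1 | ((next >> bit) & 1u);
        }
    }
    return hash;
}

/* Store a 32-bit value in network (big-endian) byte order. */
static void put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24); p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);  p[3] = (uint8_t)v;
}

/* Outer tuple: src IP, dst IP, src port, dst port (12 bytes). */
static uint32_t outer_hash(uint32_t sip, uint32_t dip, uint16_t sport, uint16_t dport)
{
    uint8_t in[12];
    put_be32(in, sip);
    put_be32(in + 4, dip);
    in[8] = (uint8_t)(sport >> 8);  in[9] = (uint8_t)sport;
    in[10] = (uint8_t)(dport >> 8); in[11] = (uint8_t)dport;
    return toeplitz(in, sizeof in);
}

/* Hypothetical GTP-U input: TEID, inner src IP, inner dst IP (12 bytes). */
static uint32_t gtpu_hash(uint32_t teid, uint32_t inner_sip, uint32_t inner_dip)
{
    uint8_t in[12];
    put_be32(in, teid);
    put_be32(in + 4, inner_sip);
    put_be32(in + 8, inner_dip);
    return toeplitz(in, sizeof in);
}
```

Every packet of Figure 3 produces the same `outer_hash()` value, so all of them land on one queue. Because the Toeplitz hash is linear over XOR, two packets whose TEIDs differ in even a single bit get `gtpu_hash()` values that differ by a key-dependent, non-zero amount.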

A pcap file with synthetic GTPv1-U traffic using the configuration above is provided alongside this article.

We will use testpmd from the DPDK 17.11 release. First, start testpmd in receive-only mode with four queues, and enable verbose mode and RSS:

```
testpmd -w 02:00.0 -- -i --rxq=4 --txq=4 --forward-mode=rxonly
testpmd> port config all rss all
testpmd> set verbose 1
testpmd> start
```

Figure 4. testpmd startup configuration.

Using any GTP-U capable traffic generator, send four GTP-U packets. Alternatively, replay the provided pcap file with synthetic GTPv1-U traffic.

As all packets have the same outer IP header, they are received on queue 1 and reported as IPv4 UDP packets:

```
testpmd> port 0/queue 1: received 4 packets
src=3C:FD:FE:A6:21:24 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0xd9a562 - RSS queue=0x1 - hw ptype: L2_ETHER L3_IPV4_EXT_UNKNOWN L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - Receive queue=0x1
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
src=3C:FD:FE:A6:21:24 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0xd9a562 - RSS queue=0x1 - hw ptype: L2_ETHER L3_IPV4_EXT_UNKNOWN L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - Receive queue=0x1
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
src=3C:FD:FE:A6:21:24 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0xd9a562 - RSS queue=0x1 - hw ptype: L2_ETHER L3_IPV4_EXT_UNKNOWN L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - Receive queue=0x1
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
src=3C:FD:FE:A6:21:24 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0xd9a562 - RSS queue=0x1 - hw ptype: L2_ETHER L3_IPV4_EXT_UNKNOWN L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - Receive queue=0x1
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
```

Figure 5. Distribution of GTP-U packets without GTPv1 profile.

As we can see, hash values for all four packets are the same: 0xD9A562. This happens because IP source/destination addresses and UDP source/destination ports in the outer (tunnel end point) IP header are statically defined and do not change from packet to packet; see Figure 3.

Now we will apply a GTP profile to a network adapter port. For the purpose of the demonstration, we will assume that the profile package file was downloaded and extracted to the /lib/firmware/intel/i40e/ddp folder. The profile will load from the gtp.pkgo file and the original configuration will be stored to the gtp.bak file:

```
testpmd> stop
testpmd> port stop 0
testpmd> ddp add 0 /lib/firmware/intel/i40e/ddp/gtp.pkgo,/home/pkg/gtp.bak
```

Figure 6. Applying GTPv1 profile to device.

The 'ddp add 0 /lib/firmware/intel/i40e/ddp/gtp.pkgo,/home/pkg/gtp.bak' command first loads the gtp.pkgo file into a memory buffer, then passes it to rte_pmd_i40e_process_ddp_package() with the RTE_PMD_I40E_PKG_OP_WR_ADD operation, and finally saves the original configuration, returned in the same buffer, to the gtp.bak file.
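The three steps of 'ddp add' (load file, apply, save the returned buffer) can be sketched in portable C. This is an illustration, not testpmd's source: `read_file()`, `write_file()`, `ddp_add()` and `ddp_apply_fn` are hypothetical names, and because the sketch does not link against DPDK, the device call is passed in as a function pointer. In a DPDK application that callback would wrap rte_pmd_i40e_process_ddp_package(port, buf, size, RTE_PMD_I40E_PKG_OP_WR_ADD). `demo_apply()` is a made-up stand-in that only overwrites the buffer, so the flow can be exercised without hardware.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Read a whole file into a heap buffer; returns NULL on error. */
static uint8_t *read_file(const char *path, uint32_t *size)
{
    FILE *f = fopen(path, "rb");
    uint8_t *buf = NULL;
    long len = 0;

    if (f == NULL)
        return NULL;
    if (fseek(f, 0, SEEK_END) == 0 && (len = ftell(f)) > 0 &&
        fseek(f, 0, SEEK_SET) == 0 && (buf = malloc((size_t)len)) != NULL) {
        if (fread(buf, 1, (size_t)len, f) == (size_t)len) {
            *size = (uint32_t)len;
        } else {
            free(buf);
            buf = NULL;
        }
    }
    fclose(f);
    return buf;
}

/* Write `size` bytes of `buf` to `path`; returns 0 on success. */
static int write_file(const char *path, const uint8_t *buf, uint32_t size)
{
    FILE *f = fopen(path, "wb");
    int ok;

    if (f == NULL)
        return -1;
    ok = fwrite(buf, 1, size, f) == size;
    return (fclose(f) == 0 && ok) ? 0 : -1;
}

/* Device call; must return 0 on success and leave the old config in buf. */
typedef int (*ddp_apply_fn)(uint8_t *buf, uint32_t size);

/* Load pkg_path, apply it, then save the buffer returned by the device. */
static int ddp_add(const char *pkg_path, const char *bak_path, ddp_apply_fn apply)
{
    uint32_t size = 0;
    uint8_t *buf = read_file(pkg_path, &size);
    int ret = -1;

    if (buf == NULL)
        return -1;
    if (apply(buf, size) == 0)
        ret = write_file(bak_path, buf, size);
    free(buf);
    return ret;
}

/* Hypothetical stand-in for the device: replaces the buffer contents,
 * as the firmware does when it returns the previous configuration. */
static int demo_apply(uint8_t *buf, uint32_t size)
{
    for (uint32_t i = 0; i < size; i++)
        buf[i] ^= 0xFF;
    return 0;
}
```

Passing the device operation as a callback keeps the file handling testable on its own; 'ddp del' would follow the same shape, reading gtp.bak and applying it with the RTE_PMD_I40E_PKG_OP_WR_DEL operation.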

If a supported Linux driver is loaded on the device's physical function 0 (PF0) and testpmd uses any other physical function of the device, the profile can be loaded with the ethtool command instead:

```
ethtool -f eth0 gtp.pkgo 100
```

We can confirm that the profile was loaded successfully:

```
testpmd> ddp get list 0
Profile number is: 1
Profile 0:
Track id: 0x80000008
Version: 1.0.0.0
Profile name: GTPv1-C/U IPv4/IPv6 payload
```

Figure 7. Checking whether the device has any profiles loaded.

The 'ddp get list 0' command calls rte_pmd_i40e_get_ddp_list() and prints the returned information.

The track ID is the profile's unique identification number, which distinguishes it from all other profiles.

To get information about new packet classification types and packet types created by the profile:

```
testpmd> ddp get info /lib/firmware/intel/i40e/ddp/gtp.pkgo
Global Track id: 0x80000008
Global Version: 1.0.0.0
Global Package name: GTPv1-C/U IPv4/IPv6 payload
i40e Profile Track id: 0x80000008
i40e Profile Version: 1.0.0.0
i40e Profile name: GTPv1-C/U IPv4/IPv6 payload
Package Notes:
This profile enables GTPv1-C/GTPv1-U classification
with IPv4/IPV6 payload
Hash input set for GTPC is TEID
Hash input set for GTPU is TEID and inner IP addresses (no ports)
Flow director input set is TEID
List of supported devices:
8086:1572 FFFF:FFFF
8086:1574 FFFF:FFFF
8086:1580 FFFF:FFFF
8086:1581 FFFF:FFFF
8086:1583 FFFF:FFFF
8086:1584 FFFF:FFFF
8086:1585 FFFF:FFFF
8086:1586 FFFF:FFFF
8086:1587 FFFF:FFFF
8086:1588 FFFF:FFFF
8086:1589 FFFF:FFFF
8086:158A FFFF:FFFF
8086:158B FFFF:FFFF
List of used protocols:
12: IPV4
13: IPV6
17: TCP
18: UDP
19: SCTP
20: ICMP
21: GTPU
22: GTPC
23: ICMPV6
34: PAY3
35: PAY4
44: IPV4FRAG
48: IPV6FRAG
List of defined packet classification types:
22: GTPU IPV4
23: GTPU IPV6
24: GTPU
25: GTPC
List of defined packet types:
167: IPV4 GTPC PAY4
168: IPV6 GTPC PAY4
169: IPV4 GTPU IPV4 PAY3
170: IPV4 GTPU IPV4FRAG PAY3
171: IPV4 GTPU IPV4 UDP PAY4
172: IPV4 GTPU IPV4 TCP PAY4
173: IPV4 GTPU IPV4 SCTP PAY4
174: IPV4 GTPU IPV4 ICMP PAY4
175: IPV6 GTPU IPV4 PAY3
176: IPV6 GTPU IPV4FRAG PAY3
177: IPV6 GTPU IPV4 UDP PAY4
178: IPV6 GTPU IPV4 TCP PAY4
179: IPV6 GTPU IPV4 SCTP PAY4
180: IPV6 GTPU IPV4 ICMP PAY4
181: IPV4 GTPU PAY4
182: IPV6 GTPU PAY4
183: IPV4 GTPU IPV6FRAG PAY3
184: IPV4 GTPU IPV6 PAY3
185: IPV4 GTPU IPV6 UDP PAY4
186: IPV4 GTPU IPV6 TCP PAY4
187: IPV4 GTPU IPV6 SCTP PAY4
188: IPV4 GTPU IPV6 ICMPV6 PAY4
189: IPV6 GTPU IPV6 PAY3
190: IPV6 GTPU IPV6FRAG PAY3
191: IPV6 GTPU IPV6 UDP PAY4
113: IPV6 GTPU IPV6 TCP PAY4
120: IPV6 GTPU IPV6 SCTP PAY4
128: IPV6 GTPU IPV6 ICMPV6 PAY4
```

Figure 8. Getting information about the DDP profile.

The 'ddp get info gtp.pkgo' command calls rte_pmd_i40e_get_ddp_info() several times to retrieve different information about the profile, and prints it.

There is a lot of information, but we are looking for new packet classifier types:

```
List of defined packet classification types:
22: GTPU IPV4
23: GTPU IPV6
24: GTPU
25: GTPC
```

Figure 9. New PCTYPEs defined by GTPv1 profile.

The profile creates four new packet classification types in addition to all the default PCTYPEs (see Table 7-5, "Packet classifier types and its input sets", in the latest Intel® Ethernet Controller XL710 datasheet).

To enable RSS for GTPv1-U with an IPv4 payload, we need to map packet classifier type 22 to a DPDK flow type. Flow types are defined in rte_eth_ctrl.h; in DPDK 17.11 the predefined flow types occupy the values below 22, so new PCTYPEs can be mapped to flow types 22 and up. After the mapping is done, we can start the port again and enable RSS for flow type 22:

```
testpmd> port config 0 pctype mapping update 22 22
testpmd> port start 0
testpmd> start
testpmd> port config all rss 22
```

Figure 10. Mapping new PCTYPEs to DPDK flow types.

The 'port config 0 pctype mapping update 22 22' command calls rte_pmd_i40e_flow_type_mapping_update() to map new packet classifier type 22 to DPDK flow type 22 so that the 'port config all rss 22' command can enable RSS for this flow type.
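The mapping item passed to rte_pmd_i40e_flow_type_mapping_update() selects PCTYPEs through a bitmask, so mapping PCTYPE 22 to flow type 22 means setting bit 22. The sketch below builds such an item without linking against DPDK: `struct flow_type_mapping` is a local stand-in that we assume mirrors `struct rte_pmd_i40e_flow_type_mapping` (check your rte_pmd_i40e.h), and `build_mapping()` is a hypothetical helper. With the real struct, the item would then be passed as rte_pmd_i40e_flow_type_mapping_update(port, &item, 1, 0), keeping all other mappings unchanged.

```c
#include <stddef.h>
#include <stdint.h>

/* Local stand-in for struct rte_pmd_i40e_flow_type_mapping (assumed layout). */
struct flow_type_mapping {
    uint16_t flow_type; /* DPDK flow type, e.g. 22 */
    uint64_t pctype;    /* bitmask of hardware PCTYPEs: bit N selects PCTYPE N */
};

/*
 * Build one mapping item that maps every PCTYPE in `pctypes` to `flow_type`.
 * Returns -1 if a PCTYPE does not fit in the 64-bit mask, 0 otherwise.
 */
static int build_mapping(struct flow_type_mapping *item, uint16_t flow_type,
                         const unsigned *pctypes, size_t n)
{
    item->flow_type = flow_type;
    item->pctype = 0;
    for (size_t i = 0; i < n; i++) {
        if (pctypes[i] >= 64)
            return -1;
        item->pctype |= UINT64_C(1) << pctypes[i];
    }
    return 0;
}
```

Several PCTYPEs can share one flow type by setting more than one bit, for example, if an application wanted GTP-U with IPv4 and IPv6 payloads (PCTYPEs 22 and 23) to be handled by a single flow type.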

If we send GTP traffic again, we will see that packets are being classified as GTP and distributed to multiple queues:

```
port 0/queue 1: received 1 packets
src=00:01:02:03:04:05 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0x342ff376 - RSS queue=0x1 - hw ptype: L3_IPV4_EXT_UNKNOWN TUNNEL_GTPU INNER_L3_IPV4_EXT_UNKNOWN INNER_L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - VXLAN packet: packet type =32912, Destination UDP port =2152, VNI = 3272871 - Receive queue=0x1
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
port 0/queue 2: received 1 packets
src=00:01:02:03:04:05 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0xe3402ba5 - RSS queue=0x2 - hw ptype: L3_IPV4_EXT_UNKNOWN TUNNEL_GTPU INNER_L3_IPV4_EXT_UNKNOWN INNER_L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - VXLAN packet: packet type =32912, Destination UDP port =2152, VNI = 9072104 - Receive queue=0x2
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
port 0/queue 0: received 1 packets
src=00:01:02:03:04:05 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0x6a97ed3 - RSS queue=0x0 - hw ptype: L3_IPV4_EXT_UNKNOWN TUNNEL_GTPU INNER_L3_IPV4_EXT_UNKNOWN INNER_L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - VXLAN packet: packet type =32912, Destination UDP port =2152, VNI = 5877304 - Receive queue=0x0
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
port 0/queue 3: received 1 packets
src=00:01:02:03:04:05 - dst=00:10:20:30:40:50 - type=0x0800 - length=178 - nb_segs=1 - RSS hash=0x7d729284 - RSS queue=0x3 - hw ptype: L3_IPV4_EXT_UNKNOWN TUNNEL_GTPU INNER_L3_IPV4_EXT_UNKNOWN INNER_L4_UDP - sw ptype: L2_ETHER L3_IPV4 L4_UDP - l2_len=14 - l3_len=20 - l4_len=8 - VXLAN packet: packet type =32912, Destination UDP port =2152, VNI = 1459946 - Receive queue=0x3
ol_flags: PKT_RX_RSS_HASH PKT_RX_L4_CKSUM_GOOD PKT_RX_IP_CKSUM_GOOD
```

Figure 11. Distribution of GTP-U packets with GTPv1 profiles applied to the device.

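Now the Intel Ethernet 700 Series parser knows that packets with UDP destination port 2152 should be parsed as a GTP-U tunnel, and that extra fields should be extracted from the GTP and inner IP headers. Because the profile's hash input set for GTP-U is the TEID and inner IP addresses, each packet now gets a different RSS hash and the packets are spread across the queues.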
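The field extraction that the profile enables in hardware can be illustrated with a software parser. This is only a sketch of the idea, not how the adapter implements it: `parse_gtpu()` and `struct gtpu_fields` are hypothetical names, the GTPv1 header layout follows 3GPP TS 29.281 (flags, message type, length, TEID, then optional sequence number, N-PDU number, and next extension header type), and extension headers are simply rejected to keep it short. The input is the outer UDP payload.

```c
#include <stddef.h>
#include <stdint.h>

#define GTPU_UDP_PORT 2152  /* GTP-U UDP destination port, as used above */

struct gtpu_fields {
    uint32_t teid;       /* tunnel endpoint identifier */
    uint32_t inner_src;  /* inner IPv4 source address (host byte order) */
    uint32_t inner_dst;  /* inner IPv4 destination address (host byte order) */
};

/* Read a big-endian 32-bit value. */
static uint32_t get_be32(const uint8_t *p)
{
    return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 | (uint32_t)p[2] << 8 | p[3];
}

/*
 * Parse a GTPv1-U header at the start of a UDP payload and extract the TEID
 * and inner IPv4 addresses. Returns 0 on success, -1 if the packet is not a
 * GTPv1-U G-PDU carrying an IPv4 packet.
 */
static int parse_gtpu(uint16_t udp_dport, const uint8_t *p, size_t len,
                      struct gtpu_fields *out)
{
    size_t hdr_len = 8;                     /* mandatory part of the GTPv1 header */

    if (udp_dport != GTPU_UDP_PORT || len < hdr_len)
        return -1;
    if ((p[0] >> 5) != 1 || !(p[0] & 0x10)) /* version 1 and PT = 1 (GTP, not GTP') */
        return -1;
    if (p[1] != 0xFF)                       /* only G-PDU messages carry user packets */
        return -1;
    if (p[0] & 0x04)                        /* E flag: extension headers not handled */
        return -1;
    if (p[0] & 0x03)                        /* S or PN set: 4 optional bytes follow */
        hdr_len += 4;
    if (len < hdr_len + 20 || (p[hdr_len] >> 4) != 4)
        return -1;                          /* need a complete inner IPv4 header */
    out->teid = get_be32(p + 4);
    out->inner_src = get_be32(p + hdr_len + 12);
    out->inner_dst = get_be32(p + hdr_len + 16);
    return 0;
}
```

The packets in Figure 3 carry a sequence number, so their GTPv1 header is 12 bytes long and the inner IPv4 header starts right after it.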

If the profile is no longer needed, it can be removed from the network adapter and the original configuration restored:

```
testpmd> port stop 0
testpmd> ddp del 0 /home/pkg/gtp.bak
testpmd> ddp get list 0
Profile number is: 0
testpmd>
```

Figure 12. Removing GTPv1 profile from the device.

The 'ddp del 0 gtp.bak' command first loads the gtp.bak file to the memory buffer, then passes it to rte_pmd_i40e_process_ddp_package() but with the RTE_PMD_I40E_PKG_OP_WR_DEL operation, restoring the original configuration.

Summary

DDP provides a means to accelerate packet processing for different network segments: applying a DDP profile gives the network controller the functionality it needs, on demand. The same underlying infrastructure (servers with installed network adapters) can be used for optimized processing of traffic from different network segments (wireline, wireless, enterprise) without the need to reset network adapters or restart the server.

References

- Intel® Ethernet Controller XL710 datasheet

- Intel® Network Adapter Driver for PCIe 40 Gigabit Ethernet Network Connections Under Linux

- Intel Ethernet 700 Series Dynamic Device Personalization presentation at DPDK Summit, San Jose, November 2017, slides

- Intel Ethernet 700 Series firmware 6.01 or newer

- Synthetic pcap files with GTP traffic: the download link is at the top of this article

- GTPv1 (GPRS Tunnelling Protocol) dynamic device personalization profile for Intel Ethernet 700 Series is available here: Intel® Ethernet Controller X710/XXV710/XL710 Adapters Dynamic Device Personalization GTPv1 Package

- PPP (Point-to-Point Protocol) dynamic device personalization profile for Intel Ethernet 700 Series is available here: Intel® Ethernet Controller X710/XXV710/XL710 Adapters Dynamic Device Personalization PPPoE Package

- Telco/Cloud Purley Enablement Guide: Intel® Ethernet Controller 700 Series Dynamic Device Personalization Guide

- For information on additional DDP profiles, please contact your local Intel representative. The following DDP profiles are available for evaluation purposes: MPLSoGRE/MPLSoUDP with inner L2 packets, QUIC, L2TPv3, IPv4 Multicast, GRE without payload parsing.

About the Authors

Yochai Hagvi: Product enablement engineer in Network Platform Group at Intel, DDP FW technical lead.

Andrey Chilikin: Software Architect working on the development and adoption of new networking technologies and solutions for the telecom and enterprise communication industries.

Brian Johnson: Solutions Architect focusing on defining networking solutions and best practices in data center networking, virtualization, and cloud technologies.

Robin Giller: Software Product Manager in the Network Platform Group at Intel.