Kubernetes* plugins are changing how containerized applications are deployed and managed, extending orchestration to specialized hardware and helping optimize workflows.

The Intel device plugins framework for Kubernetes makes it easy to accelerate complex workloads by taking advantage of advanced hardware resources such as graphics processing units (GPUs), high-performance network interface cards (NICs), and field-programmable gate arrays (FPGAs). In this post we’ll provide an overview of the framework and share how we’re enabling device technologies upstream and downstream to make it easier for users to implement Intel solutions.

Overview of the device plugin framework

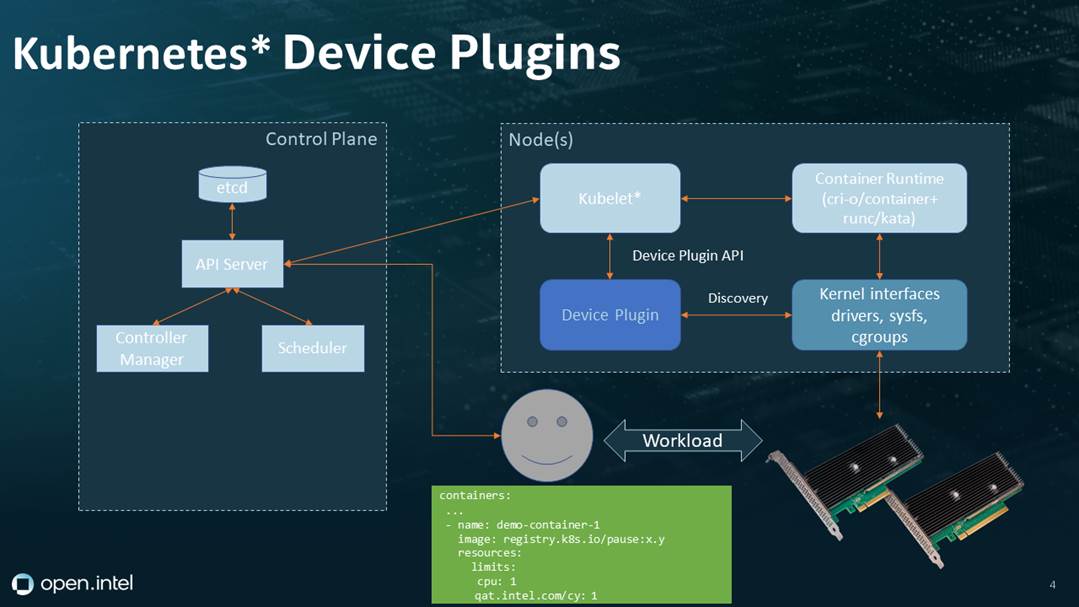

The device plugin framework allows vendors to register their hardware resources in Kubernetes, making them available to applications for use cases such as GPU-accelerated artificial intelligence and video transcoding, 5G network functions virtualization (NFV) deployments, and compression and data movement acceleration. The framework allows vendor plugins to be maintained outside the core Kubernetes code base and follows the same resource model as native resources such as CPU and memory. This model also delivers security benefits: containers can access the hardware allocated to them without running in privileged mode.
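To make the "same resource model" point concrete, here is a minimal sketch that builds a Pod manifest requesting one accelerator the same way it requests CPU and memory, while keeping the container unprivileged. The resource name `qat.intel.com/generic` and the container image are assumptions for illustration; the names your cluster exposes depend on the plugin and its version.

```python
import json

# Hypothetical example: the resource name "qat.intel.com/generic" and the
# container image are assumptions; check the plugin README for the names
# your plugin version advertises.
QAT_RESOURCE = "qat.intel.com/generic"


def accelerated_pod(name: str, image: str, resource: str, count: int = 1) -> dict:
    """Build a Pod manifest that requests `count` devices of an extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        # Device resources sit next to CPU and memory. If
                        # requests are omitted, they default to the limits.
                        "limits": {resource: count, "cpu": "1", "memory": "512Mi"},
                    },
                    # The container stays unprivileged; the device plugin
                    # exposes only the devices allocated to it.
                    "securityContext": {
                        "privileged": False,
                        "allowPrivilegeEscalation": False,
                    },
                }
            ]
        },
    }


pod = accelerated_pod("crypto-app", "registry.example.com/crypto-app:1.0", QAT_RESOURCE)
print(json.dumps(pod, indent=2))
```

The printed JSON can be applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML manifests.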

For example, to implement Intel® QuickAssist Technology (Intel® QAT) with cryptographic acceleration, users deploy the Intel® QAT device plugin, which registers with the kubelet on each node and reports the devices it manages. The kubelet then advertises those devices to the Kubernetes control plane as node resources, so the scheduler can see which nodes have Intel® QAT cryptographic resources available for end-user applications.

The registration process for an Intel® QAT device using the device plugin framework.

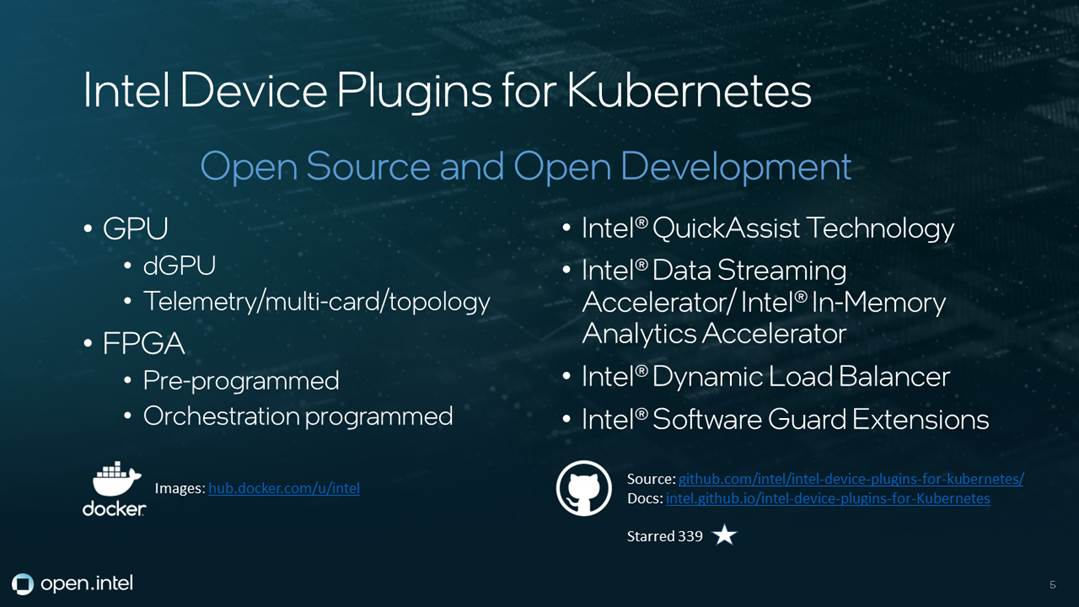
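The registration flow above can be modeled in a few lines. This is a deliberately simplified sketch, not the real gRPC device plugin API: plain Python objects stand in for the plugin's device list, a helper plays the kubelet's role of publishing healthy devices as allocatable resources, and a filter plays the scheduler's fit check. The resource name and device IDs are assumptions.

```python
from dataclasses import dataclass, field

# Simplified, hypothetical model of the device plugin flow. Real plugins talk
# to the kubelet over gRPC (Register, ListAndWatch, Allocate); plain Python
# objects stand in for those messages here.
HEALTHY, UNHEALTHY = "Healthy", "Unhealthy"
QAT = "qat.intel.com/generic"  # assumed resource name


@dataclass
class Device:
    device_id: str
    health: str = HEALTHY


@dataclass
class Node:
    name: str
    allocatable: dict = field(default_factory=dict)  # resource -> devices offered
    allocated: dict = field(default_factory=dict)    # resource -> devices in use


def kubelet_update(node: Node, resource: str, devices: list) -> None:
    """Publish a plugin's device list as node allocatable (healthy devices only)."""
    node.allocatable[resource] = sum(1 for d in devices if d.health == HEALTHY)


def schedulable_nodes(nodes: list, resource: str, count: int) -> list:
    """Return names of nodes with at least `count` free units of `resource`."""
    return [
        n.name
        for n in nodes
        if n.allocatable.get(resource, 0) - n.allocated.get(resource, 0) >= count
    ]


node_a, node_b, node_c = Node("node-a"), Node("node-b"), Node("node-c")
kubelet_update(node_a, QAT, [Device("qat-vf-0"), Device("qat-vf-1")])
kubelet_update(node_b, QAT, [Device("qat-vf-0", UNHEALTHY)])
# node-c runs no QAT plugin, so it never advertises the resource.

print(schedulable_nodes([node_a, node_b, node_c], QAT, 1))  # ['node-a']
```

The design point the sketch captures is that the scheduler never talks to the plugin directly; it only sees the counts the kubelet publishes.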

Intel device plugins for Kubernetes is an open source and open development project designed to help users access all Intel device plugins in one place. To support end-user adoption, the project publishes prebuilt container images on Docker Hub* that users can leverage instead of rebuilding the device plugins themselves.

- Visit the GitHub* repo for Intel device plugins

- Go to the Docker Hub container image library

- View the readme for Intel device plugins
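As a rough illustration of consuming a prebuilt image rather than building one, the sketch below generates a minimal DaemonSet manifest that runs a device plugin on every node. The repository name `intel/intel-qat-plugin` is our assumption of the Docker Hub image name and `X.Y.Z` is a placeholder tag; real deployments also mount device and sysfs paths, so the project's own deployment files remain the reference.

```python
import json

# Hypothetical, minimal DaemonSet running a prebuilt Intel device plugin image
# on every node. The repository name is an assumption and the tag is a
# placeholder; real deployments also mount device and sysfs paths.
PLUGIN_IMAGE = "intel/intel-qat-plugin"
PLUGIN_TAG = "X.Y.Z"  # placeholder: use a released tag from Docker Hub
SOCKET_DIR = "/var/lib/kubelet/device-plugins"  # kubelet's device plugin socket directory


def plugin_daemonset(name: str, image: str, tag: str) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": f"{image}:{tag}",
                            # The plugin registers with the kubelet through a
                            # Unix socket in this host directory.
                            "volumeMounts": [{"name": "kubeletsockets", "mountPath": SOCKET_DIR}],
                        }
                    ],
                    "volumes": [{"name": "kubeletsockets", "hostPath": {"path": SOCKET_DIR}}],
                },
            },
        },
    }


ds = plugin_daemonset("intel-qat-plugin", PLUGIN_IMAGE, PLUGIN_TAG)
print(json.dumps(ds, indent=2))
```

A DaemonSet fits here because each node needs its own plugin instance to report that node's local devices.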

Intel device plugins for Kubernetes is a one-stop shop for Intel accelerators. It now includes plugins for the integrated accelerators in 4th Generation Intel® Xeon® Scalable processors.

Three Types of Applications

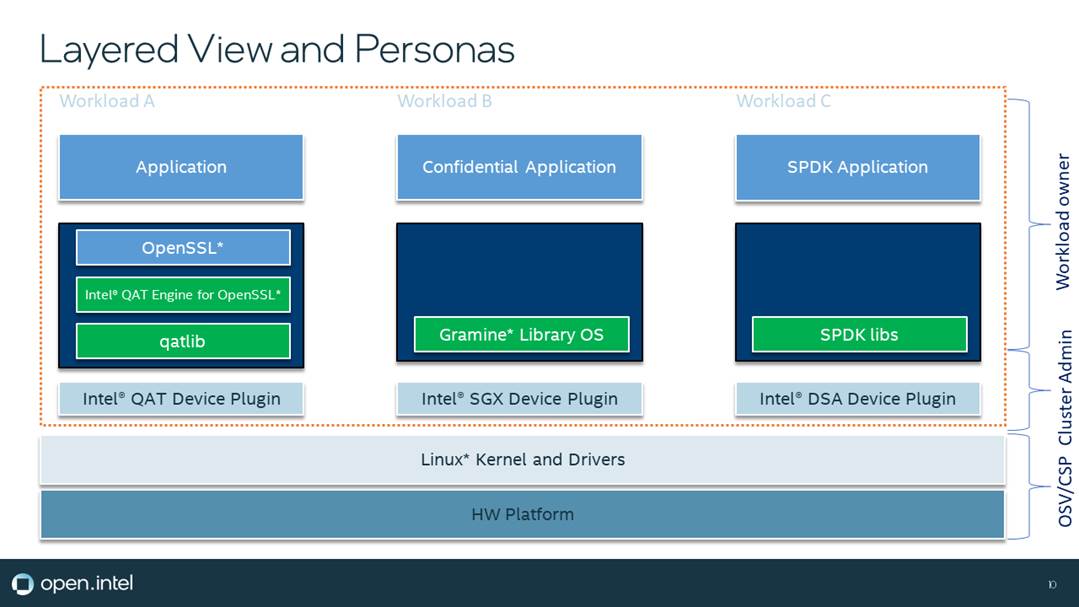

Deploying hardware-accelerated workloads involves three types of partners working together across the infrastructure: workload owners, cluster admins, and either operating system vendors (OSVs) or cloud service providers (CSPs).

In the application layer, workload owners deploy containerized applications that need access to hardware features, such as the three examples in the figure: Workload A uses Intel® QAT for crypto acceleration, Workload B uses Intel® Software Guard Extensions (Intel® SGX) to run confidential applications, and Workload C is a storage application that uses Intel® Data Streaming Accelerator (Intel® DSA) for data movement acceleration.
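One way to picture the workload owner's side is as a mapping from each example workload to the resources it declares. The sketch below is illustrative only: the resource names are assumptions modeled on the Intel device plugins and should be checked against each plugin's README. It also shows how those names tell the cluster admin which plugins the cluster needs.

```python
# Hypothetical mapping from the three example workloads to the extended
# resources they might request. The resource names are assumptions modeled on
# the Intel device plugins; verify them against each plugin's README.
BASE_LIMITS = {"cpu": "1", "memory": "256Mi"}

WORKLOAD_RESOURCES = {
    # Workload A: crypto acceleration with Intel QAT
    "workload-a": {"qat.intel.com/generic": 1},
    # Workload B: confidential application with Intel SGX (EPC memory + enclave access)
    "workload-b": {"sgx.intel.com/epc": "512Ki", "sgx.intel.com/enclave": 1},
    # Workload C: storage application using Intel DSA work queues
    "workload-c": {"dsa.intel.com/wq-user-dedicated": 1},
}


def container_limits(workload: str) -> dict:
    """Combine ordinary CPU/memory limits with the workload's device resources."""
    return {**BASE_LIMITS, **WORKLOAD_RESOURCES[workload]}


def required_plugins() -> list:
    """Resource-name prefixes tell the cluster admin which plugins to deploy."""
    return sorted({name.split("/")[0] for res in WORKLOAD_RESOURCES.values() for name in res})


for workload in WORKLOAD_RESOURCES:
    print(workload, container_limits(workload))
print(required_plugins())  # ['dsa.intel.com', 'qat.intel.com', 'sgx.intel.com']
```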

Hardware-aware containers are built without host operating system library dependencies; they only need the hardware driver to provide the kernel interface. In the middle layer, the cluster admin deploys and manages the device plugins, which advertise the cluster's hardware resources. At the bottom layer, the OSV or CSP is responsible for ensuring the necessary kernel drivers are in place. Some deployments also require an out-of-tree driver, which can be deployed by either the cluster admin or the OSV or CSP.
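Why does the container need only the kernel interface? When a pod is admitted, the device plugin's Allocate step tells the kubelet which device nodes to expose in the container. The sketch below is a simplified, hypothetical model: the dataclass mirrors the host path, container path, and permissions fields of the device plugin API's device spec, and the `/dev/dri` paths are assumed examples for an Intel GPU.

```python
from dataclasses import dataclass

# Simplified, hypothetical model of a device plugin's Allocate step. The real
# API is gRPC; this dataclass mirrors its DeviceSpec fields
# (container_path, host_path, permissions).


@dataclass(frozen=True)
class DeviceSpec:
    container_path: str
    host_path: str
    permissions: str  # cgroup device permissions, e.g. "rw" (no "m" for mknod)


# Device ID -> device nodes created by the kernel driver (assumed example
# paths for an Intel GPU).
GPU_NODES = {
    "card0": ["/dev/dri/card0", "/dev/dri/renderD128"],
    "card1": ["/dev/dri/card1", "/dev/dri/renderD129"],
}


def allocate(device_ids: list) -> list:
    """Return the device nodes to inject for the devices assigned to a container."""
    specs = []
    for dev in device_ids:
        for path in GPU_NODES[dev]:
            specs.append(DeviceSpec(container_path=path, host_path=path, permissions="rw"))
    return specs


for spec in allocate(["card1"]):
    print(spec.host_path, "->", spec.container_path, spec.permissions)
```

Only the nodes for the assigned device appear in the container, which is what lets an unprivileged workload use the hardware while other devices on the host stay out of reach.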

Three examples of applications using different hardware plugins: Intel® QAT device, Intel® SGX device, and Intel® DSA device.

Easy Device Enabling with the Intel Device Plugins Operator

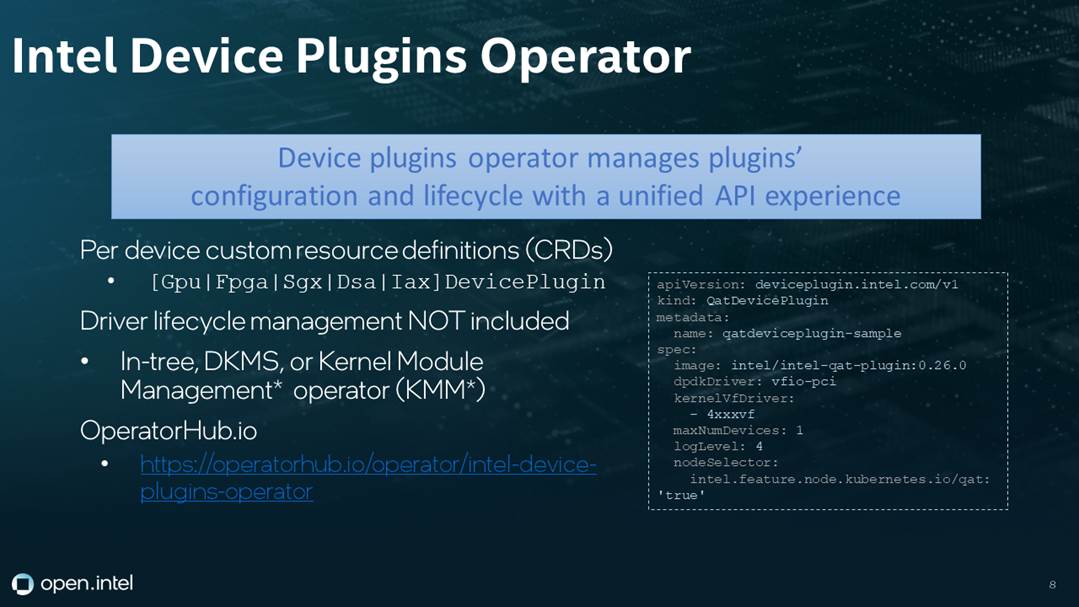

The Intel Device Plugins Operator is designed to help cluster admins enable devices. Written in Golang* using the Kubernetes operator pattern, it provides hardware-specific custom resource definitions that make it easier to install Intel device plugins and manage them across their life cycle. The operator supports several devices whose drivers are in the upstream Linux* kernel, such as the GPU, the FPGA, and Intel® SGX, and can be installed from OperatorHub.io.

The figure shows how the Intel Device Plugins Operator simplifies deploying an Intel® QAT device. Users specify the image name, image version, and Intel® QAT configuration parameters, and the operator deploys the plugin so the cluster can access the device.

A simplified Intel® QAT device deployment using the Intel Device Plugins Operator.
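The kind of input described above can be sketched as a custom resource for the operator. The field names below follow our reading of the project's sample `QatDevicePlugin` manifest and should be treated as assumptions; the tag is a placeholder, and the driver list and node label are examples to adapt to your cluster.

```python
import json

# Hypothetical QatDevicePlugin custom resource for the Intel Device Plugins
# Operator. Field names follow our reading of the project's sample manifest;
# the tag is a placeholder and the values are examples to adapt.
TAG = "X.Y.Z"  # placeholder release tag

qat_cr = {
    "apiVersion": "deviceplugin.intel.com/v1",
    "kind": "QatDevicePlugin",
    "metadata": {"name": "qatdeviceplugin-sample"},
    "spec": {
        # Image name and version of the prebuilt plugin.
        "image": f"intel/intel-qat-plugin:{TAG}",
        # Intel QAT configuration parameters (example values).
        "dpdkDriver": "vfio-pci",
        "kernelVfDrivers": ["4xxxvf"],
        "maxNumDevices": 1,
        # Run only on nodes labeled as having QAT (assumed NFD label).
        "nodeSelector": {"intel.feature.node.kubernetes.io/qat": "true"},
    },
}

print(json.dumps(qat_cr, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it hands the resource to the operator, which then creates and manages the plugin deployment.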

Making specific hardware features available across clusters

Device dependencies are resolved earlier in the stack, during cluster provisioning. Intel offers Node Feature Discovery (NFD) configurations that detect Intel hardware features on Kubernetes nodes and label the nodes accordingly, enabling more intelligent scheduling based on each node's capabilities.
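Here is a small sketch of how NFD labels steer placement. NFD publishes labels on nodes, and a pod's `nodeSelector` matches only nodes carrying every listed key with the same value. The node names and the `intel.feature.node.kubernetes.io/...` label keys are assumptions for illustration.

```python
# Simplified model of how Node Feature Discovery (NFD) labels steer scheduling.
# NFD publishes node labels; a pod's nodeSelector must match all of its
# key/value pairs. Node names and Intel label keys are assumptions.

NODE_LABELS = {
    "node-a": {"intel.feature.node.kubernetes.io/qat": "true", "kubernetes.io/arch": "amd64"},
    "node-b": {"intel.feature.node.kubernetes.io/sgx": "true", "kubernetes.io/arch": "amd64"},
    "node-c": {"kubernetes.io/arch": "amd64"},
}


def matches(node_selector: dict, labels: dict) -> bool:
    """nodeSelector semantics: every key must be present with the same value."""
    return all(labels.get(k) == v for k, v in node_selector.items())


def eligible(node_selector: dict) -> list:
    """Names of nodes whose labels satisfy the selector."""
    return [name for name, labels in NODE_LABELS.items() if matches(node_selector, labels)]


print(eligible({"intel.feature.node.kubernetes.io/sgx": "true"}))  # ['node-b']
```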

Provisioning is more complicated for use cases that involve new or out-of-tree dependencies. For instance, the Intel® Data Center GPU Flex Series relies on Red Hat Enterprise Linux* (RHEL*) backporting; the cluster dependencies and security requirements are then configured so that CoreOS* and Red Hat OpenShift* can complete driver container image provisioning using the Kernel Module Management (KMM) Operator*. Once the driver and its dependencies are enabled, OSVs and cluster admins can use the Intel Device Plugins Operator to configure the device plugins the same way they would for a device with in-tree dependencies.
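To illustrate the driver container step, here is a simplified, hypothetical model of how KMM picks a driver image for a node. A KMM `Module` lists kernel mappings, each with either a literal kernel version or a regular expression plus a container image that may reference `${KERNEL_FULL_VERSION}`. In this sketch the first matching mapping wins; the registry, image names, and kernel versions are made up.

```python
import re
from string import Template

# Hypothetical, simplified model of how the Kernel Module Management (KMM)
# Operator picks a driver container image for a node. Each mapping has either
# a literal kernel version or a regexp, plus a containerImage that may use
# ${KERNEL_FULL_VERSION}. In this sketch the first matching mapping wins.
# Registry and image names are made up.

KERNEL_MAPPINGS = [
    {"literal": "5.14.0-284.11.1.el9_2.x86_64",
     "containerImage": "registry.example.com/gpu-driver:pinned-${KERNEL_FULL_VERSION}"},
    {"regexp": r"^.+\.el9.*\.x86_64$",
     "containerImage": "registry.example.com/gpu-driver:${KERNEL_FULL_VERSION}"},
]


def driver_image(kernel_version: str):
    """Return the driver image for a node's kernel, or None if nothing matches."""
    for mapping in KERNEL_MAPPINGS:
        if "literal" in mapping:
            hit = mapping["literal"] == kernel_version
        else:
            hit = re.search(mapping["regexp"], kernel_version) is not None
        if hit:
            return Template(mapping["containerImage"]).substitute(
                KERNEL_FULL_VERSION=kernel_version
            )
    return None  # no mapping: in this model, no driver is loaded on the node


print(driver_image("5.14.0-284.30.1.el9_2.x86_64"))
```

Keying the image on the full kernel version lets one `Module` serve nodes running different kernels, each with a driver built for its own kernel.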

Intel also provides frameworks and tools to enable AI-based Intel technology for cloud-native workloads. For example, Intel works with Red Hat* to deploy test frameworks and tools using Intel’s operator and driver release candidates. We also frequently test pre-release code on Intel clusters, as the OpenVINO* and oneAPI library teams did to improve their framework operator before release. This early integration testing also helped Intel identify opportunities to improve the operator configuration and framework to better support workloads. Red Hat’s managed data science solution for machine learning, Red Hat OpenShift Data Science* (RHODS*), currently supports Habana® accelerators and NVIDIA* GPUs, and Red Hat is working with Intel to complete full integration of Intel discrete GPUs (dGPUs).

Red Hat OpenShift Use Cases

The first integrated solution was Intel® SGX, in spring 2022, and support for the Intel® Data Center GPU Flex Series was added recently. Intel also recently released the Intel® QAT 2.0 firmware, which is included in RHEL and CoreOS, and we’re completing its integration into the certified Intel Device Plugins Operator. Device driver provisioning is also moving from resource-specific operators to KMM, which reached general availability (GA) with the OpenShift 4.12 release.

The certified Intel Device Plugins Operator is available and can be easily provisioned through the Red Hat Ecosystem Catalog. Intel works with Microsoft Azure* to support Intel® SGX-enabled secure enclaves and is helping develop a secure access service edge (SASE) for communications service providers that uses Intel® QAT for cryptographic acceleration. Last fall, Intel helped ACL Digital* deliver a more secure communication path between services in a commercial 5G control plane by using Intel® SGX enclaves and the network and edge bare metal reference architecture, which bundles multiple Intel features together for customer integration and testing.

If you’d like to follow the open source projects, see Intel® Technology Enabling for OpenShift* and the Intel® Data Center GPU Driver for OpenShift* on GitHub.

SUSE Rancher* Use Cases

The device plugin operator is just as easy to provision from the SUSE Rancher marketplace using Helm* charts. Intel® QAT is currently available for early adoption, enabling use cases such as Istio* service mesh integration and Transport Layer Security (TLS) handshake acceleration. Intel® SGX support is expected soon; you can see it demonstrated in a talk first presented at KubeCon* + CloudNativeCon* Europe 2023.

Working upstream to support more-complex use cases

While the device plugin framework is effective for resources that are simple and local to a node, more complex use cases need a different model. Intel has been working upstream to build Dynamic Resource Allocation (DRA), which enables these more advanced resources; more details are available in a separate post.

About the Presenters

Mikko Ylinen is a cloud and edge software architect on Intel’s cloud software open source team. He has a background in embedded Linux and operating systems engineering, and most recently he has worked on security and confidential computing topics in containers and cloud infrastructure. In his free time, he enjoys ultra distance sports.

Michael Kadera leads customer enabling of cloud orchestration solutions, cloud engineering, and teams supporting the end-to-end integration of cloud-native workloads that use Intel features. He has extensive experience leading Intel IT open cloud programs in the design and implementation of private and hybrid cloud solutions and distributed enterprise application development. His team delivers deep technical solutions and shares architecture knowledge across a wide spectrum of cloud practices, including infrastructure orchestration, security and compliance, multi-tenancy, and scalability of edge-to-cloud applications.