John Stone

Integrated Computer Solutions, Inc.

Introduction

In this article we’ll make a performance comparison between a seventh generation Intel® Core™ i7-7700K processor and a seventh generation Intel® Core™ i5-7600K processor. We will do so using two identical systems provided by Intel, each containing 32 GB of RAM, an Intel® Solid State Drive 240 GB 540s Series, and an NVIDIA* GTX1080 video card. Both systems currently run Windows® 10 and have been updated to the latest drivers. We’ll first compare performance using two industry-standard benchmarks, the AnandTech* 3D Particle Movement benchmark and the Maxon* Cinebench* 15 benchmark. Then we’ll create a new benchmark that has roots in visualization and virtual reality, which is available as a download by clicking the link at the top of the article.

Now that we have our test bed, let’s go over what we can expect to see. Exactly what is the difference between a seventh generation Intel Core i7-7700K processor and a seventh generation Intel Core i5-7600K processor? The answer can be found by going to Intel’s website and looking at the specs for each.

I used the Export Specifications link at the top-right of each page and combined both into a single Excel* spreadsheet. I used Excel’s Conditional Formatting to highlight the exact differences between the processors. Ignoring things like model number, price, and thermal information, this is what I found:

| Feature | Intel® Core™ i5 Processor | Intel® Core™ i7 Processor | Difference |
|---|---|---|---|
| # of Cores | 4 | 4 | --- |
| # of Threads | 4 | 8 | 2.00x |
| Processor Base Frequency (GHz) | 3.8 | 4.2 | 1.11x |
| Max Turbo Frequency (GHz) | 4.2 | 4.5 | 1.07x |
| Intel® Smart Cache (MB) | 6 | 8 | 1.33x |

Examining the table, we can see that the Intel Core i7 processor has a slightly higher clock frequency (7–11 percent) and a bigger cache (33 percent more), but by far the biggest difference is the number of threads (two times more). Notice that both processors have four cores, but the Intel Core i7 processor has a bit of extra hardware that lets each core run two threads, so it appears to the operating system as eight logical processors. This is Intel® Hyper-Threading Technology (Intel® HT Technology), which helps make better use of all parts of a CPU.

From Wikipedia:

“For each processor core that is physically present, the operating system addresses two virtual (logical) cores and shares the workload between them when possible. The main function of hyper-threading is to increase the number of independent instructions in the pipeline; it takes advantage of superscalar architecture, in which multiple instructions operate on separate data in parallel. With HTT, one physical core appears as two processors to the operating system, allowing concurrent scheduling of two processes per core. In addition, two or more processes can use the same resources: if resources for one process are not available, then another process can continue if its resources are available.”

So, what this means is that in single-threaded workloads we would only expect to see the difference from the Intel Core i7 processor’s higher clock, or about a 7–11 percent speed-up, but when we have a heterogeneous workload that can be solved using parallel techniques, we’d expect to see a significant speed-up on the Intel Core i7 processor. Note: Heterogeneous workloads are required so that different parts of the CPU, like branch/predict and multiply/add, can be used at the same time, thus taking full advantage of Intel HT Technology. It is important to remember there are only four real cores in both processors; the Intel Core i7 processor just allows each of them to be shared between two threads simultaneously.
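The expectations above can be checked with a little arithmetic. The following is a minimal sketch, not part of the article’s source: the helper names `clockRatio`, `threadRatio`, and `logicalProcessors` are made up for illustration, and the frequencies passed in are the ones from the specification table. `std::thread::hardware_concurrency()` is the standard library call that reports how many hardware thread contexts the operating system exposes; it is only a hint and may return 0.

```cpp
#include <cassert>
#include <thread>

// Illustrative helper (hypothetical name): ratio between two clock
// frequencies given in GHz, e.g. 4.2 / 3.8 for the base clocks.
constexpr double clockRatio(double i7GHz, double i5GHz) {
    return i7GHz / i5GHz;
}

// Illustrative helper (hypothetical name): ratio between logical processor
// counts, e.g. 8 / 4. This is only an upper bound on what extra hardware
// threads could contribute, since both chips have four physical cores.
inline double threadRatio(unsigned i7Threads, unsigned i5Threads) {
    assert(i5Threads != 0);
    return static_cast<double>(i7Threads) / i5Threads;
}

// Number of logical processors visible to this program.
// hardware_concurrency() may return 0 when the value is unknown,
// so fall back to 1 in that case.
inline unsigned logicalProcessors() {
    const unsigned n = std::thread::hardware_concurrency();
    return n != 0 ? n : 1u;
}
```

With the table’s values, `clockRatio(4.2, 3.8)` is roughly 1.105 and `clockRatio(4.5, 4.2)` is roughly 1.071, which is where the 7–11 percent single-threaded estimate comes from. On the two test systems one would expect `logicalProcessors()` to report 4 and 8 respectively, although the value is ultimately whatever the operating system exposes.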

Standard Benchmarks

Okay, let us run some industry-standard benchmarks on the two systems and see if our reasoning has any relationship with reality. I picked two different benchmarks for this vetting.

The first comes from AnandTech and is based on simulating random movement of physical particles.

The second benchmark, the venerable Cinebench* 15, comes from Maxon. I ran both benchmarks on my Intel-provided hardware and created these tables to summarize the results. Note: ST is single-threaded and MT is multi-threaded.

| ST | Intel® Core™ i5 Processor | Intel® Core™ i7 Processor | Difference |
|---|---|---|---|
| 3D Particle Movement | | | |
| trig | 47.3905 | 50.949 | 1.08x |
| bipy | 40.861 | 43.7562 | 1.07x |
| polarreject | 21.1323 | 22.6415 | 1.07x |
| cosine | 16.2957 | 17.4326 | 1.07x |
| hypercube | 8.744 | 9.355 | 1.07x |
| normdev | 6.5789 | 7.0894 | 1.08x |
| Cinebench* 15 | | | |
| cpu | n/a | n/a | n/a |

| MT | Intel® Core™ i5 Processor | Intel® Core™ i7 Processor | Difference |
|---|---|---|---|
| 3D Particle Movement | | | |
| trig | 122.8053 | 232.7907 | 1.90x |
| bipy | 136.509 | 266.2884 | 1.95x |
| polarreject | 82.3286 | 165.2374 | 2.01x |
| cosine | 50.1714 | 101.2858 | 2.02x |
| hypercube | 36.5688 | 73.6832 | 2.01x |
| normdev | 26.1227 | 52.2271 | 2.00x |
| Cinebench* 15 | | | |
| cpu | 694 | 990 | 1.43x |

We can tell by looking at these numbers that the 3D Particle Movement benchmark is perfectly parallel and heterogeneous in its implementation, giving us nearly theoretical improvement numbers, while Cinebench gives us a somewhat smaller improvement, which reflects the kind of results you can expect in the real world.

Custom Benchmarks

Okay so far, so good. Now, how can we take what we have learned so far and create a new benchmark that has roots in visualization and virtual reality? What we need is a problem in graphics that can be easily decomposed into a parallel workflow but also can produce interesting visualization results. After much thinking, I decided to take a page out of AnandTech’s playbook and create a graphical particle system. I then spent some time trawling the Internet (thanks, Google*) looking at various implementations of particle systems. Time and again I found code derived from or inspired by the work David McAllister did back in 1997 in his paper The Design of an API for Particle Systems.

McAllister’s Paper

Following this trend, I decided to implement our benchmark using the ideas described in McAllister’s paper, but while doing so also taking into consideration the differences between the Intel Core i5 processor and Intel Core i7 processor described above.

Note: McAllister describes a free library of code in the paper, but trying to find a copy of it today turned out to be very difficult since the website from which he distributed it went dark in the early 2000s. I was eventually able to find two copies of his code version 1.2 included as libraries in other free software, and while the work I’m describing here was inspired by that work, my work is a complete reimplementation of his ideas in a modern C++ 14 application.

Data Structure

The first thing I realized was that I really needed to optimize the particle state data structure to align with the cache-line requirements of these Intel processors. Both the Intel Core i5 processor and Intel Core i7 processor have 64 bytes per cache-line, and it is important to fit the particle state data evenly in a cache-line for optimal performance. Another thing I wanted to do was 16-byte align the vector items to match the data alignment requirements for single instruction, multiple data (SIMD) instructions.

After going back and forth for a while (most particle systems use non-multiples of 32/64 for their particle state) this is what I was able to come up with:

#include <cstdint>
#include <glm/vec3.hpp>
#include <glm/vec4.hpp>

using vector = glm::vec3;
using color = glm::tvec4<uint8_t, glm::packed>;

struct AOS {
    vector pos; // 12 bytes; position<x,y,z> -- 16-byte aligned
    color clr;  // 4 bytes; color
    vector vel; // 12 bytes; velocity<x,y,z> -- 16-byte aligned
    float age;  // 4 bytes; age
}; // 32 bytes; total -- 2 particles per cache-line

static_assert(32==sizeof(AOS), "32 != sizeof(AOS)");

Now, for optimal performance and to show the Intel Core i7 processor in the best light possible, I decided to size my particle pool to the Intel Core i7 processor’s L3 cache size. This is what I came up with:

// core i7 cache: L2=256 KB, L3=8 MB
// core i5 cache: L2=256 KB, L3=6 MB
static constexpr int nParticles = ((8)*(1024*1024) - (1024)*(4)) / sizeof(AOS);

Notice how I reserved 4 KB of the available 8 MB of cache space for the variables and data needed to implement the behavior of the particle system, while using the rest of the space for the particle data pool.
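The sizing arithmetic can be reproduced without GLM. The following is a minimal sketch under stated assumptions: `Particle32` is a stand-in for the `AOS` struct that uses plain arrays instead of the GLM types, and the constant and function names (`kCacheLineBytes`, `kL3Bytes`, `kReservedBytes`, `poolSize`, `poolBytes`) are made up for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for the article's AOS particle (hypothetical name). Plain arrays
// replace the GLM vector types so the sketch has no external dependencies.
struct Particle32 {
    float        pos[3]; // 12 bytes
    std::uint8_t clr[4]; //  4 bytes
    float        vel[3]; // 12 bytes
    float        age;    //  4 bytes
};
static_assert(sizeof(Particle32) == 32, "particle must be 32 bytes");

constexpr std::size_t kCacheLineBytes = 64;                // bytes per cache line
constexpr std::size_t kL3Bytes        = 8u * 1024u * 1024u; // 8 MB of Intel Smart Cache
constexpr std::size_t kReservedBytes  = 4u * 1024u;         // 4 KB kept for other working data

// A whole number of particles must tile each cache line.
static_assert(kCacheLineBytes % sizeof(Particle32) == 0,
              "particles must fit evenly in a cache line");

constexpr std::size_t particlesPerLine() {
    return kCacheLineBytes / sizeof(Particle32);
}

// Same arithmetic as nParticles: (8 MB - 4 KB) / 32 bytes.
constexpr std::size_t poolSize() {
    return (kL3Bytes - kReservedBytes) / sizeof(Particle32);
}

// Bytes occupied by `count` particles; the caller must stay inside the budget.
inline std::size_t poolBytes(std::size_t count) {
    assert(count <= poolSize());
    return count * sizeof(Particle32);
}
```

Under these assumptions the pool holds 262,016 particles, two per cache line, and the pool plus the 4 KB reserve adds up to exactly 8 MB.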

Now, what we are going to be doing is running through this particle pool performing calculations on and making updates to this particle data to create the behavior of the particles through time, which is the essence of implementing a particle system.

One further optimization we can do is tell the compiler that pointers to the particles are not going to be aliases of each other; thus, we define our particle iterators to be:

using item = AOS;
using iterator = item* __restrict;
using reference = item& __restrict;

What we have built is a highly optimized data structure of particle state data. Now let’s consider the types of operations we need to perform to bring it to life.
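To illustrate what the no-alias promise buys, here is a minimal sketch of an update loop over such a pool. It is not the article’s implementation: `Particle` is a GLM-free stand-in for `AOS`, and `applyGravity` is a hypothetical function. Because both the gravity vector and the particle pointers are declared `__restrict`, the compiler may assume that writing to a particle never changes `g`, so it is free to keep `g` in registers across the loop. Note that `__restrict` is a compiler extension (accepted by MSVC, GCC, and Clang), not standard C++.

```cpp
#include <cassert>
#include <cstdint>

// GLM-free stand-in for the article's AOS particle (hypothetical name).
struct Particle {
    float        pos[3];
    std::uint8_t clr[4];
    float        vel[3];
    float        age;
};

// Same shape as the article's iterator alias; __restrict is a compiler
// extension, not standard C++.
using iterator = Particle* __restrict;

// Hypothetical action: accelerate every particle in [first, last) by the
// gravity vector g (three floats) over dt seconds, and age it by dt.
// The caller promises that g does not point into the particle pool.
inline void applyGravity(float dt, const float* __restrict g,
                         iterator first, iterator last) {
    assert(g != nullptr);
    for (; first != last; ++first) {
        first->vel[0] += g[0] * dt;
        first->vel[1] += g[1] * dt;
        first->vel[2] += g[2] * dt;
        first->age    += dt;
    }
}
```

The promise is the caller’s responsibility: passing a `g` that actually points into the pool would be undefined behavior rather than a compile error.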

Operations

Generalizing and simplifying the ideas in McAllister’s paper leads us to the following conclusions:

- A particle system must have a source which is responsible for initializing/creating particles and adding them to the simulation. This leads to the concept of a particle source.

- When particles are initialized/created different operations must be performed on the different members of the particle state data. This leads to the concept of a particle initializer.

- Active particles must have their state updated through time. Each state can be updated in several unique ways, depending on the type of simulation being run (including the death of a particle). This leads to the concept of a particle action.

- This simulated pool of data needs to be visualized by something. This leads to the concept of a particle renderer.

I went back and forth on the most optimal way to implement these concepts in C++. At first I thought that using templated std::function<> objects would be the best, but it turns out the mechanism used in its type-erasure property is really bad for performance. This, coupled with the fact that C++ compilers have been optimizing virtual function dispatch for decades, led me to the following design:

struct Source { virtual iterator operator()(time, iterator, iterator) = 0; };
struct Initializer { virtual void operator()(time, iterator) = 0; };
struct Action { virtual void operator()(time, iterator) = 0; };
struct Renderer {
    virtual void* operator()(time, iterator, iterator, bool&) = 0;
    virtual void* allocate(size_t) = 0;
};

// A system has all the components to run a collection of particles
struct System
{
    System(
        Source* &&,
        std::vector<Action*>&&,
        Renderer* &&
    );
    bool frame(time dt);
};

So, the idea in these interfaces is that each operation is given a time and either a single iterator (one particle, for initializers and actions) or a pair of iterators (begin and end, for sources and renderers) to use in implementing its behavior. Also notice how the interfaces in Renderer return “void*”. This is a side effect of deciding to use optimized data-upload techniques in the rendering system (OpenGL* ARB Storage), which allows shared memory use between the CPU and GPU.
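The article does not show how `System::frame` drives these interfaces, so the following is only a sketch of one plausible arrangement, with the renderer left out. Everything in the `sketch` namespace is an assumption made for illustration: the GLM-free `Particle`, the `Burst` source, the bodies of `Gravity` and `Move`, and the choice to run every action on one particle before moving to the next.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

namespace sketch {

using time = float; // seconds; the article's real time type is not shown
struct Particle { float pos[3]; unsigned char clr[4]; float vel[3]; float age; };
using iterator = Particle*;

struct Source { virtual ~Source() = default;
                virtual iterator operator()(time, iterator, iterator) = 0; };
struct Action { virtual ~Action() = default;
                virtual void operator()(time, iterator) = 0; };

// Hypothetical source: emits up to perFrame particles into the free range
// [firstFree, poolEnd) and returns the new end of the live range.
struct Burst : Source {
    std::size_t perFrame;
    explicit Burst(std::size_t n) : perFrame(n) {}
    iterator operator()(time, iterator firstFree, iterator poolEnd) override {
        for (std::size_t i = 0; i < perFrame && firstFree != poolEnd; ++i, ++firstFree)
            *firstFree = Particle{{0.f, 0.f, 0.f}, {255, 255, 255, 255}, {0.f, 0.f, 1.f}, 0.f};
        return firstFree;
    }
};

// Hypothetical action bodies: accelerate, then integrate position and age.
struct Gravity : Action {
    float g[3];
    Gravity(float x, float y, float z) : g{x, y, z} {}
    void operator()(time dt, iterator p) override {
        for (int i = 0; i < 3; ++i) p->vel[i] += g[i] * dt;
    }
};
struct Move : Action {
    void operator()(time dt, iterator p) override {
        for (int i = 0; i < 3; ++i) p->pos[i] += p->vel[i] * dt;
        p->age += dt;
    }
};

struct System {
    std::vector<Particle> pool;   // fixed-size particle pool
    std::size_t live = 0;         // particles [0, live) are active
    Source* source;
    std::vector<Action*> actions;

    System(std::size_t capacity, Source* s, std::vector<Action*> a)
        : pool(capacity), source(s), actions(std::move(a)) { assert(source != nullptr); }

    // One simulation step: let the source add particles, then run every
    // action on each live particle while that particle is hot in cache.
    void frame(time dt) {
        iterator begin = pool.data();
        iterator end   = begin + pool.size();
        live = static_cast<std::size_t>((*source)(dt, begin + live, end) - begin);
        for (iterator p = begin; p != begin + live; ++p)
            for (Action* a : actions) (*a)(dt, p);
    }
};

} // namespace sketch
```

A caller would construct the pieces, for example `sketch::System sys{4, &src, {&gravity, &move}};`, and call `sys.frame(dt)` once per frame. Killing particles and handing the live range to a renderer are deliberately omitted here.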

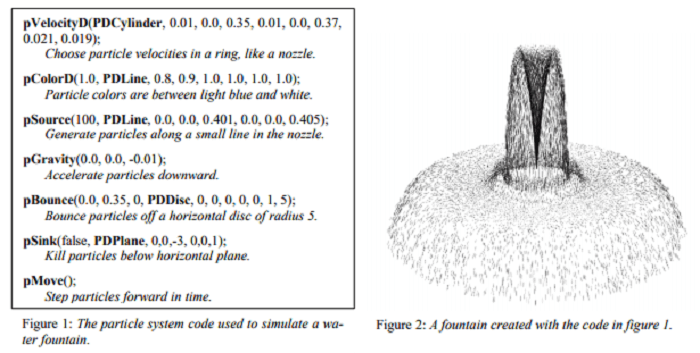

Duplicating McAllister’s Particle Fountain

Now that we’ve got a data structure and an interface, let us see how we can go about creating the particle fountain example shown in Figure 2 in McAllister’s paper. Figure 1 shows the code used in creating this effect, and our job is to create modern/performant C++ code to implement the functions shown there.

Actions and Domains

The first thing to note is that each field in the particle state structure is being associated with a different geometric object (PDCylinder and PDLine). Furthermore, notice that some actions are also associated with a geometry (PDDisc, PDPlane). McAllister calls these geometrical items domains because they describe the mathematical domain from which, or on which, the various fields are being operated. For example, particle colors are being randomly chosen along a line in color-space between {0.8,0.8,1.0} and {1.0,1.0,1.0}, particles are being bounced off (with resilience 0.35) a disc at {0,0,0} in the X-Y plane with radius 0.5, and particles below a plane at {0,0,-3} facing up along the Z-axis are being destroyed.
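As a concrete illustration of the two roles a domain plays, here is a minimal, GLM-free sketch. It is not the article’s code: `vec3`, `LineDomain`, and `PlaneDomain` are hypothetical names, and passing the random number generator into `emit` is a simplification chosen to keep the example deterministic and testable. The line domain generates a value (a random point between two endpoints, as with the color range above), while the plane domain answers a membership question (is this point on the side the normal faces?).

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <random>

using vec3 = std::array<float, 3>;

inline float dot(const vec3& a, const vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Line-segment domain: emit() writes a uniformly random point between
// p1 and p2 into out.
struct LineDomain {
    vec3 p1, p2;

    template <typename Rng>
    void emit(vec3& out, Rng& rng) const {
        const float t = std::uniform_real_distribution<float>(0.f, 1.f)(rng);
        assert(t >= 0.f && t <= 1.f);
        for (std::size_t i = 0; i < 3; ++i)
            out[i] = p1[i] + t * (p2[i] - p1[i]);
    }
};

// Plane domain: p is a point on the plane and n is its normal.
// isIn() is true for points on the plane or on the side n faces.
struct PlaneDomain {
    vec3 p, n;

    bool isIn(const vec3& v) const {
        const vec3 d{v[0] - p[0], v[1] - p[1], v[2] - p[2]};
        return dot(d, n) >= 0.f;
    }
};
```

With a plane through {0,0,-3} facing up the Z-axis, a particle at the origin is inside the domain and one at {0,0,-4} is not, which is the test a sink would use to decide which particles to destroy.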

I encapsulate these concepts this way:

struct Domain
{
    bool isIn(vector const&) const;     // is this vector in this domain?
    template<typename T> void emit(T&); // emit some value from this domain.
};

Here are some implementations of this concept:

namespace Particle { namespace Domains {

struct Color
{
    color c1, c2;
    Color(color const&, color const&);
    void emit(color&);
};

struct Line
{
    vector p1, p2;
    Line(vector const&, vector const&);
    void emit(vector&);
};

struct Plane
{
    vector p, u, v, n; vector::value_type d;
    Plane(vector const&, vector const&, vector const&);
    bool isIn(vector const&) const;
    void emit(vector&);
};

struct Disc
{
    vector p, n; vector::value_type r1, r2, d;
    Disc(vector const&, vector const&, vector::value_type r1, vector::value_type r2);
    void emit(vector&);
};

struct Cylinder
{
    vector p1,p2,u,v; vector::value_type r1, r2;
    Cylinder(vector const&, vector const&, vector::value_type r1, vector::value_type r2);
    void emit(vector&);
};

}}

And then I templatize my actions that need a domain with a specific type of domain:

namespace Particle { namespace Actions {

struct Gravity : Action {
    vector g;
    Gravity(vector const&);
    void operator()(time, iterator) override;
};

struct Move : Action {
    void operator()(time, iterator) override;
};

template<typename Domain>
struct Bounce : Action {
    Domain domain;
    vector::value_type oneMinusFriction, resilience, cutoff;
    Bounce(vector::value_type friction, vector::value_type resilience, vector::value_type cutoff, Domain&&);
    void operator()(time, iterator) override;
};

template<typename Domain>
struct SinkPosition : Action {
    Domain domain;
    bool kill_inside;
    SinkPosition(bool, Domain&&);
    void operator()(time, iterator) override;
};

} }

Sources

Now that we have the design of the actions fleshed out, let us turn our attention to the source. A source is something that emits particles, and to actually emit them it must initialize each field in the particle state to a value consistent with some known effect. I’ve created two sources. Flow emits particles at a given rate in particlesPerSecond, while Max emits all available particles:

namespace Particle { namespace Sources {

struct Flow : Source
{
    Flow(double particlesPerSecond, std::vector<Initializer*>&& initializers);
    iterator operator()(time, iterator, iterator) override;
};

struct Max : Source
{
    Max(std::vector<Initializer*>&& initializers);
    iterator operator()(time, iterator, iterator) override;
};

}}

And here are the initializers I’ve implemented, one for each field in the particle state. Notice how they are templatized on a domain and use the domain’s emit() interface to initialize the particle state:

namespace Particle { namespace Initializers {

template<typename Domain>
struct Position : Initializer
{
    Domain d;
    Position(Domain&& _) : d(_) {}
    void operator()(time, iterator v) override { d.emit(v->pos); }
};

template<typename Domain>
struct Color : Initializer
{
    Domain d;
    Color(Domain&& _) : d(_) {}
    void operator()(time, iterator v) override { d.emit(v->clr); }
};

template<typename Domain>
struct Velocity : Initializer
{
    Domain d;
    Velocity(Domain&& _) : d(_) {}
    void operator()(time, iterator v) override { d.emit(v->vel); }
};

template <typename Domain>
struct Age : Initializer
{
    Domain d;
    Age(Domain&& _) : d(_) {}
    void operator()(time, iterator v) override { d.emit(v->age); }
};

}}

Renderers

The last thing to consider is the renderers. I have implemented three of them. Null, as the name says, does nothing; it is used when we are running our performance benchmark. OpenGL is a renderer that displays the particle system in a window, and Oculus* is a renderer that displays the particle system in virtual reality:

namespace Particle { namespace Renderers {

struct Null : Renderer
{
    void* allocate(size_t) override;
    void* operator()(time, iterator, iterator, bool&) override;
};

struct OpenGL : Renderer
{
    void* allocate(size_t) override;
    void* operator()(time, iterator, iterator, bool&) override;
};

struct Oculus : Renderer
{
    void* allocate(size_t) override;
    void* operator()(time, iterator, iterator, bool&) override;
};

}}

Putting it All Together

Now we finally have all the pieces we need to create a particle fountain like the one in Figure 2 of McAllister’s paper. In main.cpp we have:

// std::chrono demands this if you want to use the "min" literal operator
using namespace std::chrono;

#define VISUAL 1
#define OCULUS 0

int main(int argc, char const* argv[])
{
    // Set our priority high to get consistent performance
    if (!SetPriorityClass(GetCurrentProcess(), HIGH_PRIORITY_CLASS))
        fprintf(stderr, "YIKES! Can't set HIGH_PRIORITY_CLASS -- %08X\n", GetLastError());

    // Create the particle system
    Particle::System particles{
        // Source -- initializers, one for each attribute
#if VISUAL
        new Particle::Sources::Flow{ 10240*3/2, {
#else
        new Particle::Sources::Max{ {
#endif
            new Particle::Initializers::Age <Particle::Domains::Constant> { 0.f },
            new Particle::Initializers::Position<Particle::Domains::Point >{{ {0,0,0.15} }},
            new Particle::Initializers::Velocity<Particle::Domains::Cylinder>{{ {0.0,0,0.85}, {0.0,0,1.97}, 0.21f, 0.19f }},
            new Particle::Initializers::Color <Particle::Domains::Color >{{ {255,0,0,255}, {0,0,0,255} }},
        }},
        { // Actions
            new Particle::Actions::Gravity{{0,0,-1.8}},
            new Particle::Actions::Bounce<Particle::Domains::Disc>{0.f, 0.35f, 0.f, {{0,0,0}, {0,0,1}, 1.5, 0}},
            new Particle::Actions::Move{},
            new Particle::Actions::SinkPosition<Particle::Domains::Plane>{false, {{0,0,-60},{1,0,-60},{0,1,-60}}},
        },
        // Renderer
#if VISUAL
#if OCULUS
        new Particle::Renderers::Oculus{}
#else
        new Particle::Renderers::OpenGL{}
#endif
#else
        new Particle::Renderers::Null{}
#endif
    };

    // Run the particle system
    auto start = high_resolution_clock::now(), last = start, now = start;
    do
    {
        auto dt = duration<Particle::time>(now-last).count();
        if (particles.frame(dt)) break;
        last = now; now = high_resolution_clock::now();
    } while (now-start < 3min || true);

    // Exit
    return 0;
}

Source Code

Introduction

In the source code associated with this paper you’ll find a Microsoft Visual Studio* 2017 project containing this implementation. It has three configurations: debug, release, and parallel. Debug has no optimizations and a smaller particle pool size, release is the single-threaded implementation, and parallel is the multi-threaded implementation. You change between visualization and benchmark mode by changing #define VISUAL 1 at the top of main.cpp. Setting it to 0 enables the Max source and the Null renderer and implements the benchmark mode. Setting it to 1 chooses either the OpenGL renderer or the Oculus renderer, depending on the value of #define OCULUS 0, also at the top of main.cpp.

PIMPL*

I want to take a moment to point out that this code uses the PIMPL* design pattern extensively. The PIMPL pattern is used to remove implementation details from C++ header files. More information on this design pattern is available at the PIMPL site.
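For readers who have not met the pattern, here is a minimal, generic sketch of PIMPL using `std::unique_ptr`. It is not taken from the project: `FrameTimer` and its members are hypothetical, and the header and source parts are shown in one listing only so the example is self-contained.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// ---- What would live in the header (FrameTimer.h) ----
// Clients see only the interface and an opaque pointer; the data members
// can change without touching this declaration.
class FrameTimer {
public:
    FrameTimer();
    ~FrameTimer();                          // defined where Impl is complete
    FrameTimer(FrameTimer&&) noexcept;
    FrameTimer& operator=(FrameTimer&&) noexcept;

    void  tick(float dt);                   // accumulate one frame's duration
    float elapsed() const;                  // total time accumulated so far

private:
    struct Impl;                            // declared here, defined in the .cpp
    std::unique_ptr<Impl> impl_;
};

// ---- What would live in the source file (FrameTimer.cpp) ----
struct FrameTimer::Impl {
    float total = 0.f;
};

FrameTimer::FrameTimer() : impl_(std::make_unique<Impl>()) {}
FrameTimer::~FrameTimer() = default;
FrameTimer::FrameTimer(FrameTimer&&) noexcept = default;
FrameTimer& FrameTimer::operator=(FrameTimer&&) noexcept = default;

void FrameTimer::tick(float dt) {
    assert(impl_ && "FrameTimer used after being moved from");
    impl_->total += dt;
}

float FrameTimer::elapsed() const {
    assert(impl_ && "FrameTimer used after being moved from");
    return impl_->total;
}
```

The destructor and move operations are declared in the class but defaulted after `Impl` is defined, because `std::unique_ptr` needs the complete type at the point where it destroys the object.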

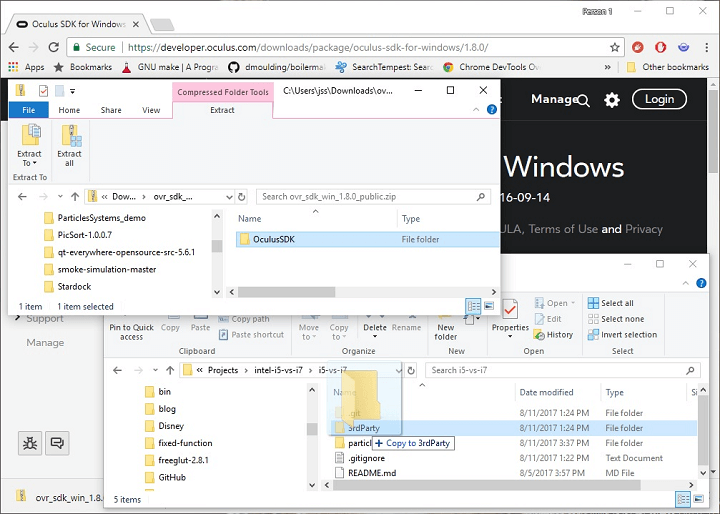

Oculus*

Before you can build this project, you have to download the Oculus SDK and then install it into the i5-vs-i7\3rdParty directory like this:

Note: The Oculus SDK does not come with a prebuilt debug version of the SDK like it does for the release. If you want to build the debug version of the particle system project, you’ll first need to build the debug version of the Oculus SDK using Visual Studio 2015. The solution file you need is at 3rdParty\OculusSDK\Samples\Projects\Windows\VS2015\Samples.sln. Choose the Debug target and the x64 platform, and then right-click on LibOVR and choose build.

Results

Now that we’ve done all this work let’s see the results. I built the project on both an Intel Core i5 processor machine and an Intel Core i7 processor machine using Visual Studio 2017 Community Edition with #define VISUAL 0. I then used the Debug->Start Without Debugging menu to launch them. I let them run for about 10 minutes and then took the average of the last four reported times to get this (the units are in millions of particles processed per second):

| | Intel® Core™ i5 Processor | Intel® Core™ i7 Processor | Difference |
|---|---|---|---|
| ST | 64 | 69 | 1.08x |
| MT | 185 | 285 | 1.54x |

All in all, this is very much in line with what we saw in the non-graphical benchmarks we ran above. This result shows that careful data structure and algorithm design can produce results in the graphics and virtual reality domains just as it does in regular desktop workload domains.

About the Author

John Stone is a seasoned software developer with an extensive computer science education and entrepreneurial background and brings a wealth of practical real-world experience working with graphical visualization and virtual reality (VR). As a former technical director of research for a VR laboratory at the University of Texas at Austin, he spent years honing his craft developing VR-based data acquisition systems in OpenGL for scientific experiments and projects.