In the previous posts I talked about User Experience and User Interface in Mixed Reality worlds. Today I would like to talk about one of the key features of our Mixed Reality application TraIND40: Remote Training.

Let's start with a use case: a trainee works in AR with a HoloLens on the physical machinery, while a remote Mentor/Teacher, in VR or in AR, supports the training and interacts with the trainee in the hologram space by adding elements, giving instructions and reporting problems.

As many of you know, today's internet was not designed for real-time communication. When transmitting the data for a voice or video call over the internet, the two most important performance metrics are latency and packet loss.

Data on the internet backbone is routed via the cheapest route, not the most direct one. This means that packets often take an extremely circuitous path to their destination: sending data over the internet can involve up to 30 hops. Latency is measured in milliseconds, and any latency higher than about 150 ms is bad for real-time communication. Anything higher than 400 ms is non-interactive.

The second issue for real-time communication is packet loss, a situation where data packets aren't delivered. It is caused by the way routers prioritize packets: for example, if a router gets overwhelmed, it may drop UDP packets before it drops TCP packets.

There are some protocol-level solutions that improve internet performance where real-time communication is concerned, such as SIP and RTP. The best solution is to route real-time data so that it takes the fewest possible hops, to reduce packet loss, and the most direct path, to reduce latency. To achieve this, some communications carriers use private networks to streamline data routing and optimize performance for real-time communications applications.
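To make the two metrics concrete, here is a minimal sketch that applies the 150 ms and 400 ms thresholds mentioned above and computes a packet loss ratio. The function names and the "good" / "degraded" / "non-interactive" labels are illustrative assumptions, not part of TraIND40.

```python
# Thresholds come from the text; the labels and function names are hypothetical.
GOOD_LATENCY_MS = 150
INTERACTIVE_LIMIT_MS = 400


def classify_latency(latency_ms: float) -> str:
    """Map a measured one-way latency to a rough quality label."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms <= GOOD_LATENCY_MS:
        return "good"
    if latency_ms <= INTERACTIVE_LIMIT_MS:
        return "degraded"
    return "non-interactive"


def packet_loss_ratio(sent: int, received: int) -> float:
    """Fraction of sent packets that never arrived."""
    if sent <= 0 or not 0 <= received <= sent:
        raise ValueError("expected 0 <= received <= sent and sent > 0")
    return (sent - received) / sent
```

For example, `classify_latency(250)` falls in the "degraded" band, and 980 packets received out of 1000 sent gives a loss ratio of about 2%.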

Implementing our use case involves considerable functional and technological complexity. In this article we will focus on the technological solution, which is based on the WebRTC standard.

WebRTC (Web Real-Time Communication) is a free, open-source project that provides web browsers and mobile applications with real-time communication (RTC) via simple application programming interfaces (APIs). It lets audio and video communication work inside web pages and apps through direct peer-to-peer communication, eliminating the need to install plugins or download native apps or frameworks. Here are some of its advantages:

- Platform and device independence: A web services application can direct any WebRTC-enabled browser, on any operating system, to create a real-time voice or video connection to another WebRTC device or to a WebRTC media server.

- Secure voice and video: WebRTC has always-on voice and video encryption. The Secure RTP protocol (SRTP) is used for encryption and authentication of both voice and video.

- Advanced voice and video quality: WebRTC uses the Opus audio codec, which produces high-fidelity voice. The Opus codec builds on Skype's SILK codec technology (combined with the CELT codec). The VP8 codec is used for video.

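- Reliable session establishment: WebRTC establishes sessions reliably even when peers sit behind NATs and firewalls, using ICE together with STUN and TURN servers to find a working path between them.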

- Adaptive to network conditions: WebRTC supports the negotiation of multiple media types and endpoints. This makes efficient use of bandwidth and delivers the best possible voice and video communications.

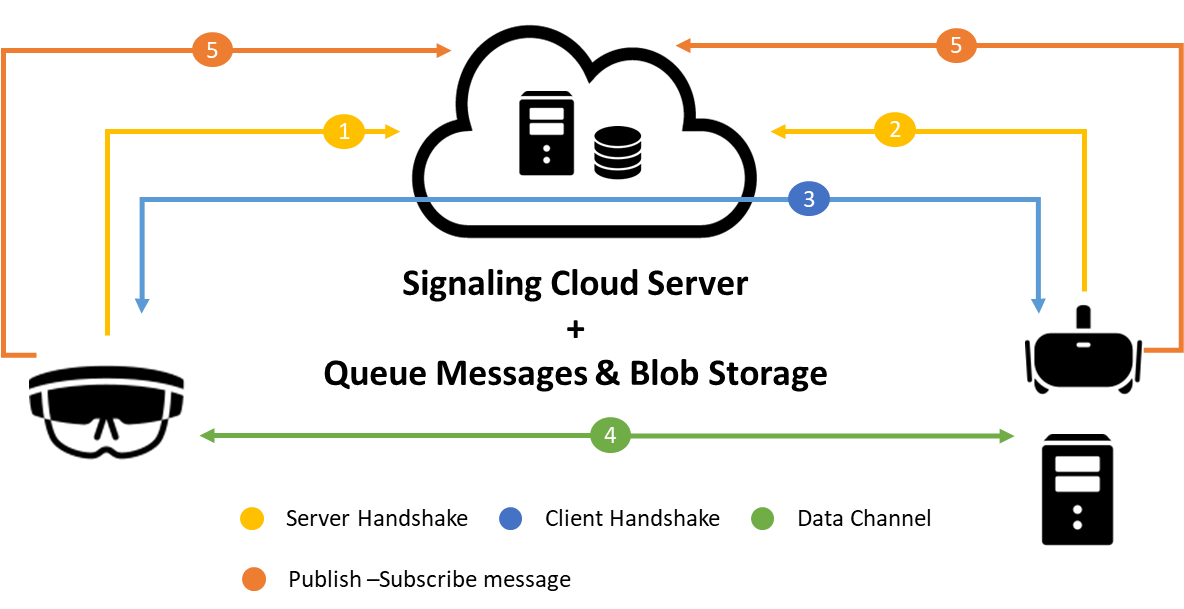

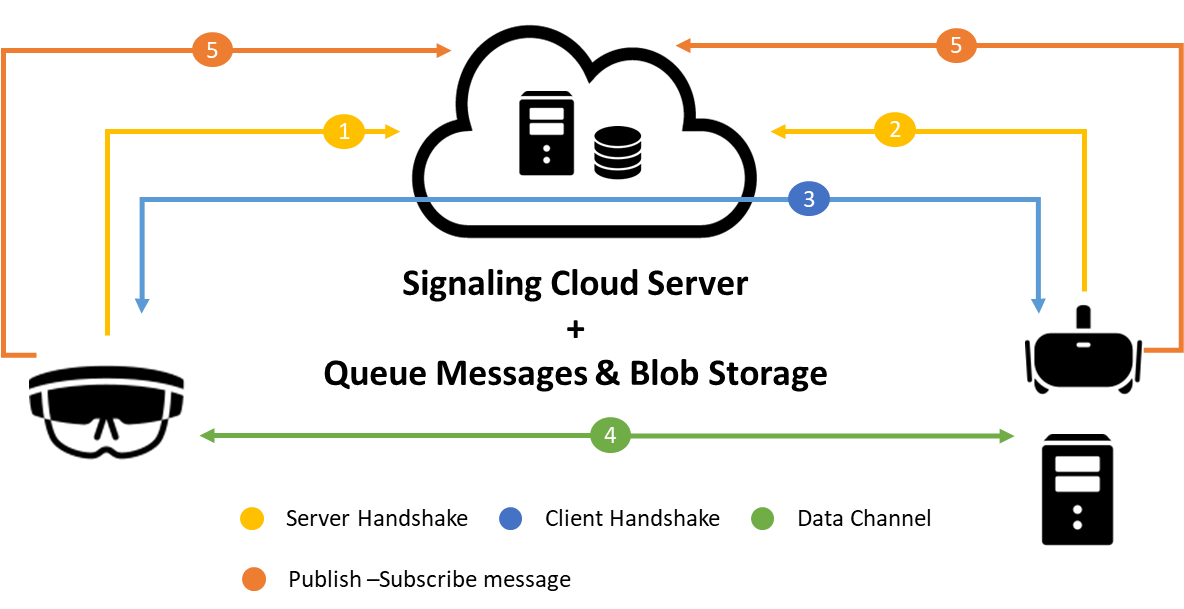

The picture shows the data flow of the messages exchanged between the peers (VR or AR devices) and the server in the cloud.

WebRTC requires that both peers have access to a "signalling server". This is a server app, in our case hosted on the Microsoft Azure cloud, that must be reachable over the internet (on specific ports) and that both peers register with. Through the signalling server, the trainee and mentor apps indirectly tell each other how best to communicate. Once signalling is complete, trainee-mentor communication happens independently of the signalling server (i.e. the video traffic is not routed via the signalling server).
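The signalling exchange can be sketched with a toy in-memory relay. This is only an illustration under stated assumptions: the `SignallingServer` class, the peer ids, the choice of the mentor as the offering side and the placeholder payloads are all hypothetical, and a real signalling server would be a network service rather than a Python object.

```python
from collections import deque


class SignallingServer:
    """Toy in-memory relay: it only passes small setup messages between peers."""

    def __init__(self):
        self._mailboxes = {}

    def register(self, peer_id):
        # Each registered peer gets its own mailbox of pending messages.
        self._mailboxes.setdefault(peer_id, deque())

    def send(self, sender, recipient, kind, payload):
        if recipient not in self._mailboxes:
            raise KeyError(f"unknown peer: {recipient}")
        self._mailboxes[recipient].append(
            {"from": sender, "type": kind, "payload": payload}
        )

    def poll(self, peer_id):
        # Return the oldest pending message, or None when the mailbox is empty.
        mailbox = self._mailboxes[peer_id]
        return mailbox.popleft() if mailbox else None


server = SignallingServer()
server.register("mentor")
server.register("trainee")

# Assumption: the mentor starts the session. Payloads are placeholders, not real SDP.
server.send("mentor", "trainee", "offer", "<mentor session description>")
offer = server.poll("trainee")
server.send("trainee", offer["from"], "answer", "<trainee session description>")
answer = server.poll("mentor")
```

After this exchange the relay has nothing left to deliver: audio and video would flow directly between the two peers and never pass through it.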

At this moment, our solution only allows the exchange of video and audio, but we also want to exchange other information, in particular application commands (the mentor sends the trainee a list of commands to execute) and 3D objects to visualize. For this reason, we have added a new technology element to the solution (see figure below): a message queue service, which is a cloud service.

A queue service provides cloud messaging between application components. When designing applications for scale, application components are often decoupled so that they can scale independently. The queue service delivers asynchronous messaging for communication between application components, whether they are running in the cloud, on the desktop, on an on-premises server, or on a mobile device.
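The decoupling idea can be illustrated locally with Python's standard `queue` module standing in for the cloud service. This is a sketch only: the command names are hypothetical, and a cloud queue would add persistence and network delivery that this example does not model.

```python
import queue
import threading

# Local stand-in for the cloud queue; the command names below are hypothetical.
command_queue = queue.Queue()
received = []


def trainee_worker():
    """Consume commands whenever they arrive, independently of the sender."""
    while True:
        message = command_queue.get()
        if message is None:  # sentinel: no more commands
            break
        received.append(message)


worker = threading.Thread(target=trainee_worker)
worker.start()

# The mentor side enqueues commands and does not wait for them to be processed.
for command in ("highlight_valve", "show_step_2"):
    command_queue.put(command)
command_queue.put(None)
worker.join()
```

The sender and the receiver only share the queue, so either side can be slowed down, restarted or scaled without the other needing to know.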

Thanks to the queue service we will have an asynchronous message exchange, in real time, between the different peers (mentor and trainee). To allow two-way communication, each peer will have 4 unique topics (two for actions and two for 3D objects), which it uses to read and write messages carrying actions or 3D objects.
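One possible way to derive the four topics per peer is sketched below. The naming scheme (`peer/kind/direction`), the `topics_for` and `encode_command` helpers and the JSON message shape are assumptions made for illustration; the text does not specify how the real topics are named or how messages are encoded.

```python
import json
from itertools import product

# Two kinds of content times two directions gives the four topics per peer.
KINDS = ("actions", "objects3d")
DIRECTIONS = ("read", "write")


def topics_for(peer_id):
    """Return the four topic names of a peer, keyed by (kind, direction)."""
    return {
        (kind, direction): f"{peer_id}/{kind}/{direction}"
        for kind, direction in product(KINDS, DIRECTIONS)
    }


def encode_command(sender, commands):
    """Serialize a list of application commands as a JSON message body."""
    return json.dumps({"sender": sender, "commands": list(commands)})


mentor_topics = topics_for("mentor")
trainee_topics = topics_for("trainee")
```

Because the peer id is part of every name, the mentor's and the trainee's topics never collide, which is what makes the topics unique per peer.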

The challenge is coming to an end and in two weeks we will be telling the full story of the project.

Keep cheering for FlyingTurtle and share our posts if you liked them.