A Powerful New Tool

Alibaba and Intel have collaborated to improve developer productivity by combining three key components:

- Neural Coder component of Intel® Neural Compressor
- Alibaba Cloud Machine Learning Platform for AI (PAI)
- BladeDISC open-source compiler for machine learning workloads

Neural Coder simplifies the deployment of deep learning (DL) models through one-click, automated code changes (e.g., switching accelerator devices or enabling optimizations). It uses static program analysis and heuristics to help users take advantage of Intel® DL Boost and other hardware features for better performance. One-click enabling boosts developer productivity and makes acceleration easier to adopt. All of this is available through a JupyterLab* GUI extension for Neural Coder.
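Neural Coder's internals are not described here, but the general idea of an automated, analysis-driven code change can be illustrated with Python's standard `ast` module. The following is a toy sketch, not Neural Coder's implementation: it parses a script, finds bare `<name>.eval()` calls at module level or directly inside a function body, and inserts `<name> = optimize(<name>)` right after each one. `optimize`, `InsertOptimizeAfterEval`, and `enable_optimization` are hypothetical names chosen for illustration; a real tool would insert framework-specific calls and apply many more heuristics.

```python
import ast
from typing import List, Optional


def eval_target(stmt: ast.stmt) -> Optional[str]:
    """Return the variable name if `stmt` is a bare `<name>.eval()` call, else None."""
    if (
        isinstance(stmt, ast.Expr)
        and isinstance(stmt.value, ast.Call)
        and isinstance(stmt.value.func, ast.Attribute)
        and stmt.value.func.attr == "eval"
        and isinstance(stmt.value.func.value, ast.Name)
        and not stmt.value.args
        and not stmt.value.keywords
    ):
        return stmt.value.func.value.id
    return None


class InsertOptimizeAfterEval(ast.NodeTransformer):
    """Toy pass: after each `<name>.eval()` statement at module level or
    directly in a function body, insert `<name> = optimize(<name>)`.
    `optimize` is a hypothetical placeholder, not a Neural Coder or BladeDISC API."""

    def _patch(self, body: List[ast.stmt]) -> List[ast.stmt]:
        patched: List[ast.stmt] = []
        for stmt in body:
            patched.append(stmt)
            name = eval_target(stmt)
            if name is not None:
                patched.append(ast.parse(f"{name} = optimize({name})").body[0])
        return patched

    def visit_Module(self, node: ast.Module) -> ast.Module:
        self.generic_visit(node)  # rewrite nested function bodies first
        node.body = self._patch(node.body)
        return node

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.FunctionDef:
        self.generic_visit(node)
        node.body = self._patch(node.body)
        return node


def enable_optimization(source: str) -> str:
    """Hypothetical 'one-click' entry point: parse, transform, regenerate source."""
    tree = InsertOptimizeAfterEval().visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # ast.unparse requires Python 3.9+


if __name__ == "__main__":
    script = "model = load_model()\nmodel.eval()\noutput = model(inputs)\n"
    print(enable_optimization(script))
```

Running the script prints the rewritten source, with `model = optimize(model)` inserted directly after `model.eval()`. The user's script is never edited by hand; the change is derived entirely from its syntax tree.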

Alibaba Cloud Machine Learning Platform for AI (PAI) provides end-to-end machine learning (ML) services, including data processing, feature engineering, model training, model prediction, and model evaluation. PAI's Data Science Workshop (DSW) is a cloud-based integrated development environment that integrates JupyterLab and provides plug-ins for customized development.

BladeDISC is one of the key components of the PAI-Blade inference accelerator. It provides general, transparent, and easy-to-use performance optimization for TensorFlow* and PyTorch* workloads on CPU and GPU backends.

How it Works

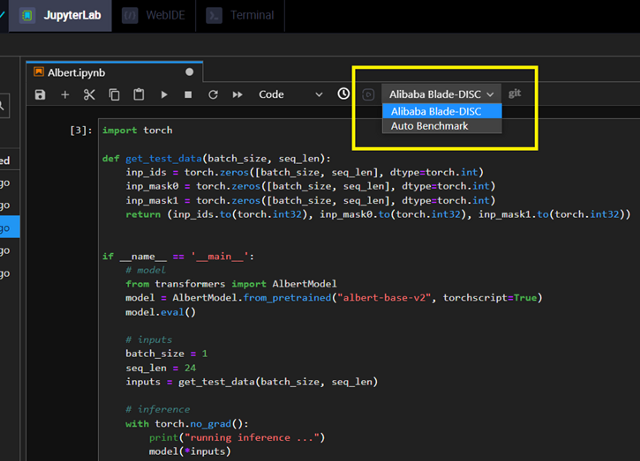

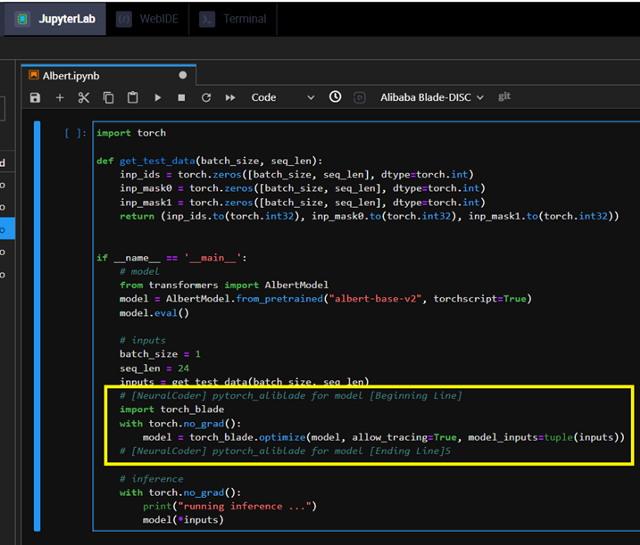

Neural Coder has been integrated into PAI-DSW and includes BladeDISC as one of its optimization backends (Figure 1). This simplifies access to the inference acceleration that BladeDISC provides. For example, the DL script of Hugging Face’s Albert Model can be one-click optimized using the “Alibaba Blade-DISC” option of the Neural Coder extension (Figure 2).

Figure 1. Neural Coder as an extension in the Alibaba PAI-DSW platform

Figure 2. One-click optimization of using the “Alibaba Blade-DISC” option of the Neural Coder extension

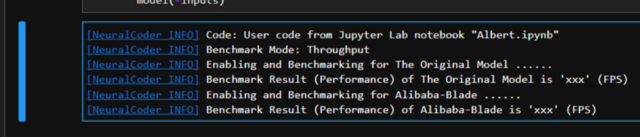

The “Auto Benchmark” option shown in Figure 1 tells Neural Coder to run benchmarks to inform automatic code optimization. It compares before and after performance and provides a benchmark log below the code cell of the Jupyter notebook (Figure 3).

Figure 3. Automatic benchmark log
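The before/after comparison behind an option like “Auto Benchmark” can be sketched generically. The code below does not reproduce Neural Coder's benchmarking logic or log format; it simply times a baseline callable and an optimized callable with `time.perf_counter`, takes the median over several runs after a few warm-up calls, and reports the speedup. `median_latency_ms` and `compare` are hypothetical helpers, and the two toy workloads stand in for an unoptimized and an optimized model.

```python
import statistics
import time
from typing import Callable, Dict


def median_latency_ms(fn: Callable[[], object], warmup: int = 3, runs: int = 20) -> float:
    """Median wall-clock latency of fn() in milliseconds, measured after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def compare(baseline: Callable[[], object], optimized: Callable[[], object]) -> Dict[str, float]:
    """Hypothetical before/after report: latencies in ms and the resulting speedup."""
    before = median_latency_ms(baseline)
    after = median_latency_ms(optimized)
    return {
        "before_ms": before,
        "after_ms": after,
        "speedup": before / after if after > 0 else float("inf"),
    }


if __name__ == "__main__":
    data = list(range(100_000))

    def baseline() -> int:
        # Stand-in for the unoptimized model: a pure-Python loop.
        total = 0
        for x in data:
            total += x
        return total

    def optimized() -> int:
        # Stand-in for the optimized model: the built-in sum.
        return sum(data)

    report = compare(baseline, optimized)
    print(f"before:  {report['before_ms']:.3f} ms")
    print(f"after:   {report['after_ms']:.3f} ms")
    print(f"speedup: {report['speedup']:.2f}x")
```

Using the median rather than the mean makes the comparison less sensitive to occasional slow runs, and the warm-up calls keep one-time costs (such as compilation on the first call of an optimized model) out of the measurement.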

Putting it Together

Intel and Alibaba collaborated to enhance DL productivity by providing a no-code way to enable BladeDISC optimization. Integrating Neural Coder into Alibaba Cloud PAI-DSW simplifies the deployment of BladeDISC optimization and lets users accelerate their DL models with just one click.

DL practitioners can try this extension on the Alibaba Cloud PAI-DSW platform by creating a DSW g7 CPU instance in PAI. Alternatively, you can try the standalone version of the Neural Coder extension in JupyterLab. We also encourage you to check out Intel’s other AI Tools and Framework optimizations and learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio.