Authors: Quan Yin & Du Yaru

Many gamers like to share their game highlights on social platforms. This article shows how you can use artificial intelligence (AI) character recognition technology to capture game highlights. With the new instruction set, Vector Neural Network Instructions (VNNI), and Intel® Distribution of OpenVINO™ toolkit, you can maximize the use of different Intel® hardware. This combination allows AI to capture exciting moments in real time without affecting the player’s gaming experience.

Introduction

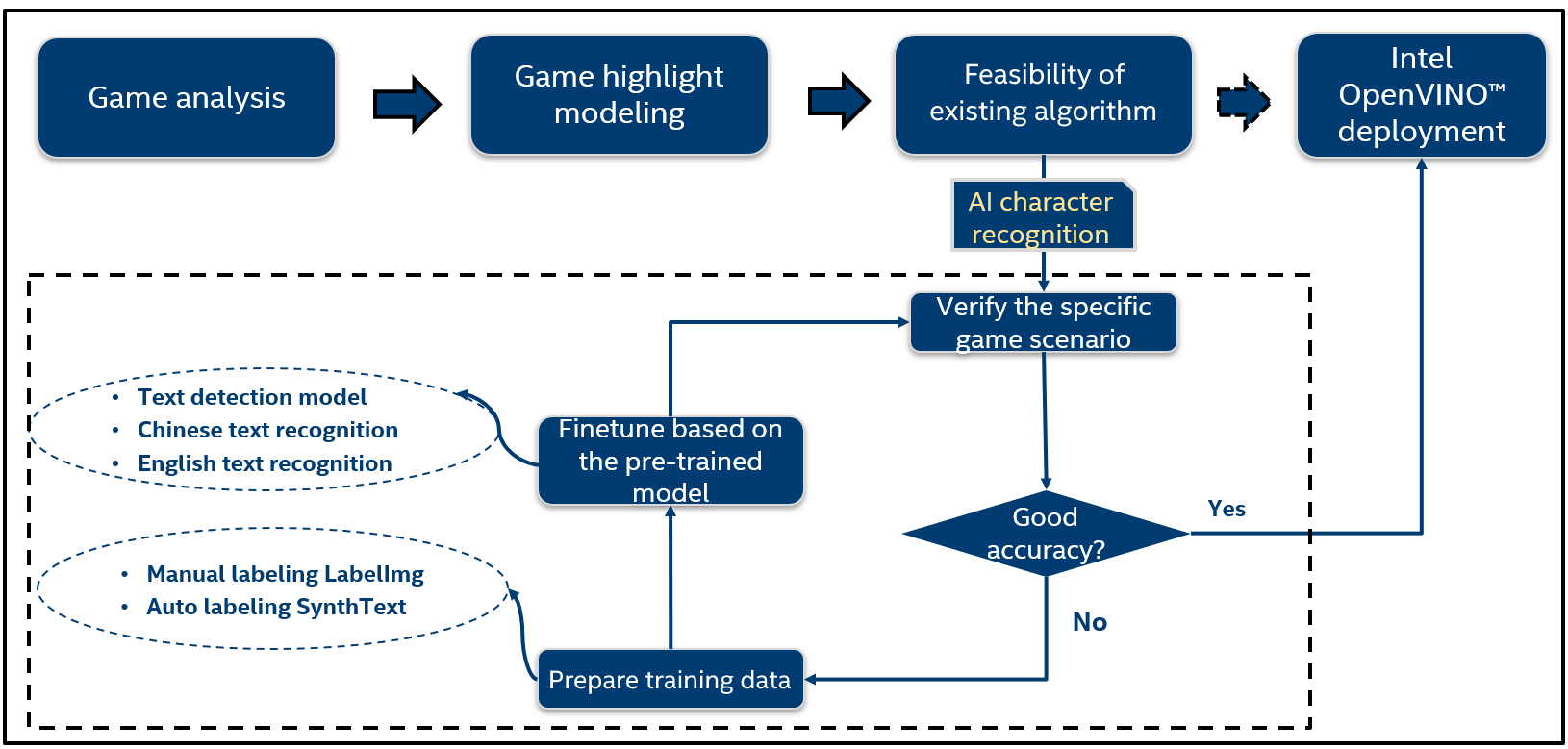

You can recognize game highlights by watching what happens in the game and noting the related information that appears on the screen. For example, when a player kills someone in a first-person shooter (FPS) game, the screen displays critical information such as “A kills B.” If this information is captured, you can locate the related time frame and record it. AI character recognition technology can be used to determine whether the on-screen text is such crucial information. Using the PlayerUnknown's Battlegrounds (PUBG) game as an example, Figure 1 shows the process of automatically generating game highlights through AI character recognition technology.

Figure 1. An example of how game highlights are automatically generated
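The step of locating the related time frame can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the recognition stage has already produced a list of timestamps (in seconds) at which key text such as “A kills B” was seen, and it turns them into clips to record. The `Clip` type, the `BuildClips` function, and the `before` and `after` padding values are hypothetical names introduced only for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// A clip to record, in seconds from the start of the video.
struct Clip {
    double start;
    double end;
};

// Turn the timestamps of recognized key events into clips.
// `before` and `after` are hypothetical padding values (seconds) that keep
// some context around each event.
std::vector<Clip> BuildClips(std::vector<double> eventTimes,
                             double before, double after) {
    assert(before >= 0.0 && after >= 0.0);
    std::sort(eventTimes.begin(), eventTimes.end());

    std::vector<Clip> clips;
    for (double t : eventTimes) {
        const Clip c{std::max(0.0, t - before), t + after};
        if (!clips.empty() && c.start <= clips.back().end) {
            // Overlapping windows are merged into one longer clip.
            clips.back().end = std::max(clips.back().end, c.end);
        } else {
            clips.push_back(c);
        }
    }
    return clips;
}
```

Merging overlapping windows means that several kills in quick succession end up in one continuous clip instead of several nearly identical ones.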

The following process describes how game highlights are generated. Figure 2 illustrates this process.

- Analyze the content of a specific game and define the game highlights.

- Model the task into a computable problem from the perspective of computer vision.

- Using AI character recognition technology, verify whether the accuracy of the pretrained model is sufficient for the current scenario. If not, retrain the model until it is good enough.

- If the accuracy is good for the specific game scenario, you can deploy the model by using the Intel Distribution of OpenVINO toolkit.

Figure 2. Process for generating game highlights

The solution to generate game highlights based on AI character recognition technology is scalable. General models like text detection and text recognition are suitable in most cases. To get better accuracy in a specific game, you can use the GameSynthText tool to create multiple training datasets in the same style as the game without any manual labeling work. With the Intel Distribution of OpenVINO toolkit, the model can support real-time inference with good performance.

Game Highlights for World of Tanks

Game Analysis

World of Tanks is a 5v5 multiplayer game. During the game, a successful attack on an enemy injures that enemy and decreases its health (blood value). The enemy is dead when the health reaches zero. The following table summarizes the analysis and highlights from the World of Tanks game.

| Highlight Moment | Content | Content Feature | Computer Vision Task | Algorithm Feasibility | Fine-Tuned (Yes or No) |
|---|---|---|---|---|---|
| Status information | At the beginning of the game, “battle start” is displayed on the screen. At the end of the game, “victory” or “defeated” is displayed. | The text region is fixed. | Recognize each status in the relevant area. | The pretrained Chinese text recognition model reaches 99% accuracy. | No |
| Kill information | At the bottom right, the kill information text is displayed. | The text region is not fixed; it sometimes has multiple lines and sometimes a single line. | Split the kill information into lines, then identify which player made the kill based on the text color. | Use the text detection model to split each line, and then use a color comparison algorithm to check whether the color in each line is orange. | Yes |
| Damage | At the bottom left, the player’s own damage value is displayed. The value decreases when an enemy attacks the player. | The text region is fixed. | Recognize the number in the relevant area. | The pretrained English text recognition model confuses some digits with letters. For example, "1" is similar to "l". | Yes |

Figure 3. Status information (a, b, c, d)

Figure 4. Kill information (a, b, c) and damage information (d)
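The color comparison for kill information can be sketched as follows. This is only an illustration under stated assumptions: the pixels of one detected text line are assumed to be available as RGB values, and the numeric thresholds that define “orange” as well as the `Rgb`, `IsOrangePixel`, and `IsOrangeLine` names are hypothetical, not values or identifiers from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb {
    std::uint8_t r, g, b;
};

// Hypothetical thresholds for "orange": strong red, medium green, weak blue.
bool IsOrangePixel(const Rgb& p) {
    return p.r >= 200 && p.g >= 90 && p.g <= 190 && p.b <= 90;
}

// A detected text line counts as orange when the share of orange pixels
// reaches minRatio. Text usually covers only part of its bounding box,
// so minRatio is expected to be well below 1.
bool IsOrangeLine(const std::vector<Rgb>& pixels, double minRatio) {
    assert(minRatio > 0.0 && minRatio <= 1.0);
    if (pixels.empty()) {
        return false;
    }
    std::size_t hits = 0;
    for (const Rgb& p : pixels) {
        if (IsOrangePixel(p)) {
            ++hits;
        }
    }
    return static_cast<double>(hits) / static_cast<double>(pixels.size()) >= minRatio;
}
```

In practice the thresholds would need to be tuned on real screenshots, because compression and semi-transparent overlays shift the rendered color.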

Model Training

For the text detection model’s training, the LabelImg tool was used to mark the kill information. Semi-transparent text was not marked in the training data, and therefore the model does not detect semi-transparent text. In the end, about 800 pictures were labeled and used to fine-tune the existing text detection model. The following table provides specific information about the training process.

| Source code | Text_detection |
|---|---|
| Pretrained model | Text_detection.pb |
| Train dataset | 800 images |
| Batch size | 64 |
| Learning rate | 0.001 |
| Iteration | 1,000 |
| Hardware | NVIDIA 2080Ti |
| Time cost | 1 hour |

For the text recognition model, we trained a model that only recognizes numbers. The GameSynthText tool generated many pictures in a style consistent with World of Tanks. Specifically, the damage value is formatted as “x,xxx” if the number has 4 digits, for example, “1,400”. Therefore, when generating training data, such numbers are reprocessed to match this format. Finally, about 20,000 annotated pictures were used to fine-tune the pretrained English text recognition model. The following table provides specific information about the training process.

| Source code | Text_recognition |
|---|---|
| Pretrained model | Text_recognition.pb |
| Train dataset | 20,000 images |
| Batch size | 10 |
| Learning rate | 0.001 |
| Iteration | 24,000 |
| Hardware | NVIDIA 2080Ti |
| Time cost | 5 hours |
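The “x,xxx” damage format mentioned above affects both the labels of the synthetic training data and the interpretation of recognized text. The following sketch shows one way to handle both directions. It is an assumption-based illustration: `FormatDamage` and `ParseDamage` are hypothetical helper names and are not part of the GameSynthText tool.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>

// Format a damage value the way the game displays it, for example
// 1400 becomes "1,400". Intended for labels of generated training images.
std::string FormatDamage(int value) {
    assert(value >= 0);
    std::string digits = std::to_string(value);
    // Insert a comma before every group of three digits, from the right.
    for (int pos = static_cast<int>(digits.size()) - 3; pos > 0; pos -= 3) {
        digits.insert(static_cast<std::size_t>(pos), ",");
    }
    return digits;
}

// Convert recognized text back to a number. Returns std::nullopt when the
// text contains anything other than digits and commas, for example when
// the recognizer confused "1" with "l".
std::optional<int> ParseDamage(const std::string& text) {
    int value = 0;
    bool sawDigit = false;
    for (char ch : text) {
        if (ch == ',') {
            continue;
        }
        if (ch < '0' || ch > '9') {
            return std::nullopt;
        }
        value = value * 10 + (ch - '0');
        sawDigit = true;
    }
    if (!sawDigit) {
        return std::nullopt;
    }
    return value;
}
```

Rejecting unexpected characters instead of guessing makes recognition errors visible to the calling code, which can then skip the frame.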

Deployment with Intel® Technology

VNNI improves performance significantly by combining three instructions into one. It maximizes computing resources, uses the cache better, and avoids potential bandwidth bottlenecks. You can directly benefit from it if the AI model is in INT8 precision.
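To make the INT8 benefit more concrete, the following scalar sketch models what a fused INT8 multiply-accumulate computes for each 32-bit lane: four unsigned 8-bit values are multiplied with four signed 8-bit values, and the four products are added to a 32-bit accumulator. This is an illustration of the arithmetic only, not how the hardware or the OpenVINO toolkit is programmed, and `DotAccumulate` is a hypothetical name. Real applications would rely on the inference runtime rather than code like this.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Scalar model of an INT8 dot-product-accumulate.
// `a` holds unsigned 8-bit activations, `w` holds signed 8-bit weights.
// Each accumulator lane receives the sum of four consecutive products.
void DotAccumulate(std::vector<std::int32_t>& acc,
                   const std::vector<std::uint8_t>& a,
                   const std::vector<std::int8_t>& w) {
    assert(a.size() == w.size() && a.size() == 4 * acc.size());
    for (std::size_t lane = 0; lane < acc.size(); ++lane) {
        for (std::size_t i = 0; i < 4; ++i) {
            const std::size_t k = 4 * lane + i;
            acc[lane] += static_cast<std::int32_t>(a[k]) *
                         static_cast<std::int32_t>(w[k]);
        }
    }
}
```

Without a fused instruction, the widening multiply, the pairwise addition, and the accumulation are separate steps. Performing them in one instruction is where the saving comes from.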

Intel Distribution of OpenVINO toolkit facilitates deep learning model optimization using an inference engine on the Intel architecture platform. AI inference gains better performance on any Intel architecture platform when the trained neural network is converted from its source framework to an intermediate representation (IR) that the Intel Distribution of OpenVINO toolkit supports. The overall solution gains better performance on all Intel architecture platforms, not only CPUs.

The Intel Distribution of OpenVINO toolkit SDK provides sample code, but it does not show how to structure the OpenVINO integration for a whole project. Therefore, we created an extensible project architecture for OpenVINO integration.

Project Architecture

The architecture contains two parts:

- AI inference with Intel Distribution of OpenVINO toolkit

- Business logic with OpenCV

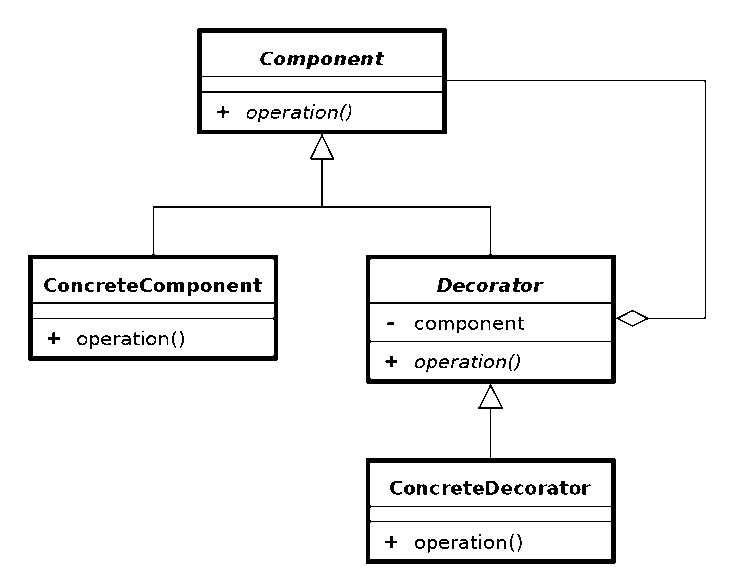

Business logic for different uses, such as preprocessing or post-processing, changes frequently, while the functionality of the OpenVINO toolkit remains unchanged. Therefore, the OpenVINO module and the business logic are separated based on the Decorator Design Pattern.

In object-oriented programming, the decorator pattern is a design pattern that allows behavior to be added to an individual object dynamically, without affecting other objects’ behavior from the same class. The inference engine is considered as a concrete component. The business logic object is added to the inference engine object as an individual object. Hence, in this scenario the business logic object is a decorator.

This architecture increases the flexibility and reusability of the design. The inference engine is the primary function of an AI application, and it does not need to change often. The business logic needs to change frequently for different AI uses, and it can be added to the concrete component as a decorator at any time. The relationship between the inference engine and the business logic object is like that between a jet bridge and an airplane. A jet bridge at an airport is fixed, but the airplane connected to it is always different.

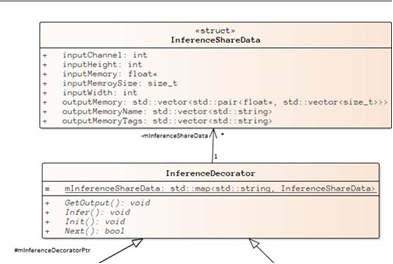

Figure 5. Decorator UML class diagram
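The decorator idea described above can be sketched in a few lines. This is a generic, simplified illustration and not the project's class hierarchy: the `Inference`, `EngineStub`, and `OcrLogic` names and the string-based `Run` signature are hypothetical stand-ins chosen so that the example compiles without OpenVINO or OpenCV.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Component interface (hypothetical name and signature).
class Inference {
public:
    virtual ~Inference() = default;
    virtual std::string Run(const std::string& frame) = 0;
};

// Concrete component: stands in for the stable inference engine wrapper.
class EngineStub : public Inference {
public:
    std::string Run(const std::string& frame) override {
        return "raw(" + frame + ")";
    }
};

// Decorator: adds business logic around any Inference object without
// modifying the wrapped class.
class OcrLogic : public Inference {
public:
    explicit OcrLogic(std::unique_ptr<Inference> inner)
        : inner_(std::move(inner)) {
        assert(inner_ != nullptr);
    }

    std::string Run(const std::string& frame) override {
        // Preprocessing would go here; post-processing wraps the result.
        return "ocr[" + inner_->Run(frame) + "]";
    }

private:
    std::unique_ptr<Inference> inner_;
};
```

Because the decorator implements the same interface as the component it wraps, callers can swap in different business logic without touching the engine code.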

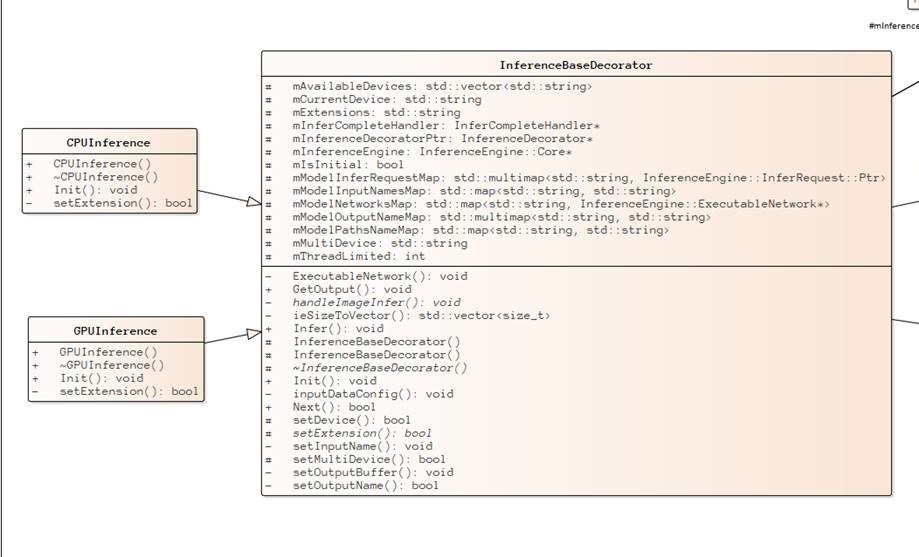

The InferenceBaseDecorator is a base class that encapsulates the inference module. It is an abstract class and provides no implementation of its own. Users derive from InferenceBaseDecorator to customize the inference deployment mode, for example, deploying on specific hardware or on both CPU and GPU.

Figure 6. InferenceBaseDecorator UML class diagram

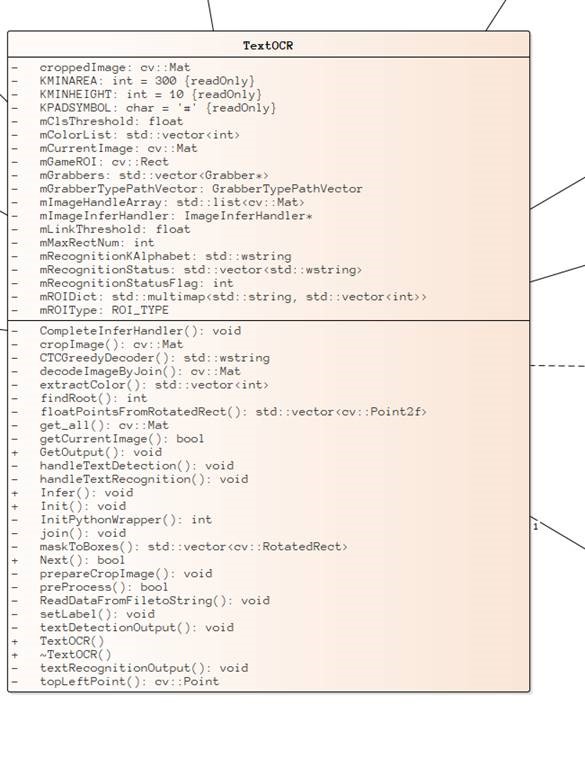

When running game highlights, the business logic performs text optical character recognition (OCR). Hence, the TextOCR class is designed as the business logic class. The business logic module's implementations vary because there are different usage scenarios. The design of TextOCR class can be used as a reference for other business logic.

Figure 7. TextOCR UML class diagram

The InferenceDecorator class bridges the two classes and exposes the public interface that callers invoke. You use this interface to manage an instance that encapsulates the AI inference module and the business logic module.

The InferenceSharedData class shares data between the OpenVINO engine module and the business logic module.

Figure 8. InferenceDecorator and InferenceShareData UML class diagram

Image grabbing is also implemented as an independent module. It uses the Factory Design Pattern to implement three grabbing methods:

- one image (ImageGrabber)

- images list (ImageListGrabber)

- video (VideoGrabber)

You can use this pattern as a base to explore more image-grabbing methods.

Figure 9. Grabber-related UML class diagram
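A factory for the three grabbers might look like the following sketch. Only the class names ImageGrabber, ImageListGrabber, and VideoGrabber come from the article. The `Grabber` base class, the `GrabberKind` enumeration, the `MakeGrabber` function, and the `Name` method are assumptions made for illustration. A real grabber would return image frames rather than a name.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical selector for the grabbing method.
enum class GrabberKind { Image, ImageList, Video };

// Hypothetical common interface. A real grabber would return frames.
class Grabber {
public:
    virtual ~Grabber() = default;
    virtual std::string Name() const = 0;
};

class ImageGrabber : public Grabber {
public:
    std::string Name() const override { return "ImageGrabber"; }
};

class ImageListGrabber : public Grabber {
public:
    std::string Name() const override { return "ImageListGrabber"; }
};

class VideoGrabber : public Grabber {
public:
    std::string Name() const override { return "VideoGrabber"; }
};

// Factory function: callers ask for a kind and never name a concrete class.
std::unique_ptr<Grabber> MakeGrabber(GrabberKind kind) {
    switch (kind) {
        case GrabberKind::Image:
            return std::make_unique<ImageGrabber>();
        case GrabberKind::ImageList:
            return std::make_unique<ImageListGrabber>();
        case GrabberKind::Video:
            return std::make_unique<VideoGrabber>();
    }
    assert(false && "unknown GrabberKind");
    return nullptr;
}
```

Adding another source, such as a screen capture, would then only require a new derived class and one more case in the factory function.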

Tips for Deployment

Programming Language Requirements

The project uses std::variant to return values of different types, so it must be built as C++17. Set “C++ Language Standard” to “ISO C++17 Standard (/std:c++17)”.

To avoid compilation problems, add the following two macros under “C/C++ -> Preprocessor Definitions”:

_SILENCE_CXX17_CODECVT_HEADER_DEPRECATION_WARNING

_SILENCE_ALL_CXX17_DEPRECATION_WARNINGS
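As a small illustration of why std::variant is useful here, the sketch below returns either a number or a text from a single function. The `OcrResult` alias and the `Interpret` and `Describe` functions are hypothetical and only demonstrate the language feature. They are not taken from the project.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <variant>

// Hypothetical result type: a recognized number or a recognized status text.
using OcrResult = std::variant<int, std::string>;

// Return an int when the text consists only of digits, otherwise the text.
OcrResult Interpret(const std::string& text) {
    assert(!text.empty());
    bool allDigits = true;
    for (char ch : text) {
        if (ch < '0' || ch > '9') {
            allDigits = false;
            break;
        }
    }
    if (allDigits) {
        return std::stoi(text);
    }
    return text;
}

// Handle each alternative with std::visit and a generic lambda.
std::string Describe(const OcrResult& result) {
    return std::visit(
        [](const auto& v) -> std::string {
            using T = std::decay_t<decltype(v)>;
            if constexpr (std::is_same_v<T, int>) {
                return "damage=" + std::to_string(v);
            } else {
                return "status=" + v;
            }
        },
        result);
}
```

Both std::variant and `if constexpr` require C++17, which is why the language standard setting matters for this kind of code.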

Test Program

You can add the test program for your main project with an established test framework like the Google Test Framework.

To add your main project to the test project:

1. Create the library file with .obj files for the test program.

2. Add the following script to “Build Event -> Post-Build Event”.

if not exist "$(SolutionDir)test\lib" mkdir "$(SolutionDir)test\lib"

lib /NOLOGO /OUT:"$(SolutionDir)test\lib\$(TargetName).lib" "$(ProjectDir)$(Platform)\$(Configuration)\*.obj"

3. Add the library file with .obj files to the linker of the test project.

Add $(SolutionName).lib to “Linker -> Additional Dependencies”.

4. Set the Runtime Library Type.

Set “C/C++ -> Runtime Library” to “Multi-threaded DLL (/MD)”.

To use Google Test in the Microsoft Visual Studio* Test Explorer:

- Install the Microsoft Visual Studio* extension. To use the Test Explorer in Visual Studio, install Google Test Adapter from the Visual Studio extensions.

- Set the test environment with a settings file, and then add the file through the “Test -> Test Setting” option. The settings file (.runsettings) should use the following format:

<?xml version="1.0" encoding="utf-8"?>

<RunSettings>

<GoogleTestAdapterSettings>

<SolutionSettings>

<Settings>

<WorkingDir>$(SolutionDir)</WorkingDir>

<EnvironmentVariables>PATH=$(SolutionDir)third-party\opencv\bin;$(SolutionDir)third-party\openvino\bin\dll_release;$(SolutionDir)third-party\tbb\bin;$(SolutionDir)third-party\ngraph\lib;$(SolutionDir)third-party\yam-cpp\bin\x64_release;%PATH%</EnvironmentVariables>

</Settings>

</SolutionSettings>

</GoogleTestAdapterSettings>

</RunSettings>

Performance Comparison

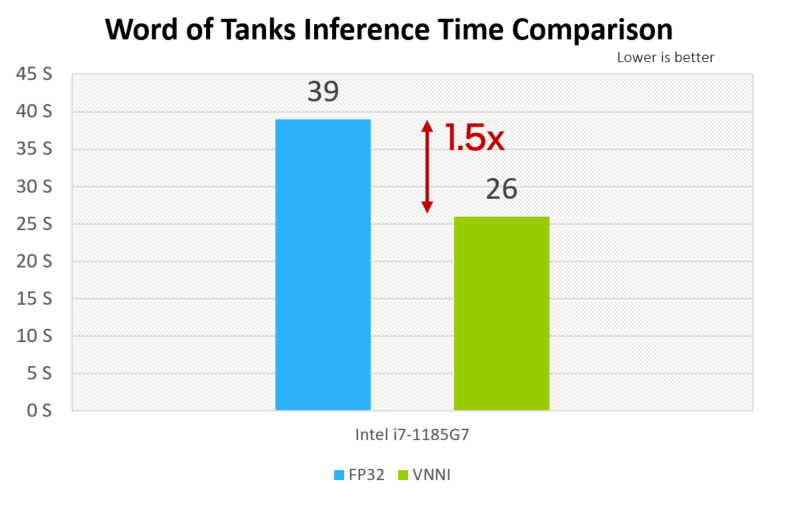

Figure 10. Inference performance comparison between FP32 and Intel® Advanced Vector Extensions 512 (Intel® AVX-512) with Vector Neural Network Instructions (VNNI).

The 11th generation Intel® Core™ i7-1165G7 processor using Vector Neural Network Instructions (VNNI) achieves a 1.5x performance improvement over FP32. We used a video as input data and measured the time taken to generate the highlight (sparkle-time). Inference with VNNI improves the performance of convolutional neural network (CNN) layers.

Conclusion

Using Intel® AVX-512, Vector Neural Network Instructions (VNNI), and the Intel Distribution of OpenVINO toolkit, you can implement AI character recognition technology to generate game highlights in real time. It is a great way to enhance your gaming experience.