The Hadoop* Authentication Service (HAS), an authentication framework contributed by Intel to Apache Kerby* 2.0, has helped Alibaba* improve the quality and performance of user identity information management in its Cloud E-MapReduce (EMR) and Cloud HBase* services while lowering maintenance costs. The pluggable authentication framework helps developers and their customers integrate existing enterprise identity management systems into Kerberos*, a widely used, secure network authentication protocol.

Figure 1. Authentication and authorization in the Hadoop big data ecosystem

In the Hadoop big data ecosystem, Kerberos is the only built-in, secure, user authentication mode. Most open source data components can enable Kerberos authentication for services and users. However, Kerberos authentication on big data platforms brings along two challenges:

- The Kerberos support provided by the Java* Development Kit (JDK) and Java Runtime Environment* (JRE*) lacks a complete set of encryption and checksum types, and the Generic Security Service Application Program Interface (GSSAPI) and Simple Authentication and Security Layer (SASL) implementations are hidden, making it difficult to change or add functions.

- When used with Hadoop, Kerberos supports no authentication mechanism other than password authentication, making it difficult to connect an existing identity authentication system to the Kerberos authentication flow.

HAS addresses those challenges by providing a complete authentication solution for the Hadoop open source ecosystem. HAS is based on a Java implementation of the Kerberos protocol, and by integrating with existing authentication and authorization systems, HAS supports other authentication modes besides Kerberos on Hadoop/Spark*. In addition, it does not require separate maintenance of the identity information, reducing complexity and risks.

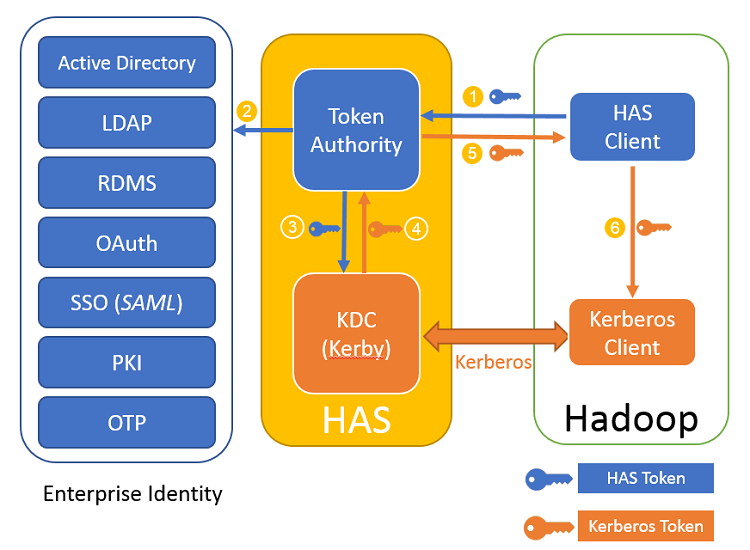

Figure 2. HAS uses the key distribution center (KDC) provided by Apache Kerby (a Java* version of Kerberos) to efficiently implement a new authentication solution for the Hadoop open source big data ecosystem.

HAS offers these key advantages:

- Hadoop services use the original Kerberos authentication mechanism. Counterfeit nodes cannot communicate with nodes inside the cluster because they cannot obtain the key information in advance, which prevents malicious use of the Hadoop cluster.

- Hadoop users can continue to log in using a familiar authentication mode. HAS is compatible with the Massachusetts Institute of Technology* (MIT*) Kerberos protocol, so users can also be authenticated with a password or a keytab file before accessing the corresponding services.

- The HAS plugin mechanism connects to the existing authentication system, and multiple authentication plugins can be implemented based on user requirements.

- Security administrators don't need to synchronize user account information to the Kerberos database, which reduces maintenance costs and the risk of information leakage.

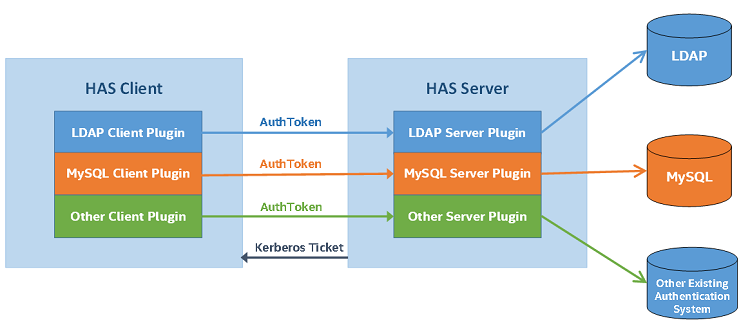

Figure 3. HAS supports Lightweight Directory Access Protocol (LDAP), MySQL*, and other client and server plugins

HAS is compatible with the Kerberos protocol, so all components in the Hadoop ecosystem can use the HAS-provided Kerberos authentication mode.
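
Because the KDC speaks standard Kerberos, a client can in principle log in through the JDK's own JAAS Kerberos module. The sketch below is illustrative rather than taken from HAS: it builds an in-memory JAAS configuration for a keytab-based login. The class name `KeytabJaasConfig`, the principal, and the keytab path are assumptions chosen for the example; `Krb5LoginModule` and its options are part of the standard JDK.

```java
import java.util.HashMap;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
import javax.security.auth.login.Configuration;

/**
 * Illustrative only: an in-memory JAAS configuration for a keytab-based
 * Kerberos login. The class name and constructor arguments are hypothetical.
 */
final class KeytabJaasConfig extends Configuration {
    private final String principal;
    private final String keytabPath;

    KeytabJaasConfig(String principal, String keytabPath) {
        this.principal = principal;
        this.keytabPath = keytabPath;
    }

    @Override
    public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
        // Options understood by the JDK's Krb5LoginModule.
        Map<String, String> options = new HashMap<>();
        options.put("useKeyTab", "true");    // read the key from a keytab file
        options.put("keyTab", keytabPath);
        options.put("principal", principal);
        options.put("storeKey", "true");     // keep the key in the Subject
        options.put("doNotPrompt", "true");  // never fall back to a password prompt

        // The same single entry is returned for every application name.
        return new AppConfigurationEntry[] {
            new AppConfigurationEntry(
                "com.sun.security.auth.module.Krb5LoginModule",
                LoginModuleControlFlag.REQUIRED,
                options)
        };
    }
}
```

To attempt a login, pass the configuration to `new LoginContext("client", null, null, config)` and call `login()`. That call needs a reachable KDC and a valid `krb5.conf`, so it is left out of the sketch.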

HAS provides a series of interfaces and tools to help simplify deployment. It also provides interfaces to help users implement plugins to integrate Kerberos with other user identity management systems.
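
To make the plugin idea concrete, here is a minimal sketch of what a server-side identity plugin could look like. It is not the actual HAS interface: `IdentityPlugin`, `InMemoryIdentityPlugin`, `PluginRegistry`, and the credential keys `user` and `secret` are all hypothetical names, and an in-memory map stands in for an existing identity store such as MySQL or LDAP. The assumed flow is that a plugin validates credentials against the existing system and returns the Kerberos principal for which the KDC would then issue a ticket.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical plugin contract; the real HAS interfaces may differ. */
interface IdentityPlugin {
    String name();

    /** Returns the Kerberos principal for valid credentials, or empty. */
    Optional<String> authenticate(Map<String, String> credentials);
}

/** Stand-in for an existing identity store (for example MySQL or LDAP). */
final class InMemoryIdentityPlugin implements IdentityPlugin {
    private final Map<String, String> secretsByUser;
    private final String realm;

    InMemoryIdentityPlugin(Map<String, String> secretsByUser, String realm) {
        this.secretsByUser = new HashMap<>(secretsByUser);
        this.realm = realm;
    }

    @Override
    public String name() {
        return "in-memory";
    }

    @Override
    public Optional<String> authenticate(Map<String, String> credentials) {
        String user = credentials.get("user");
        String secret = credentials.get("secret");
        if (user == null || secret == null) {
            return Optional.empty();
        }
        String expected = secretsByUser.get(user);
        if (expected == null) {
            return Optional.empty();
        }
        // Plaintext secrets keep the sketch short; a real store would hold
        // salted hashes. MessageDigest.isEqual avoids early-exit comparison.
        boolean ok = MessageDigest.isEqual(
            expected.getBytes(StandardCharsets.UTF_8),
            secret.getBytes(StandardCharsets.UTF_8));
        return ok ? Optional.of(user + "@" + realm) : Optional.empty();
    }
}

/** Looks up a plugin by name and delegates authentication to it. */
final class PluginRegistry {
    private final Map<String, IdentityPlugin> plugins = new HashMap<>();

    void register(IdentityPlugin plugin) {
        plugins.put(plugin.name(), plugin);
    }

    Optional<String> authenticate(String pluginName, Map<String, String> credentials) {
        IdentityPlugin plugin = plugins.get(pluginName);
        return plugin == null ? Optional.empty() : plugin.authenticate(credentials);
    }
}
```

Keeping the contract to a single `authenticate` method means each identity system can be wrapped independently, and user accounts never need to be copied into the Kerberos database.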

At present, HAS supports ZooKeeper*, MySQL*, and LDAP. HAS will be contributed to the Apache Kerby project. According to the community plan, the HAS function will be released in Kerby 2.0.

Get Started

Read the technical white paper, Big Data Security Solution Based on Kerberos, for more details on HAS, and visit the Intel® Data Analytics & AI page for tools and resources to build your end-to-end AI-based data analytics solutions.

Related Content

Apache Kerby, A Kerberos Protocol and key distribution center (KDC) Implementation: Apache Kerby provides a rich, intuitive and interoperable implementation, library, KDC and facilities to integrate public key infrastructure (PKI), OTP and token (OAuth2) in environments such as cloud, Hadoop and mobile.

Accelerate Innovations of Unified Data Analytics and AI at Scale: Intel has developed new open source software technologies that unify data analytics and AI into an integrated workflow.

BigDL Framework: Write deep learning applications as standard Apache Spark programs, which can directly run on top of existing Spark or Hadoop clusters.

Analytics Zoo Toolkit: This unified analytics and AI open source platform seamlessly unites Apache Spark, TensorFlow*, Keras*, BigDL, and other future frameworks into an integrated data pipeline.

Develop Advanced Analytics Solutions with AI at Scale Using Apache Spark and Analytics Zoo: More than 90% of all data in the world was generated in the past few years—each day, the world creates more than 2.5 quintillion bytes of data, and the pace is accelerating.