Introduction

Modern enterprises are embracing the cloud model to take advantage of shared infrastructure in private data centers. This also helps them realize the significant benefits offered by public cloud services for flexibility, total cost of ownership (TCO), and infrastructure scalability.

Customers expect their confidential data to be protected in the cloud as they migrate mission-critical applications to public cloud services. Cloud customers who own the workloads and data have a basic need to protect their data across public and private cloud services, but traditional security controls no longer apply. New solutions must address privacy, regulatory, and left-behind (residual) data requirements. Whether workloads are packaged as virtual machines (VMs) or containers, they have to be protected at rest, in transit, and at runtime, while the data is in use.

Data at rest in local or network-attached storage needs to be protected using strong encryption. This is a universal practice in the cloud and in the enterprise. Similarly, data in transit is commonly encrypted using SSL/TLS/HTTPS protocols. However, when the CPU processes the same data, the data resides in memory and is not cryptographically protected. Memory contains high-value assets such as storage encryption keys, session keys for communication protocols, personally identifiable information, and credentials. So, it is critical that data in memory has protection comparable to that of data at rest in storage devices. It is not enough to encrypt memory content; encrypting content on the memory bus is equally important. The encryption has to be general purpose, flexible, and supported in hardware, with limited impact on applications and workloads and little enabling effort for the operating system or hypervisor.

Intel has introduced Intel® Total Memory Encryption - Multi-Key (Intel® TME-MK), a new memory encryption technology, on 3rd generation Intel® Xeon® Scalable processors (formerly code named Ice Lake). This technology addresses data protection needs by encrypting memory content at runtime using the National Institute of Standards and Technology (NIST) AES-XTS [1] algorithm. This article describes the design goals, provides a technical overview of the technology, and then reviews a performance analysis based on an implementation with Microsoft Azure*.

Intel introduced Intel® Total Memory Encryption (Intel® TME) and Intel TME-MK [2] to address runtime data protection needs for all platform memory by encrypting memory contents while they are in use.

Design Goals and Objectives

- Build on the security controls that are already implemented in data centers: hardware root of trust, attestation, measured boot, secure boot, and more [3, 4].

- Support for DRAM, and in the future, extensible to non-volatile RAM (NVRAM).

- Support for CPU-generated encryption keys or tenant-provided encryption keys, giving full flexibility to customers.

- Trust privileged code, like the hypervisor, that has been hardened from a security perspective. Give the hypervisor flexibility in how it uses memory encryption keys for VM encryption or container encryption.

- No modifications are made to guest VMs. This provides the ability to lift and shift customer workloads to use Intel TME-MK seamlessly. No application refactoring is needed either.

- Bypass encryption for certain VMs, containers, or workloads if needed by cloud service providers (CSP) or customers.

- Minimal performance impact to applications and workloads.

Technical Overview

Intel TME encrypts the entire physical memory of a system with a single encryption key. It addresses concerns with cold boot and physical attacks on the memory subsystem. This capability is enabled in the early stages of the BIOS boot process. Once Intel TME is configured and locked, it encrypts all the data on the external memory buses of a system-on-a-chip (SOC) using the NIST-standard AES-XTS algorithm with 128-bit keys. The encryption key used for Intel TME is generated by a hardware random number generator implemented in the Intel® SOC. The key is not accessible to software or through external interfaces to the Intel SOC. Intel TME does not require any operating system or system-software enabling.

Intel TME-MK technology is built on top of Intel TME. It enables the use of multiple encryption keys, allowing selection of one encryption key per memory page using the processor page tables. Encryption keys are programmed into each memory controller. The same set of keys is available to all entities on the system with access to that memory (all cores, direct memory access [DMA] engines, and more). Intel TME-MK inherits many of the mitigations against hardware attacks from Intel TME and adds further mitigations of its own. Like Intel TME, Intel TME-MK does not protect against vulnerable or malicious operating systems or virtual machine managers (VMMs).

Intel TME and Intel TME-MK use the AES encryption algorithm in XTS mode. This mode, usually used for block-based storage devices, takes the physical address of the data into account when encrypting each block. This ensures that the effective key is different for each block of memory. Moving encrypted content to a different physical address results in garbage data on read, mitigating block-relocation attacks. This property is the reason many of the attacks discussed below require control of a shared physical page to be handed from the victim to the attacker.
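The effect of binding ciphertext to a physical address can be illustrated with a small toy model. The sketch below is not AES-XTS: it XORs each block with a pad derived from SHA-256 over the key and the address, purely to show why content decrypted at a different address reads back as garbage. The function names, block size, and addresses are all assumptions made for illustration.

```python
import hashlib

BLOCK_SIZE = 16  # bytes per block in this toy model (assumption)


def _address_pad(key: bytes, phys_addr: int) -> bytes:
    """Derive a pad that depends on both the key and the block's address."""
    digest = hashlib.sha256(key + phys_addr.to_bytes(8, "little")).digest()
    return digest[:BLOCK_SIZE]


def toy_encrypt(key: bytes, phys_addr: int, block: bytes) -> bytes:
    """Toy address-tweaked transform (a stand-in for XTS, NOT real AES-XTS)."""
    if len(block) != BLOCK_SIZE:
        raise ValueError("block must be exactly BLOCK_SIZE bytes")
    pad = _address_pad(key, phys_addr)
    return bytes(b ^ p for b, p in zip(block, pad))


# XOR with the same pad is its own inverse, so decryption reuses the function.
toy_decrypt = toy_encrypt

key = bytes(range(16))              # hypothetical key
plaintext = b"secret-page-data"     # exactly 16 bytes

ciphertext = toy_encrypt(key, 0x1000, plaintext)

# Reading the block back at its original address recovers the data.
read_in_place = toy_decrypt(key, 0x1000, ciphertext)

# Reading the same ciphertext after it was moved to another address does not.
read_after_move = toy_decrypt(key, 0x2000, ciphertext)
```

In this model, `read_in_place` equals the original plaintext while `read_after_move` does not, which mirrors the block-relocation behavior described above. A real XTS implementation is a tweaked block cipher rather than an XOR pad, so this sketch says nothing about the cryptographic strength of the actual hardware.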

Intel TME-MK provides the following mitigations against familiar software attacks [5]. These mitigations are not possible with Intel TME alone.

- Kernel Mapping Attacks: Information disclosures that use the kernel-direct map are mitigated against disclosing user data.

- Freed Data Leak Attacks: Removing an encryption key from the hardware mitigates future disclosures of user information.

The following attacks depend on specialized hardware, such as an evil DIMM or a dual data-rate (DDR) interposer:

- Cross-Domain Replay Attack: Data is captured from one domain (guest) and later replayed to another.

- Cross-Domain Capture and Delayed Compare Attack: Data is captured and later analyzed to discover confidential information.

- Key Wear-out Attack: Data is captured and analyzed to weaken the AES encryption.

The Intel TME-MK technology maintains a key table (internal to the CPU and invisible to the software) that stores key and encryption information that's associated with each KeyID. Each KeyID may be configured for:

- Encryption using a software-specified key

- Encryption using a hardware-generated key

- No encryption (memory is plain text)

- Encryption using a default platform TME Key
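The four per-KeyID options above can be pictured as a small lookup table. The following is a minimal software model, not the hardware interface: the class and method names are hypothetical, `os.urandom` stands in for the hardware random number generator, and the 16-byte key length is an assumption. The real key table is internal to the CPU and cannot be read by software, so the `lookup` method exists only to make the model inspectable.

```python
import os
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional

KEY_BYTES = 16  # assumed key length for this sketch


class KeyMode(Enum):
    SOFTWARE_KEY = "encrypt with a software-specified key"
    HARDWARE_KEY = "encrypt with a hardware-generated key"
    NO_ENCRYPTION = "no encryption (plain text)"
    PLATFORM_TME_KEY = "encrypt with the default platform TME key"


@dataclass(frozen=True)
class KeyTableEntry:
    mode: KeyMode
    key: Optional[bytes]  # None when the KeyID bypasses encryption


class ToyKeyTable:
    """Hypothetical model of a KeyID-indexed key table."""

    def __init__(self, num_keyids: int) -> None:
        self._num_keyids = num_keyids
        # os.urandom stands in for the hardware random number generator.
        self._platform_key = os.urandom(KEY_BYTES)
        # In this model, KeyID 0 always maps to the platform TME key.
        self._entries: Dict[int, KeyTableEntry] = {
            0: KeyTableEntry(KeyMode.PLATFORM_TME_KEY, self._platform_key)
        }

    def program(self, keyid: int, mode: KeyMode,
                software_key: Optional[bytes] = None) -> None:
        """Configure one KeyID with one of the four modes."""
        if not 1 <= keyid < self._num_keyids:
            raise ValueError("KeyID out of range (KeyID 0 is fixed in this model)")
        if mode is KeyMode.SOFTWARE_KEY:
            if software_key is None or len(software_key) != KEY_BYTES:
                raise ValueError("a software key of KEY_BYTES bytes is required")
            key: Optional[bytes] = software_key
        elif mode is KeyMode.HARDWARE_KEY:
            key = os.urandom(KEY_BYTES)
        elif mode is KeyMode.NO_ENCRYPTION:
            key = None
        else:  # KeyMode.PLATFORM_TME_KEY
            key = self._platform_key
        self._entries[keyid] = KeyTableEntry(mode, key)

    def lookup(self, keyid: int) -> KeyTableEntry:
        """Return the entry for a KeyID (model-only; not possible on hardware)."""
        return self._entries[keyid]


table = ToyKeyTable(num_keyids=4)
table.program(1, KeyMode.SOFTWARE_KEY, software_key=bytes(KEY_BYTES))
table.program(2, KeyMode.HARDWARE_KEY)
table.program(3, KeyMode.NO_ENCRYPTION)
```

Here a tenant-provided key would correspond to `SOFTWARE_KEY`, and a workload that opts out of encryption would be given a KeyID configured as `NO_ENCRYPTION`.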

The operating system and hypervisor can assign the proper KeyID in the upper physical address bits contained in the paging-structure entries. This gives the hypervisor ultimate control over these domains via the Extended Page Table (EPT) paging structures that control the physical address space. For a bare-metal operating system, the IA-32 page tables are used to determine the proper key domain. For direct physical mappings (such as VMX pointers, CR3 with no EPT), the behavior is the same. The software can choose the proper KeyID by using the upper physical address bits in these addresses.
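As a rough illustration of how a KeyID can share the physical address field, the sketch below packs a KeyID into the upper bits of an address and splits it back out. The field widths (`PHYS_ADDR_BITS`, `KEYID_BITS`) and the helper names are assumptions chosen for the example; the actual widths are platform-specific and are reported by the hardware.

```python
PHYS_ADDR_BITS = 46  # assumed total width of the physical address field
KEYID_BITS = 6       # assumed number of upper bits repurposed for the KeyID

KEYID_SHIFT = PHYS_ADDR_BITS - KEYID_BITS
KEYID_MASK = ((1 << KEYID_BITS) - 1) << KEYID_SHIFT
ADDR_MASK = (1 << KEYID_SHIFT) - 1


def tag_address(phys_addr: int, keyid: int) -> int:
    """Place a KeyID in the upper bits of a physical address."""
    if not 0 <= keyid < (1 << KEYID_BITS):
        raise ValueError("KeyID does not fit in the KeyID field")
    if phys_addr < 0 or phys_addr & ~ADDR_MASK:
        raise ValueError("address overlaps the KeyID field")
    return (keyid << KEYID_SHIFT) | phys_addr


def split_address(tagged: int) -> tuple:
    """Recover (keyid, phys_addr) from a tagged address."""
    return (tagged & KEYID_MASK) >> KEYID_SHIFT, tagged & ADDR_MASK


# A page at 0x12345000 tagged with KeyID 2.
tagged = tag_address(0x1234_5000, keyid=2)
keyid, addr = split_address(tagged)
```

Because the KeyID occupies address bits, each bit given to KeyIDs halves the addressable physical memory in this model, which is the trade-off a platform makes when choosing how many keys to expose.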

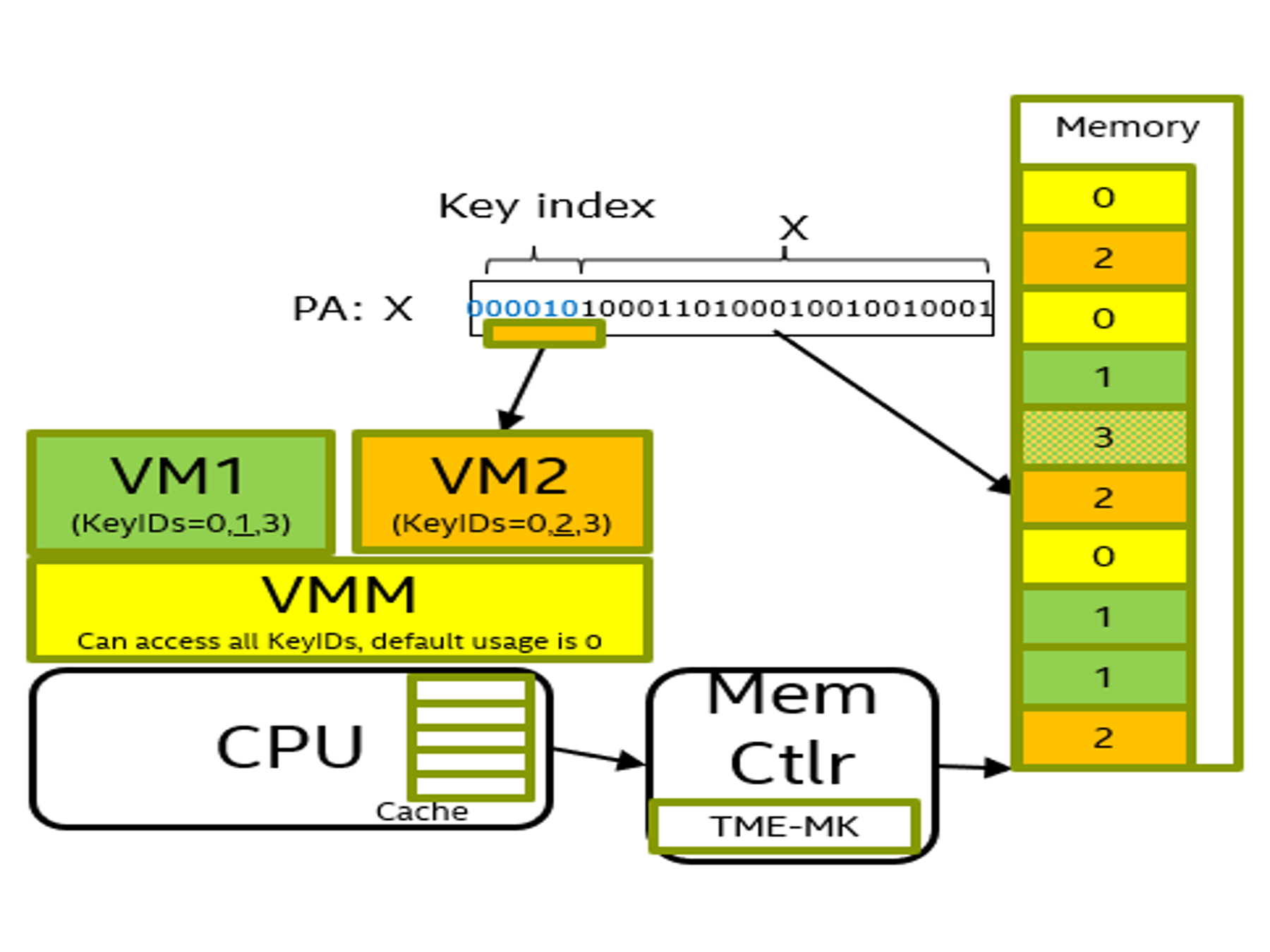

Page Granular Encryption

Intel TME-MK supports page-granular encryption. As an example, Figure 1 shows one hypervisor (VMM) and two VMs. By default, the hypervisor uses KeyID 0 (same as Intel TME). VM1 uses KeyID 1 for its own private pages, and VM2 uses KeyID 2 for its own private pages. In addition, VM1 can always use KeyID 0 for any page and opts to use KeyID 3 for memory shared between itself and VM2. The KeyID is included in the upper bits of the physical address field (Figure 1 shows KeyID 2). The remaining bits of the physical address field are used to address memory. Figure 1 shows one possible page assignment along with the KeyID. In this case, the hypervisor has full freedom to use any KeyID with any pages for itself or any of its guest VMs.

Figure 1

Intel TME-MK is enabled in Windows* with Hyper-V* and is deployed in Azure confidential computing as part of the new Intel®-based DCsv3-series confidential VMs. The rest of this article provides the performance analysis for Intel TME-MK based on an Azure implementation [6, 7].

Results & Discussion

When customers deploy a security solution in their environments, a key concern is the performance penalty imposed by the security feature. The performance impact of Intel TME-MK was studied extensively under different scenarios. It was less than or equal to 2.2% in all cases, well within the run-to-run variation observed for these workloads. To discuss the performance impact of Intel TME-MK, let's review the:

- Configuration of the test bed and the workloads

- Performance impact of Intel TME-MK on a single guest VM

- Performance impact in a multi-VM scenario where the platform is evenly subscribed with evenly sized multiple VMs

Configuration

The system being tested is a server from Intel that's based on a 3rd generation Intel Xeon Scalable processor (formerly code named Ice Lake) with Hyper-V version 22526. The workloads are run in a generation 2 Ubuntu* 20.04 LTS Hyper-V Linux* VM created on the server.

To consider the Intel TME-MK impact on large and small guest VMs, a DC48s_v3 [8] VM configuration was used for Bert (an AI workload) and a DC16s_v3 VM configuration was used for the other workloads. Though the DC16s_v3 VM is a relatively large VM, it is limited to one physical non-uniform memory access (NUMA) node of the platform. The DC48s_v3 VM, in contrast, is large enough to span two physical NUMA nodes of the platform and has an affinity to the specific CPUs corresponding to the respective NUMA nodes.

Workloads considered for analysis include Fio, NTttcp, LINPACK, Redis (Memtier), Nginx (s_time), and Bert. This particular workload list was selected to provide:

- A mix of CPU, memory, network, and disk-intensive workloads

- Memory bandwidth-intensive and latency-sensitive workloads

- A mix of microbenchmarks (Fio and NTttcp) and workloads that represent popular customer use cases, such as in-memory database servers (Redis), AI workloads (Bert), web servers (Nginx), and HPC workloads (LINPACK)

- TLS request handling through Nginx, which demonstrates the impact of Intel TME-MK memory encryption on a workload that performs cryptographic operations on the CPU

These are commonly used workloads and microbenchmarks for performance assessment purposes in the industry.

Performance Impact on a Single Guest

To assess the Intel TME-MK performance impact on a single guest, every workload was run twice inside the Linux guest so that the run-to-run variation of the workload could be quantified alongside the Intel TME-MK performance impact.

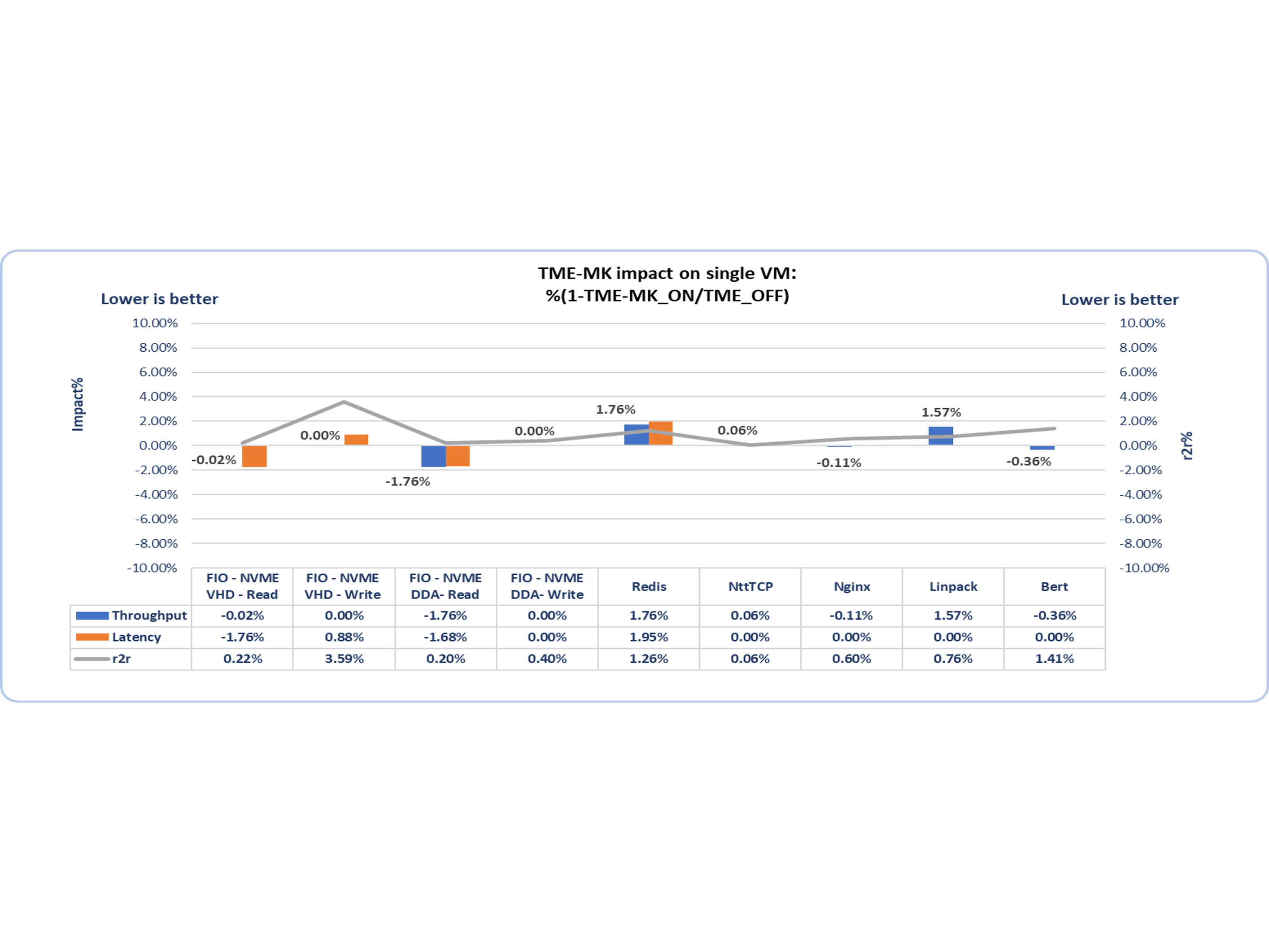

For every workload, throughput and, where applicable, latency were measured. As shown in Figure 2, two scenarios were compared:

- One where the memory of the guest was encrypted with Intel TME-MK (TME-MK_ON)

- The other with platform memory encryption disabled, implying the VM memory was not encrypted (TME_OFF)

The workload performance impact of Intel TME-MK for the workloads in Figure 2 is low: less than 2%. Figure 2 shows the performance impact from Intel TME-MK and the run-to-run variation observed for each workload.

Figure 2
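The comparison between the encryption impact and the run-to-run variation can be sketched as simple arithmetic. The article does not state the exact formulas used, so the definitions below (impact as the relative drop in mean throughput, variation as the spread of two runs relative to their mean) are assumptions, and the throughput numbers are hypothetical placeholders, not measured results.

```python
from statistics import mean
from typing import Sequence


def percent_impact(baseline_runs: Sequence[float],
                   encrypted_runs: Sequence[float]) -> float:
    """Relative drop in mean throughput, in percent (assumed definition)."""
    base = mean(baseline_runs)
    return (base - mean(encrypted_runs)) / base * 100.0


def run_to_run_variation(runs: Sequence[float]) -> float:
    """Spread between runs relative to their mean, in percent (assumed definition)."""
    return (max(runs) - min(runs)) / mean(runs) * 100.0


# Hypothetical throughput values (operations per second) for two runs each.
tme_off_runs = (1000.0, 990.0)
tme_mk_on_runs = (985.0, 980.0)

impact = percent_impact(tme_off_runs, tme_mk_on_runs)
variation = run_to_run_variation(tme_off_runs)

# An impact is hard to distinguish from noise when it is of the same
# order as the variation seen between repeated runs of the baseline.
print(f"impact: {impact:.2f}%  run-to-run variation: {variation:.2f}%")
```

With only two runs per configuration, as in the test described above, the variation estimate is coarse, so small differences between the two percentages should be read with caution.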

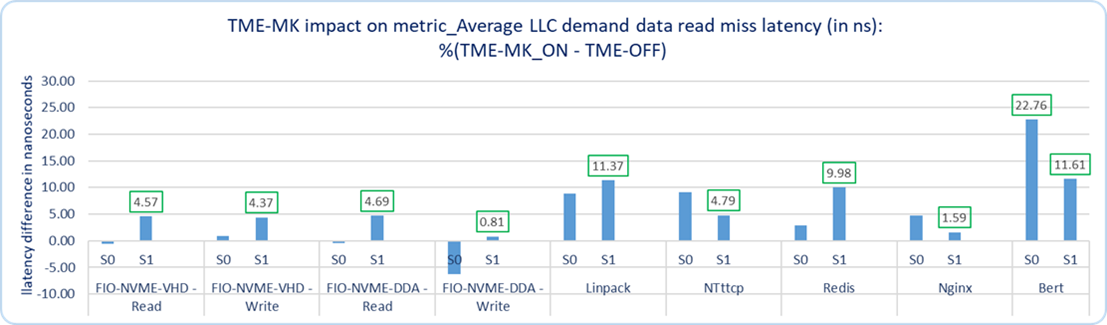

Besides workload throughput, the impact of Intel TME-MK on CPU usage, memory bandwidth, and memory access latency on the platform was also studied. As expected, CPU usage and memory bandwidth were not affected beyond run-to-run variance. Any impact from Intel TME-MK comes from:

- Encryption of data when written to memory

- Decryption of data when read from memory, which translates to a memory access delay observed at the memory controller of the CPU

The last-level cache data read miss latency was measured. As expected, the impact due to Intel TME-MK was observed to be less than or equal to 11.6 ns (shown in Figure 3). Since the DC16s_v3 VM runs only on the second socket of the platform, the graph only calls out memory access latency overhead on the second socket (S1) for all cases except Bert. Bert shows a 22 ns overhead on the first socket (S0), which is within the 22.4 ns run-to-run variation in memory access latency observed for Bert.

Figure 3

Intel TME-MK Impact on a Multi-VM Scenario

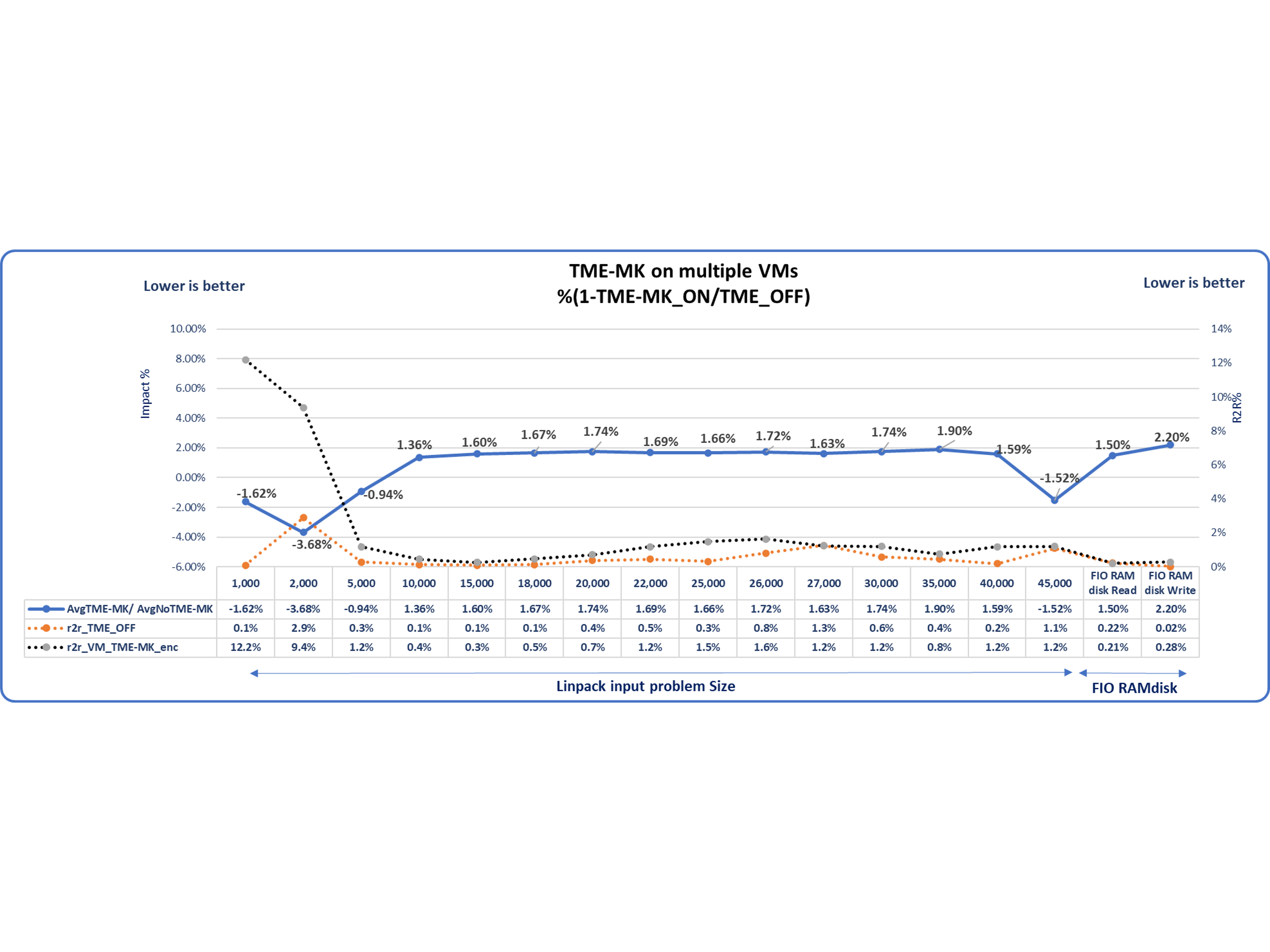

For the multi-tenant VM scenario, the platform was evenly subscribed with 18 VMs of 4 vCPUs and 16 GB memory each on the 72-core server under test, so that 9 VMs ran on socket 0 and 9 VMs ran on socket 1. To assess the impact on a multi-VM scenario, three different memory-intensive use cases were considered. All experiments have a homogeneous setup for ease of comparison: all VMs in a given experiment run the same workload. To reduce run-to-run (R2R) variation and improve vCPU-to-logical-processor affinity, a CPU group [9] was created for every VM.

- Ram Drive Read: Four ramdisks were created in the guest, and one Fio process per ramdisk was used to issue 4 KB random-read I/O operations.

- Ram Drive Write: As in the previous case, Fio was used to issue 4 KB random-write I/O operations to each ramdisk.

- LINPACK: A shared-memory version of LINPACK was run (as previously mentioned) so that the CPU was saturated for all four virtual processors of the guest, for all the guests.

The observed performance impact is less than or equal to 2.2%, as shown in Figure 4.

Figure 4

Conclusion

As more customers migrate to public or hybrid cloud environments, security becomes a growing concern for their mission-critical applications and confidential data. While there are several ways of securing data at rest in storage or data in motion over the network, Intel TME-MK provides a way to encrypt the entire system memory at runtime and is deployed by key customers like Azure. This technology provides great flexibility through multiple encryption keys and is easy to enable (no changes to guest VMs). It also addresses a key concern for customers: the performance impact of security. With a performance impact of 0% to 2.2%, Intel TME-MK is a viable first step toward securing runtime memory for customers, helping them migrate to the public cloud with minimal impact.

References

- NIST for AES-XTS

- Intel TME-MK Specification

- Intel® Trusted Execution Technology

- LWN.net: Enable Intel TME-MK

- Intel Powers New Azure Confidential Computing VMs

- Key Foundations for Protecting Your Data with Azure Confidential Computing

- VM type: DCv3 and Dv5 VM specifications

- CPU Groups

- RFC 5246 - The Transport Layer Security (TLS) Protocol